Have you ever thought that adding more features (or columns) to your dataset would make your machine learning model smarter? It can, but only up to a point. Past that, it might actually make your model worse. This strange phenomenon is what data scientists call “the curse of dimensionality.”

Let’s break this down in a simple, relatable way, and talk about how techniques like PCA and t-SNE help us deal with it.

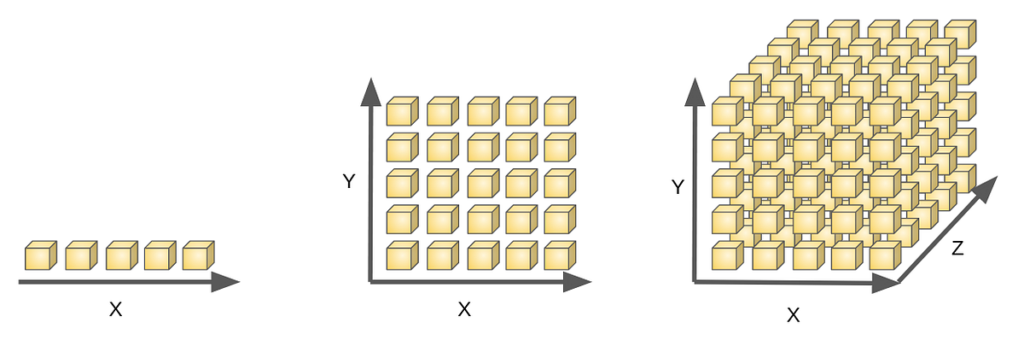

Imagine searching for your friend in a park. In 2D (length and width), it’s not too hard. Now imagine finding them in a multi-story building (3D). Still doable.

Now imagine you’re searching in a world with 100 dimensions. Suddenly, every point is far away from every other point. Weird, right?
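You can check this “everything drifts apart” effect with a quick experiment. The sketch below is a toy setup of our own (NumPy, 200 points drawn uniformly from a unit hypercube); it measures the average distance between pairs of points as the number of dimensions grows. For uniform data like this, the average grows roughly like sqrt(d/6), where d is the number of dimensions.

```python
import numpy as np

def mean_pairwise_distance(n_points: int, dim: int, seed: int = 0) -> float:
    """Average Euclidean distance between random points in the unit hypercube [0, 1]^dim."""
    rng = np.random.default_rng(seed)
    points = rng.random((n_points, dim))
    # All pairwise differences via broadcasting: shape (n_points, n_points, dim)
    diffs = points[:, None, :] - points[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=-1))
    # Upper triangle only: each pair counted once, self-distances (zeros) excluded
    upper = np.triu_indices(n_points, k=1)
    return float(dists[upper].mean())

for dim in (2, 3, 10, 100):
    print(f"{dim:>3} dimensions: mean distance = {mean_pairwise_distance(200, dim):.2f}")
```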

This is what happens with high-dimensional data:

- The volume of the space grows exponentially with the number of dimensions, so the same number of data points becomes very sparse.

- Distances between points start to look alike, so algorithms can’t tell which points are truly close or far.

- As a result, machine learning models become less accurate, slow down, or start memorizing noise (overfitting).
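The second bullet, where distances stop being informative, is often called distance concentration. Here’s a small NumPy sketch (again with toy uniform data; `relative_contrast` is just a name we made up) that compares the nearest and farthest neighbor of one random query point. When the gap between them shrinks relative to the nearest distance, “close” and “far” stop meaning much.

```python
import numpy as np

def relative_contrast(n_points: int, dim: int, seed: int = 0) -> float:
    """Return (farthest - nearest) / nearest distance from one random query
    point to n_points random points, all drawn uniformly from [0, 1]^dim."""
    rng = np.random.default_rng(seed)
    data = rng.random((n_points, dim))
    query = rng.random(dim)
    dists = np.linalg.norm(data - query, axis=1)
    return float((dists.max() - dists.min()) / dists.min())

for dim in (2, 10, 100, 1000):
    print(f"{dim:>4} dimensions: relative contrast = {relative_contrast(1000, dim):.2f}")
```

In 2D the farthest point is many times farther away than the nearest one; in 1,000 dimensions the two end up at almost the same distance, which is exactly what trips up distance-based methods like k-nearest neighbors.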

The curse of dimensionality affects your models in several ways:

- Overfitting: The model learns the noise, not the pattern. With many features and relatively few samples, it can fit random quirks of the training data.

- Slow training: More features = more calculations.

- Poor performance: Models may do well on training data but struggle on new data.
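To see overfitting from extra features in action, here’s a toy regression sketch in NumPy. The setup is our own assumption: only the first feature actually drives the target, every other column is pure random noise, and there are just 50 training samples. Ordinary least squares (via `np.linalg.lstsq`) is fit with a growing number of noise features.

```python
import numpy as np

def make_data(rng, n_samples, n_noise_features):
    # Column 0 carries the signal (y = 3 * x0 + noise); the other columns are pure noise
    X = rng.normal(size=(n_samples, 1 + n_noise_features))
    y = 3.0 * X[:, 0] + rng.normal(size=n_samples)
    return X, y

def train_test_mse(n_noise_features, n_train=50, n_test=1000, seed=0):
    rng = np.random.default_rng(seed)
    X_train, y_train = make_data(rng, n_train, n_noise_features)
    X_test, y_test = make_data(rng, n_test, n_noise_features)
    # Least-squares fit; when features outnumber samples, lstsq returns the minimum-norm solution
    w, *_ = np.linalg.lstsq(X_train, y_train, rcond=None)
    train_mse = float(np.mean((X_train @ w - y_train) ** 2))
    test_mse = float(np.mean((X_test @ w - y_test) ** 2))
    return train_mse, test_mse

for k in (0, 10, 40, 100):
    train_mse, test_mse = train_test_mse(k)
    print(f"{k:>3} noise features: train MSE = {train_mse:.2f}, test MSE = {test_mse:.2f}")
```

As noise features pile up, training error drops toward zero (with 101 features and only 50 samples, the model can fit the training set exactly), while test error rises above the noise level of 1.0 that the true model would achieve.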

So what’s the fix? We shrink the space!

To keep things manageable, we reduce the number of dimensions. But we want to do it smartly, without losing important information.

Let’s explore two popular techniques.

Think of PCA (Principal Component Analysis) like taking a picture of a 3D object from the perfect angle. It flattens the object into 2D, but in a way that still shows you the most important parts.

PCA finds new axes (called “principal components”) that explain as much of the variation in the data as possible. Each axis is a linear combination of the original features, and the axes are ranked by how much variance they capture, so you can keep only the top few and discard the rest.
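Here’s what that looks like under the hood: a minimal PCA sketch in NumPy using the singular value decomposition (SVD) of the centered data, which is one standard way to compute principal components. The `pca` function and the toy 3D dataset are our own illustration, not a library API; in practice you’d likely reach for a library implementation such as scikit-learn’s `PCA`.

```python
import numpy as np

def pca(X: np.ndarray, n_components: int):
    """Project X (n_samples x n_features) onto its top principal components.

    Returns the projected data, the component directions (one per row), and
    the fraction of total variance explained by each kept component.
    """
    # Center each feature: principal axes are defined around the mean
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal axes, ordered by decreasing singular value
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    variance = S ** 2 / (X.shape[0] - 1)
    explained_ratio = variance / variance.sum()
    components = Vt[:n_components]
    X_reduced = X_centered @ components.T
    return X_reduced, components, explained_ratio[:n_components]

# Toy data: 500 points spread mostly along two directions, with a little noise in the third
rng = np.random.default_rng(0)
latent = rng.normal(size=(500, 2)) * [5.0, 2.0]
X = np.column_stack([latent[:, 0], latent[:, 1], 0.1 * rng.normal(size=500)])

X_2d, comps, ratio = pca(X, n_components=2)
print(X_2d.shape)  # (500, 2)
print(ratio.round(3))  # nearly all the variance sits in the first two components
```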

When to use PCA:

✅ When the structure in your data is mostly linear

✅ When you want a fast, simple reduction

✅ As a preprocessing step before training a model
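When PCA is a preprocessing step, one detail matters: learn the axes from the training data only, then apply that same projection to test data, so nothing from the test set leaks into training. The class below is a hypothetical minimal helper (not a library class) that also picks the number of components automatically, keeping enough to explain a target share of the variance (95% here, an arbitrary but common choice).

```python
import numpy as np

class PCAPreprocessor:
    """Hypothetical minimal PCA step: fit on training data, reuse the same axes on new data."""

    def __init__(self, variance_to_keep: float = 0.95):
        self.variance_to_keep = variance_to_keep

    def fit(self, X_train: np.ndarray) -> "PCAPreprocessor":
        self.mean_ = X_train.mean(axis=0)
        _, S, Vt = np.linalg.svd(X_train - self.mean_, full_matrices=False)
        ratio = S ** 2 / np.sum(S ** 2)  # explained-variance ratio per component
        # Smallest number of components whose cumulative ratio reaches the target
        k = int(np.searchsorted(np.cumsum(ratio), self.variance_to_keep)) + 1
        self.components_ = Vt[: min(k, len(ratio))]
        return self

    def transform(self, X: np.ndarray) -> np.ndarray:
        # Always center with the training mean and project onto the training axes
        return (X - self.mean_) @ self.components_.T

# Toy data (our assumption): 20 observed features driven by 3 hidden factors plus small noise
rng = np.random.default_rng(0)
latent = rng.normal(size=(600, 3))
X = latent @ rng.normal(size=(3, 20)) + 0.05 * rng.normal(size=(600, 20))
X_train, X_test = X[:500], X[500:]

prep = PCAPreprocessor(variance_to_keep=0.95).fit(X_train)
Z_train, Z_test = prep.transform(X_train), prep.transform(X_test)
print(Z_train.shape, Z_test.shape)  # 20 columns shrink to a handful
```

The reduced `Z_train` can then be fed to any model. scikit-learn users would typically get the same effect by putting `PCA(n_components=0.95)` inside a `Pipeline`.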

t-SNE (short for t-distributed Stochastic Neighbor Embedding, pronounced “tee-snee”) is like a magic mapmaker. It takes your super complex, high-dimensional data and draws it in 2D or 3D. But it does so by preserving local neighborhoods, so points that were close in high-dimensional space still stay close in the picture.
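For the curious, here’s a compact from-scratch sketch of the idea in NumPy. It follows the structure of exact t-SNE: Gaussian similarities in the original space (each point’s width tuned to a target perplexity, roughly its effective number of neighbors), heavier-tailed Student-t similarities in the 2D map, and gradient descent that nudges the map until the two sets of similarities agree by minimizing their KL divergence. The optimizer settings (early exaggeration of 12, momentum, adaptive gains, the learning-rate rule) are our assumptions, modeled loosely on common implementations; for real datasets, use a tested library version such as scikit-learn’s `sklearn.manifold.TSNE`.

```python
import numpy as np

def high_dim_affinities(X, perplexity=30.0, tol=1e-5, max_steps=50):
    """Symmetric Gaussian affinities P, with each point's bandwidth tuned by binary search."""
    n = X.shape[0]
    sq = np.sum(X ** 2, axis=1)
    D = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)  # squared distances
    target_entropy = np.log(perplexity)  # perplexity = exp(entropy in nats)
    P = np.zeros((n, n))
    for i in range(n):
        d_i = np.delete(D[i], i)  # squared distances to every other point
        beta, lo, hi = 1.0, 0.0, np.inf  # beta = 1 / (2 * sigma_i^2)
        for _ in range(max_steps):
            p_i = np.exp(-(d_i - d_i.min()) * beta)  # shift by the min for numerical stability
            p_i /= p_i.sum()
            entropy = -np.sum(p_i * np.log(np.maximum(p_i, 1e-12)))
            if abs(entropy - target_entropy) < tol:
                break
            if entropy > target_entropy:  # too spread out: narrow the Gaussian
                lo = beta
                beta = beta * 2.0 if np.isinf(hi) else (beta + hi) / 2.0
            else:  # too peaked: widen the Gaussian
                hi = beta
                beta = (beta + lo) / 2.0
        P[i, np.arange(n) != i] = p_i
    return (P + P.T) / (2.0 * n)  # symmetrize; all entries sum to 1

def tsne(X, n_components=2, perplexity=30.0, n_iter=1000, seed=0):
    n = X.shape[0]
    P = np.maximum(high_dim_affinities(X, perplexity), 1e-12)
    exaggeration, exaggeration_iters = 12.0, 250
    learning_rate = max(n / exaggeration / 4.0, 50.0)
    rng = np.random.default_rng(seed)
    Y = 1e-4 * rng.standard_normal((n, n_components))  # tiny random starting layout
    update = np.zeros_like(Y)
    gains = np.ones_like(Y)
    for it in range(n_iter):
        early = it < exaggeration_iters
        # Student-t (1 degree of freedom) similarities in the low-dimensional map
        sq = np.sum(Y ** 2, axis=1)
        num = 1.0 / (1.0 + np.maximum(sq[:, None] + sq[None, :] - 2.0 * Y @ Y.T, 0.0))
        np.fill_diagonal(num, 0.0)
        Q = np.maximum(num / num.sum(), 1e-12)
        # Gradient of KL(P || Q): 4 * sum_j (p_ij - q_ij) * (y_i - y_j) / (1 + ||y_i - y_j||^2)
        W = ((exaggeration if early else 1.0) * P - Q) * num
        grad = 4.0 * (np.diag(W.sum(axis=1)) - W) @ Y
        # Adaptive per-coordinate gains plus momentum
        gains = np.maximum(np.where(update * grad < 0.0, gains + 0.2, gains * 0.8), 0.01)
        update = (0.5 if early else 0.8) * update - learning_rate * gains * grad
        Y = Y + update
    return Y

# Toy data: two well-separated Gaussian blobs in 10 dimensions
rng = np.random.default_rng(42)
X = np.vstack([rng.normal(size=(40, 10)), rng.normal(size=(40, 10)) + 8.0])
labels = np.repeat([0, 1], 40)
Y = tsne(X, perplexity=15.0)

# How often is each point's nearest neighbor in the 2D map from the same blob?
d2 = np.sum((Y[:, None, :] - Y[None, :, :]) ** 2, axis=-1)
np.fill_diagonal(d2, np.inf)
same_blob = float(np.mean(labels[d2.argmin(axis=1)] == labels))
print(Y.shape, same_blob)
```

Notice that this sketch only returns coordinates for the points it was given; there’s no learned mapping to apply to new data, which is one reason t-SNE is mostly an exploration tool.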

When to use t-SNE:

✅ For visualizing clusters in data

✅ When your data has nonlinear patterns

❌ Not great as a preprocessing step before a model: it’s better for exploration, and standard t-SNE has no built-in way to place new data points into an existing map

The curse of dimensionality is a real challenge in modern data science. It reminds us that more isn’t always better when it comes to data. Luckily, tools like PCA and t-SNE help us simplify our data without losing what matters most.

So the next time you’re wrangling a massive dataset, remember: shrinking the space might actually unlock the insights you’re looking for.