Episode 13: Gradients and How Systems Want

Desire as Direction: What Gradients Actually Are


What moves a system?

What tells it:

“Go there, not here.”

“This change is better.”

“This error should die.”

The answer is the gradient.

A gradient is not only a slope.

It’s the mathematical encoding of desire: a force that pulls the system toward improvement.

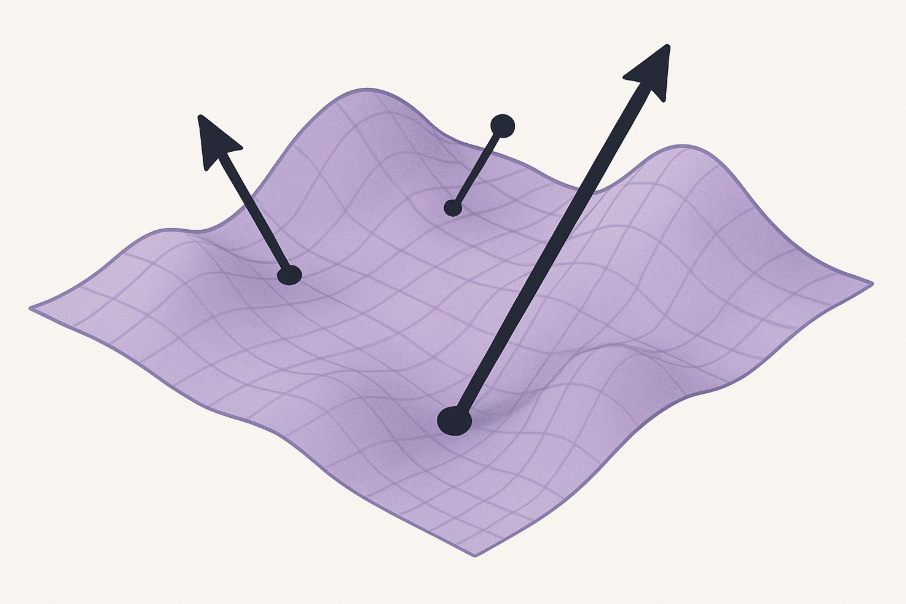

In ML, optimization, and learning, gradients are the invisible arrows of change.

Let’s make them visible.

A gradient is a vector of partial derivatives:

∇f(x) = [∂f/∂x₁, ∂f/∂x₂, ..., ∂f/∂xₙ]
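To make the definition concrete, here is a minimal Python sketch built on a hypothetical two-variable function, f(x₁, x₂) = x₁² + 3x₂², chosen only for illustration. It compares hand-derived partial derivatives with a central-difference estimate that nudges one coordinate at a time while holding the others fixed, which is exactly what "partial" means.

```python
def f(x):
    # Hypothetical example function (not from the original): f(x1, x2) = x1**2 + 3*x2**2
    return x[0] ** 2 + 3 * x[1] ** 2

def analytic_grad(x):
    # Partial derivatives worked out by hand: df/dx1 = 2*x1, df/dx2 = 6*x2
    return [2 * x[0], 6 * x[1]]

def numerical_grad(func, x, h=1e-5):
    # Central differences: move one coordinate up and down by h, keep the rest fixed
    grad = []
    for i in range(len(x)):
        plus = list(x)
        minus = list(x)
        plus[i] += h
        minus[i] -= h
        grad.append((func(plus) - func(minus)) / (2 * h))
    return grad

point = [1.0, 2.0]
print(analytic_grad(point))      # [2.0, 12.0]
print(numerical_grad(f, point))  # close to [2.0, 12.0]
```

Each entry of the result is one partial derivative, so the list as a whole is the gradient vector at that point.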

It points in the direction of steepest ascent of the function f.
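One way to check the steepest-ascent claim numerically, sketched with a hypothetical f(x₁, x₂) = x₁² + 3x₂² (an illustrative assumption): the rate of change of f along a unit direction u is the dot product ∇f(x)·u, so scanning many directions around a circle should find the largest slope almost exactly along ∇f(x), with a size close to ‖∇f(x)‖.

```python
import math

def grad_f(x):
    # Gradient of the hypothetical f(x1, x2) = x1**2 + 3*x2**2
    return [2 * x[0], 6 * x[1]]

def directional_derivative(g, u):
    # Rate of change of f along the unit vector u is the dot product g . u
    return g[0] * u[0] + g[1] * u[1]

point = [1.0, 2.0]
g = grad_f(point)
norm = math.hypot(g[0], g[1])
g_unit = [g[0] / norm, g[1] / norm]

# Scan 3600 unit directions (0.1 degree apart) and keep the one with the steepest slope
directions = [
    (math.cos(2 * math.pi * k / 3600), math.sin(2 * math.pi * k / 3600))
    for k in range(3600)
]
best_u = max(directions, key=lambda u: directional_derivative(g, u))

print(g_unit)                                   # normalized gradient
print(best_u)                                   # nearly the same direction
print(directional_derivative(g, best_u), norm)  # steepest slope is about |grad f|
```

The scan is brute force on purpose: it does not assume the answer, yet it lands on the gradient direction.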

In optimization:

- You usually want to descend: go against the gradient

- So you step in the direction −∇f(x)
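Repeating the step along −∇f(x) gives plain gradient descent: x ← x − η∇f(x). A minimal sketch on a hypothetical f(x₁, x₂) = x₁² + 3x₂² (minimum at the origin), with an assumed learning rate η = 0.1 and 100 steps, both picked for illustration:

```python
def f(x):
    # Hypothetical loss: f(x1, x2) = x1**2 + 3*x2**2, minimized at (0, 0)
    return x[0] ** 2 + 3 * x[1] ** 2

def grad_f(x):
    # Its gradient: [2*x1, 6*x2]
    return [2 * x[0], 6 * x[1]]

def gradient_descent(x0, learning_rate=0.1, steps=100):
    # Each iteration moves against the gradient: x <- x - learning_rate * grad f(x)
    x = list(x0)
    for _ in range(steps):
        g = grad_f(x)
        x = [xi - learning_rate * gi for xi, gi in zip(x, g)]
    return x

start = [1.0, 2.0]
end = gradient_descent(start)
print(f(start), f(end))  # loss drops from 13.0 to nearly 0
print(end)               # close to the minimum at (0, 0)
```

The learning rate matters: for this f, the x₂ coordinate is multiplied by (1 − 6η) on every step, so any η above 1/3 makes the iterates grow instead of shrink.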

Consider a mountain:

- You’re standing at some point on the surface

- The gradient is the direction that increases height fastest

- But if you want to lower loss, you go…