By Bradley Ryan Kinnard, Founder of Aftermath Technologies

Most people treat AI like a vending machine. Plug in a prompt, get something back, maybe tweak it, move on. That’s fine for casual use. But I wasn’t interested in casual. I wasn’t interested in tools that parrot. I wanted an AI that could retain, adapt, and eventually think like a developer. Not a glorified autocomplete, but a self-improving system that understands what it’s building and why.

So I started building one.

Not a GPT wrapper. Not a plugin for an IDE. A fully autonomous, offline, memory-retaining, self-updating AI software engineer. My end goal is simple: drop this thing into a codebase, walk away, and come back to something better. Something structured. Something intentional. And right now, I’m already at the point where it’s thinking back: understanding my commands, tracking logic across multiple steps, and retaining memory across entire sessions.

The future of software development isn’t assistive. It’s autonomous. And it’s closer than most people think.

I’m not at AGI quite yet. But Phase One is already operational. The system runs entirely offline, with local inference from a lightweight LLM core. No cloud APIs. No rate limits. No surveillance or SaaS dependencies. It’s me, my machine, and the agent.

Right now, the AI communicates through a local command interface. It accepts compound tasks and can break them into multi-step reasoning chains. Ask it to summarize a repo, identify key dependencies, and prep for migration, and it tracks that intent across every command that follows.
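To make the idea of a multi-step chain concrete, here is a minimal sketch. It is not the system’s actual planner: the real decomposition is done by the model, while this stand-in simply splits on commas and “and”. The names `TaskChain` and `decompose` are hypothetical.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TaskChain:
    intent: str                                   # the original compound request
    steps: List[str] = field(default_factory=list)
    done: List[str] = field(default_factory=list)

    def next_step(self) -> Optional[str]:
        """Return the first step not yet completed, or None when finished."""
        pending = [s for s in self.steps if s not in self.done]
        return pending[0] if pending else None

    def complete(self, step: str) -> None:
        self.done.append(step)


def decompose(request: str) -> TaskChain:
    # Naive stand-in for model-driven planning (an assumption for illustration):
    # split on commas, ", and", or a bare " and ".
    cleaned = request.strip().rstrip(".")
    parts = re.split(r",\s*(?:and\s+)?|\s+and\s+", cleaned)
    steps = [p.strip() for p in parts if p.strip()]
    return TaskChain(intent=request, steps=steps)
```

The point of keeping `intent` alongside `steps` is that every later command can be checked against the original request rather than only the most recent message.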

Even more important: it remembers.

Its memory is currently session-persistent but structured modularly for future extension. It’s not faking memory by jamming old messages back into a giant context window; it has real, organized retention. The AI understands prior reasoning, loops it back into current output, and adapts as it goes. When I say it “thinks back,” I mean it speaks with awareness of the task, the history, and where the logic is heading next.
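One way to picture organized, inspectable retention is a flat store keyed by scope. The sketch below is an illustration under assumptions, not the actual memory layer: `SessionMemory`, `MemoryEntry`, and the three entry kinds are hypothetical names chosen for the example.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class MemoryEntry:
    scope: str   # e.g. "migration"
    kind: str    # "command", "reasoning", or "reflection" (assumed categories)
    text: str


class SessionMemory:
    """Flat, scoped store: no embeddings, every entry is plainly inspectable."""

    def __init__(self) -> None:
        self._by_scope = defaultdict(list)

    def remember(self, scope: str, kind: str, text: str) -> MemoryEntry:
        entry = MemoryEntry(scope, kind, text)
        self._by_scope[scope].append(entry)
        return entry

    def recall(self, scope: str, kind: Optional[str] = None,
               last: Optional[int] = None) -> List[MemoryEntry]:
        entries = [e for e in self._by_scope.get(scope, [])
                   if kind is None or e.kind == kind]
        return entries[-last:] if last else entries

    def context_for(self, scope: str, last: int = 3) -> str:
        """Render recent entries as text to fold into the next inference call."""
        return "\n".join(f"[{e.kind}] {e.text}" for e in self.recall(scope, last=last))
```

Because recall is a plain filter over a list, you can print exactly what the agent will see for a given scope, which is the opposite of an opaque similarity lookup.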

That’s Phase One. And it works.

There are plenty of agent frameworks out there. LangChain, AutoGPT, CrewAI. All of them promise modularity and memory and planning. I tried them. Then I tore them out.

Why? Because they all depend on:

- Black-box semantic memory you can’t inspect or control

- Cloud-bound APIs with pricing and privacy risks

- Rigid framework logic that breaks as soon as you ask the AI to do something real

I didn’t want semantic search pretending to be memory. I didn’t want embedding lookups pretending to be reasoning. And I sure as hell didn’t want GPT-4 throttling my own system’s thought process.

So I built everything from scratch:

- My own memory structure (flat and scoped for now, embeddings coming later)

- My own task router and output interpreter

- My own plugin logic for tooling, execution, and file-based operations
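A task router with pluggable tools can be very small. The following is a sketch under assumptions, not the author’s code: the `PLUGINS` registry, the `plugin` decorator, and the two example tools are all hypothetical.

```python
from typing import Callable, Dict

# Registry mapping a command name to the callable that handles it.
PLUGINS: Dict[str, Callable[[str], str]] = {}


def plugin(name: str):
    """Decorator that registers a callable under a plugin name."""
    def register(fn: Callable[[str], str]) -> Callable[[str], str]:
        PLUGINS[name] = fn
        return fn
    return register


@plugin("count_lines")
def count_lines(text: str) -> str:
    return str(len(text.splitlines()))


@plugin("find_todos")
def find_todos(text: str) -> str:
    return "\n".join(line.strip() for line in text.splitlines() if "TODO" in line)


def route(command: str, payload: str) -> str:
    """Dispatch a command to its plugin; unknown commands fail loudly."""
    handler = PLUGINS.get(command)
    if handler is None:
        raise KeyError(f"no plugin registered for {command!r}")
    return handler(payload)
```

Failing loudly on an unknown command is a deliberate choice here: a router that silently guesses is harder to debug than one that refuses.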

This thing is built to evolve my way, not ride the coattails of half-baked SaaS wrappers.

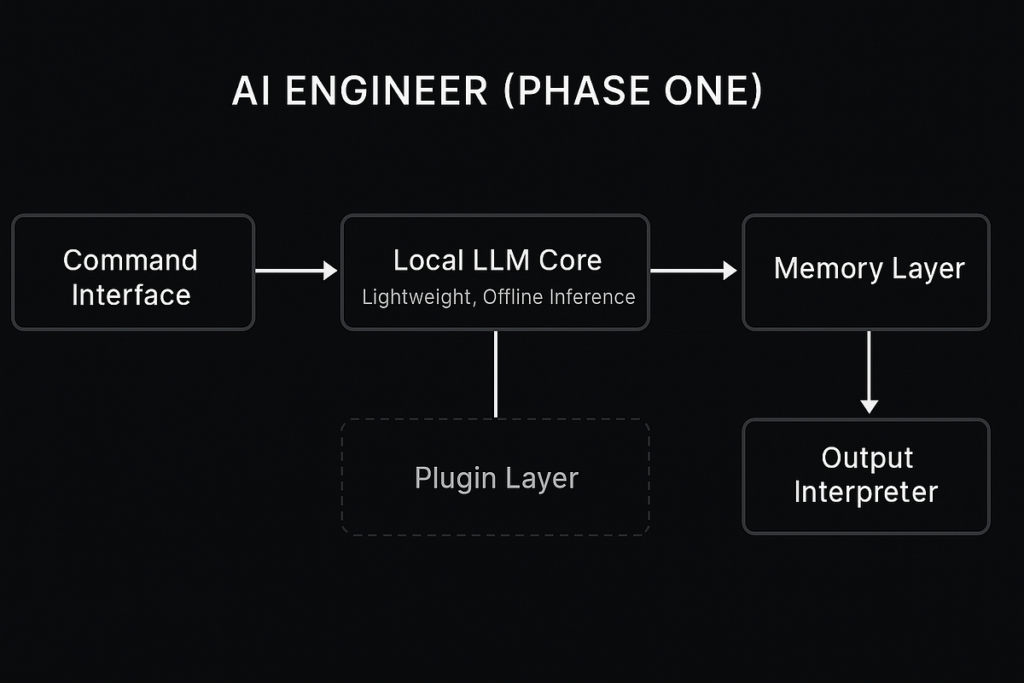

Here’s the abstract system diagram of the AI Engineer core as it stands now:

Key Components:

- Command Interface: where the user inputs natural language tasks

- Local LLM Core: lightweight, accelerated, offline inference

- Memory Layer: stores session logic, relevant past commands, and reflections

- Output Interpreter: refines what gets returned, trims noise, and gives the agent personality

- Plugin Layer (scaffolded): planned system for code execution, repo crawling, and self-repair
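The components above can be wired together roughly as follows. This is a hedged sketch of the data flow only: `AgentCore`, `interpret`, and `fake_llm` are hypothetical, and `fake_llm` is a placeholder for whatever local inference call the real system uses.

```python
from typing import Callable, List


def interpret(raw: str) -> str:
    """Output interpreter stand-in: drop blank lines and surrounding whitespace."""
    return "\n".join(line.strip() for line in raw.splitlines() if line.strip())


class AgentCore:
    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm                 # local model call, injected by the caller
        self.memory: List[str] = []    # memory layer stand-in: a flat session log

    def handle(self, command: str) -> str:
        # Fold the most recent memory back into the prompt.
        context = "\n".join(self.memory[-5:])
        prompt = f"{context}\n> {command}" if context else f"> {command}"
        reply = interpret(self.llm(prompt))
        # Record both sides of the exchange for later commands.
        self.memory.append(f"> {command}")
        self.memory.append(reply)
        return reply


def fake_llm(prompt: str) -> str:
    # Placeholder for offline inference: reports how many prompt lines it saw.
    return f"\n  seen {len(prompt.splitlines())} line(s)  \n\n"
```

Injecting the model as a plain callable keeps the core testable without any model loaded, and lets the inference backend change without touching the routing or memory code.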

Here’s the UI vision: dark mode, hacker aesthetic, intuitive as hell.

This interface doesn’t just execute commands. It tracks what the agent is thinking, shows you what it remembers, and lets you visualize the improvement loop in real time.

Panels:

- Left: “Dev Memory Stack”, which shows anchor tasks and current focus

- Center: Input + Agent Thought Process + Output

- Right: Live improvement loop status (retry count, eval score, self-rating)

📸 About the Screenshot: This Phase One image doesn’t yet show memory context, improvement loops, or architecture orchestration. That’s intentional. This one proves what matters most: the system is real, running locally, and already reasoning. Future images with full stack memory and scoring loops are coming soon.

Teaser: Phase 1 ✅ | Phase 2 🔁 | Phase 3 🔒 | Phase 4 🛠️ | Phase 5 🧬

Tags: 🧠 Memory | 🧪 Eval Loop | ⚙️ Self-Correction | 🛠️ Plugins | 🧬 Fine-Tuning

✅ Phase 1: Command understanding + memory retention

🔁 Phase 2: Code generation with Git layer + test coverage scoring

🔒 Phase 3: Self-refactoring, bug localization, retry logic

🛠️ Phase 4: Plugin toolchain: Docker, pytest, shell ops, test matrix

🧬 Phase 5: Self-improvement loop: agent rates its own work, rewrites, repeats
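The Phase 5 loop (rate, rewrite, repeat) can be sketched in a few lines. This is an illustration under assumptions, not the planned implementation: `improve`, the score threshold, and the toy scorer and rewriter are all hypothetical, and a real system would score with tests or a model rather than string checks.

```python
from typing import Callable, Tuple


def improve(draft: str,
            score: Callable[[str], float],
            rewrite: Callable[[str], str],
            target: float = 0.9,
            max_retries: int = 3) -> Tuple[str, float, int]:
    """Rate, rewrite, repeat. Returns (best draft, its score, retries used)."""
    best, best_score = draft, score(draft)
    retries = 0
    while best_score < target and retries < max_retries:
        candidate = rewrite(best)
        retries += 1
        candidate_score = score(candidate)
        if candidate_score > best_score:   # keep only genuine improvements
            best, best_score = candidate, candidate_score
    return best, best_score, retries


def toy_score(code: str) -> float:
    # Toy rubric: half credit each for a docstring and a return annotation.
    checks = ['"""' in code, "->" in code]
    return sum(checks) / len(checks)


def toy_rewrite(code: str) -> str:
    # Toy rewriter: fix one missing item per pass.
    if "->" not in code:
        return code.replace("):", ") -> int:", 1)
    if '"""' not in code:
        head, _, body = code.partition("\n")
        return f'{head}\n    """Add two numbers."""\n{body}'
    return code
```

The retry cap matters as much as the score: without it, a scorer that can never be satisfied turns the loop into an infinite one.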

Endgame: An AI software engineer that:

- Takes intent

- Builds modules

- Tests them

- Improves them

- Documents them

- And does it again, without intervention

This project rewired how I think about software development:

- Prompting is a crutch: You don’t need better prompts. You need better architecture.

- Memory > model size: GPT-4 forgets what it just said. Mine doesn’t.

- Cloud is a trap: If you can’t run it locally, you’re renting intelligence.

- Simplicity scales: This isn’t a stack of wrappers. It’s a single precision machine.

I’m already planning hybrid extension support: offline-first by default, but with optional secure uplinks to external agents or local cloud services.

That way you get the best of both worlds:

- Full management

- No vendor lock-in

- Optional scalability
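An offline-first default with opt-in uplinks could be expressed as configuration along these lines. This is a sketch of the idea only, since the hybrid design is still planned: `RuntimeConfig`, `Uplink`, and the HTTPS check as a proxy for “secure” are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Uplink:
    name: str
    url: str
    enabled: bool = False   # uplinks are opt-in, never on by default


@dataclass
class RuntimeConfig:
    offline_only: bool = True                       # offline-first default
    uplinks: List[Uplink] = field(default_factory=list)

    def active_uplinks(self) -> List[Uplink]:
        """Return uplinks that may be used; always empty while offline_only is set."""
        if self.offline_only:
            return []
        # Assumption: treat only HTTPS endpoints as acceptable for a secure uplink.
        return [u for u in self.uplinks
                if u.enabled and u.url.startswith("https://")]
```

With this shape, nothing leaves the machine unless two switches are flipped: the global `offline_only` flag and the per-uplink `enabled` flag.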

I’ll be publishing architecture papers on:

- Recursive self-evaluation scoring

- Contradiction-driven memory mutation

- Hybrid symbolic+neural perception stacks

This isn’t a playground agent. It’s a real system. And it’s evolving fast.

I’m not selling this. Not yet. And I’m not releasing it prematurely either. That will come when it’s ready to prove itself publicly.

Until then?

This is a peek behind the scenes.

It’s not hype. It’s not fluff.

It’s real. It’s working. And it’s already learning to think.

— Bradley Ryan Kinnard

Founder, Aftermath Technologies

aftermathtech.com