In my previous article on GANs (link to basic GAN article), we explored the foundational concepts of Generative Adversarial Networks (GANs). Today, we’ll dive into one of the most important developments in GAN architecture: Deep Convolutional GANs (DCGANs), introduced by Radford et al. in their seminal 2015 paper.

What are DCGANs?

DCGANs represent a significant advance in GAN architecture: they build both the generator and the discriminator from convolutional neural networks. While earlier GANs struggled with training stability and image quality, DCGANs introduced a set of architectural guidelines that made training more stable and substantially improved the quality of generated images.

The DCGAN architecture follows these core principles:

- Replace Pooling with Strided Convolutions: Instead of pooling layers, DCGANs use strided convolutions in the discriminator and fractional-strided (transposed) convolutions in the generator. This lets the network learn its own spatial downsampling and upsampling.

- Batch Normalization: Both the generator and the discriminator use batch normalization to stabilize learning. However, it is not applied to the generator’s output layer or the discriminator’s input layer.

- Remove Fully Connected Hidden Layers: The model uses convolutional features throughout, eliminating dense layers except at the input/output.

- Activation Functions:

  - Generator: ReLU activation in all layers except the output, which uses Tanh

  - Discriminator: LeakyReLU activation in all layers, with a sigmoid on the final output
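To make the activation choices concrete, here is a small plain-Python sketch of the three functions. The 0.2 negative slope matches the `nn.LeakyReLU(0.2)` used in the discriminator code below. The `normalize` helper is a hypothetical name that mirrors the `transforms.Normalize` call with mean 0.5 and std 0.5 used later in the data preprocessing step: it maps pixel values from [0, 1] into [-1, 1], the same range the generator’s Tanh output covers.

```python
import math


def relu(x: float) -> float:
    # Generator hidden layers: negative inputs are zeroed
    return max(0.0, x)


def leaky_relu(x: float, slope: float = 0.2) -> float:
    # Discriminator layers: negative inputs keep a small slope (0.2)
    return x if x >= 0 else slope * x


def tanh(x: float) -> float:
    # Generator output layer: squashes any input into (-1, 1)
    return math.tanh(x)


def normalize(pixel: float, mean: float = 0.5, std: float = 0.5) -> float:
    # Hypothetical helper mirroring transforms.Normalize([0.5]*3, [0.5]*3)
    return (pixel - mean) / std


for x in (-2.0, -0.5, 0.0, 1.5):
    print(f"x={x:5.1f}  relu={relu(x):.2f}  leaky={leaky_relu(x):.2f}  tanh={tanh(x):.3f}")

# Real pixels in [0, 1] land in [-1, 1], the same range as the Tanh output
print(normalize(0.0), normalize(1.0))
```

Because real images are rescaled to [-1, 1] and the generator’s last layer is a Tanh, real and generated images reach the discriminator on the same scale.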

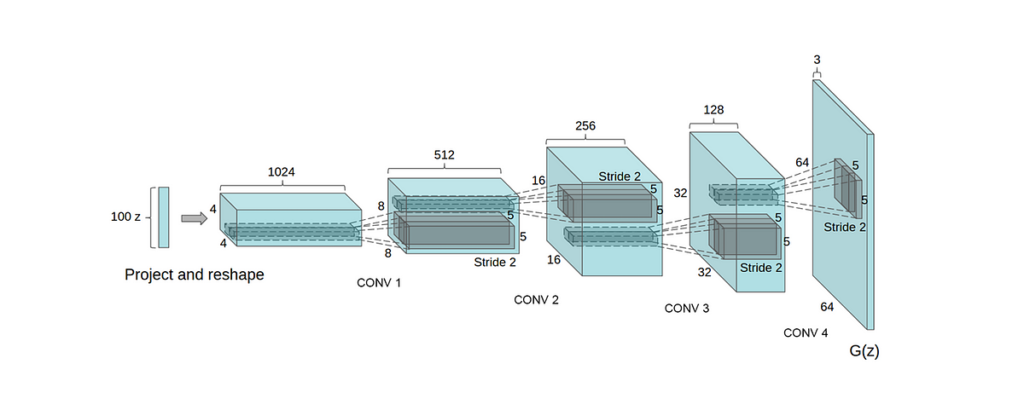

The Generator Architecture

The generator transforms a random noise vector into an image through a series of upsampling layers:

- Input: random noise vector (z) of dimension 100

- Project and reshape into 4×4×1024 feature maps

- Series of fractional-strided convolutions to gradually increase the spatial size

- Final layer outputs an image with Tanh activation
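The spatial sizes follow from the output-size formula for a transposed convolution (assuming no dilation and no output padding): out = (in - 1) × stride - 2 × padding + kernel. The plain-Python sketch below applies it to the kernel, stride, and padding values used in the generator code that follows (kernel 4 everywhere; stride 1 and padding 0 for the initial projection, then stride 2 and padding 1), tracing the 1×1 noise input up to a 64×64 image.

```python
def conv_transpose_out(size: int, kernel: int, stride: int, padding: int) -> int:
    # Output size of a transposed convolution (dilation=1, output_padding=0)
    return (size - 1) * stride - 2 * padding + kernel


# (kernel, stride, padding) for each generator layer, as in the Generator class
generator_layers = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]

sizes = [1]  # the noise vector enters as a tensor of shape (100, 1, 1)
for kernel, stride, padding in generator_layers:
    sizes.append(conv_transpose_out(sizes[-1], kernel, stride, padding))

print(" -> ".join(f"{s}x{s}" for s in sizes))
```

With kernel 4, stride 2, and padding 1 the formula simplifies to out = 2 × in, so each of those layers exactly doubles the resolution: 1×1, 4×4, 8×8, 16×16, 32×32, 64×64.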

The Discriminator Architecture

The discriminator follows a traditional CNN architecture:

1. Input: image (e.g., 64×64×3)

2. Series of strided convolutions with LeakyReLU

3. Flatten and output a single sigmoid probability
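A regular strided convolution shrinks its input according to out = floor((in + 2 × padding - kernel) / stride) + 1 (assuming no dilation). Applying it to the discriminator implemented below, where all six convolutions (the initial one, four blocks, and the output layer) use kernel 4, stride 2, and padding 1, shows how a 64×64 image is reduced to a single value per image, which the sigmoid then turns into a probability:

```python
def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    # Output size of a standard convolution (dilation=1)
    return (size + 2 * padding - kernel) // stride + 1


# Initial conv + four conv blocks + output conv, all kernel=4, stride=2, padding=1
discriminator_layers = [(4, 2, 1)] * 6

sizes = [64]
for kernel, stride, padding in discriminator_layers:
    sizes.append(conv_out(sizes[-1], kernel, stride, padding))

print(" -> ".join(f"{s}x{s}" for s in sizes))
```

A common alternative is to stop downsampling at 4×4 and finish with a kernel-4, stride-1, padding-0 convolution, which also yields a 1×1 output since `conv_out(4, 4, 1, 0) == 1`.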

Let’s implement DCGAN using PyTorch. We’ll break the implementation down into its key components:

Generator Class

```python
import torch.nn as nn


class Generator(nn.Module):
    def __init__(self, noise_channels, input_channels, output_channels, input_features):
        # input_channels is unused; it is kept to match the constructor call in the training loop
        super(Generator, self).__init__()
        self.gen = nn.Sequential(
            # Initial projection and reshape: 1x1 noise -> 4x4 feature maps
            self.transposed_convolution_block(noise_channels, input_features * 16, 4, 1, 0),
            # Series of transposed convolutions, each doubling the spatial size
            self.transposed_convolution_block(input_features * 16, input_features * 8, 4, 2, 1),  # 8x8
            self.transposed_convolution_block(input_features * 8, input_features * 4, 4, 2, 1),   # 16x16
            self.transposed_convolution_block(input_features * 4, input_features * 2, 4, 2, 1),   # 32x32
            # Output layer: no batch norm; Tanh maps pixel values into [-1, 1]
            nn.ConvTranspose2d(input_features * 2, output_channels, kernel_size=4, stride=2, padding=1),  # 64x64
            nn.Tanh(),
        )

    def transposed_convolution_block(self, in_filters, out_filters, kernel_size, stride, padding):
        # Transposed convolution -> batch norm -> ReLU
        return nn.Sequential(
            nn.ConvTranspose2d(in_filters, out_filters, kernel_size, stride, padding, bias=False),
            nn.BatchNorm2d(out_filters),
            nn.ReLU(),
        )

    def forward(self, x):
        return self.gen(x)
```
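Most of the generator’s capacity sits in its first two layers. The plain-Python sketch below counts the learnable parameters by hand, assuming the hyperparameters used in the training section (`Noise_dimensions = 100`, `Generator_features = 64`, `img_channels = 3`). A transposed-convolution weight has in × out × kernel × kernel entries, each `BatchNorm2d` adds a learnable scale and shift per channel, and only the output layer keeps its bias.

```python
def conv_params(in_ch: int, out_ch: int, kernel: int = 4, bias: bool = False) -> int:
    # Weight has in_ch * out_ch * kernel * kernel entries, plus one bias per output channel
    return in_ch * out_ch * kernel * kernel + (out_ch if bias else 0)


def batchnorm_params(channels: int) -> int:
    # Learnable scale (gamma) and shift (beta) per channel; running stats are buffers
    return 2 * channels


noise_dim, features, img_channels = 100, 64, 3
widths = [noise_dim, features * 16, features * 8, features * 4, features * 2]

total = 0
for in_ch, out_ch in zip(widths, widths[1:]):
    # Hidden block: bias-free transposed convolution followed by batch norm
    layer = conv_params(in_ch, out_ch) + batchnorm_params(out_ch)
    print(f"{in_ch:>4} -> {out_ch:>4}: {layer:,}")
    total += layer

# Output layer: transposed convolution with its default bias; Tanh has no parameters
total += conv_params(widths[-1], img_channels, bias=True)
print(f"total: {total:,}")
```

The total comes to about 12.7 million parameters, and the 1024 → 512 block alone accounts for roughly two thirds of them. With PyTorch available, `sum(p.numel() for p in generator.parameters())` should report the same count.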

Discriminator Class

```python
import torch.nn as nn


class Discriminator(nn.Module):
    def __init__(self, input_channels, input_features):
        super(Discriminator, self).__init__()
        self.disc = nn.Sequential(
            # Initial convolution: no batch norm on the input layer (64x64 -> 32x32)
            nn.Conv2d(input_channels, input_features, kernel_size=4, stride=2, padding=1),
            nn.LeakyReLU(0.2),
            # Series of convolution blocks, each halving the spatial size
            self.convolution_block(input_features, input_features * 2, 4, 2, 1),       # 16x16
            self.convolution_block(input_features * 2, input_features * 4, 4, 2, 1),   # 8x8
            self.convolution_block(input_features * 4, input_features * 8, 4, 2, 1),   # 4x4
            self.convolution_block(input_features * 8, input_features * 16, 4, 2, 1),  # 2x2
            # Output layer: one value per image (1x1), squashed into a probability
            nn.Conv2d(input_features * 16, 1, kernel_size=4, stride=2, padding=1),
            nn.Sigmoid(),
        )

    def convolution_block(self, in_filters, out_filters, kernel_size, stride, padding):
        # Strided convolution -> batch norm -> LeakyReLU
        return nn.Sequential(
            nn.Conv2d(in_filters, out_filters, kernel_size, stride, padding, bias=False),
            nn.BatchNorm2d(out_filters),
            nn.LeakyReLU(0.2),
        )

    def forward(self, x):
        return self.disc(x)
```
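The discriminator’s sigmoid output is scored with `nn.BCELoss` in the training loop below. For a predicted probability p and target y, binary cross-entropy is -[y·log(p) + (1 - y)·log(1 - p)], and `nn.BCELoss` averages it over the batch by default. The plain-Python sketch below uses made-up probabilities (illustration values, not training results) to show the two losses the loop computes: the discriminator averages its loss on real images (target 1) and fakes (target 0), while the generator is scored against target 1 on its fakes, so it is rewarded when the discriminator is fooled.

```python
import math


def bce(p: float, y: float) -> float:
    # Binary cross-entropy for one prediction p in (0, 1) and target y in {0, 1}
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


# Hypothetical discriminator outputs, chosen only for illustration
d_real = 0.9  # D(x): probability assigned to a real image being real
d_fake = 0.2  # D(G(z)): probability assigned to a generated image being real

loss_discriminator = (bce(d_real, 1) + bce(d_fake, 0)) / 2
loss_generator = bce(d_fake, 1)  # the generator wants D(G(z)) close to 1

print(f"loss D: {loss_discriminator:.4f}, loss G: {loss_generator:.4f}")
```

Here the generator loss is -log(0.2), about 1.61, because the discriminator confidently rejects the fake; as the generator improves and D(G(z)) rises toward 1, that loss falls toward 0.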

The training process follows these key steps:

1. Hyperparameters:

```python
import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
BATCH_SIZE = 64
LR = 2e-4
image_size = 64
img_channels = 3
Discriminator_features = 64
Generator_features = 64
Noise_dimensions = 100
epochs = 100
```

2. Data preprocessing and cleaning

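```python
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

# ImageFolder expects a directory of image subfolders, not a .zip archive:
# extract img_align_celeba.zip into BasicGAN/data first (the images end up in
# BasicGAN/data/img_align_celeba/, which ImageFolder treats as a single class)
data = "BasicGAN/data"
transform = transforms.Compose(
    [
        transforms.Resize((image_size, image_size)),
        transforms.ToTensor(),
        # Map pixels from [0, 1] to [-1, 1] to match the generator's Tanh output
        transforms.Normalize(
            [0.5 for _ in range(img_channels)], [0.5 for _ in range(img_channels)]
        ),
    ]
)
dataset = datasets.ImageFolder(root=data, transform=transform)
dataloader = DataLoader(dataset, batch_size=BATCH_SIZE, shuffle=True)
```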

3. Training loop:

```python
import torch
import torch.nn as nn
import torch.optim as optim
import torchvision
from torch.utils.tensorboard import SummaryWriter

generator = Generator(Noise_dimensions, img_channels, img_channels, Generator_features).to(device)
discriminator = Discriminator(img_channels, Discriminator_features).to(device)
# initialize_weights is a helper that is not shown in this article
initialize_weights(generator)
initialize_weights(discriminator)

criterion = nn.BCELoss()
generator_optimizer = optim.Adam(generator.parameters(), lr=LR, betas=(0.5, 0.999))
discriminator_optimizer = optim.Adam(discriminator.parameters(), lr=LR, betas=(0.5, 0.999))

# Fixed noise lets us watch how the same latent vectors evolve during training
fixed_noise = torch.randn(32, Noise_dimensions, 1, 1).to(device)

# Create the TensorBoard writers and the step counter once, before training
writer_fake = SummaryWriter("logs/fake")
writer_real = SummaryWriter("logs/real")
step = 0

for epoch in range(epochs):
    for idx, (real, _) in enumerate(dataloader):
        real = real.to(device)
        # Match the noise batch to the real batch (the last batch may be smaller)
        noise = torch.randn(real.size(0), Noise_dimensions, 1, 1).to(device)
        fake = generator(noise)

        # Discriminator: real images -> target 1, fakes -> target 0
        disc_real = discriminator(real).reshape(-1)
        # detach() keeps this loss from backpropagating into the generator
        disc_fake = discriminator(fake.detach()).reshape(-1)
        loss_disc_real = criterion(disc_real, torch.ones_like(disc_real))
        loss_disc_fake = criterion(disc_fake, torch.zeros_like(disc_fake))
        loss_Discriminator = (loss_disc_real + loss_disc_fake) / 2
        discriminator.zero_grad()
        loss_Discriminator.backward()
        discriminator_optimizer.step()

        # Generator: label the fakes as real (target 1) to fool the discriminator
        output = discriminator(fake).reshape(-1)
        loss_Generator = criterion(output, torch.ones_like(output))
        generator.zero_grad()
        loss_Generator.backward()
        generator_optimizer.step()

        # Every 100 batches, print the losses and log image grids to TensorBoard
        if idx % 100 == 0:
            print(
                f"Epoch [{epoch}/{epochs}] Batch {idx}/{len(dataloader)} "
                f"Loss D: {loss_Discriminator.item():.4f}, loss G: {loss_Generator.item():.4f}"
            )
            with torch.no_grad():
                fake = generator(fixed_noise)
                # Take out (up to) 32 examples
                img_grid_real = torchvision.utils.make_grid(real[:32], normalize=True)
                img_grid_fake = torchvision.utils.make_grid(fake[:32], normalize=True)
                writer_real.add_image("Real", img_grid_real, global_step=step)
                writer_fake.add_image("Fake", img_grid_fake, global_step=step)
            step += 1
```
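The training loop calls an `initialize_weights` helper that this article doesn’t define. Below is a minimal sketch of one possible version, assuming it follows the DCGAN paper’s recommendation to initialize weights from a zero-centered normal distribution with standard deviation 0.02. The function body is an assumption, not the author’s exact code, and the small model at the end is a stand-in used only to check the result.

```python
import torch
import torch.nn as nn


def initialize_weights(model: nn.Module) -> None:
    # Assumed implementation: draw conv, transposed-conv and batch-norm weights
    # from N(0, 0.02), following the DCGAN paper's initialization advice
    for m in model.modules():
        if isinstance(m, (nn.Conv2d, nn.ConvTranspose2d, nn.BatchNorm2d)):
            nn.init.normal_(m.weight, mean=0.0, std=0.02)


# Quick check on a small stand-in model (not the article's Generator)
model = nn.Sequential(
    nn.ConvTranspose2d(100, 64, kernel_size=4, stride=1, padding=0, bias=False),
    nn.BatchNorm2d(64),
    nn.ReLU(),
)
initialize_weights(model)
print(f"conv weight std: {model[0].weight.std().item():.4f}")
```

Some implementations, such as the official PyTorch DCGAN tutorial, instead draw batch-norm scales from N(1, 0.02) and set batch-norm biases to 0; in both variants the convolution weights follow N(0, 0.02).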