Attention mechanisms have revolutionized deep learning, enabling models to focus on the most important information while ignoring irrelevant details. This capability is essential for tasks like natural language processing, image captioning, and speech recognition. If you’re looking to understand such advanced topics deeply, enrolling in Deep Learning Training is highly recommended.

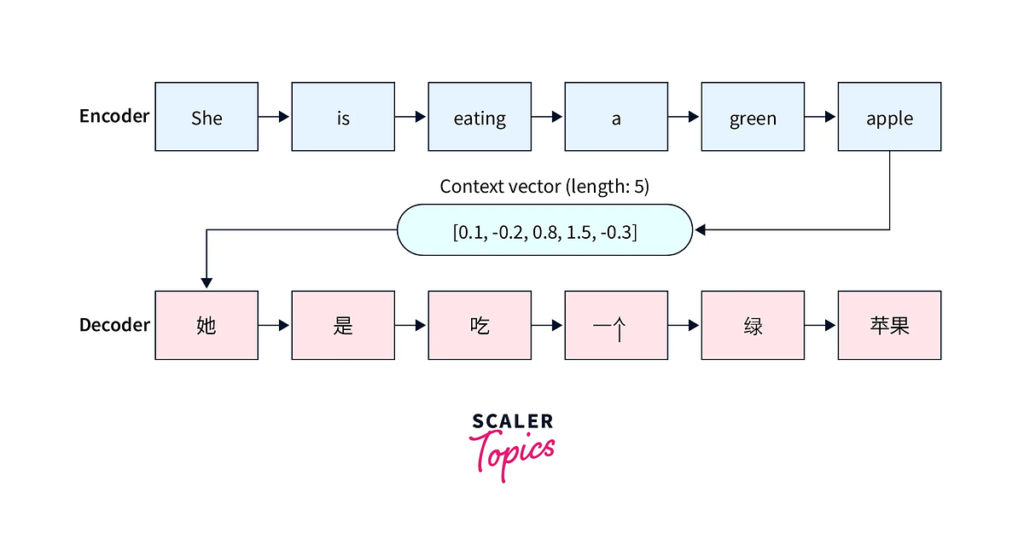

Attention mechanisms are a crucial innovation in deep learning, particularly for processing sequential data such as text and speech, as well as images. They were introduced to address the limitations of traditional sequence-to-sequence models built on recurrent neural networks (RNNs) and long short-term memory (LSTM) networks, which struggle to handle long-range dependencies in sequences.

There are several key concepts in attention mechanisms:

- Query, Key, and Value: In the context of attention, the input data is transformed into three vectors: query, key, and value. The query vector is used to determine the relevance of each key vector, and those relevance scores in turn weight the value vectors during the attention process.

- Scaled Dot-Product Attention: This involves calculating the dot product between the query and key vectors, scaling the result, and applying a softmax function to obtain attention weights.

- Self-Attention: Self-attention is a special case where the query, key, and value vectors all come from the same input sequence. This allows the model to capture dependencies within the same sequence, enabling it to understand relationships between words in a sentence, for example.

- Multi-Head Attention: Instead of using a single set of attention weights, multi-head attention uses multiple sets (or “heads”) to capture different aspects of the input data in parallel. Each attention head processes the input independently, and the results are combined to form a more comprehensive understanding of the sequence.
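The query, key, and value concepts above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: it uses lists instead of tensors, omits the learned projection matrices that real models apply before attention, and the function names and the toy `tokens` embeddings are assumptions made for the example.

```python
import math

def softmax(scores):
    """Turn a list of raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Relevance of every key to this query, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Self-attention: queries, keys, and values all come from the same sequence.
# Hypothetical 2-dimensional token embeddings, chosen only for illustration.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1 and says how strongly one token attends to every token in the sequence. In a multi-head setup, this same computation would run once per head on differently projected inputs, with the per-head outputs concatenated afterwards.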

1. Self-Attention (Scaled Dot-Product Attention)

● Focuses on relationships within a single sequence.

● Widely used in NLP tasks.

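● Formula: $\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$, where $Q$, $K$, and $V$ are the query, key, and value matrices and $d_k$ is the dimension of the key vectors.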

2. Global vs. Local Attention

● Global: Considers all input positions.

● Local: Focuses on specific parts of the sequence.
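One way to picture the difference between global and local attention is as a mask over positions: global attention normalizes the scores over every input position, while local attention only considers a window around a chosen position. The sketch below assumes a fixed window centred on a given index; the helper names, the window size, and the example scores are all hypothetical.

```python
import math

def attention_weights(scores, allowed):
    """Softmax over the scores at allowed positions; others get weight 0."""
    kept = [s for s, ok in zip(scores, allowed) if ok]
    peak = max(kept)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) if ok else 0.0 for s, ok in zip(scores, allowed)]
    total = sum(exps)
    return [e / total for e in exps]

def global_mask(length):
    """Global attention: every input position is visible."""
    return [True] * length

def local_mask(length, position, window=1):
    """Local attention: only positions within `window` of `position`."""
    return [abs(i - position) <= window for i in range(length)]

# Hypothetical raw attention scores for a single query over five positions.
scores = [0.5, 2.0, 1.0, 0.1, 1.5]
global_w = attention_weights(scores, global_mask(len(scores)))
local_w = attention_weights(scores, local_mask(len(scores), position=2, window=1))
```

With these inputs, `global_w` spreads weight over all five positions, whereas `local_w` assigns zero weight to the first and last positions and renormalizes over the three positions inside the window.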

Attention mechanisms are applied in areas such as:

● Natural Language Processing:

○ Machine translation (e.g., English to French).

○ Sentiment analysis.

○ Chatbots.

● Computer Vision:

○ Image captioning.

○ Object detection.

○ Medical imaging.

Delhi is a great place to learn advanced technologies like deep learning, thanks to its strong tech community and skilled trainers. The city also offers good opportunities for networking and practical learning. To deepen your knowledge, join Deep Learning Training in Delhi, which includes real-world examples and hands-on projects.

Steps in Transformer Architecture:

- Input is tokenized and embedded.

- Positional encodings are added.

- Multi-head attention layers process the input.

- Outputs are fed into feed-forward neural networks.
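The first two steps above can be sketched as follows. The text does not say which positional encoding is meant, so this example assumes the fixed sinusoidal scheme, which is one common choice; the toy vocabulary, the embedding values, and the function names are hypothetical and exist only to make the sketch runnable.

```python
import math

def positional_encoding(seq_len, d_model):
    """Sinusoidal encodings: sine on even dimensions, cosine on odd ones."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Each pair of dimensions (2k, 2k+1) shares one frequency.
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

def add_positions(embeddings):
    """Add a positional encoding to each token embedding, element-wise."""
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, row)] for emb, row in zip(embeddings, pe)]

# Hypothetical toy vocabulary and 4-dimensional embedding table.
vocab = {"the": 0, "cat": 1, "sat": 2}
embedding_table = [
    [0.1, 0.2, 0.3, 0.4],
    [0.5, 0.6, 0.7, 0.8],
    [0.9, 1.0, 1.1, 1.2],
]

token_ids = [vocab[word] for word in "the cat sat".split()]  # step 1: tokenize
embedded = [embedding_table[t] for t in token_ids]           # step 1: embed
encoded = add_positions(embedded)                            # step 2: add positions
```

The resulting `encoded` vectors are what the multi-head attention layers would then receive. Because attention itself is order-agnostic, adding position information at this stage is what lets the model distinguish "the cat sat" from "sat the cat".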

Thriving Tech Scene: Noida is a hub for AI enthusiasts, offering a dynamic environment for learning deep learning techniques like the Transformer architecture.

Expert Trainers: Learn from experienced professionals who offer practical knowledge and guide you through real-world applications.

Hands-On Experience: Dive deep into the Transformer architecture with immersive, real-world projects that bring theory to life.

Theory Meets Practice: Bridge the gap between theoretical knowledge and real-world applications through Deep Learning Training in Noida.

Step into the Deep Learning Online Course, where you’ll unravel the secrets of RNNs and attention mechanisms. Get ready to turn theory into action as you gain hands-on expertise and discover how these AI techniques drive real-world innovation.

For foundational knowledge in these concepts, explore Machine Learning Fundamentals as a stepping stone.

Here is the heatmap visualizing how attention is distributed across input tokens during translation.

Attention mechanisms are crucial in contemporary deep learning, solving complex challenges across various domains. By effectively focusing on relevant data, they enhance performance and efficiency across tasks. Explore practical insights and hands-on projects through advanced training programs to truly master this transformative technology.