AutoGluon is an AutoML Python library that streamlines feature engineering, model selection, hyperparameter tuning, and evaluation. AutoGluon has a TimeSeriesPredictor made specifically for time series forecasting.

AutoGluon’s time series module automates a lot of things, including model selection, tuning, and feature engineering. It can create ensembles by combining multiple models. The API is easy to use (especially if you have built models with AWS SageMaker before).
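To make the idea of an ensemble concrete, here is a minimal sketch of a weighted average over per-model forecasts. It is not AutoGluon's internal code: the model names, forecast values, and weights are hypothetical, and a real ensemble would pick its weights from validation performance.

```python
import numpy as np

# Hypothetical forecasts from three models for the same 4 future steps.
model_forecasts = {
    "seasonal_naive": np.array([100.0, 110.0, 120.0, 130.0]),
    "gradient_boosting": np.array([104.0, 108.0, 125.0, 128.0]),
    "deep_learning": np.array([98.0, 112.0, 118.0, 135.0]),
}

# Hypothetical weights; in practice these would be tuned on validation data.
weights = {"seasonal_naive": 0.2, "gradient_boosting": 0.5, "deep_learning": 0.3}


def weighted_ensemble(forecasts, weights):
    """Combine per-model forecasts into one forecast via a weighted average."""
    total_weight = sum(weights.values())
    combined = sum(weights[name] * forecasts[name] for name in forecasts)
    return combined / total_weight


ensemble_forecast = weighted_ensemble(model_forecasts, weights)
print(ensemble_forecast)
```

Each step of the combined forecast always lies between the lowest and highest individual model forecast for that step, which is why averaging tends to smooth out the worst misses of any single model.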

The workflow with AutoGluon for time series involves preparing the dataset, initializing the TimeSeriesPredictor, training models automatically, and generating forecasts.

Here is an example with synthetic data. We start by declaring:

- time_column: The timestamp for each observation.

- target_column: The value to be predicted.

- item_id_column (optional): Identifies the different time series in a dataset (needed when forecasting multiple series at once).

import numpy as np
import pandas as pd
from autogluon.timeseries import TimeSeriesDataFrame, TimeSeriesPredictor

# Create more complex sample data with seasonal patterns
np.random.seed(42)  # for reproducibility

# Generate timestamps for 3 years of monthly data
# ("ME" = month end; pandas versions older than 2.2 use "M" instead)
timestamps = pd.date_range("2020-01-01", periods=36, freq="ME")

# Function to create a seasonal pattern
def create_seasonal_data(base_value, trend, seasonal_amplitude, noise_level):
    time = np.arange(len(timestamps))
    # Trend component
    trend_component = base_value + trend * time
    # Seasonal component (yearly seasonality)
    seasonal_component = seasonal_amplitude * np.sin(2 * np.pi * time / 12)
    # Random noise
    noise = np.random.normal(0, noise_level, len(time))
    return trend_component + seasonal_component + noise

# Create different patterns for different items
data = {
    "item_id": ["A"] * 36 + ["B"] * 36 + ["C"] * 36,
    "timestamp": timestamps.tolist() * 3,
    "sales": np.concatenate([
        # Item A: Strong seasonality, moderate trend, low noise
        create_seasonal_data(base_value=1000, trend=15, seasonal_amplitude=200, noise_level=30),
        # Item B: Moderate seasonality, high trend, moderate noise
        create_seasonal_data(base_value=500, trend=25, seasonal_amplitude=100, noise_level=50),
        # Item C: Weak seasonality, negative trend, high noise
        create_seasonal_data(base_value=1500, trend=-10, seasonal_amplitude=50, noise_level=100),
    ]),
}

# Create a long-format DataFrame. Keep item_id and timestamp as regular
# columns: from_data_frame looks them up by column name and builds the
# (item_id, timestamp) index itself.
df = pd.DataFrame(data)

# Convert to TimeSeriesDataFrame
train_data = TimeSeriesDataFrame.from_data_frame(
    df,
    id_column="item_id",
    timestamp_column="timestamp",
)

Output:

item_id timestamp sales

0 A 2020-01-31 100

1 A 2020-02-29 110

2 A 2020-03-31 120

3 A 2020-04-30 130

4 A 2020-05-31 140

Initializing and Training the Predictor

To initialize the TimeSeriesPredictor we specify the target column and the forecast horizon; the time and item ID columns were already declared when we built the TimeSeriesDataFrame.

We don’t have to do anything else for the predictor; it will automatically select a model for us.

# Initialize predictor
predictor = TimeSeriesPredictor(
    prediction_length=6,  # Forecast horizon
    eval_metric="MASE",   # Evaluation metric
    target="sales",       # Target variable
)

# Train the predictor
predictor.fit(train_data=train_data)
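The `eval_metric='MASE'` setting above refers to the mean absolute scaled error: the forecast's mean absolute error divided by the in-sample error of a naive forecast that repeats an earlier value. The sketch below computes it by hand with NumPy. The function name `mase` and the toy numbers are illustrative, and AutoGluon's own implementation (seasonal period handling, aggregation across items) may differ in detail.

```python
import numpy as np


def mase(y_history, y_true, y_pred, season_length=1):
    """Mean absolute scaled error of a forecast.

    Numerator: mean absolute error of the forecast over the horizon.
    Denominator: mean absolute error of a (seasonal) naive forecast
    computed on the history, i.e. |y[t] - y[t - season_length]|.
    """
    y_history = np.asarray(y_history, dtype=float)
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)

    forecast_mae = np.mean(np.abs(y_true - y_pred))
    naive_mae = np.mean(
        np.abs(y_history[season_length:] - y_history[:-season_length])
    )
    return forecast_mae / naive_mae


history = [10, 12, 14, 16, 18]   # toy training values
actual = [20, 22]                # toy values over the forecast horizon
predicted = [19, 24]             # toy forecast

score = mase(history, actual, predicted)
print(score)
```

A value below 1 means the forecast beat the naive baseline on average; a value above 1 means the naive baseline would have done better.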

The predictor will automatically do feature engineering (e.g., lagged values, seasonality features) and train multiple models, including statistical models (ARIMA), machine learning models (LightGBM, CatBoost), and deep learning models (N-BEATS).
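To picture what lagged values and seasonality features look like, here is a small pandas sketch. It is not AutoGluon's internal feature pipeline: the helper `add_basic_features`, the column names, and the toy values are assumptions made purely for illustration.

```python
import pandas as pd

# Toy monthly series for a single item (hypothetical values).
toy = pd.DataFrame({
    "item_id": ["A"] * 6,
    "timestamp": pd.date_range("2020-01-01", periods=6, freq="MS"),
    "sales": [100.0, 110.0, 120.0, 130.0, 140.0, 150.0],
})


def add_basic_features(frame, lags=(1, 2)):
    """Add lagged target values and a month-of-year feature per item."""
    out = frame.sort_values(["item_id", "timestamp"]).copy()
    for lag in lags:
        # shift() inside each item so lags never leak across series
        out[f"sales_lag_{lag}"] = out.groupby("item_id")["sales"].shift(lag)
    # A simple calendar feature that lets a model pick up yearly seasonality
    out["month"] = out["timestamp"].dt.month
    return out


features = add_basic_features(toy)
print(features)
```

The first rows of each lag column are empty because there is no earlier observation to copy from; tabular models either drop those rows or treat the gaps as missing values.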

Generate forecasts for the next prediction_length time steps.

# Generate forecasts
forecasts = predictor.predict(train_data)
print(forecasts.head())

Output:

item_id timestamp sales

0 A 2022-01-31 350.1234

1 A 2022-02-28 360.5678

2 A 2022-03-31 370.9876

3 B 2022-01-31 310.4321

4 B 2022-02-28 320.8765

AutoGluon includes built-in visualization tools.

# Plot forecasted vs actual values
# (pass the forecasts as the second argument so they are drawn next to the history)
predictor.plot(train_data, forecasts)

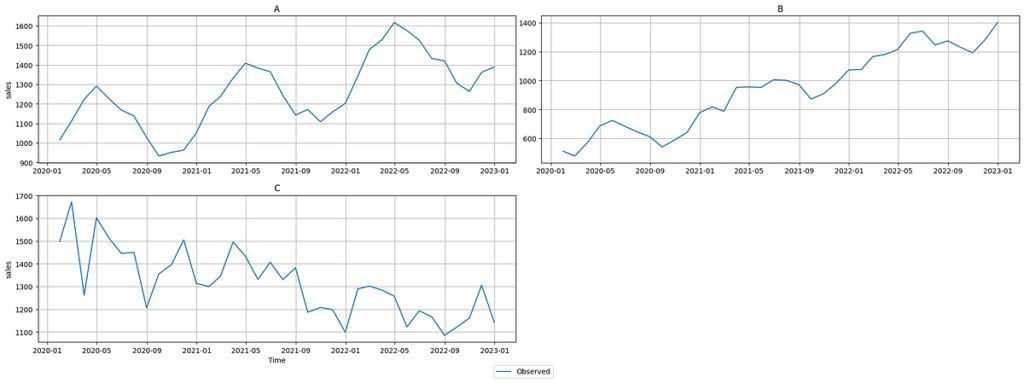

There are three different products in our dataset, so we get a prediction for each one.

AutoGluon provides metrics like RMSE, MAPE, and MAE to evaluate model performance.

# Evaluate model performance
# (the last prediction_length steps of each series are held out and scored)
performance = predictor.evaluate(train_data)
print(performance)
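For reference, the three metrics named above can be computed by hand. The following is a minimal NumPy sketch with made-up numbers; the function name `point_forecast_errors` is hypothetical, and MAPE is returned as a fraction rather than a percentage.

```python
import numpy as np


def point_forecast_errors(y_true, y_pred):
    """Return MAE, RMSE, and MAPE for a point forecast."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    errors = y_true - y_pred
    return {
        "MAE": float(np.mean(np.abs(errors))),          # average absolute miss
        "RMSE": float(np.sqrt(np.mean(errors ** 2))),   # penalizes large misses more
        "MAPE": float(np.mean(np.abs(errors / y_true))),  # relative miss; undefined if y_true has zeros
    }


actual = [100.0, 200.0, 400.0]      # toy actual values
predicted = [110.0, 190.0, 380.0]   # toy forecast

metrics = point_forecast_errors(actual, predicted)
print(metrics)
```

RMSE is never smaller than MAE on the same data, and the gap between the two grows when a few forecasts miss by a lot.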

Validation score from lowest (best) to highest (worst):

It takes a long time to run AutoGluon (this run took 24 minutes). You can see the models that take a long time in the chart above: Chronos (the LLM-based model) was the biggest culprit, requiring 16 and a half minutes on its own.
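One way to shorten a run like this is to cap the training budget and skip the slowest model family. The sketch below collects the relevant `fit` arguments in a dictionary. The argument names `presets`, `time_limit`, and `excluded_model_types` are taken from the AutoGluon time series documentation as I understand it, so check them against your installed version; the helper `fit_with_budget`, the dictionary name, and the specific values are hypothetical.

```python
# Keyword arguments for TimeSeriesPredictor.fit, collected in one place.
# The values are illustrative assumptions, not recommendations.
fast_fit_kwargs = {
    "presets": "fast_training",            # lighter set of models
    "time_limit": 600,                     # total training budget in seconds
    "excluded_model_types": ["Chronos"],   # skip the slowest model family
}


def fit_with_budget(predictor, train_data, fit_kwargs=None):
    """Train an already-constructed predictor under a time budget.

    `predictor` is expected to be a TimeSeriesPredictor (or any object
    with a compatible fit method); `train_data` is its training frame.
    """
    if fit_kwargs is None:
        fit_kwargs = fast_fit_kwargs
    return predictor.fit(train_data, **fit_kwargs)
```

With the predictor and training data from earlier, the call would be `fit_with_budget(predictor, train_data)`. The trade-off is accuracy: a tighter budget and fewer model families usually mean a weaker final ensemble.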

I don’t talk about model management in most of my articles, but it is important for deploying models and using them in the real world. AutoGluon lets us save the trained model for reuse. We can use it later for testing, or we can put it into a container and use it for inference as new data comes in.

# save() takes no arguments; it writes the predictor to predictor.path
model_path = predictor.path
predictor.save()

Load the Model

Load the saved model to make predictions on new data.

from autogluon.timeseries import TimeSeriesPredictor

predictor = TimeSeriesPredictor.load(model_path)
# new_data: a TimeSeriesDataFrame holding the most recent history for each item
new_forecasts = predictor.predict(new_data)

AutoGluon is a nice library. I like the automation, but sometimes it feels like it picks really random models that I wouldn’t have picked (maybe that is a good thing). I don’t love how the ensemble works, and I prefer to use other libraries for ensembles because it just takes so long to run. If I could only use one time series library, I would not pick AutoGluon.