Turning complex ML models into simple, interpretable rules with Human Knowledge Models for actionable insights and easy inference

Modern machine learning has delivered incredible breakthroughs in many fields. These successes come from advanced models that can uncover intricate patterns in vast datasets.

But there’s one area where this approach falls short: easy interpretation. Most ML models, often referred to as “black boxes,” take in vast amounts of data and contain thousands of coefficients and weights. Instead of extracting clear, actionable insights, they leave us with results that are difficult for humans to understand or apply.

This gap highlights the need for a different approach, one that focuses on finding concise, interpretable rules rather than relying solely on complex models.

Most efforts focus on explaining “black box” models rather than directly extracting knowledge from raw data. The root of the problem lies in the “machine-first” mindset, where the priority is building optimal algorithms rather than human-like knowledge extraction. Humans rely on knowledge to design experiments and generate new data, but the reverse, converting data into actionable knowledge, is largely ignored.

The quest to turn complex data into simple, human-understandable logic has been around for decades. Starting in the 1960s, research on formal logic inspired the creation of rule-learning algorithms like CORELS, RuleFit, and Skope-Rules. These methods extract concise Boolean expressions from complex data, often using greedy optimization techniques. However, despite progress in interpretable and explainable AI, significant challenges remain.

Not long ago, a group of scientists from Russia and France proposed their own approach, Human Knowledge Models (HKM), which distill knowledge into the form of simple rules.

HKM creates simple rules that consist of basic Boolean operators (AND, OR, NOT) and thresholds. It produces no more than four rules. These rules are easy to use in different domains, especially when a human is involved.

When you might want to use this:

- When predictions will be used by field experts. For instance, a doctor might receive a model’s prediction about the probability of a patient having pneumonia. If the prediction comes from a “black box,” it becomes difficult for the doctor to adjust the results based on personal experience, leading to low trust in such predictions. A more effective approach would be to distill clear, understandable rules from patient medical histories (e.g., “If the patient’s blood pressure is above 120 and their temperature exceeds 38°C, the risk of pneumonia is high”).

- When deploying an ML model to production is unjustifiably expensive or complex, a few business rules can often be implemented more easily, even directly on the front end.

- When you have a small number of features or observations and can’t build a complex model.

HKM training

HKM training identifies:

- thresholds to simplify continuous data;

- the best subset of features;

- the optimal Boolean logic to model decision-making.

The process generates all possible Boolean functions, simplifies them into concise formulas, and evaluates their performance. Unlike traditional machine learning, which adjusts coefficients, HKMs explore different combinations of features and rules to find the most effective yet human-comprehensible models.

HKM training avoids imputing missing data, treating missingness as valuable information, and produces several top-performing models, giving users flexibility in choosing the most practical solution.
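To make the three ingredients (thresholds, feature subset, Boolean logic) concrete, here is a minimal sketch of an exhaustive search over two-condition rules. It is an illustration under simplifying assumptions, not PeaViner's actual algorithm: the helper names (`candidate_conditions`, `best_two_condition_rule`), the quantile-style threshold grid, and the toy data are all hypothetical, and formula simplification and missing-value handling are left out.

```python
import itertools
import operator
import random


def f1(y_true, y_pred):
    """Plain F1 score for two equally long lists of booleans."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0


def candidate_conditions(rows, feature_names, n_thresholds=8):
    """Yield (description, boolean column) pairs for 'x < t' and 'x >= t'."""
    for j, name in enumerate(feature_names):
        values = sorted({row[j] for row in rows})
        step = max(1, len(values) // n_thresholds)
        for t in values[step::step]:  # a coarse grid of thresholds
            below = [row[j] < t for row in rows]
            yield f"{name} < {t}", below
            yield f"{name} >= {t}", [not b for b in below]


def best_two_condition_rule(rows, y, feature_names):
    """Try every pair of conditions joined by AND / OR and keep the best F1."""
    conditions = list(candidate_conditions(rows, feature_names))
    best_score, best_rule = 0.0, None
    for (d1, c1), (d2, c2) in itertools.combinations(conditions, 2):
        for op_name, op in (("AND", operator.and_), ("OR", operator.or_)):
            pred = [op(a, b) for a, b in zip(c1, c2)]
            score = f1(y, pred)
            if score > best_score:
                best_score, best_rule = score, f"{d1} {op_name} {d2}"
    return best_score, best_rule


# Toy data (made up): the label follows a known two-condition rule
random.seed(0)
rows = [(random.randint(20, 70), random.randint(0, 5000)) for _ in range(300)]
y = [age >= 30 and seconds < 1000 for age, seconds in rows]

score, rule = best_two_condition_rule(rows, y, ["Age", "Seconds of Use"])
print(f"{rule}  (F1 = {score:.2f})")
```

Because the threshold grid is coarse, the recovered thresholds will usually be close to, but not exactly, the ones used to generate the labels.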

Where HKMs Fall Short

HKMs aren’t suited to every problem. For tasks with high branching complexity (a large number of features), their simplicity becomes a disadvantage. These scenarios require substantial memory and logic that exceed human processing capacity. Nonetheless, HKMs can play a crucial role in applied fields like healthcare, where they serve as a practical starting point for addressing obvious blind spots.
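A quick back-of-the-envelope calculation shows why the number of inputs has to stay small. The number of distinct Boolean functions over n binary conditions is 2^(2^n), so enumerating "all possible Boolean functions" explodes quickly. The snippet below only counts raw truth tables; how a particular library prunes this space is not covered here, and the function name is hypothetical.

```python
def count_boolean_functions(n_inputs: int) -> int:
    """Number of distinct truth tables over n binary inputs: 2 ** (2 ** n)."""
    return 2 ** (2 ** n_inputs)


for n in range(1, 6):
    print(f"{n} binary conditions -> {count_boolean_functions(n)} Boolean functions")
```

With four conditions there are already 65,536 candidate functions, and with five there are more than four billion.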

Another limitation lies in feature identification. Unlike deep-learning models that can automatically extract complex patterns, HKMs depend on humans to define and measure key features. That’s why feature engineering falls on the shoulders of the analyst.

As a toy example, we will use a generated churn prediction dataset.

Install the libraries:

!pip install git+https://github.com/EgorDudyrev/PeaViner
!pip install bitarray

Dataset generation:

import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.metrics import f1_score

np.random.seed(42)  # fix the seed so the dataset is reproducible

n_rows = 1500
charge_amount = np.random.normal(10, np.sqrt(2), n_rows).astype(int)
seconds_of_use = np.random.gamma(shape=2, scale=2500, size=n_rows).astype(int)
frequency_of_use = np.random.normal(20, np.sqrt(10), n_rows).astype(int)
tariff_plan = np.random.choice([1, 2], size=n_rows)
status = np.random.choice([2, 3], size=n_rows)
age = np.random.randint(20, 71, size=n_rows)
noise = np.random.uniform(0, 1, n_rows)

# Ground-truth rule; the noise term also marks about 10% of rows as churn regardless of the rule
churn = np.where(
    ((seconds_of_use <= 1000) & (age >= 30)) | (frequency_of_use < 16) | (noise < 0.1),
    1,
    0
)

df = pd.DataFrame({
    "Charge Amount": charge_amount,
    "Seconds of Use": seconds_of_use,
    "Frequency of use": frequency_of_use,
    "Tariff Plan": tariff_plan,
    "Status": status,
    "Age": age,
    "Churn": churn
})

The dataset contains some basic metrics on user characteristics and the binary target, Churn. Sample data:

Split the dataset into train and test groups:

X = df.drop(columns=['Churn'])
y = df.Churn

# Convert to plain NumPy arrays
X, y = X.values.astype(float), y.values.astype(int)
X_train, X_test, y_train, y_test = train_test_split(X, y, train_size=0.8, random_state=42)
print(f"Train size: {len(X_train)}; Test size: {len(X_test)}")

Train size: 1200; Test size: 300

Finally, we can apply the model and check the quality:

from peaviner import PeaClassifier

model = PeaClassifier()
model.fit(X_train, y_train)

# F1 on the train and test parts
model_scores = (
    f1_score(y_train, model.predict(X_train)),
    f1_score(y_test, model.predict(X_test)),
)
print(f"Train F1 score: {model_scores[0]:.2f}, Test F1 score: {model_scores[1]:.2f}")

Train F1 score: 0.78, Test F1 score: 0.77

The train and test scores are close, so the results are quite stable. The training process took 3 minutes.

Now we can check the rule found by the model:

features = [f for f in df if f != 'Churn']
model.explain(features)

(Age >= 30 AND Seconds of Use < 1010) OR Frequency of use < 16

Pretty simple and interpretable. There are just three conditions, and there is no need to run inference with the model: you just apply this simple rule to split the users. And as you can see, it’s almost identical to the theoretical rule used to generate the data.
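Applying the rule without the model can be as small as one function. The sketch below hard-codes the rule printed above; the function name `predict_churn` and the example users are hypothetical and only serve as an illustration.

```python
def predict_churn(age: float, seconds_of_use: float, frequency_of_use: float) -> bool:
    """Rule reported by the model:
    (Age >= 30 AND Seconds of Use < 1010) OR Frequency of use < 16
    """
    return (age >= 30 and seconds_of_use < 1010) or frequency_of_use < 16


# Hypothetical users, made up for illustration only
users = [
    {"age": 45, "seconds_of_use": 800, "frequency_of_use": 20},
    {"age": 25, "seconds_of_use": 800, "frequency_of_use": 20},
    {"age": 25, "seconds_of_use": 5000, "frequency_of_use": 10},
]

for user in users:
    print(user, "->", "churn" if predict_churn(**user) else "stay")
```

The same three comparisons can be ported to any environment that has no ML runtime at all.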

Now we’d like to compare the performance of the model with a couple of other popular algorithms. We’ve chosen Decision Tree and XGBoost so that we compare three models of different levels of complexity.

from sklearn.model_selection import KFold
from sklearn.tree import DecisionTreeClassifier
from xgboost import XGBClassifier
from sklearn.metrics import f1_score
import pandas as pd

kf = KFold(n_splits=5, random_state=42, shuffle=True)

def evaluate_model(model, X, y, get_leaves):
    """Return per-fold (train F1, test F1) pairs and the model size for each fold."""
    scores_cv, leaves = [], []
    for train_idx, test_idx in kf.split(X, y):
        model.fit(X[train_idx], y[train_idx])
        scores_cv.append((
            f1_score(y[train_idx], model.predict(X[train_idx])),
            f1_score(y[test_idx], model.predict(X[test_idx]))
        ))
        leaves.append(get_leaves(model))
    return scores_cv, leaves

# (name, estimator, function that counts the leaves, i.e. rules, of the fitted model)
models = [
    ("XGBoost", XGBClassifier(), lambda m: (m.get_booster().trees_to_dataframe()['Feature'] == 'Leaf').sum()),
    ("Decision Tree", DecisionTreeClassifier(), lambda m: m.get_n_leaves()),
    ("Human Knowledge Model", PeaClassifier(), lambda m: 1),
]

models_data = []
for model_name, model, get_leaves in models:
    scores_cv, leaves = evaluate_model(model, X, y, get_leaves)
    models_data.extend({
        'Model': model_name,
        'Fold': fold_id,
        'Train F1': train,
        'Test F1': test,
        'Leaves': n_leaves
    } for fold_id, ((train, test), n_leaves) in enumerate(zip(scores_cv, leaves)))

models_data = pd.DataFrame(models_data)

The results for fold 0:

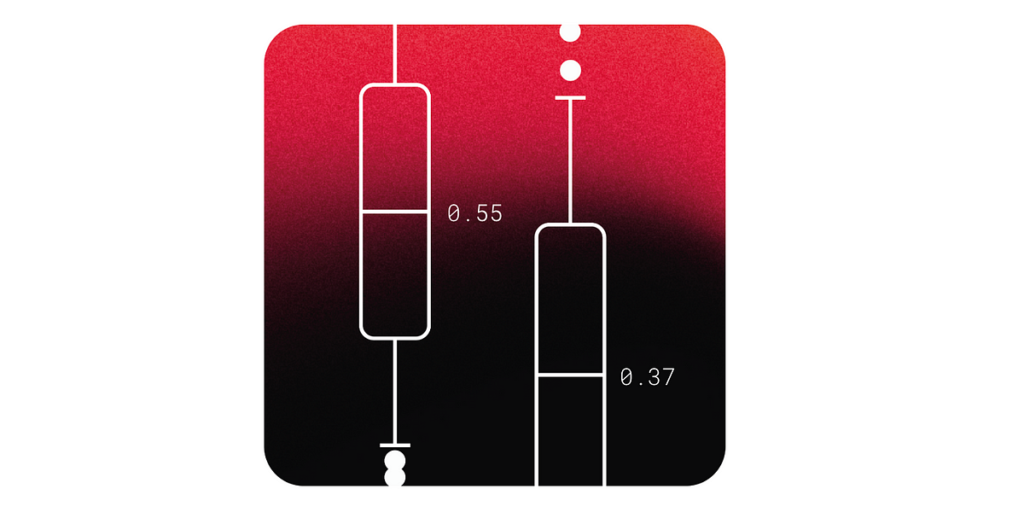

As you can see, the Human Knowledge Model used just 1 rule, the Decision Tree used 165, and XGBoost used 1647, but the quality on the Test group is comparable.

Now we want to visualize the quality results for all folds:

import matplotlib.pyplot as plt

plt.rcParams["figure.figsize"] = [10, 6]
plt.rcParams["figure.dpi"] = 100
plt.rcParams["figure.facecolor"] = "white"
plt.rcParams['font.family'] = 'monospace'
plt.rcParams['font.size'] = 10

%load_ext autoreload
%autoreload 2
%config InlineBackend.figure_format = "retina"

plt.figure(figsize=(8, 5))

for ds_part, color in zip(['Train', 'Test'], ['black', '#f95d5f']):
    y_axis = f'{ds_part} F1'
    plt.scatter(models_data[y_axis], 1/models_data['Leaves'], label=ds_part, alpha=0.3, s=200, color=color)

avgs = models_data.groupby('Model')['Leaves'].mean().sort_values()
avg_f1 = models_data.groupby('Model')['Test F1'].mean()

# Add a horizontal guide line and a label for each model
for model_name, n_leaves in avgs.items():
    plt.axhline(1/n_leaves, zorder=0, linestyle='--', color='grey', alpha=0.5, linewidth=0.6)
    plt.text(0.05, 1/n_leaves*1.1, model_name)

plt.xlabel('F1 score')
plt.ylabel('1 / Number of rules')
plt.yscale('log')
plt.ylim(top=3)  # a log axis cannot start at 0, so only the upper limit is set
plt.xlim(0, 1.05)

# Removing top and right borders
plt.gca().spines['top'].set_visible(False)
plt.gca().spines['right'].set_visible(False)

plt.show()

As you can see, the quality of HKM on the Test subset is even better than that of the more complex models. Obviously, that’s because the dataset is comparatively small and the feature dependencies aren’t that complex. In any case, the rules generated by HKM can easily be used for a personalized offer on the website. You don’t need any ML infrastructure; the rules can be included even on the front end.