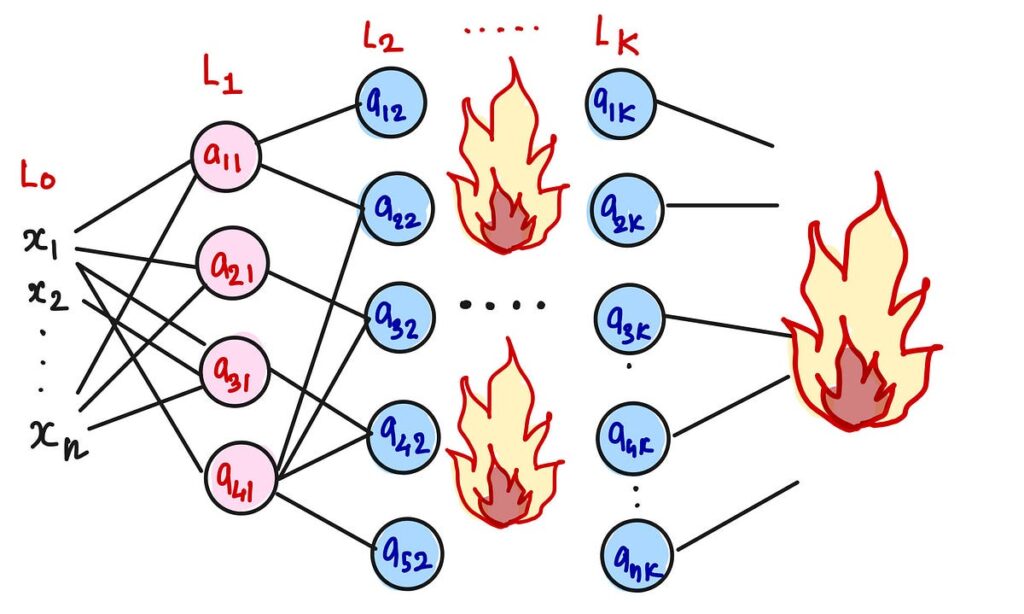

When I first started working with deep learning, I believed that adding more layers would automatically make a network better. But I quickly learned that deep networks often suffer from two big problems: vanishing gradients and exploding gradients. These problems make it hard to train deep networks effectively.

Then I discovered something surprising: the way we initialize weights plays a huge role in whether a network trains successfully. Specifically, the variance of the weights determines whether signals grow, shrink, or stay stable as they pass through the network.
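The link between weight variance and signal size can be checked on a single neuron. Assuming zero-mean weights and inputs that are all independent, with no bias and no activation (simplifying assumptions, not necessarily the setup used later in this article), a neuron computing y = Σ w_i x_i over n inputs has Var(y) = n · Var(w) · Var(x). Each layer therefore multiplies the signal variance by n · Var(w). The Monte Carlo sketch below compares that prediction with simulation; the helper name `empirical_output_variance` and the sizes are illustrative choices.

```python
import random
import statistics


def empirical_output_variance(n_inputs, weight_var, input_var=1.0, trials=5000, seed=0):
    """Monte Carlo estimate of Var(y) for one linear neuron y = sum_i w_i * x_i,
    where every w_i ~ N(0, weight_var) and x_i ~ N(0, input_var) is drawn
    independently for each trial (no bias, no activation)."""
    rng = random.Random(seed)
    w_std, x_std = weight_var ** 0.5, input_var ** 0.5
    samples = [
        sum(rng.gauss(0.0, w_std) * rng.gauss(0.0, x_std) for _ in range(n_inputs))
        for _ in range(trials)
    ]
    return statistics.pvariance(samples)


n = 50
for weight_var in (0.5 / n, 1.0 / n, 2.0 / n):
    predicted = n * weight_var * 1.0  # Var(y) = n * Var(w) * Var(x), with Var(x) = 1
    simulated = empirical_output_variance(n, weight_var)
    print(f"Var(w) = {weight_var:.3f}: predicted {predicted:.2f}, simulated {simulated:.2f}")
```

A per-layer factor of 0.5 or 2 looks harmless on its own, but stacked over many layers it compounds geometrically, which is the shrink-or-grow behavior described above.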

But why does variance matter? And how do initialization methods like Xavier and He use simple statistics to prevent these problems? Let's dive in and find out!
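For orientation, here are the standard variance targets the two schemes arrive at, summarized rather than derived. They assume zero-mean, independent weights and inputs, a layer with fan-in $n_{\text{in}}$ and fan-out $n_{\text{out}}$, and (for He) ReLU activations. Keeping the forward-pass variance constant through a linear layer requires

$$\operatorname{Var}(y) = n_{\text{in}}\,\operatorname{Var}(W)\,\operatorname{Var}(x) \quad\Rightarrow\quad \operatorname{Var}(W) = \frac{1}{n_{\text{in}}}$$

Xavier (Glorot) initialization balances this against the analogous backward-pass condition $\operatorname{Var}(W) = 1/n_{\text{out}}$. He initialization doubles the forward condition because ReLU zeroes out roughly half of the signal's second moment:

$$\operatorname{Var}(W)_{\text{Xavier}} = \frac{2}{n_{\text{in}} + n_{\text{out}}}, \qquad \operatorname{Var}(W)_{\text{He}} = \frac{2}{n_{\text{in}}}$$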

Don't have a Medium account? Use this link: https://medium.com/@r.siddhesh96/why-deep-networks-explode-or-vanish-and-how-simple-statistics-fix-them-deriving-xavier-and-he-f2b64b89e3b8?sk=682d031a65c00dad50e6399f5599bf1c

To analyze what's happening, let's start with a simple experiment in PyTorch. Imagine you're building a neural network with 100 layers, each with 512 neurons. You…
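A setup like this can be sketched roughly as follows. This is a minimal sketch under assumptions not stated in the text: purely linear layers with no activations or biases, a single standard-normal input vector, and weights drawn from a normal distribution scaled by a constant. It uses NumPy rather than PyTorch so it has no dependency beyond NumPy, and the helper name `final_activation_std` is hypothetical. Because it computes in float64, the exploding case stays finite (on the order of 1e135). In float32, PyTorch's default dtype, that case would overflow to inf/nan within the first few dozen layers.

```python
import numpy as np


def final_activation_std(weight_scale, num_layers=100, width=512, seed=0):
    """Push a standard-normal input vector through `num_layers` purely linear
    layers (no bias, no activation). Each layer's weight matrix has i.i.d.
    N(0, weight_scale**2) entries. Returns the std of the final activations."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(width)  # float64, so the extreme cases stay finite
    for _ in range(num_layers):
        w = rng.standard_normal((width, width)) * weight_scale
        x = w @ x  # one linear layer
    return float(x.std())


width = 512
for label, scale in [("N(0, 1)", 1.0),
                     ("N(0, 0.01^2)", 0.01),
                     ("N(0, 1/512)", 1.0 / np.sqrt(width))]:
    print(f"{label:>14}: std after 100 layers = {final_activation_std(scale, width=width):.3e}")
```

Each layer multiplies the activation std by roughly sqrt(width) × weight_scale. That is about 22.6 for the first case (explodes), about 0.23 for the second (vanishes), and about 1 for the third, which is why only the 1/sqrt(512) scaling keeps the signal near its original size.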