The concept of “agents” in AI systems can vary depending on the context. Some define agents as fully autonomous systems that operate independently over extended periods, using various tools to perform complex tasks. Others describe them as systems with more prescriptive implementations that follow predefined workflows.

At Anthropic, all such variations are categorized as agentic systems, with a clear distinction between workflows and agents:

- Workflows: Systems where large language models (LLMs) and tools are orchestrated through predefined code paths.

- Agents: Systems where LLMs dynamically direct their own processes and tool usage, maintaining control over how tasks are executed.

In this article, we’ll explore both workflows and agents in detail and discuss their practical applications.

When (and When Not) to Use Agents?

When developing applications with LLMs, simplicity should always be the starting point. Only increase complexity if absolutely necessary. Agentic systems often trade latency and cost for better task performance, so it’s important to assess when this tradeoff makes sense.

- Workflows: Offer predictability and consistency, making them ideal for well-defined tasks.

- Agents: Provide flexibility and are best suited for tasks requiring dynamic, model-driven decision-making at scale.

For many applications, optimizing single LLM calls with retrieval and in-context examples is sufficient, and agentic systems may not be necessary.
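As a rough illustration of the single-call option, the Python sketch below assembles one prompt from retrieved passages and a few in-context examples. The function name `build_prompt`, the `retrieve` callable, and the prompt wording are assumptions made for this example, not part of any particular library.

```python
from typing import Callable, List, Tuple


def build_prompt(
    question: str,
    examples: List[Tuple[str, str]],
    retrieve: Callable[[str], List[str]],  # hypothetical retriever: query -> passages
    k: int = 2,
) -> str:
    """Assemble a single prompt from retrieved context and worked examples."""
    passages = retrieve(question)[:k]  # keep only the top-k passages
    parts = ["Use the context to answer the question."]
    parts += [f"Context: {p}" for p in passages]
    parts += [f"Q: {q}\nA: {a}" for q, a in examples]  # in-context examples
    parts.append(f"Q: {question}\nA:")  # the model completes this final answer
    return "\n\n".join(parts)
```

The resulting string would be sent to the model in one call, with no orchestration code around it.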

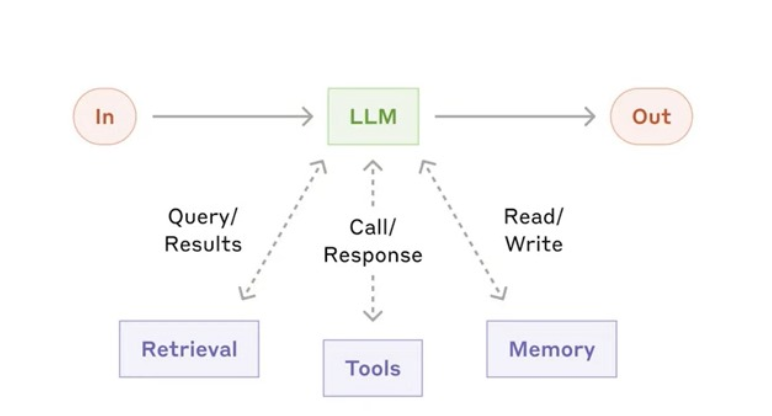

Building Block: The Augmented LLM

The foundation of agentic systems is an LLM augmented with capabilities like retrieval, tools, and memory. Current LLMs can actively use these augmentations to:

- Generate search queries.

- Select and use appropriate tools.

- Determine and retain relevant information.

This augmented design is what allows workflows and agents to function effectively.
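To make the three augmentations concrete, here is a minimal Python sketch. It assumes the model is available as a plain callable (`LLM`, a hypothetical stand-in for a real API call); the class name, prompt wording, and the choice to store every exchange in memory are illustrative simplifications.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

LLM = Callable[[str], str]  # hypothetical stand-in for a real model call


@dataclass
class AugmentedLLM:
    llm: LLM
    retrieve: Callable[[str], List[str]]             # retrieval: query -> documents
    tools: Dict[str, Callable[[str], str]]           # tools: name -> function
    memory: List[str] = field(default_factory=list)  # memory: retained notes

    def answer(self, question: str) -> str:
        # 1. The model writes its own search query.
        query = self.llm(f"Write a search query for: {question}").strip()
        docs = self.retrieve(query)

        # 2. The model picks a tool by name; any other reply means no tool.
        names = ", ".join(sorted(self.tools))
        choice = self.llm(f"Pick one tool from [{names}] or 'none' for: {question}").strip()
        tool_output = self.tools[choice](question) if choice in self.tools else ""

        # 3. Answer using retrieved documents, tool output, and earlier notes.
        context = "\n".join(docs + [tool_output] + self.memory)
        reply = self.llm(f"Context:\n{context}\n\nQuestion: {question}")

        # 4. Simplification: retain every exchange instead of asking the
        #    model which information is worth keeping.
        self.memory.append(f"Q: {question} -> A: {reply}")
        return reply
```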

Prompt chaining breaks a task down into sequential steps. Each LLM call processes the output of the previous one.

- Programmatic checks (gates) can be added at intermediate steps to ensure the workflow stays on track.
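A sequence of dependent calls with optional gates might look like the sketch below. The `LLM` callable is a hypothetical stand-in for a real model call, and `run_chain`, the `{input}` placeholder, and the gate signature are names chosen for this example.

```python
from typing import Callable, List, Optional

LLM = Callable[[str], str]    # hypothetical stand-in for a real model call
Gate = Callable[[str], bool]  # programmatic check on an intermediate output


def run_chain(
    llm: LLM,
    steps: List[str],
    task: str,
    gates: Optional[List[Optional[Gate]]] = None,
) -> str:
    """Run prompt templates in order; each step receives the previous output."""
    gates = gates if gates is not None else [None] * len(steps)
    if len(gates) != len(steps):
        raise ValueError("Provide one gate (or None) per step")
    current = task
    for i, (template, gate) in enumerate(zip(steps, gates), start=1):
        # Each template contains an {input} placeholder for the prior output.
        current = llm(template.format(input=current))
        # Stop early if the intermediate result fails its check.
        if gate is not None and not gate(current):
            raise ValueError(f"Gate failed after step {i}: {current!r}")
    return current
```

For instance, `steps` could be an outline prompt followed by a drafting prompt, with a gate after the first step that checks the outline has at least three items.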

Routing classifies inputs and directs them to specialized follow-up tasks.

- This approach ensures separation of concerns and allows for more specialized prompts.

- It avoids the situation where optimizing for one kind of input degrades performance on others.
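A minimal routing sketch in Python follows. It assumes the model is a plain callable (`LLM`, hypothetical) and that each category maps to a prompt template with an `{input}` placeholder; the classification prompt wording and the fallback behavior are choices made for this example.

```python
from typing import Callable, Dict

LLM = Callable[[str], str]  # hypothetical stand-in for a real model call


def route(llm: LLM, handlers: Dict[str, str], user_input: str, default: str) -> str:
    """Classify the input, then answer it with the matching specialized prompt."""
    labels = ", ".join(sorted(handlers))
    label = llm(
        f"Classify the request as one of [{labels}]. "
        f"Reply with the label only.\n\n{user_input}"
    ).strip().lower()
    # Fall back to the default handler if the model returns an unknown label.
    template = handlers.get(label, handlers[default])
    return llm(template.format(input=user_input))
```

Because each handler has its own prompt, one category can be tuned without touching the others.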

Parallelization allows multiple tasks to run simultaneously, with outputs aggregated programmatically. It includes two main approaches:

- Sectioning: Dividing tasks into independent subtasks that run in parallel.

- Voting: Running the same task multiple times to generate diverse outputs.
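Both variants can be sketched with Python's standard thread pool. The `LLM` callable is a hypothetical stand-in for a real model call, and majority vote is only one possible way to aggregate repeated outputs; other aggregation rules would fit the same structure.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

LLM = Callable[[str], str]  # hypothetical stand-in for a real model call


def sectioning(llm: LLM, subtasks: List[str]) -> List[str]:
    """Run independent subtasks in parallel; results keep the input order."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(llm, subtasks))


def voting(llm: LLM, prompt: str, n: int = 5) -> str:
    """Run the same prompt n times and return the most common answer."""
    if n < 1:
        raise ValueError("n must be at least 1")
    with ThreadPoolExecutor() as pool:
        answers = list(pool.map(llm, [prompt] * n))
    # Aggregate programmatically: here, a simple majority vote.
    return Counter(a.strip() for a in answers).most_common(1)[0][0]
```

Threads suit this case because model calls are typically network-bound, so the calls overlap while waiting on responses.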

In the orchestrator-workers workflow, a central LLM dynamically breaks down tasks, delegates them to worker LLMs, and synthesizes their results into a coherent output.
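The delegation pattern described above can be sketched as follows. The `LLM` callables are hypothetical stand-ins for real model calls, and asking the central model for a JSON list of subtasks is an assumption made to keep the example parseable; a real system would need to handle malformed replies.

```python
import json
from typing import Callable, List

LLM = Callable[[str], str]  # hypothetical stand-in for a real model call


def orchestrate(orchestrator: LLM, worker: LLM, task: str) -> str:
    # 1. The central model decides the subtasks at run time; they are not
    #    fixed in code, which is what separates this from plain sectioning.
    plan = orchestrator(
        "Break this task into subtasks. "
        f"Reply with a JSON list of strings.\n\nTask: {task}"
    )
    subtasks: List[str] = json.loads(plan)

    # 2. Each subtask is delegated to a worker model.
    results = [worker(f"Overall task: {task}\nSubtask: {s}") for s in subtasks]

    # 3. The central model synthesizes the workers' results.
    joined = "\n".join(f"- {s}: {r}" for s, r in zip(subtasks, results))
    return orchestrator(f"Combine these results into one answer for '{task}':\n{joined}")
```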

In the evaluator-optimizer workflow, one LLM generates a response while another evaluates it and provides feedback in a loop. This iterative process helps refine results for higher accuracy.
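A generate-and-evaluate loop of this kind might be sketched as below. The `LLM` callables are hypothetical stand-ins for real model calls, and the `PASS` convention and the `max_rounds` cap are assumptions introduced so the loop has a clear stopping rule.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical stand-in for a real model call


def refine(generator: LLM, evaluator: LLM, task: str, max_rounds: int = 3) -> str:
    """Alternate between generating a draft and evaluating it."""
    draft = generator(task)
    for _ in range(max_rounds):
        feedback = evaluator(
            f"Task: {task}\nDraft: {draft}\n"
            "Reply PASS if the draft is acceptable; otherwise explain what to fix."
        )
        # Stop as soon as the evaluator accepts the draft.
        if feedback.strip().upper().startswith("PASS"):
            break
        # Otherwise feed the critique back to the generator.
        draft = generator(
            f"Task: {task}\nPrevious draft: {draft}\n"
            f"Feedback: {feedback}\nRevise the draft."
        )
    return draft
```

Capping the number of rounds bounds latency and cost when the evaluator never accepts a draft.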

Agents: Simplifying Complex Tasks

Agents can handle sophisticated challenges but are often simple in implementation. Essentially, they’re LLMs that use tools in response to environmental feedback within a loop.

- Design toolsets thoughtfully with clear documentation.

- Ensure tools are user-friendly and align with the system’s goals.
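The loop at the heart of an agent can be sketched in a few lines of Python. This is a simplified illustration: the `LLM` callable is a hypothetical stand-in for a real model call, and the JSON action format, the `run_agent` name, and the step limit are assumptions made for the example rather than any vendor's tool-use API.

```python
import json
from typing import Callable, Dict

LLM = Callable[[str], str]   # hypothetical stand-in for a real model call
Tool = Callable[[str], str]  # a tool takes text input and returns an observation

INSTRUCTIONS = (
    'Reply with JSON only: {"tool": "<name>", "input": "<text>"} to act, '
    'or {"final": "<answer>"} to finish.'
)


def run_agent(llm: LLM, tools: Dict[str, Tool], goal: str, max_steps: int = 10) -> str:
    transcript = f"Goal: {goal}"
    for _ in range(max_steps):
        # The model, not the code, decides the next action.
        reply = llm(f"{transcript}\nTools: {', '.join(sorted(tools))}\n{INSTRUCTIONS}")
        action = json.loads(reply)
        if "final" in action:
            return action["final"]
        name = action.get("tool")
        if name in tools:
            observation = tools[name](action.get("input", ""))
        else:
            # Report the mistake back so the model can correct itself.
            observation = f"error: unknown tool {name!r}"
        # Environmental feedback goes back into the next prompt.
        transcript += f"\nAction: {reply}\nObservation: {observation}"
    raise RuntimeError("Agent stopped: step limit reached")
```

The step limit is a simple guard against runaway loops, and returning tool errors as observations gives the model a chance to recover.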


Agentic systems, encompassing workflows and agents, provide a powerful framework for using LLMs in applications. While workflows excel in predictability and predefined processes, agents shine in dynamic, flexible decision-making. By understanding when and how to use these systems, businesses can optimize AI applications for both performance and efficiency.