In recent years, we have witnessed remarkable progress in Generative AI, particularly in the development of Large Language Models (LLMs). It would not be an exaggeration to say that much of this progress can be attributed to the transformer architecture. This is evident from the fact that all major LLMs, including ChatGPT, LLaMA, BERT, and others, are built on transformers.

One of the key innovations introduced by the transformer architecture is the concept of self-attention. This mechanism changed how models process and understand relationships within data by allowing them to weigh the importance of each input element in relation to the others. Self-attention not only improved the ability to capture contextual dependencies across long sequences but also contributed significantly to the scalability and efficiency of modern AI systems.

Self-attention addresses the challenge of understanding the contextual meaning of words in a sentence, a paragraph, or an entire text. For instance, consider the sentence, “I like Apple.” It is ambiguous whether “Apple” refers to the fruit or the company. Similarly, the word “bank” can mean a financial institution, as in “money bank,” or the side of a river, as in “river bank.”

A more complex example would be, “I’ve used Samsung, but I like Apple.” In this case, a Large Language Model (LLM) must consider the entire context to determine that “Apple” likely refers to the company, not the fruit. Achieving such nuanced understanding is far from trivial.

Data scientists and engineers had long been trying to solve this problem using architectures like Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks. However, these methods had limitations, particularly in handling long-range dependencies and in scaling. The use of self-attention in the transformer architecture revolutionized this field. Self-attention enables models to weigh the relevance of every word in relation to the others, allowing them to capture contextual meanings more effectively and efficiently. This breakthrough has been a cornerstone of advances in modern natural language processing.

Machines do not inherently understand human languages; they operate on binary data and, by extension, numbers. All machine learning algorithms rely on numerical data to perform calculations and generate results. When processing text, such as an article with 10,000 unique words, the simplest way to represent these words is to assign each one a unique number and represent the entire article as a sequence of these numbers. However, this approach is highly simplistic and fails to capture the nuanced relationships and meanings between words.
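As a minimal sketch of this naive numbering scheme, the snippet below assigns each distinct word an integer ID and encodes a sentence as a list of IDs. The helper names `build_vocab` and `encode`, the sample sentence, and the whitespace tokenization are assumptions made for illustration.

```python
def build_vocab(tokens):
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def encode(tokens, vocab):
    """Replace each token with its integer ID."""
    return [vocab[tok] for tok in tokens]


text = "money bank grows and river bank flows"
tokens = text.split()  # naive whitespace tokenization, for illustration only
vocab = build_vocab(tokens)
ids = encode(tokens, vocab)

print(vocab)
print(ids)  # [0, 1, 2, 3, 4, 1, 5]
```

The IDs are arbitrary: the fact that “bank” (1) sits next to “money” (0) says nothing about meaning, which is exactly the limitation described above.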

In the context of Large Language Models (LLMs), the requirements are even more complex. Beyond simply identifying individual words, LLMs must recognize relationships between words, understand similarities, and identify contextual associations. For example, the words “king” and “queen” are related, as are “apple” (the fruit) and “orange.” Representing these relationships numerically in a meaningful way is crucial for tasks like semantic understanding and contextual prediction.

This is where word embeddings come into play. Word embeddings are a technique for representing words as numerical vectors in a multi-dimensional space, where similar words are placed closer together. These embeddings capture semantic relationships, syntactic properties, and contextual similarities, enabling machines to process language more effectively.
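To make "closer together" concrete, here is a small sketch that compares toy vectors with cosine similarity, one common way to measure closeness between embeddings. The three-dimensional vectors are hand-picked and hypothetical; real embeddings are learned from data and typically have hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, invented for this example
toy_embeddings = {
    "king":   [0.9, 0.8, 0.1],
    "queen":  [0.8, 0.9, 0.1],
    "orange": [0.1, 0.2, 0.9],
}

sim_royal = cosine_similarity(toy_embeddings["king"], toy_embeddings["queen"])
sim_mixed = cosine_similarity(toy_embeddings["king"], toy_embeddings["orange"])

print(round(sim_royal, 3), round(sim_mixed, 3))
```

With these made-up values, “king” and “queen” score much higher than “king” and “orange”, mirroring the kind of relationship an embedding model is meant to learn.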

Several popular algorithms and techniques are used to generate word embeddings, such as:

- Word2Vec: Introduced by Google, this technique uses neural networks to map words into a vector space based on their contextual usage in a corpus.

- GloVe (Global Vectors for Word Representation): Developed at Stanford, GloVe creates embeddings by analyzing global word co-occurrence statistics in a corpus.

- FastText: An extension of Word2Vec by Facebook, FastText includes subword information, enabling it to handle out-of-vocabulary words better.

- ELMo (Embeddings from Language Models): Generates context-dependent embeddings, where the representation of a word changes based on its surrounding context in a sentence.

- Transformer-Based Embeddings: Modern approaches, such as BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer), generate highly contextual embeddings using transformer architectures.

Word embeddings form the foundation of many natural language processing tasks, enabling models to understand and process human language with greater depth and accuracy. They bridge the gap between raw text data and the numerical representations that machines can interpret and compute.

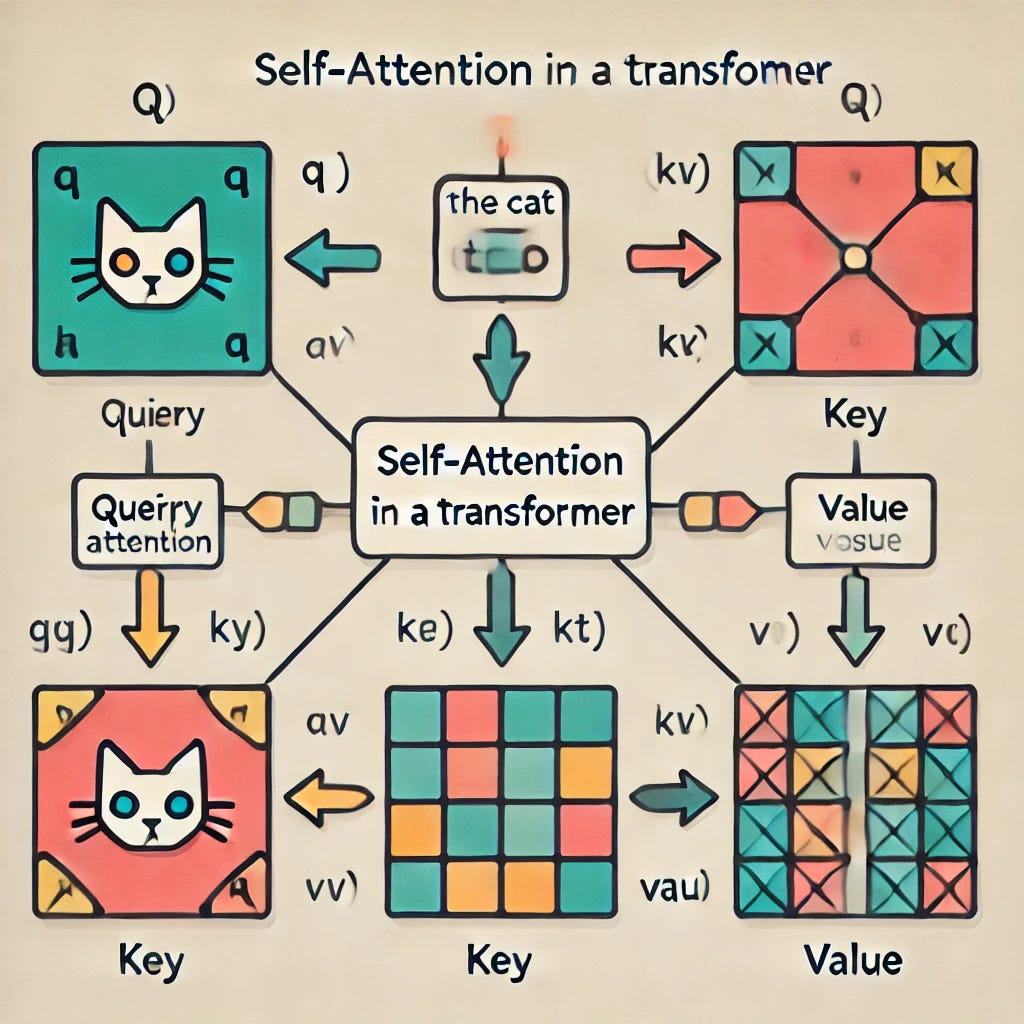

Self-attention is a key mechanism in modern neural networks that transforms static word embeddings into contextual word embeddings, enabling models to capture the meaning of words in their specific context.

To understand this, let’s revisit the concept of word embeddings. Suppose we have created embeddings for all known words in a vocabulary. While these embeddings effectively capture relationships between words, they remain static. This means that a word like “bank” will have the same embedding regardless of whether it appears in “money bank grows” or “river bank flows.” Static embeddings fail to account for the fact that the meaning of a word depends on its surrounding context.
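The limitation can be seen in a few lines. In this sketch, a static embedding table is just a dictionary lookup, so “bank” receives the identical vector in both sentences. The table values and the `embed` helper are hypothetical.

```python
# Hypothetical static embedding table (values invented for illustration)
static_embeddings = {
    "money": [1.0, 0.0],
    "river": [0.0, 1.0],
    "bank":  [0.6, 0.6],
    "grows": [0.3, 0.1],
    "flows": [0.1, 0.3],
}


def embed(sentence):
    """Look up one fixed vector per word; context is ignored entirely."""
    return [static_embeddings[word] for word in sentence.split()]


finance = embed("money bank grows")
nature = embed("river bank flows")

same_vector = finance[1] == nature[1]
print(same_vector)  # True
```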

Capturing Relationships Between Words

In a sentence, we calculate the relationship or dependency of each word with every other word. For instance, in the phrase “money bank grows,” we compute how strongly “bank” is influenced by the other words:

- “bank” has a 0.25 dependency on “money” (indicating some relevance due to their association in financial contexts).

- “bank” has a 0.7 dependency on itself (indicating self-relevance, as a word typically retains its core meaning).

- “bank” has a 0.05 dependency on “grows” (indicating minimal relevance to the word’s primary meaning).

When we discuss relationships like “bank” having a dependency on “money” or “grows,” it is important to note that the words involved are not handled as English text but as numerical vectors, that is, embeddings. Each word is mapped to a multi-dimensional vector, and these embeddings are the foundation for calculating contextual dependencies.

Calculating Weights: Dot Product and Softmax

To calculate the weight or importance of one word relative to another in a sentence, the dot product is used. Here’s how the process works:

Dot Product for Similarity

The dot product of two word embeddings measures their similarity or relevance. For example, the dot product between the embeddings of “bank” and “money” will yield a value that reflects how closely related these words are in the embedding space.
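A short sketch of this scoring step follows. The embeddings are hypothetical and hand-picked so that the scores for “bank” come out to round numbers; the `dot` helper is written out in plain Python rather than taken from a library.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


# Hypothetical 3-dimensional embeddings (values invented for this example)
embeddings = {
    "money": [0.2, 0.6, 0.0],
    "bank":  [1.0, 0.5, 0.5],
    "grows": [0.1, 0.1, 0.1],
}

sentence = ["money", "bank", "grows"]
query = embeddings["bank"]

# Score "bank" against every word in the sentence, including itself
scores = [dot(query, embeddings[word]) for word in sentence]
print([round(s, 2) for s in scores])  # [0.5, 1.5, 0.2]
```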

Normalization with Softmax

The raw dot product values are not directly usable as weights, since they are unbounded and need to be normalized. To achieve this:

- The dot product values are passed through a softmax function.

- Softmax ensures that:

  - All weights fall within the range of 0 to 1.

  - The weights for a word’s dependencies sum to 1. This makes the weights interpretable as probabilities.

For example, for the word “bank” in “money bank grows”:

- The similarity scores (dot products) might initially be: [0.5 (“money”), 1.5 (“bank”), 0.2 (“grows”)].

- After applying softmax, these values normalize to approximately [0.22, 0.61, 0.17]. The weights 0.25, 0.7, and 0.05 quoted earlier are rounder illustrative figures: they keep the same ranking but are not the exact softmax of these scores.
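The normalization step can be sketched in plain Python as below. Subtracting the maximum score before exponentiating is a standard numerical-stability trick and does not change the result; the function name `softmax` and the printed rounding are choices made for this example.

```python
import math


def softmax(scores):
    """Turn arbitrary real-valued scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max so exp() cannot overflow
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Scores for "bank" against "money", "bank", "grows"
weights = softmax([0.5, 1.5, 0.2])
print([round(w, 2) for w in weights])  # [0.22, 0.61, 0.17]
```

The exact output differs from round illustrative weights such as 0.25, 0.7, and 0.05, but the ranking is the same: “bank” attends mostly to itself, then to “money”, and least to “grows”.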

Weighted Embeddings

The softmax output weights are then multiplied by the corresponding word embeddings, and the weighted embeddings are summed. This step adjusts the original embedding to incorporate contextual information, producing a new, contextually aware representation of “bank,” influenced by the words “money” and “grows” in the sentence.
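The weighting step can be sketched as a weighted sum of vectors. The weights are the article's illustrative values for “bank”; the embeddings and the `weighted_sum` helper are hypothetical.

```python
def weighted_sum(weights, vectors):
    """Scale each vector by its weight and add the results component-wise."""
    dim = len(vectors[0])
    return [sum(w * vec[i] for w, vec in zip(weights, vectors)) for i in range(dim)]


# Hypothetical embeddings (invented values)
vectors = [
    [0.2, 0.6, 0.0],  # money
    [1.0, 0.5, 0.5],  # bank
    [0.1, 0.1, 0.1],  # grows
]
weights = [0.25, 0.7, 0.05]  # illustrative attention weights for "bank"

contextual_bank = weighted_sum(weights, vectors)
print([round(x, 3) for x in contextual_bank])  # [0.755, 0.505, 0.355]
```

The result stays close to the original “bank” vector, because its self-weight dominates, but it is shifted toward “money” and, very slightly, toward “grows”.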

A complete view of the calculations can be seen here.
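For readers who prefer code, the three steps (dot-product scores, softmax, weighted sum) can be combined into one rough end-to-end sketch. This is a simplified illustration of the procedure described in this section, not the linked calculation: the embeddings are hypothetical, and production transformers additionally use learned projections and score scaling, which are omitted here.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Return one context-aware vector per input vector (no learned parameters)."""
    outputs = []
    for query in vectors:
        # Step 1 and 2: score the query against every word, then normalize
        weights = softmax([dot(query, other) for other in vectors])
        # Step 3: weighted sum of all word vectors
        outputs.append([
            sum(w * vec[i] for w, vec in zip(weights, vectors))
            for i in range(len(query))
        ])
    return outputs


# Hypothetical 2-dimensional static embeddings (invented values)
static = {
    "money": [1.0, 0.0], "river": [0.0, 1.0], "bank": [0.6, 0.6],
    "grows": [0.3, 0.1], "flows": [0.1, 0.3],
}


def contextualize(sentence):
    return self_attention([static[word] for word in sentence.split()])


bank_in_finance = contextualize("money bank grows")[1]
bank_in_nature = contextualize("river bank flows")[1]
print(bank_in_finance)
print(bank_in_nature)
```

Although “bank” starts from the same static vector in both sentences, its contextual vector leans toward the first dimension next to “money” and toward the second next to “river”.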