Machine learning is the science (and art) of programming computers so that they can learn from data.

Traditional Approach vs ML Approach

- Problems for which existing solutions require a lot of fine-tuning or long lists of rules: a machine learning model can often simplify the code and perform better.

- Complex problems for which a traditional approach yields no good solution: machine learning techniques can perhaps find one.

- Fluctuating environments: a machine learning system can easily be retrained on new data, always keeping it up to date.

- ML can help you gain insights into complex problems and large amounts of data.

Examples:

- Traditional spam vs ham filter.

- Analyzing images of products on a production line to automatically classify them, typically done using convolutional neural networks (CNNs).

- Detecting tumors in brain scans. This is a semantic image segmentation task, where each pixel in the image is classified to determine the exact location and shape of tumors, typically using CNNs or transformers.

- Creating a chatbot or a personal assistant, including natural language understanding (NLU) and question-answering modules.

Types of Machine Learning Systems

- Supervised, unsupervised, semi-supervised, self-supervised, and reinforcement learning.

- Online vs batch learning.

- Instance-based vs model-based learning.

Supervised learning

- The training set you feed to the algorithm includes the desired solutions, called targets or labels.

- Classification is a typical supervised task. E.g., a spam filter.

- Regression falls in the same category, except that we predict a numeric target value. E.g., the price of a car.

Unsupervised learning

- The training data is unlabeled. The system tries to learn without a teacher.

- Dimensionality reduction simplifies data by merging strongly correlated features. This is feature extraction: for example, combining a car’s mileage and age into a single feature that represents its wear and tear.

- Example: in a visualization that groups unlabeled images by similarity, animals are rather well separated from vehicles, and horses are close to deer but far from birds.

Semi-supervised learning

- Since labeling data is usually time-consuming and costly, you’ll often have plenty of unlabeled instances and few labeled instances.

- Some algorithms can deal with data that’s partially labeled. This is called semi-supervised learning.

- Semi-supervised learning with two classes (triangles and squares): the unlabeled examples (circles) help classify a new instance (the cross) into the triangle class rather than the square class, even though it’s closer to the labeled squares.

- Some photo-hosting services, such as Google Photos, are good examples of this. Once you upload all your family photos to the service, it automatically recognizes that the same person appears in several photos.

- Most semi-supervised learning algorithms are combinations of unsupervised and supervised algorithms.

- For example, a clustering algorithm may be used to group similar instances together, and then every unlabeled instance can be labeled with the most common label in its cluster. Once the whole dataset is labeled, it’s possible to use any supervised learning algorithm.
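
The cluster-then-label idea above can be sketched in a few lines of plain Python. This is only an illustration under assumptions: the points, the starting centroids, and the helper names (`kmeans`, `propagate_labels`) are made up for the example, and the tiny k-means loop stands in for whatever clustering algorithm you would really use.

```python
from collections import Counter

def assign_clusters(points, centroids):
    """Return, for each point, the index of its nearest centroid."""
    def sq_dist(p, q):
        return sum((a - b) ** 2 for a, b in zip(p, q))
    return [min(range(len(centroids)), key=lambda k: sq_dist(p, centroids[k]))
            for p in points]

def kmeans(points, centroids, n_iters=10):
    """Very small k-means: alternate cluster assignment and centroid update."""
    for _ in range(n_iters):
        clusters = assign_clusters(points, centroids)
        new_centroids = []
        for k in range(len(centroids)):
            members = [p for p, c in zip(points, clusters) if c == k]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[k])  # keep the centroid of an empty cluster
        centroids = new_centroids
    return assign_clusters(points, centroids)

def propagate_labels(clusters, labels):
    """Give every unlabeled instance (None) the most common label in its cluster."""
    majority = {}
    for k in set(clusters):
        known = [lab for c, lab in zip(clusters, labels) if c == k and lab is not None]
        if known:
            majority[k] = Counter(known).most_common(1)[0][0]
    return [lab if lab is not None else majority.get(c)
            for c, lab in zip(clusters, labels)]

# Hypothetical data: six points, only two of them labeled.
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
labels = ["triangle", None, None, "square", None, None]

clusters = kmeans(points, centroids=[points[0], points[3]])
full_labels = propagate_labels(clusters, labels)
print(full_labels)
```

Once `full_labels` has no `None` entries left, the dataset can be handed to any supervised learner.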

Self-supervised learning

- Another approach to machine learning involves actually generating a fully labeled dataset from a fully unlabeled one.

- Again, once the whole dataset is labeled, any supervised learning algorithm can be used. This approach is called self-supervised learning.

- For example, if you have a large dataset of unlabeled images, you can randomly mask a small part of each image and then train a model to recover the original image.

- During training, the masked images are used as the inputs to the model, and the original images are used as the labels.
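
Here is a minimal sketch of how such (input, label) pairs could be built, assuming images are plain nested lists of pixel values. The function name `make_masked_pairs`, the square mask, and the toy 4x4 images are assumptions made for the example; training the model itself is left out.

```python
import random

def make_masked_pairs(images, mask_size=2, mask_value=0, seed=0):
    """Build (masked_image, original_image) training pairs from unlabeled images."""
    rng = random.Random(seed)
    pairs = []
    for image in images:
        height, width = len(image), len(image[0])
        # Pick a random top-left corner for a mask_size x mask_size square.
        top = rng.randrange(height - mask_size + 1)
        left = rng.randrange(width - mask_size + 1)
        masked = [row[:] for row in image]  # copy so the original stays intact
        for r in range(top, top + mask_size):
            for c in range(left, left + mask_size):
                masked[r][c] = mask_value
        pairs.append((masked, image))       # input = masked image, label = original
    return pairs

# Three toy 4x4 "images" with pixel values 1..16 (no zeros, so masked pixels stand out).
images = [[[r * 4 + c + 1 for c in range(4)] for r in range(4)] for _ in range(3)]
pairs = make_masked_pairs(images)

masked, original = pairs[0]
for row in masked:
    print(row)
```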

Reinforcement learning

- The learning system, called an agent in this context, can observe the environment, select and perform actions, and get rewards in return (or penalties in the form of negative rewards).

- It must then learn by itself what the best strategy, called a policy, is to get the most reward over time.

- A policy defines what action the agent should choose when it is in a given situation.
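
To make the agent, reward, and policy vocabulary concrete, here is a small sketch that uses tabular Q-learning. The notes above do not name a specific algorithm, so Q-learning is an assumption here, as are the toy corridor environment, the reward values, and the hyperparameters.

```python
import random

N_STATES = 5                          # states 0..4 along a corridor; state 4 is the goal
ACTIONS = {"left": -1, "right": +1}

def step(state, action):
    """Environment: move along the corridor, return (next_state, reward, done)."""
    next_state = min(max(state + ACTIONS[action], 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True   # reward for reaching the goal
    return next_state, -0.01, False    # small penalty (negative reward) per move

def train(n_episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values by trial and error (tabular Q-learning)."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(n_episodes):
        state = 0
        for _ in range(100):           # cap the episode length
            if rng.random() < epsilon:
                action = rng.choice(list(ACTIONS))                   # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])   # exploit
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
            if done:
                break
    return q

q_values = train()
# The policy maps each non-terminal state to the action with the highest learned value.
policy = [max(ACTIONS, key=lambda a: q_values[(s, a)]) for s in range(N_STATES - 1)]
print(policy)
```

The learned `policy` is just a lookup from situation (state) to action, which is exactly the definition given above.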

Batch vs Online Learning

Batch learning

- The system is incapable of learning incrementally: it must be trained using all the available data.

- This will generally take a lot of time and computing resources, so it’s typically done offline.

- First the system is trained, and then it’s launched into production and runs without learning anymore; it just applies what it has learned. This is called offline learning.

- A model’s performance tends to decay over time as the world keeps changing. If the model classifies pictures of cats and dogs, its performance will decay very slowly, but if the model deals with fast-evolving systems, for example making predictions on the financial market, then it’s likely to decay quite fast.

Online learning

- Train the system incrementally by feeding it data instances sequentially, either individually or in small groups called mini-batches.

- Each learning step is fast and cheap, so the system can learn about new data on the fly.

- Online learning is useful for systems that need to adapt to change extremely rapidly (e.g., to detect new patterns in the stock market).

One important parameter of online learning systems is how fast they should adapt to changing data: this is called the learning rate.

- If you set a high learning rate, then your system will rapidly adapt to new data, but it will also tend to quickly forget the old data.

- Conversely, if you set a low learning rate, the system will have more inertia; that is, it will learn more slowly, but it will also be less sensitive to noise in the new data and to outliers.

- You need to monitor the system (including its inputs and outputs) closely and promptly switch learning off if a drop in performance is detected.
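
The effect of the learning rate can be seen with the simplest possible online learner: a model with a single parameter that is nudged toward each new value as it arrives (a stochastic gradient step on the squared error). The data stream and the two learning rates below are made up for illustration.

```python
def online_fit(stream, learning_rate, estimate=0.0):
    """Update a one-parameter model one instance at a time."""
    history = []
    for x in stream:
        error = x - estimate
        estimate += learning_rate * error   # one cheap learning step per instance
        history.append(estimate)
    return history

stream = [10.0] * 20 + [100.0]   # steady data followed by a single outlier

fast = online_fit(stream, learning_rate=0.9)   # adapts quickly, forgets quickly
slow = online_fit(stream, learning_rate=0.1)   # more inertia, less sensitive to the outlier

print("after 3 instances:", round(fast[2], 2), "vs", round(slow[2], 2))
print("after the outlier:", round(fast[-1], 2), "vs", round(slow[-1], 2))
```

With the high rate the estimate locks onto 10 almost immediately but then jumps most of the way to the outlier; with the low rate it is still catching up after several instances but barely moves when the outlier arrives.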

Instance-Based vs Model-Based Learning

One more way to categorize machine learning systems is by how they generalize to new, unseen data.

Instance-based learning

- The system learns the examples by heart, then generalizes to new cases by using a similarity measure to compare them to the learned examples.

- E.g., a new instance would be classified as a triangle because the majority of the most similar instances belong to that class.
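
A k-nearest-neighbors classifier is a common example of this idea. The sketch below assumes Euclidean distance as the similarity measure and uses a made-up training set; `knn_predict` is a hypothetical helper name.

```python
import math
from collections import Counter

def knn_predict(training_set, new_instance, k=3):
    """Classify new_instance by majority vote among its k most similar examples."""
    # "Learning by heart": the training set is simply stored and searched.
    neighbors = sorted(training_set,
                       key=lambda item: math.dist(item[0], new_instance))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]

training_set = [
    ((1.0, 1.0), "triangle"),
    ((1.5, 2.0), "triangle"),
    ((2.0, 1.5), "triangle"),
    ((4.0, 4.0), "square"),
    ((4.5, 5.0), "square"),
]

# The single closest example is a square, but two of the three nearest are triangles.
prediction = knn_predict(training_set, (3.0, 3.0))
print(prediction)
```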

Model-based learning

- Build a model from a set of examples, then use that model to make predictions on new data.

- E.g., training a model on housing data and predicting the prices of new houses.

- Our main task is to select a model and train it on some data.

- Two things that can go wrong are a “bad model” and “bad data”.
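
As a sketch of the model-based approach, the code below selects a linear model and trains it with ordinary least squares on a handful of houses. The areas and prices are invented numbers that lie exactly on a line so the result is easy to check; real data would be noisy.

```python
def fit_linear(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(model, x):
    slope, intercept = model
    return slope * x + intercept

# Hypothetical training data: area in square meters, price in thousands.
areas = [50.0, 80.0, 100.0, 120.0, 150.0]
prices = [170.0, 260.0, 320.0, 380.0, 470.0]   # exactly 3 * area + 20

model = fit_linear(areas, prices)         # training: find the model parameters
new_house_price = predict(model, 110.0)   # inference: generalize to a new house
print(model, new_house_price)
```

Unlike the instance-based approach, the training examples can be thrown away after fitting: only the two parameters are needed to make predictions.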

Bad data

Insufficient Quantity of Training Data

- Even for very simple problems you typically need thousands of examples, and for complex problems such as image or speech recognition you may need millions of examples.

Nonrepresentative Training Data

- If you use a nonrepresentative training set (one that does not reflect the new cases you want to generalize to), you train a model that is unlikely to make accurate predictions.

Poor-Quality Data

- Obviously, if your training data is full of errors, outliers, and noise (e.g., due to poor-quality measurements), it will be harder for the system to detect the underlying patterns, so your system is less likely to perform well.

Irrelevant Features

- As the saying goes: garbage in, garbage out. Your system will only be capable of learning if the training data contains enough relevant features and not too many irrelevant ones.

A critical part of the success of a machine learning project is coming up with a good set of features to train on.

This process, called feature engineering, involves the following steps:

- Feature selection (selecting the most useful features to train on among existing features)

- Feature extraction (combining existing features to produce a more useful one; as we saw earlier, dimensionality reduction algorithms can help)

- Creating new features by gathering new data.
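
As a hand-made illustration of feature extraction, the sketch below merges a car’s mileage and age into a single wear-and-tear score by standardizing both features and averaging them. This is a simplification chosen for the example, not a full dimensionality reduction algorithm such as PCA, and the numbers are invented.

```python
from statistics import mean, pstdev

def standardize(values):
    """Rescale values to zero mean and unit standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]

def wear_and_tear(mileages, ages):
    """Combine two strongly correlated features into one extracted feature."""
    z_mileage = standardize(mileages)
    z_age = standardize(ages)
    return [(m + a) / 2 for m, a in zip(z_mileage, z_age)]

# Hypothetical cars: mileage in kilometers, age in years.
mileages = [20_000, 60_000, 100_000, 140_000]
ages = [1, 4, 7, 10]

wear = wear_and_tear(mileages, ages)
print([round(w, 3) for w in wear])
```

Standardizing first matters because mileage and age live on very different scales; without it, the mileage would dominate the combined feature.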

Bad algorithms

Overfitting the Training Data

- The model performs well on the training data, but it does not generalize well.

Underfitting the Training Data

- This happens when your model is too simple to learn the underlying structure of the data.

Here are the main options for fixing this problem:

- Select a more powerful model, with more parameters.

- Feed better features to the learning algorithm (feature engineering).

- Reduce the constraints on the model (for example by reducing the regularization hyperparameter).
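
The last option can be illustrated with a one-parameter ridge regression, where the hyperparameter `alpha` penalizes large slopes. The data and the `alpha` values are assumptions picked for the example; the closed-form slope follows from minimizing the squared error plus `alpha * slope**2`.

```python
def fit_ridge_slope(xs, ys, alpha):
    """Slope of y = slope * x that minimizes squared error + alpha * slope**2."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + alpha)

def training_mse(xs, ys, slope):
    """Mean squared error of the fitted line on the training data."""
    return sum((slope * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]   # the true relationship is y = 2x

results = {}
for alpha in (100.0, 10.0, 0.0):
    slope = fit_ridge_slope(xs, ys, alpha)
    results[alpha] = (slope, training_mse(xs, ys, slope))
    print(f"alpha={alpha:>5}: slope={slope:.3f}, training MSE={results[alpha][1]:.3f}")
```

With a very large `alpha` the model is so constrained that it cannot even fit the training data (it underfits); lowering `alpha` lets the slope move toward the true value of 2.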

Testing and Validating

- The only way to know how well a model will generalize to new cases is to actually try it out on new cases.

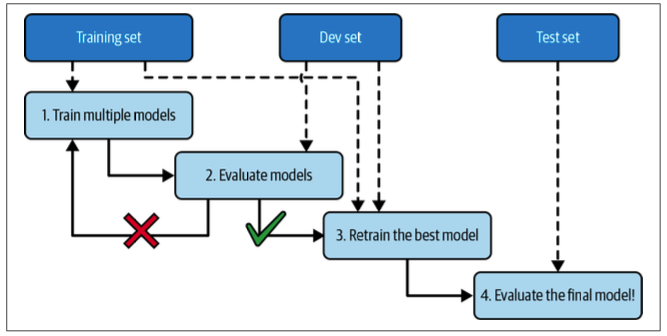

Hyperparameter Tuning and Model Selection

- You train multiple models with various hyperparameters on the reduced training set (i.e., the full training set minus the validation set), and you select the model that performs best on the validation set.

- Evaluate the final model on the test set to get an estimate of the generalization error.
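
The holdout procedure above might look like this in code. Everything specific here is an assumption made for the sketch: the synthetic dataset, the 60/20/20 split, the k-nearest-neighbors regressor, and the candidate values of its hyperparameter `k`.

```python
import random

def knn_regress(train, x, k):
    """Predict y for x as the mean target of the k nearest training instances."""
    nearest = sorted(train, key=lambda item: abs(item[0] - x))[:k]
    return sum(y for _, y in nearest) / k

def mse(train, data, k):
    """Mean squared error on `data` of a k-NN model built from `train`."""
    return sum((knn_regress(train, x, k) - y) ** 2 for x, y in data) / len(data)

# Synthetic dataset: y = x^2 plus Gaussian noise.
rng = random.Random(42)
data = [(x, x * x + rng.gauss(0, 1.0)) for x in (i / 10 for i in range(100))]
rng.shuffle(data)

test_set = data[:20]               # set aside, used only once at the very end
validation_set = data[20:40]       # used to compare hyperparameter values
reduced_training_set = data[40:]   # full training set minus the validation set

candidate_ks = [1, 3, 5, 15, 40]
validation_errors = {k: mse(reduced_training_set, validation_set, k)
                     for k in candidate_ks}
best_k = min(validation_errors, key=validation_errors.get)

# A common extra step (not stated in the notes above): rebuild the chosen model
# on the full training set before measuring it on the test set.
full_training_set = reduced_training_set + validation_set
generalization_error = mse(full_training_set, test_set, best_k)

print("best k:", best_k)
print("estimated generalization error:", round(generalization_error, 3))
```

The test set plays no part in choosing `k`, which is what keeps the final error estimate honest.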

A model is a simplified representation of the data.

- The simplifications are meant to discard the superfluous details that are unlikely to generalize to new instances.

- When you select a particular type of model, you are implicitly making assumptions about the data.

- For example, if you choose a linear model, you are implicitly assuming that the data is fundamentally linear and that the distance between the instances and the straight line is just noise, which can safely be ignored.

If you make absolutely no assumption about the data, then there is no reason to prefer one model over another. This is the No Free Lunch theorem.

- For some datasets the best model is a linear model, while for other datasets it is a neural network.

- There is no model that is a priori guaranteed to work better (hence the name of the theorem).

- The only way to know for sure which model is best is to evaluate them all.

- Since this is not possible, in practice you make some reasonable assumptions about the data and evaluate only a few reasonable models.

- For example, for simple tasks you may evaluate linear models with various levels of regularization, and for a complex problem you may evaluate various neural networks.
