Authors: Shengbang Tong, David Fan, Jiachen Zhu, Yunyang Xiong, Xinlei Chen, Koustuv Sinha, Michael Rabbat, Yann LeCun, Saining Xie, Zhuang Liu

The evolution of Large Language Models (LLMs) has brought us closer to a unified model that seamlessly integrates both understanding and generation across multiple modalities. MetaMorph, a framework developed by Meta and NYU, demonstrates that with Visual-Predictive Instruction Tuning (VPiT), LLMs can effectively predict both visual and text tokens without extensive architectural changes or pretraining.

MetaMorph reveals that visual generation emerges naturally from improved visual understanding. This means that when models are trained to understand, they inherently gain the ability to generate, and vice versa.

Traditional multimodal models require millions of samples for effective visual generation. MetaMorph achieves this with as few as 200K samples, thanks to the efficiency of co-training generation and understanding tasks together.

VPiT extends traditional Visual Instruction Tuning to enable continuous visual token prediction, significantly enhancing the model's multimodal reasoning abilities. This means text-based LLMs can now generate coherent and structured visual outputs while maintaining their foundational efficiency.
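To make the idea of predicting both discrete text tokens and continuous visual tokens concrete, here is a minimal sketch of a per-position loss over a mixed sequence. The post does not state the training objective, so this assumes cross-entropy for text positions and a cosine-distance term for visual positions. All function names and the input layout are hypothetical, chosen only for illustration.

```python
import math

def cross_entropy(logits, target_index):
    """Cross-entropy of one discrete text token given unnormalized logits."""
    max_logit = max(logits)  # subtract the max for numerical stability
    log_sum_exp = max_logit + math.log(sum(math.exp(x - max_logit) for x in logits))
    return log_sum_exp - logits[target_index]

def cosine_distance(predicted, target):
    """1 - cosine similarity between two non-zero embedding vectors."""
    dot = sum(p * t for p, t in zip(predicted, target))
    norm_p = math.sqrt(sum(p * p for p in predicted))
    norm_t = math.sqrt(sum(t * t for t in target))
    return 1.0 - dot / (norm_p * norm_t)

def mixed_sequence_loss(positions):
    """Average the per-position loss over a sequence that mixes both modalities.

    Each position is a dict (hypothetical layout):
      {"kind": "text", "logits": [...], "target": int}
      {"kind": "visual", "predicted": [...], "target": [...]}
    """
    losses = []
    for position in positions:
        if position["kind"] == "text":
            # Assumed objective for discrete text tokens.
            losses.append(cross_entropy(position["logits"], position["target"]))
        else:
            # Assumed objective for continuous visual tokens.
            losses.append(cosine_distance(position["predicted"], position["target"]))
    return sum(losses) / len(losses)

# Usage: one text position followed by one visual position.
sequence = [
    {"kind": "text", "logits": [2.0, 0.5, -1.0], "target": 0},
    {"kind": "visual", "predicted": [0.9, 0.1], "target": [1.0, 0.0]},
]
print(mixed_sequence_loss(sequence))
```

The point of the sketch is that a single sequence can carry both kinds of supervision, with the loss chosen per position by modality.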

MetaMorph shows competitive performance across both understanding and generation benchmarks, offering strong evidence of modality unification. By leveraging pretrained LLM knowledge, the model demonstrates the ability to perform implicit reasoning before generating visual tokens.

- Finding 1: Visual generation emerges naturally from understanding, requiring significantly fewer samples than standalone training.

- Finding 2: Improved visual understanding leads to better generation and vice versa, highlighting a strong synergy between the two tasks.

- Finding 3: Understanding-focused data is more effective for both comprehension and generation than data focused purely on generation.

- Finding 4: Visual generation correlates strongly with vision-centric tasks (such as text & chart analysis) but less with knowledge-based tasks.

MetaMorph leverages a unified multimodal processing pipeline:

- Multimodal Input: Processes text and visual tokens in any sequence order.

- Unified Processing: Uses a single LLM backbone with separate heads for text and vision.

- Token Generation: Generates both text and visual tokens using diffusion-based visualization.

- Multimodal Next-Token Prediction: Learns from a broad range of multimodal instruction datasets.
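The "single backbone, separate heads" arrangement in the list above can be sketched as a toy model. This is an illustration under stated assumptions, not MetaMorph's implementation: the backbone here is a single random linear map applied to each position independently (a real LLM attends over the whole sequence), the class and parameter names are hypothetical, and the diffusion-based visualization step is not modeled.

```python
import random

def linear(weights, vector):
    """Multiply a weight matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]

def random_matrix(rows, cols, rng):
    """Build a rows x cols matrix of small random weights."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

class TwoHeadDecoder:
    """Toy stand-in: one shared backbone, one output head per modality."""

    def __init__(self, hidden_dim, vocab_size, visual_dim, seed=0):
        rng = random.Random(seed)
        # Placeholder for the LLM backbone (hypothetical, position-wise only).
        self.backbone = random_matrix(hidden_dim, hidden_dim, rng)
        # Text head: hidden state -> logits over the vocabulary.
        self.text_head = random_matrix(vocab_size, hidden_dim, rng)
        # Vision head: hidden state -> one continuous visual token.
        self.vision_head = random_matrix(visual_dim, hidden_dim, rng)

    def forward(self, hidden_inputs, next_is_visual):
        """Route each position's hidden state to the head for its next token."""
        outputs = []
        for hidden_in, is_visual in zip(hidden_inputs, next_is_visual):
            hidden = linear(self.backbone, hidden_in)  # shared for both modalities
            head = self.vision_head if is_visual else self.text_head
            outputs.append(linear(head, hidden))
        return outputs

# Usage: the first position predicts a text token, the second a visual token.
model = TwoHeadDecoder(hidden_dim=4, vocab_size=10, visual_dim=6)
outputs = model.forward(
    [[0.1, 0.2, 0.3, 0.4], [0.5, 0.1, 0.0, 0.2]],
    next_is_visual=[False, True],
)
print(len(outputs[0]), len(outputs[1]))
```

Only the output heads differ by modality; everything before them is shared, which is what lets text and visual tokens interleave in any order.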

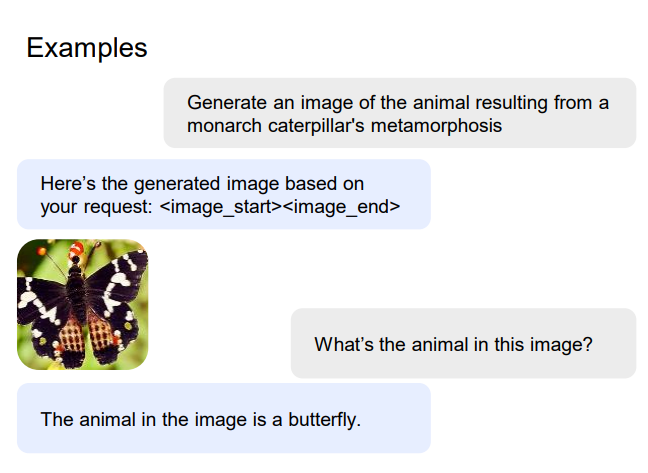

MetaMorph demonstrates the ability to perform implicit multimodal reasoning, a key step toward general intelligence. For example, given an image of a monarch caterpillar, it can process the metamorphosis lifecycle and predict the final transformation into a monarch butterfly, an advanced capability that combines both visual understanding and reasoning.

MetaMorph represents a major leap forward in the quest for unified AI models. By showing that understanding and generation are intrinsically linked, and that LLMs can be efficiently tuned for multimodal tasks, it paves the way for next-generation applications in AI-driven content creation, interactive multimodal assistants, and more.

🔗 Read more in the original paper: MetaMorph: Multimodal Understanding and Generation via Instruction Tuning

#AI #MachineLearning #MultimodalAI #GenerativeAI #MetaMorph #LLM #DeepLearning