The linear regression model does one simple thing: it fits a straight line (or hyperplane) through your data.

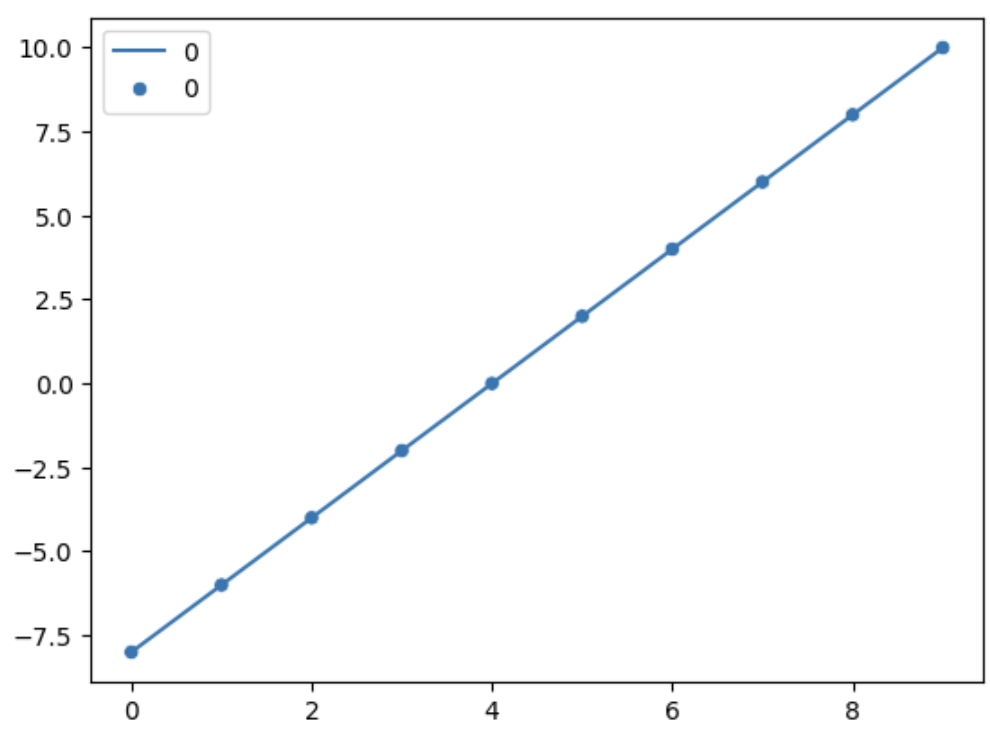

As we can see, the points are the actual values and the line is the prediction made by the model based on the data. So how does linear regression work?

The model

We learned in mathematics that a line can be expressed using the following equation:

y = mx + b

Here m is the slope of the line and b is the intercept on the y-axis. The linear regression model has the same form. Linear regression for a single variable is given as:

y = wx + b

where w is the weight (it determines the inclination of the line or hyperplane), b is the bias, and x is called a feature.

If there is more than one feature (more than one variable), the linear regression model equation looks like this:

y = w · x + b = w1x1 + w2x2 + ... + wnxn + b

where w is the weight vector, and x is the vector of features.
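To make the vector form concrete, here is a minimal sketch of the prediction step in NumPy. The feature matrix, weights, and bias below are made-up values chosen only for illustration.

```python
import numpy as np

# Hypothetical data: 3 samples with 2 features each
X = np.array([[1.0, 2.0],
              [3.0, 4.0],
              [5.0, 6.0]])
w = np.array([0.5, -1.0])  # one weight per feature (made-up values)
b = 2.0                    # bias (made-up value)

# Prediction for a single sample: dot product of weights and features, plus bias
y_single = np.dot(w, X[0]) + b

# Predictions for every sample at once: one matrix-vector product
y_all = X @ w + b

print(y_single)
print(y_all)
```

Computing all predictions with one matrix product is what the `np.dot(X, w)` call in the implementation later in this post does.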

For smaller datasets (artificially generated ones in particular) it's easy to guess what the correct weights are and assign them to our model accordingly, but when we encounter larger datasets it will be difficult to guess the weights.

Then how do we determine the weights for our model? For this, we use the cost function and an optimization technique called gradient descent.

Cost function and the gradient descent algorithm

In a machine learning model, the actual learning from the data is enabled by the cost function and the optimization algorithm. In the case of linear regression, we will be using the mean squared error cost function, given as:

J(w, b) = (1/m) Σ (ŷᵢ − yᵢ)²

where m is the number of training examples, ŷᵢ is the model's prediction for the i-th example, yᵢ is its true value, and the sum runs over all m examples.

The mean squared error (MSE) can be derived statistically through maximum likelihood estimation (MLE). If we assume that the true values contain errors that follow a normal distribution, maximizing the likelihood is equivalent to minimizing the squared error.
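As a quick sanity check of the mean squared error, here is a small sketch that computes it by hand for three made-up predictions. The helper name `mean_squared_error` and the numbers are assumptions for illustration only.

```python
import numpy as np

def mean_squared_error(y_hat, y):
    """Average of the squared differences between predictions and true values."""
    y_hat = np.asarray(y_hat, dtype=float)
    y = np.asarray(y, dtype=float)
    return np.mean((y_hat - y) ** 2)

y_true = np.array([3.0, 5.0, 7.0])  # made-up true values
y_pred = np.array([2.0, 5.0, 9.0])  # made-up predictions

# Errors are -1, 0 and 2, so the MSE is (1 + 0 + 4) / 3
mse = mean_squared_error(y_pred, y_true)
print(mse)
```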

This doesn't answer our question. How do we find the correct weights that give us the minimum MSE? We start by randomly initializing the weights and bias (collectively called parameters).

Wait!? What's the guarantee that random initialization will give us the correct model? There isn't one. We start with a random value, and we let the model adjust these parameters to minimize the MSE.

The minimization of the MSE can be done using an optimization technique called gradient descent. In gradient descent, we take the partial derivatives of the MSE (J) with respect to w and b. The algorithm is given as:

w := w − α ∂J/∂w
b := b − α ∂J/∂b
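For the single-feature model with prediction ŷ = wx + b and the mean squared error as J, the partial derivatives that gradient descent needs can be worked out with the chain rule. This is a short derivation sketch; the index i runs over the m training examples.

```latex
\begin{aligned}
J(w, b) &= \frac{1}{m} \sum_{i=1}^{m} \left(\hat{y}_i - y_i\right)^2,
  \qquad \hat{y}_i = w x_i + b \\[4pt]
\frac{\partial J}{\partial w}
  &= \frac{1}{m} \sum_{i=1}^{m} 2\left(\hat{y}_i - y_i\right)\frac{\partial \hat{y}_i}{\partial w}
   = \frac{2}{m} \sum_{i=1}^{m} \left(\hat{y}_i - y_i\right) x_i \\[4pt]
\frac{\partial J}{\partial b}
  &= \frac{1}{m} \sum_{i=1}^{m} 2\left(\hat{y}_i - y_i\right)\frac{\partial \hat{y}_i}{\partial b}
   = \frac{2}{m} \sum_{i=1}^{m} \left(\hat{y}_i - y_i\right)
\end{aligned}
```

Some implementations drop the constant factor of 2, since it only rescales the learning rate.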

This algorithm should run as a loop until we get the correct values. But when do we stop this loop, and how do we know we have reached the optimal values? To understand this, we have to look at a graph of the cost against the weights (here, for simplicity, we assume y hat to be wx, thus removing the constant).

Considering the above table, we can easily guess that the value of w is 2. For the sake of argument, let's say we couldn't determine the value of w. So, let's initialize w = 0. Now what would the values predicted by the model be? Zero, of course.

Upon further calculation, we find J ≈ 6.67. If we continue to calculate the value of J for different values of w, we get a parabolic curve for the graph of J vs w. The graph is shown below:

The graph reaches its minimum when the weight is equal to 2. When we initialize the weights randomly, we start somewhere on this graph (if you are lucky, you might hit the minimum on the first try). Using gradient descent, we can adjust the weights and bias using the derivatives and reach the minimum. The symbol alpha in the gradient descent algorithm is called the learning rate. It determines the speed with which we descend to the minimum.
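The J-versus-w curve can be reproduced numerically. The sketch below assumes a toy dataset with x = [0, 1, 2] and y = [0, 2, 4]; it is not copied from this post's table, but was picked because it is consistent with w = 2 and J ≈ 6.67 at w = 0. As in the text, the bias is dropped so that y hat = wx.

```python
import numpy as np

# Assumed toy data (y = 2x); consistent with the numbers quoted in the text
x = np.array([0.0, 1.0, 2.0])
y = np.array([0.0, 2.0, 4.0])

def cost(w):
    """MSE of the bias-free model y_hat = w * x."""
    return np.mean((w * x - y) ** 2)

# Evaluate the cost on a grid of candidate weights: 0, 0.5, ..., 4
ws = np.linspace(0.0, 4.0, 9)
costs = np.array([cost(w) for w in ws])
best_w = ws[np.argmin(costs)]

for w, c in zip(ws, costs):
    print(f"w = {w:.1f}  J = {c:.2f}")
print("weight with the lowest cost:", best_w)
```

The printed costs fall as w approaches 2 and rise symmetrically on the other side, which is the parabola described above.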

You might think that by having a high learning rate, we can reach the minimum faster, but this isn't always true. As we know, the update step is the product of the learning rate and the gradient. If we start at the top left point of the above graph, the gradient will be a large negative value (the slope of the graph at that point). With a high learning rate the product will also be large, so the update overshoots the minimum and can move even further away from it. If the learning rate is too small, it will take a lot of time for the parameters to converge.

Hence, we have to choose the best learning rate by trial and error.
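The effect of the learning rate is easy to see on a one-parameter problem. This is a minimal sketch, again assuming the toy data x = [0, 1, 2], y = [0, 2, 4] and the bias-free model y hat = wx; the function name `run_gradient_descent` and the three learning rates are illustrative choices, not recommendations.

```python
import numpy as np

# Assumed toy data (y = 2x), so the optimal weight is 2
x = np.array([0.0, 1.0, 2.0])
y = np.array([0.0, 2.0, 4.0])

def run_gradient_descent(learning_rate, num_steps=20, w=0.0):
    """Run a fixed number of gradient descent steps on J(w) = mean((w*x - y)**2)."""
    for _ in range(num_steps):
        grad = 2.0 * np.mean((w * x - y) * x)  # dJ/dw
        w = w - learning_rate * grad
    return w

w_small = run_gradient_descent(0.001)  # too small: barely moves in 20 steps
w_good = run_gradient_descent(0.1)     # reasonable: ends up close to 2
w_large = run_gradient_descent(1.0)    # too large: overshoots and diverges

print("learning rate 0.001 ->", w_small)
print("learning rate 0.1   ->", w_good)
print("learning rate 1.0   ->", w_large)
```

With the same number of steps, only the middle learning rate ends up near the minimum, which is why the value has to be tuned for each problem.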

How to code linear regression?

First, we import NumPy, a Python library that provides many functions for linear algebra:

import numpy as np

Now we define the linear regression function and the function to initialize our parameters:

# Defining the linear regression function
def linear_regression(w, X, b):
    z = np.dot(X, w) + b  # X: (m, n), w: (n, 1) -> z: (m, 1)
    return z

# We have to make sure that the weight matrix is of the correct shape
# so that it's compatible with the dot product in the above function
def initialize_params(dim):
    w = np.zeros((dim, 1))  # zeros are a simple starting point
    b = 0
    return w, b

Once we have written the functions for the model, let's code its cost function and the function to compute the gradients:

# This is the mean squared error cost function; we pass the predicted
# values and the true values as arguments
def cost_function(y_hat, y):
    m = len(y)  # m is the number of training examples
    squared_loss = np.sum((y_hat - y)**2)  # computing the sum of squared errors
    return squared_loss/m  # mean squared error

# y_hat and y must both have shape (m, 1); otherwise y_hat - y broadcasts to (m, m)
def gradients(y_hat, y, X):
    m = len(y)
    dw = 2*np.dot(X.T, (y_hat - y))/m  # This is what we get when we compute dJ/dw
    db = 2*np.sum(y_hat - y)/m  # This is what we get when we compute dJ/db
    return dw, db
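A common way to gain confidence in hand-derived gradients is to compare them against finite differences. The sketch below is self-contained and uses its own helper names (`mse`, `analytic_gradients`, `numerical_gradients`), which are assumptions for illustration; it checks the exact gradient of the MSE, including the factor of 2, on random data.

```python
import numpy as np

def mse(w, b, X, y):
    """Mean squared error of the linear model X @ w + b."""
    return np.mean((X @ w + b - y) ** 2)

def analytic_gradients(w, b, X, y):
    """Exact gradients of the MSE with respect to w and b."""
    m = len(y)
    error = X @ w + b - y        # shape (m, 1)
    dw = 2.0 * X.T @ error / m   # shape (n, 1)
    db = 2.0 * np.sum(error) / m
    return dw, db

def numerical_gradients(w, b, X, y, eps=1e-6):
    """Central finite-difference approximation of the same gradients."""
    dw = np.zeros_like(w)
    for j in range(w.shape[0]):
        step = np.zeros_like(w)
        step[j, 0] = eps
        dw[j, 0] = (mse(w + step, b, X, y) - mse(w - step, b, X, y)) / (2 * eps)
    db = (mse(w, b + eps, X, y) - mse(w, b - eps, X, y)) / (2 * eps)
    return dw, db

# Random data purely for the check
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 3))
y = rng.normal(size=(20, 1))
w = rng.normal(size=(3, 1))
b = 0.5

dw_a, db_a = analytic_gradients(w, b, X, y)
dw_n, db_n = numerical_gradients(w, b, X, y)
print("dw matches:", np.allclose(dw_a, dw_n, atol=1e-5))
print("db matches:", np.isclose(db_a, db_n, atol=1e-5))
```

If the two disagree, the usual suspects are a missing constant factor or mismatched array shapes.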

We've developed everything we need to run our linear regression function, so let's put it all together in the train loop:

def train_loop(X_train, y_train, num_iterations, learning_rate, print_cost=False):
    costs = []
    y_train = y_train.reshape(-1, 1)  # match the (m, 1) shape of the predictions
    w, b = initialize_params(X_train.shape[1])
    for i in range(num_iterations):
        y_hat = linear_regression(w, X_train, b)  # Making a prediction with the weights
        cost = cost_function(y_hat, y_train)
        costs.append(cost)  # Storing the cost
        if i % 100 == 0 and print_cost:
            print(f"The cost after {i} iterations: {cost}")
        dw, db = gradients(y_hat, y_train, X_train)  # Calculating the gradients
        # Updating the parameters
        w = w - learning_rate*dw
        b = b - learning_rate*db
    return w, b, costs
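To see a training loop of this kind recover known parameters, here is a compact, self-contained sketch run on synthetic data. The function name `train`, the data-generating weights (3, -2), the bias (1), and the hyperparameters are all assumptions chosen for illustration; it is a condensed variant of the loop above, not a replacement for it.

```python
import numpy as np

def train(X, y, num_iterations=1000, learning_rate=0.1):
    """Compact gradient descent loop for linear regression (illustrative)."""
    m, n = X.shape
    y = y.reshape(-1, 1)             # keep targets as a column vector
    w, b = np.zeros((n, 1)), 0.0
    costs = []
    for _ in range(num_iterations):
        error = X @ w + b - y        # prediction minus target, shape (m, 1)
        costs.append(np.mean(error ** 2))
        w = w - learning_rate * (2.0 * X.T @ error / m)
        b = b - learning_rate * (2.0 * np.sum(error) / m)
    return w, b, costs

# Synthetic data (assumed): y = 3*x1 - 2*x2 + 1 plus a little noise
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 2))
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 1.0 + 0.01 * rng.normal(size=200)

w, b, costs = train(X, y)
print("learned weights:", w.ravel())
print("learned bias:", b)
print("first cost:", costs[0], "last cost:", costs[-1])
```

The learned parameters should land close to the values used to generate the data, and the stored costs should decrease over the iterations.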

And that's it, you have coded your first machine-learning model. If you want to know how to load data, and train and test your model with it, click the link below:

While linear regression is good for analyzing relations between variables that are linearly dependent, it isn't a good model when the relation between the features and the target is non-linear.

In such cases, we perform something called polynomial regression. We choose features based on some observation and change their degree.

In the GitHub repo, you can see a simple implementation of polynomial regression. The disadvantage of this regression is that if we increase the degree of the features, the model will overfit. That is, the model will not generalize well when we provide unseen data.
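The idea of changing the degree of a feature can be sketched in a few lines. This example is not the implementation from the GitHub repo: it assumes synthetic data generated from y = x² − 2x + 1 and, for brevity, fits the coefficients with NumPy's least-squares solver instead of gradient descent. The helper names are hypothetical.

```python
import numpy as np

# Synthetic non-linear data (assumed for illustration): y = x^2 - 2x + 1
x = np.linspace(-3.0, 3.0, 50)
y = x ** 2 - 2.0 * x + 1.0

def polynomial_features(x, degree):
    """Columns 1, x, x^2, ..., x^degree; the column of ones plays the role of the bias."""
    return np.column_stack([x ** d for d in range(degree + 1)])

def fit_least_squares(features, y):
    """Solve for the coefficients that minimize the squared error."""
    coeffs, *_ = np.linalg.lstsq(features, y, rcond=None)
    return coeffs

def mse(features, coeffs, y):
    return np.mean((features @ coeffs - y) ** 2)

linear_feats = polynomial_features(x, 1)
quadratic_feats = polynomial_features(x, 2)

coeffs_linear = fit_least_squares(linear_feats, y)
coeffs_quadratic = fit_least_squares(quadratic_feats, y)

mse_linear = mse(linear_feats, coeffs_linear, y)
mse_quadratic = mse(quadratic_feats, coeffs_quadratic, y)

print("straight-line fit MSE:", mse_linear)
print("degree-2 fit MSE:", mse_quadratic)
print("degree-2 coefficients (bias, x, x^2):", coeffs_quadratic)
```

The model is still linear in its weights; only the features are transformed, which is why the same machinery applies. Raising the degree well beyond what the data needs is where the overfitting mentioned above comes from.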

So that's all about linear regression. Linear regression is a good model despite its setbacks. We now know that linear regression can predict numbers based on the provided data. But could it be used to classify objects as well?

On Monday, I’ll publish two blogs, one answering the above query, and the opposite on how we are able to load and course of knowledge for our machine studying fashions to carry out higher.