Popularity of RAG

Over the past two years of working with financial firms, I’ve observed firsthand how they identify and prioritize Generative AI use cases, balancing complexity with potential value.

Retrieval-Augmented Generation (RAG) often stands out as a foundational capability across many LLM-driven solutions, striking a balance between ease of implementation and real-world impact. By combining a retriever that surfaces relevant documents with an LLM that synthesizes responses, RAG streamlines knowledge access, making it invaluable for applications like customer support, research, and internal knowledge management.

Defining clear evaluation criteria is key to ensuring LLM solutions meet performance standards, just as Test-Driven Development (TDD) ensures reliability in traditional software. Drawing from TDD principles, an evaluation-driven approach sets measurable benchmarks to validate and improve AI workflows. This becomes especially important for LLMs, where the complexity of open-ended responses demands consistent and thoughtful evaluation to deliver reliable results.

For RAG applications, a typical evaluation set includes representative input-output pairs that align with the intended use case. For example, in chatbot applications, this might involve Q&A pairs reflecting user inquiries. In other contexts, such as retrieving and summarizing relevant text, the evaluation set might include source documents alongside expected summaries or extracted key points. These pairs are often generated from a subset of documents, such as those that are most viewed or frequently accessed, ensuring the evaluation focuses on the most relevant content.

Key Challenges

Creating evaluation datasets for RAG systems has traditionally faced two major challenges.

- The process often relied on subject matter experts (SMEs) to manually review documents and generate Q&A pairs, making it time-intensive, inconsistent, and costly.

- LLMs were restricted to handling text, which prevented them from processing visual elements within documents, such as tables or diagrams. Standard OCR tools struggle to bridge this gap, often failing to extract meaningful information from non-textual content.

Multi-Modal Capabilities

The challenges of handling complex documents have evolved with the introduction of multimodal capabilities in foundation models. Commercial and open-source models can now process both text and visual content. This vision capability eliminates the need for separate text-extraction workflows, offering an integrated approach for handling mixed-media PDFs.

By leveraging these vision features, models can ingest entire pages at once, recognizing layout structures, chart labels, and table content. This not only reduces manual effort but also improves scalability and data quality, making it a powerful enabler for RAG workflows that rely on accurate information from a variety of sources.

Dataset Curation for Wealth Management Research Report

To demonstrate a solution to the problem of manual evaluation set generation, I tested my approach using a sample document: the 2023 Cerulli report. This type of document is typical in wealth management, where analyst-style reports often combine text with complex visuals. For a RAG-powered search assistant, a knowledge corpus like this would likely contain many such documents.

My goal was to demonstrate how a single document could be leveraged to generate Q&A pairs, incorporating both text and visual elements. While I didn’t define specific dimensions for the Q&A pairs in this test, a real-world implementation would involve providing details on types of questions (comparative, analysis, multiple choice), topics (investment strategies, account types), and many other aspects. The primary focus of this experiment was to ensure the LLM generated questions that incorporated visual elements and produced reliable answers.

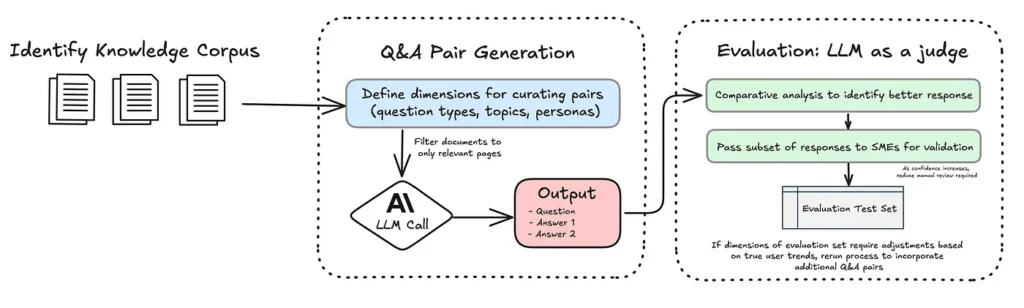

My workflow, illustrated in the diagram, leverages Anthropic’s Claude 3.5 Sonnet model, which simplifies the process of working with PDFs by handling the conversion of documents into images before passing them to the model. This built-in functionality eliminates the need for additional third-party dependencies, streamlining the workflow and reducing code complexity.
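As a rough sketch of what such a request can look like, the snippet below assembles the arguments for a Messages API call that sends a PDF as a base64-encoded `document` content block. The helper name `build_pdf_request`, the model ID, and the `max_tokens` value are assumptions for illustration, not the exact code used in this experiment. The `cache_control` marker is included because caching is discussed later in the article.

```python
import base64


def build_pdf_request(pdf_bytes: bytes, prompt: str,
                      model: str = "claude-3-5-sonnet-20241022",
                      max_tokens: int = 4096) -> dict:
    """Assemble keyword arguments for a Messages API call that includes a PDF.

    Hypothetical helper: the returned dict is meant to be passed as
    client.messages.create(**kwargs) with the Anthropic Python SDK.
    """
    encoded_pdf = base64.standard_b64encode(pdf_bytes).decode("utf-8")
    return {
        "model": model,            # assumed model ID
        "max_tokens": max_tokens,  # assumed output budget
        "messages": [{
            "role": "user",
            "content": [
                {
                    "type": "document",
                    "source": {
                        "type": "base64",
                        "media_type": "application/pdf",
                        "data": encoded_pdf,
                    },
                    # Mark the PDF as cacheable so repeated requests can reuse it.
                    "cache_control": {"type": "ephemeral"},
                },
                {"type": "text", "text": prompt},
            ],
        }],
    }


# Placeholder bytes stand in for a real PDF file read from disk.
request = build_pdf_request(b"%PDF-1.4 placeholder bytes", "Analyze pages 1 to 10.")
print(request["messages"][0]["content"][0]["type"])
```

Keeping the payload construction separate from the network call makes it easy to inspect or log exactly what is sent for each batch of pages.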

I excluded preliminary pages of the report, like the table of contents and glossary, focusing on pages with relevant content and charts for generating Q&A pairs. Below is the prompt I used to generate the initial question-answer sets.

You are an expert at analyzing financial reports and generating question-answer pairs. For the provided PDF, the 2023 Cerulli report:

1. Analyze pages {start_idx} to {end_idx} and for **each** of those 10 pages:

- Identify the **exact page title** as it appears on that page (e.g., "Exhibit 4.03 Core Market Databank, 2023").

- If the page includes a chart, graph, or diagram, create a question that references that visual element. Otherwise, create a question about the textual content.

- Generate two distinct answers to that question ("answer_1" and "answer_2"), both supported by the page's content.

- Identify the correct page number as indicated in the bottom left corner of the page.

2. Return exactly 10 results as a valid JSON array (a list of dictionaries). Each dictionary should have the keys: "page" (int), "page_title" (str), "question" (str), "answer_1" (str), and "answer_2" (str). The page title typically includes the word "Exhibit" followed by a number.
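Because the prompt asks for a fixed JSON shape, the reply can be checked before it is used. The following is a minimal sketch, assuming the model returns only the JSON array; `parse_qa_batch` is a hypothetical helper, and the sample reply is placeholder data rather than output from the report.

```python
import json

# Keys and types requested in the prompt above.
REQUIRED_KEYS = {
    "page": int,
    "page_title": str,
    "question": str,
    "answer_1": str,
    "answer_2": str,
}


def parse_qa_batch(raw_text: str, expected_count: int = 10) -> list:
    """Parse the model's JSON reply and check it against the requested schema."""
    records = json.loads(raw_text)
    if not isinstance(records, list):
        raise ValueError("expected a JSON array")
    if len(records) != expected_count:
        raise ValueError(f"expected {expected_count} records, got {len(records)}")
    for index, record in enumerate(records):
        if not isinstance(record, dict):
            raise ValueError(f"record {index} is not a JSON object")
        for key, expected_type in REQUIRED_KEYS.items():
            if key not in record:
                raise ValueError(f"record {index} is missing key {key!r}")
            if not isinstance(record[key], expected_type):
                raise ValueError(
                    f"record {index}: {key!r} should be {expected_type.__name__}"
                )
    return records


# Placeholder reply with a single record, for illustration only.
sample_reply = json.dumps([{
    "page": 1,
    "page_title": "Exhibit 0.00 Placeholder Title",
    "question": "Placeholder question?",
    "answer_1": "Placeholder answer one.",
    "answer_2": "Placeholder answer two.",
}])
records = parse_qa_batch(sample_reply, expected_count=1)
print(records[0]["page_title"])
```

Failing fast on a wrong record count is one way to catch batches where the model skipped a page.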

Q&A Pair Generation

To refine the Q&A generation process, I implemented a comparative learning approach that generates two distinct answers for each question. During the evaluation phase, these answers are assessed across key dimensions such as accuracy and clarity, with the stronger response selected as the final answer.

This approach mirrors how humans often find it easier to make decisions when comparing alternatives rather than evaluating something in isolation. It’s like an eye exam: the optometrist doesn’t ask if your vision has improved or declined but instead presents two lenses and asks, “Which is clearer, option 1 or option 2?” This comparative process eliminates the ambiguity of assessing absolute improvement and focuses on relative differences, making the choice simpler and more actionable. Similarly, by presenting two concrete answer options, the system can more effectively evaluate which response is stronger.

This technique is also cited as a best practice in the article “What We Learned from a Year of Building with LLMs” by leaders in the AI space. They highlight the value of pairwise comparisons, stating: “Instead of asking the LLM to score a single output on a Likert scale, present it with two options and ask it to select the better one. This tends to lead to more stable results.” I highly recommend reading their three-part series, as it provides invaluable insights into building effective systems with LLMs!

LLM Evaluation

For evaluating the generated Q&A pairs, I used Claude Opus for its advanced reasoning capabilities. Acting as a “judge,” the LLM compared the two answers generated for each question and selected the better option based on criteria such as directness and clarity. This approach is supported by extensive research (Zheng et al., 2023) showing that LLMs can perform evaluations on par with human reviewers.
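The judging step can be sketched roughly as follows. The prompt wording and the helper names `build_judge_prompt` and `pick_answer` are assumptions for illustration, not the prompt used in this experiment. The model call itself is left out, and a hard-coded reply stands in for the judge's response.

```python
import re


def build_judge_prompt(question: str, answer_1: str, answer_2: str) -> str:
    """Build a pairwise-comparison prompt for the judge model (assumed wording)."""
    return (
        "You are judging two candidate answers to the same question.\n"
        f"Question: {question}\n"
        f"answer_1: {answer_1}\n"
        f"answer_2: {answer_2}\n"
        "Which answer is more direct and clear? "
        "Reply with exactly 'answer_1' or 'answer_2'."
    )


def pick_answer(record: dict, judge_reply: str) -> str:
    """Return the answer text named by the first verdict found in the reply."""
    match = re.search(r"answer_[12]", judge_reply)
    if match is None:
        raise ValueError(f"no verdict found in judge reply: {judge_reply!r}")
    return record[match.group(0)]


# Placeholder record in the shape requested by the generation prompt.
record = {
    "question": "Placeholder question?",
    "answer_1": "First candidate answer.",
    "answer_2": "Second candidate answer.",
}
prompt = build_judge_prompt(record["question"], record["answer_1"], record["answer_2"])
stub_reply = "answer_2"  # stands in for the judge model's reply
print(pick_answer(record, stub_reply))
```

Constraining the judge to a fixed label keeps the verdict easy to parse and makes malformed replies easy to flag for SME review.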

This approach significantly reduces the amount of manual review required by SMEs, enabling a more scalable and efficient refinement process. While SMEs remain essential during the initial stages to spot-check questions and validate system outputs, this dependency diminishes over time. Once a sufficient level of confidence is established in the system’s performance, the need for frequent spot-checking is reduced, allowing SMEs to focus on higher-value tasks.

Lessons Learned

Claude’s PDF capability has a limit of 100 pages, so I broke the original document into four 50-page sections. When I tried processing each 50-page section in a single request, explicitly instructing the model to generate one Q&A pair per page, it still missed some pages. The token limit wasn’t the real problem; the model tended to focus on whichever content it considered most relevant, leaving certain pages underrepresented.

To address this, I experimented with processing the document in smaller batches, testing 5, 10, and 20 pages at a time. Through these tests, I found that batches of 10 pages (e.g., pages 1–10, 11–20, etc.) provided the best balance between precision and efficiency. Processing 10 pages per batch ensured consistent results across all pages while optimizing performance.
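The batching itself is simple to express in code. Below is a minimal sketch that splits an inclusive page range into consecutive batches. The function name `page_batches` is hypothetical, and the resulting `(start, end)` pairs are meant to fill the `{start_idx}` and `{end_idx}` placeholders in the prompt.

```python
def page_batches(first_page: int, last_page: int, batch_size: int = 10) -> list:
    """Split an inclusive page range into consecutive (start, end) batches."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    batches = []
    start = first_page
    while start <= last_page:
        # The final batch may be shorter than batch_size.
        end = min(start + batch_size - 1, last_page)
        batches.append((start, end))
        start = end + 1
    return batches


print(page_batches(1, 50))
# [(1, 10), (11, 20), (21, 30), (31, 40), (41, 50)]
```

Changing `batch_size` to 5 or 20 reproduces the other batch sizes tested above.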

Another challenge was linking Q&A pairs back to their source. Using tiny page numbers in a PDF’s footer alone didn’t consistently work. In contrast, page titles or clear headings at the top of each page served as reliable anchors. They were easier for the model to pick up and helped me accurately map each Q&A pair to the right section.

Example Output

Below is an example page from the report, featuring two tables with numerical data. The following question was generated for this page:

How has the distribution of AUM changed across different-sized Hybrid RIA firms?

Answer: Mid-sized firms ($25m to <$100m) experienced a decline in AUM share from 2.3% to 1.0%.

In the first table, the 2017 column shows a 2.3% share of AUM for mid-sized firms, which decreases to 1.0% in 2022, thereby showcasing the LLM’s ability to synthesize visual and tabular content accurately.

Advantages

Combining caching, batching, and a refined Q&A workflow led to three key benefits:

Caching

- In my experiment, processing a single report without caching would have cost $9, but by leveraging caching, I reduced this cost to $3, a 3x cost savings. Per Anthropic’s pricing model, creating a cache costs $3.75 / million tokens; however, reads from the cache are only $0.30 / million tokens. In contrast, input tokens cost $3 / million tokens when caching is not used.

- In a real-world scenario with more than one document, the savings become even more significant. For example, processing 10,000 research reports of similar length without caching would cost $90,000 in input costs alone. With caching, this cost drops to $30,000, achieving the same precision and quality while saving $60,000.
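To make the arithmetic concrete, here is a back-of-the-envelope sketch using the per-million-token prices quoted above. The token count per section and the number of requests per section are assumptions chosen for illustration (four 50-page sections, each sent once per 10-page batch, at roughly 150,000 tokens per section); they are not measured values from the experiment. The sketch also ignores the prompt text and output tokens.

```python
# Prices in USD per million input tokens, as quoted in the article.
INPUT_PRICE = 3.00
CACHE_WRITE_PRICE = 3.75
CACHE_READ_PRICE = 0.30

SECTIONS = 4                  # four 50-page sections
REQUESTS_PER_SECTION = 5      # assumption: one request per 10-page batch
TOKENS_PER_SECTION = 150_000  # assumption: rough size of one section


def cost_without_cache() -> float:
    """Every request pays the full input price for the whole section."""
    total_tokens = SECTIONS * REQUESTS_PER_SECTION * TOKENS_PER_SECTION
    return total_tokens / 1_000_000 * INPUT_PRICE


def cost_with_cache() -> float:
    """The first request per section writes the cache; the rest read from it."""
    millions = TOKENS_PER_SECTION / 1_000_000
    write_cost = millions * CACHE_WRITE_PRICE
    read_cost = (REQUESTS_PER_SECTION - 1) * millions * CACHE_READ_PRICE
    return SECTIONS * (write_cost + read_cost)


print(f"without cache: ${cost_without_cache():.2f}")
print(f"with cache:    ${cost_with_cache():.2f}")
```

Under these assumptions the totals come out to about $9 and $3, in line with the figures above. The savings grow with the number of requests that reuse the same cached section.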

Discounted Batch Processing

- Using Anthropic’s Batches API cuts output costs in half, making it a cheaper option for certain tasks. Once I had validated the prompts, I ran a single batch job to evaluate all the Q&A answer sets at once. This method proved far more cost-effective than processing each Q&A pair individually.

- For example, Claude 3 Opus typically costs $15 per million output tokens. With batching, this drops to $7.50 per million tokens, a 50% reduction. In my experiment, each Q&A pair generated an average of 100 tokens, resulting in roughly 20,000 output tokens for the document. At the standard rate, this would have cost $0.30. With batch processing, the cost was reduced to $0.15, highlighting how this approach optimizes costs for non-sequential tasks like evaluation runs.
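The same calculation can be written as a small helper. This is a sketch that uses the output price and token counts quoted above; the count of 200 Q&A pairs is inferred from one pair per page across four 50-page sections, and the function name `output_cost` is hypothetical.

```python
def output_cost(tokens: int, price_per_million: float,
                batch_discount: float = 0.0) -> float:
    """Cost in USD for a number of output tokens, with an optional discount."""
    return tokens / 1_000_000 * price_per_million * (1.0 - batch_discount)


QA_PAIRS = 200           # inferred: one pair per page, four 50-page sections
TOKENS_PER_PAIR = 100    # average quoted in the article
PRICE_PER_MILLION = 15.00  # output price quoted in the article

total_tokens = QA_PAIRS * TOKENS_PER_PAIR
standard = output_cost(total_tokens, PRICE_PER_MILLION)
batched = output_cost(total_tokens, PRICE_PER_MILLION, batch_discount=0.5)
print(f"standard: ${standard:.2f}, batched: ${batched:.2f}")
```

The absolute numbers are small for one document, but the 50% ratio holds at any volume.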

Time Saved for SMEs

- With more accurate, context-rich Q&A pairs, subject matter experts spent less time sifting through PDFs and clarifying details, and more time focusing on strategic insights. This approach also eliminates the need to hire additional staff or allocate internal resources for manually curating datasets, a process that can be time-consuming and expensive. By automating these tasks, companies save significantly on labor costs while streamlining SME workflows, making this a scalable and cost-effective solution.
