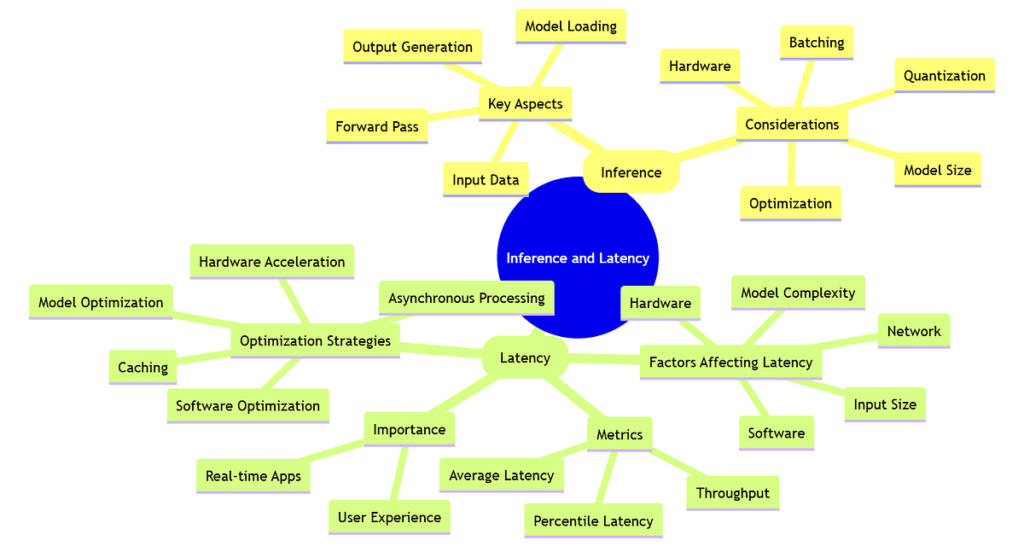

Inference: The process of using a trained machine learning model to make predictions on new, unseen data. It’s the application of learned knowledge to solve real-world problems.

Key Aspects: The core components involved in the inference process.

- Input Data: The new, unseen data on which the model makes predictions. This is what the model “sees” for the first time.

- Model Loading: The act of retrieving the trained model from storage (e.g., a file) and loading it into memory so it can be used for prediction.

- Forward Pass: The computational process in which the input data is fed through the model’s layers. Each layer performs calculations on the input it receives and passes the result to the next layer. This continues until the output layer is reached.

- Output Generation: The final step of the inference process, where the model produces its prediction based on the input data.
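The four steps above can be sketched in a few lines of plain Python. This is only an illustrative toy, not how any particular framework does it: the JSON model format, the hand-picked weights, and the function names `load_model`, `forward`, and `predict` are all assumptions made for the example.

```python
import json

# Hypothetical serialized model: two small dense layers with hand-picked weights.
MODEL_JSON = json.dumps({
    "layers": [
        {"weights": [[1.0, -1.0], [0.5, 0.5]], "bias": [0.0, 0.0], "activation": "relu"},
        {"weights": [[1.0, 0.0], [0.0, 1.0]], "bias": [0.0, 0.1], "activation": "none"},
    ]
})

def load_model(serialized):
    """Model loading: deserialize the stored parameters into memory."""
    return json.loads(serialized)["layers"]

def dense(layer, x):
    """One layer: weighted sum plus bias, followed by an optional ReLU."""
    out = [sum(w * xi for w, xi in zip(row, x)) + b
           for row, b in zip(layer["weights"], layer["bias"])]
    if layer["activation"] == "relu":
        out = [max(0.0, v) for v in out]
    return out

def forward(layers, x):
    """Forward pass: feed the input through each layer in order."""
    for layer in layers:
        x = dense(layer, x)
    return x

def predict(layers, x):
    """Output generation: return the index of the highest score."""
    scores = forward(layers, x)
    return max(range(len(scores)), key=scores.__getitem__)

layers = load_model(MODEL_JSON)          # model loading
input_data = [2.0, 1.0]                  # input data
scores = forward(layers, input_data)     # forward pass
label = predict(layers, input_data)      # output generation
```

Real systems load weights from a framework-specific format and run the layers on optimized kernels, but the order of operations is the same.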

Considerations: Factors that influence the efficiency and performance of inference.

- Model Size: The size of the trained model, which affects memory usage, loading time, and potentially processing time. Larger models generally require more resources.

- Hardware: The type of hardware used for inference (CPU, GPU, TPU, etc.) significantly affects performance. Different hardware is optimized for different types of computations.

- Batching: Processing multiple input data points together in a batch to improve throughput and efficiency. This allows the model to perform computations on multiple inputs simultaneously.

- Quantization: A technique to reduce the precision of the model’s weights (e.g., from 32-bit floating-point to 8-bit integers) to decrease model size and improve inference speed.

- Optimization: Techniques employed to make the model more efficient for inference, such as pruning (removing less important connections) and knowledge distillation (training a smaller “student” model to mimic a larger “teacher” model).
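To make the quantization item concrete, here is a minimal sketch of one common scheme, symmetric per-tensor int8 quantization. The scheme and the helper names `quantize_int8` and `dequantize` are assumptions chosen for the illustration; production toolkits offer several variants (per-channel scales, zero points, calibration).

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using a single scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in quantized]

weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)   # q == [50, -127, 0, 100]
restored = dequantize(q, scale)

# The rounding error per weight is at most half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now fits in one byte instead of four, at the cost of a bounded rounding error.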

Latency: The time delay between providing input to a model and receiving the output. It’s a critical measure of performance, especially for real-time applications.

Importance: Why latency is a critical factor, especially in certain types of applications.

- Real-time Apps: Low latency is an absolute necessity for real-time systems like self-driving cars, robotics, and online gaming, where delays are unacceptable.

- User Experience: Low latency contributes to a better and more responsive user experience in interactive applications.

Factors Affecting Latency: The elements that contribute to latency.

- Model Complexity: The relationship between the complexity of the model (number of layers, parameters, etc.) and its inference time. More complex models usually have higher latency.

- Input Size: How the size of the input data affects processing time. Larger inputs generally require more processing.

- Hardware: The influence of the hardware used for inference on latency. More powerful hardware can reduce latency.

- Network: The role of network latency in distributed systems where the model might be hosted on a remote server.

- Software: The impact of the software and frameworks used for inference on latency. Inefficient code or frameworks can introduce overhead.

Metrics: Ways to measure latency.

- Average Latency: The mean latency over a set of input data points.

- Percentile Latency: A more robust metric than average latency, because it exposes slow outliers that the mean can hide. It represents the latency below which a certain percentage of requests are served (e.g., P99 latency means 99% of requests are served with a latency below that value). This is crucial for ensuring consistent performance.

- Throughput: The number of inferences that can be performed per unit of time. It’s a measure of how many requests the system can handle.
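The three metrics above can be computed from per-request timings. The sketch below uses only the Python standard library; `fake_model` is a hypothetical stand-in for a real model call, and the nearest-rank definition of a percentile is one of several in common use.

```python
import math
import time
from statistics import mean

def percentile(samples, p):
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]

def measure(fn, inputs):
    """Time each call to fn and summarize latency and throughput."""
    latencies = []
    start = time.perf_counter()
    for x in inputs:
        t0 = time.perf_counter()
        fn(x)
        latencies.append(time.perf_counter() - t0)
    total = time.perf_counter() - start
    return {
        "mean_s": mean(latencies),
        "p99_s": percentile(latencies, 99),
        "throughput_per_s": len(inputs) / total,
    }

def fake_model(x):
    """Hypothetical stand-in for a model call; just burns a little CPU."""
    return sum(i * x for i in range(1000))

stats = measure(fake_model, list(range(200)))
```

A single slow request barely moves the mean but shows up clearly in a high percentile: for the samples `[10, 20, 30, 1000]`, the nearest-rank P50 is 20 while the P99 is 1000.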

Optimization Strategies: Techniques to reduce latency.

- Model Optimization: Techniques to optimize the model itself for faster inference, such as pruning, quantization, and knowledge distillation.

- Hardware Acceleration: Using specialized hardware like GPUs, TPUs, or FPGAs to speed up the inference process.

- Software Optimization: Optimizing the software and inference frameworks used to reduce overhead and improve performance.

- Caching: Storing the results of frequent or common inferences to avoid redundant computation. If the same input is received again, the cached result can be returned immediately.

- Asynchronous Processing: Performing inference in the background or concurrently with other tasks to prevent blocking and improve responsiveness.
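As a small illustration of the caching strategy, the sketch below wraps a model call in Python’s built-in `functools.lru_cache`. The `run_model` function and its threshold rule are hypothetical placeholders for an expensive forward pass. Note that inputs must be hashable (here, tuples) for this kind of cache to work.

```python
from functools import lru_cache

# Counts how often the (hypothetical) expensive model actually runs.
calls = {"n": 0}

def run_model(features):
    """Placeholder for an expensive forward pass."""
    calls["n"] += 1
    return sum(features) > 1.0

@lru_cache(maxsize=1024)
def cached_predict(features):
    """Return a cached result when the same input has been seen before."""
    return run_model(features)

first = cached_predict((0.4, 0.9))    # computed by the model
second = cached_predict((0.4, 0.9))   # served from the cache
info = cached_predict.cache_info()
```

Exact-match caching like this only pays off when identical inputs recur, and the cache size (`maxsize`) trades memory for hit rate.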

#Inference #Latency #MachineLearning