Imagine you’re building your dream home. Just about everything is ready. All that’s left to do is pick out a front door. Since the neighborhood has a low crime rate, you decide you want a door with a standard lock: nothing too fancy, but probably enough to deter 99.9% of would-be burglars.

Unfortunately, the local homeowners’ association (HOA) has a rule stating that all front doors in the neighborhood must be bank vault doors. Their reasoning? Bank vault doors are the only doors that have been mathematically proven to be absolutely secure. As far as they’re concerned, any front door below that standard may as well not be there at all.

You’re left with three options, none of which seems particularly appealing:

- Concede defeat and have a bank vault door installed. Not only is this expensive and cumbersome, but you’ll be left with a front door that bogs you down every single time you want to open or close it. At least burglars won’t be a problem!

- Leave your house doorless. The HOA rule imposes requirements on any front door in the neighborhood, but it doesn’t technically forbid you from not installing a door at all. That would save you a lot of time and money. The downside, of course, is that it would allow anyone to come and go as they please. On top of that, the HOA could always close the loophole, taking you back to square one.

- Opt out entirely. Faced with such a stark dilemma (all-in on either security or practicality), you choose not to play the game at all, selling your nearly complete house and looking for somewhere else to live.

This scenario is obviously completely unrealistic. In real life, everybody strives to strike an appropriate balance between security and practicality. This balance is informed by everyone’s own circumstances and risk assessment, but it universally lands somewhere between the two extremes of bank vault door and no door at all.

But what if instead of your dream home, you imagined a medical AI model that has the power to help doctors improve patient outcomes? Highly sensitive training data points from patients are your valuables. The privacy protection measures you take are the front door you choose to install. Healthcare providers and the scientific community are the HOA.

Suddenly, the scenario is much closer to reality. In this article, we’ll explore why that is. After understanding the problem, we’ll consider a simple but empirically effective solution proposed in the paper Reconciling privacy and accuracy in AI for medical imaging [1]. The authors propose a balanced alternative to the three bad choices laid out above, much like the real-life approach of a typical front door.

The State of Patient Privacy in Medical AI

Over the past few years, artificial intelligence has become an ever more ubiquitous part of our day-to-day lives, proving its utility across a wide range of domains. The rising use of AI models has, however, raised questions and concerns about protecting the privacy of the data used to train them. You may remember the well-known case of ChatGPT, just months after its initial release, exposing proprietary code from Samsung [2].

Some of the privacy risks associated with AI models are obvious. For example, if the training data used for a model isn’t stored securely enough, bad actors could find ways to access it directly. Others are more insidious, such as the risk of reconstruction. As the name implies, in a reconstruction attack, a bad actor attempts to reconstruct a model’s training data without needing to gain direct access to the dataset.

Medical records are among the most sensitive kinds of personal information there are. Although specific regulation varies by jurisdiction, patient data is generally subject to stringent safeguards, with hefty fines for inadequate protection. Beyond the letter of the law, unintentionally exposing such data could irreparably damage our ability to use specialized AI to empower medical professionals.

As Ziller, Mueller, Stieger, et al. point out [1], fully taking advantage of medical AI requires rich datasets comprising information from actual patients. This information must be obtained with the full consent of the patient. Ethically acquiring medical data for research was challenging enough as it was before the unique challenges posed by AI came into play. But if proprietary code being exposed caused Samsung to ban the use of ChatGPT [2], what would happen if attackers managed to reconstruct MRI scans and identify the patients they belonged to? Even isolated instances of negligent protection against data reconstruction could end up being a monumental setback for medical AI as a whole.

Tying this back into our front door metaphor, the HOA statute calling for bank vault doors starts to make a little bit more sense. When the cost of a single break-in could be so catastrophic for the entire neighborhood, it’s only natural to want to go to any lengths to prevent one.

Differential Privacy (DP) as a Theoretical Bank Vault Door

Before we discuss what an appropriate balance between privacy and practicality might look like in the context of medical AI, we have to turn our attention to the inherent tradeoff between protecting an AI model’s training data and optimizing for quality of performance. This will set the stage for us to develop a basic understanding of Differential Privacy (DP), the theoretical gold standard of privacy protection.

Although academic interest in training data privacy has increased significantly over the past four years, principles on which much of the conversation is based were pointed out by researchers well before the recent LLM boom, and even before OpenAI was founded in 2015. Though it doesn’t deal with reconstruction per se, the 2013 paper Hacking smart machines with smarter ones [3] demonstrates a generalizable attack methodology capable of accurately inferring statistical properties of machine learning classifiers, noting:

“Although ML algorithms are known and publicly released, training sets may not be reasonably ascertainable and, indeed, may be guarded as trade secrets. While much research has been performed about the privacy of the elements of training sets, […] we focus our attention on ML classifiers and on the statistical information that can be unconsciously or maliciously revealed from them. We show that it is possible to infer unexpected but useful information from ML classifiers.” [3]

Theoretical data reconstruction attacks were described even earlier, in a context not directly pertaining to machine learning. The landmark 2003 paper Revealing information while preserving privacy [4] demonstrates a polynomial-time reconstruction algorithm for statistical databases. (Such databases are intended to provide answers to questions about their data in aggregate while keeping individual data points anonymous.) The authors show that to mitigate the risk of reconstruction, a certain amount of noise needs to be introduced into the data. Needless to say, perturbing the original data in this way, while necessary for privacy, has implications for the quality of the responses to queries, i.e., the accuracy of the statistical database.

In explaining the purpose of DP in the first chapter of their book The Algorithmic Foundations of Differential Privacy [5], Cynthia Dwork and Aaron Roth address this tradeoff between privacy and accuracy:

“[T]he Fundamental Law of Information Recovery states that overly accurate answers to too many questions will destroy privacy in a spectacular way. The goal of algorithmic research on differential privacy is to postpone this inevitability as long as possible. Differential privacy addresses the paradox of learning nothing about an individual while learning useful information about a population.” [5]

The notion of “learning nothing about an individual while learning useful information about a population” is captured by considering two datasets that differ by a single entry (one that includes the entry and one that doesn’t). An (ε, δ)-differentially private querying mechanism is one for which the probability of a given set of outputs being returned when querying one dataset is at most a factor of exp(ε) times the probability when querying the other dataset, plus a small additive slack δ. Denoting the mechanism by M, any set of possible outputs by S, and the two datasets by x and y, we formalize this as [5]:

Pr[M(x) ∈ S] ≤ exp(ε) ⋅ Pr[M(y) ∈ S] + δ

Here, ε is the privacy loss parameter and δ is the failure probability parameter. ε quantifies how much privacy is lost as a result of a query, while a positive δ allows for privacy to fail altogether for a query at a certain (usually very low) probability. Note that ε appears in an exponent, meaning that even slightly increasing it can cause privacy to decay significantly.
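To make the inequality concrete, here is a minimal sketch that checks it by brute force for randomized response, a textbook mechanism that reports a single private bit truthfully with probability exp(ε) / (1 + exp(ε)). The mechanism choice, the function names, and the one-bit "datasets" are illustrative assumptions and do not come from the paper under discussion.

```python
import math
from itertools import chain, combinations

def randomized_response(true_bit: int, epsilon: float) -> dict:
    """Output distribution of randomized response on one private bit.

    The true bit is reported with probability e^eps / (1 + e^eps);
    otherwise the flipped bit is reported.
    """
    p_truth = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    return {true_bit: p_truth, 1 - true_bit: 1.0 - p_truth}

def satisfies_dp(dist_x: dict, dist_y: dict, epsilon: float, delta: float = 0.0) -> bool:
    """Check Pr[M(x) in S] <= exp(eps) * Pr[M(y) in S] + delta for every S, in both directions."""
    outputs = sorted(set(dist_x) | set(dist_y))
    subsets = chain.from_iterable(
        combinations(outputs, k) for k in range(len(outputs) + 1)
    )
    bound = math.exp(epsilon)
    tol = 1e-12  # absorbs floating-point rounding at the exact boundary
    for s in subsets:
        p_x = sum(dist_x.get(o, 0.0) for o in s)
        p_y = sum(dist_y.get(o, 0.0) for o in s)
        if p_x > bound * p_y + delta + tol or p_y > bound * p_x + delta + tol:
            return False
    return True

# Hypothetical neighboring "datasets": the private bit is 1 in x and 0 in y.
dist_x = randomized_response(1, epsilon=1.0)
dist_y = randomized_response(0, epsilon=1.0)

print(satisfies_dp(dist_x, dist_y, epsilon=1.0))  # checked against its own budget
print(satisfies_dp(dist_x, dist_y, epsilon=0.5))  # checked against a stricter budget
```

Enumerating every subset S is only feasible because the output space here has two elements. For real mechanisms the guarantee is established by proof, not by enumeration.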

An important and useful property of DP is composition. Notice that the definition above only applies to cases where we run a single query. The composition property helps us generalize it to cover multiple queries, based on the fact that privacy loss and failure probability accumulate predictably when we compose multiple queries, be they based on the same mechanism or different ones. This accumulation is easily proven to be (at most) linear [5]. What this means is that, rather than considering a privacy loss parameter for one query, we may view ε as a privacy budget that can be spent across a number of queries. For example, when taken together, one query using a (1, 0)-DP mechanism and two queries using a (0.5, 0)-DP mechanism satisfy (2, 0)-DP.
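The budget view can be sketched as simple bookkeeping under basic (linear) composition. The `PrivacyBudget` class below is a hypothetical helper written for this illustration, not part of any DP library. It adds up the ε and δ of each query and refuses a query that would exceed the total.

```python
from dataclasses import dataclass

@dataclass
class PrivacyBudget:
    """Tracks cumulative (epsilon, delta) spend under basic linear composition."""
    epsilon: float
    delta: float = 0.0
    spent_epsilon: float = 0.0
    spent_delta: float = 0.0

    def charge(self, epsilon: float, delta: float = 0.0) -> bool:
        """Record one query's cost; return False (and record nothing) if it would overspend."""
        if (self.spent_epsilon + epsilon > self.epsilon
                or self.spent_delta + delta > self.delta):
            return False
        self.spent_epsilon += epsilon
        self.spent_delta += delta
        return True

# The example from the text: one (1, 0)-DP query and two (0.5, 0)-DP queries.
budget = PrivacyBudget(epsilon=2.0)
results = [budget.charge(1.0), budget.charge(0.5), budget.charge(0.5)]
extra_query_allowed = budget.charge(0.1)  # the (2, 0) budget is already used up

print(results, budget.spent_epsilon, extra_query_allowed)
```

Linear accumulation is an upper bound. Tighter accounting methods exist, but they are outside the scope of this sketch.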

The value of DP comes from the theoretical privacy guarantees it promises. Setting ε = 1 and δ = 0, for example, we find that the probability of any given output occurring when querying dataset x is at most exp(1) = e ≈ 2.718 times the probability of that same output occurring when querying dataset y (and vice versa, since either dataset can play the role of x). Why does this matter? Because the greater the discrepancy between the probabilities of certain outputs occurring, the easier it is to determine the contribution of the individual entry by which the two datasets differ, and the easier it is to ultimately reconstruct that individual entry.

In practice, designing an (ε, δ)-differentially private randomized mechanism entails the addition of random noise drawn from a distribution dependent on ε and δ. The specifics are beyond the scope of this article. Shifting our focus back to machine learning, though, we find that the idea is the same: DP for ML hinges on introducing noise into the training process, which yields robust privacy guarantees in much the same way.
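For intuition only, here is a minimal sketch of one classic noise-adding mechanism, the Laplace mechanism from Dwork and Roth [5], applied to a counting query. It adds Laplace noise with scale sensitivity / ε and gives (ε, 0)-DP. It is not the training procedure used in [1]. The record layout, the `private_count` helper, and the sample data are made up for the example.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential variates."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Noisy count of records matching `predicate` via the Laplace mechanism."""
    true_count = sum(1 for r in records if predicate(r))
    # Adding or removing one record changes a count by at most 1.
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)

# Hypothetical toy records: (patient_id, has_lesion)
records = [(i, i % 3 == 0) for i in range(300)]
rng = random.Random(0)

noisy = private_count(records, lambda r: r[1], epsilon=1.0, rng=rng)
print(f"true count = 100, noisy count = {noisy:.2f}")
```

A smaller ε means a larger noise scale, so each answer reveals less about any single record and is also less accurate.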

Of course, this is where the tradeoff we mentioned comes into play. Adding noise during training comes at the cost of making learning harder. We could absolutely add enough noise to achieve ε = 0.01 and δ = 0, making the difference in output probabilities between x and y virtually nonexistent. This would be wonderful for privacy, but terrible for learning. A model trained with that much noise would perform very poorly on most tasks.
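The cost of a small ε can be seen even without a model. As a toy stand-in (an assumption made for illustration, not an experiment from [1]), the sketch below measures the average absolute error that Laplace noise with scale 1 / ε adds to a single sensitivity-1 query. The error grows in proportion to 1 / ε.

```python
import random
import statistics

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential variates."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def mean_abs_error(epsilon: float, sensitivity: float = 1.0,
                   trials: int = 20000, seed: int = 0) -> float:
    """Empirical mean absolute error added by the Laplace mechanism at a given epsilon."""
    rng = random.Random(seed)
    scale = sensitivity / epsilon
    return statistics.fmean(abs(laplace_noise(scale, rng)) for _ in range(trials))

for eps in (0.01, 1.0, 8.0):
    # The theoretical mean absolute error of Laplace(0, b) is b = sensitivity / eps.
    print(f"eps = {eps}: empirical error = {mean_abs_error(eps):.3f}, theory = {1.0 / eps:.3f}")
```

A count that is off by about 100 on average at ε = 0.01 is useless for most purposes. The same pressure applies, in a more complicated form, to noisy model training.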

There is no consensus on what constitutes a “good” ε value, or on universal methodologies or best practices for ε selection [6]. In many ways, ε embodies the privacy/accuracy tradeoff, and the “proper” value to aim for is highly context-dependent. ε = 1 is generally regarded as offering high privacy guarantees. Although privacy diminishes exponentially with respect to ε, values as high as ε = 32 are mentioned in the literature and thought to provide moderately strong privacy guarantees [1].

The authors of Reconciling privacy and accuracy in AI for medical imaging [1] test the effects of DP on the accuracy of AI models on three real-world medical imaging datasets. They do so using various values of ε and comparing them to a non-private (non-DP) control. Table 1 provides a partial summary of their results for ε = 1 and ε = 8:

Even approaching the higher end of the typical ε values attested in the literature, DP is still as cumbersome as a bank vault door for medical imaging tasks. The noise introduced during training is catastrophic for AI model accuracy, especially when the datasets at hand are small. Note, for example, the huge drop-off in Dice score on the MSD Liver dataset, even with the relatively high ε value of 8.

Ziller, Mueller, Stieger, et al. suggest that the accuracy drawbacks of DP with typical ε values may contribute to the lack of widespread adoption of DP in the field of medical AI [1]. Yes, wanting mathematically provable privacy guarantees is definitely sensible, but at what cost? Leaving so much of the diagnostic power of AI models on the table in the name of privacy is not an easy choice to make.

Revisiting our dream home scenario armed with an understanding of DP, we find that the options we (seem to) have map neatly onto the three we had for our front door.

- DP with typical values of ε is like installing a bank vault door: costly, but effective for privacy. As we’ll see, it’s also complete overkill in this case.

- Not using DP is like not installing a door at all: much easier, but risky. As mentioned above, though, DP has yet to be widely applied in medical AI [1].

- Passing up opportunities to use AI is like giving up and selling the house: it saves us the headache of dealing with privacy concerns weighed against incentives to maximize accuracy, but a lot of potential is lost in the process.

It looks like we’re at an impasse… unless we think outside the box.

High-Budget DP: Privacy and Accuracy Aren’t an Either/Or

In Reconciling privacy and accuracy in AI for medical imaging [1], Ziller, Mueller, Stieger, et al. offer the medical AI equivalent of a regular front door: an approach that manages to protect privacy while giving up very little in the way of model performance. Granted, this protection is not theoretically optimal; far from it. However, as the authors show through a series of experiments, it is good enough to counter almost any realistic threat of reconstruction.

As the saying goes, “Perfect is the enemy of good.” In this case, it is the “optimal” (an insistence on arbitrarily low ε values) that locks us into the false dichotomy of total privacy versus total accuracy. Just as a bank vault door has its place in the real world, so does DP with ε ≤ 32. Still, the existence of the bank vault door doesn’t mean plain old front doors don’t also have a place in the world. The same goes for high-budget DP.

The idea behind high-budget DP is straightforward: using privacy budgets (ε values) that are so high that they “are near-universally shunned as being meaningless” [1], ranging from ε = 10⁶ to as high as ε = 10¹⁵. In theory, these provide such weak privacy guarantees that it seems like common sense to dismiss them as no better than not using DP at all. In practice, though, this couldn’t be further from the truth. As we will see by looking at the results from the paper, high-budget DP shows significant promise in countering realistic threats. As Ziller, Mueller, Stieger, et al. put it [1]:

“[E]ven a ‘pinch of privacy’ has drastic effects in practical scenarios.”
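To see why such budgets are dismissed on paper, it helps to look at the size of the multiplicative factor exp(ε) in the DP definition. The sketch below is plain arithmetic, with a function name chosen for this example. It reports the base-10 logarithm of the factor, because exp(10⁶) is far too large for a floating-point number.

```python
import math

def log10_ratio_bound(epsilon: float) -> float:
    """Base-10 logarithm of exp(epsilon), the worst-case probability ratio under (epsilon, 0)-DP."""
    return epsilon / math.log(10)

for eps in (1, 8, 32, 1e6, 1e9, 1e12, 1e15):
    print(f"eps = {eps:g}: exp(eps) is about 10^{log10_ratio_bound(eps):,.1f}")
```

At ε = 32 the bound is already around 10¹⁴. At ε = 10⁶ it is a number with several hundred thousand digits, so the worst-case guarantee says almost nothing. The protection the paper reports at these budgets has to be measured against weaker, more realistic adversaries.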

First, though, we need to ask ourselves what we consider to be a “realistic” threat. Any discussion of the efficacy of high-budget DP is inextricably tied to the threat model under which we choose to evaluate it. In this context, a threat model is simply the set of assumptions we make about what a bad actor interested in obtaining our model’s training data is able to do.

The paper’s findings hinge on a calibration of the assumptions to better suit real-world threats to patient privacy. The authors argue that the worst-case model, which is the one typically used for DP, is far too pessimistic. For example, it assumes that the adversary has full access to each original image while attempting to reconstruct it based on the AI model (see Table 2) [1]. This pessimism explains the discrepancy between the reported “drastic effects in practical scenarios” of high privacy budgets and the very weak theoretical privacy guarantees that they offer. We may liken it to incorrectly assessing the security threats a typical house faces, wrongly assuming they are likely to be as sophisticated and enduring as those faced by a bank.

The authors therefore propose two alternative threat models, which they call the “relaxed” and “realistic” models. Under both of these, adversaries keep some core capabilities from the worst-case model: access to the AI model’s architecture and weights, the ability to manipulate its hyperparameters, and unbounded computational abilities (see Table 2). The realistic adversary is assumed to have no access to the original images and an imperfect reconstruction algorithm. Even these assumptions leave us with a rigorous threat model that may still be considered pessimistic for most real-world scenarios [1].

Having established the three relevant threat models to consider, Ziller, Mueller, Stieger, et al. compare AI model accuracy alongside the reconstruction risk under each threat model at different values of ε. As we saw in Table 1, this is done for three exemplary medical imaging datasets. Their full results are presented in Table 3:

Unsurprisingly, high privacy budgets (exceeding ε = 10⁶) significantly mitigate the loss of accuracy seen with lower (stricter) privacy budgets. Across all tested datasets, models trained with high-budget DP at ε = 10⁹ (HAM10000, MSD Liver) or ε = 10¹² (RadImageNet) perform nearly as well as their non-privately trained counterparts. This is in line with our understanding of the privacy/accuracy tradeoff: the less noise introduced during training, the better a model can learn.

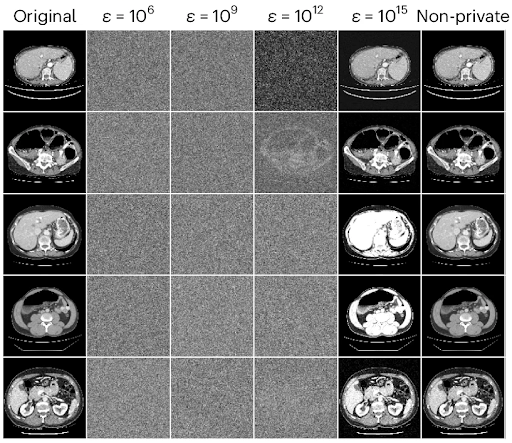

What is surprising is the degree of empirical protection afforded by high-budget DP against reconstruction under the realistic threat model. Remarkably, the realistic reconstruction risk is assessed to be 0% for each of the aforementioned models. The high efficacy of high-budget DP in protecting medical AI training images against realistic reconstruction attacks is made even clearer by looking at the results of reconstruction attempts. Figure 1 below shows the five most readily reconstructed images from the MSD Liver dataset [9] using DP with high privacy budgets of ε = 10⁶, ε = 10⁹, ε = 10¹², and ε = 10¹⁵.

Note that, at least to the naked eye, even the best reconstructions obtained when using the first two budgets (ε = 10⁶ and ε = 10⁹) are visually indistinguishable from random noise. This lends intuitive credence to the argument that budgets often deemed too high to provide any meaningful protection can be instrumental in protecting privacy without giving up accuracy when using AI for medical imaging. In contrast, the reconstructions when using ε = 10¹⁵ closely resemble the original images, showing that not all high budgets are created equal.

Based on their findings, Ziller, Mueller, Stieger, et al. make the case for training medical imaging AI models using (at least) high-budget DP as the norm. They note the empirical efficacy of high-budget DP in countering realistic reconstruction risks at very little cost in terms of model accuracy. The authors go so far as to claim that “it seems negligent to train AI models without any form of formal privacy guarantee.” [1]

Conclusion

We started with a hypothetical scenario in which you were forced to decide between a bank vault door or no door at all for your dream home (or giving up and selling the unfinished house). After an exploration of the risks posed by inadequate privacy protection in medical AI, we looked into the privacy/accuracy tradeoff as well as the history and theory behind reconstruction attacks and differential privacy (DP). We then saw how DP with common privacy budgets (ε values) degrades medical AI model performance and compared it to the bank vault door in our hypothetical.

Finally, we examined empirical results from the paper Reconciling privacy and accuracy in AI for medical imaging to find out how high-budget differential privacy can be used to escape the false dichotomy of bank vault door vs. no door and protect patient privacy in the real world without sacrificing model accuracy in the process.


References

[1] Ziller, A., Mueller, T.T., Stieger, S. et al. Reconciling privacy and accuracy in AI for medical imaging. Nat Mach Intell 6, 764–774 (2024). https://doi.org/10.1038/s42256-024-00858-y.

[2] Ray, S. Samsung bans ChatGPT and other chatbots for employees after sensitive code leak. Forbes (2023). https://www.forbes.com/sites/siladityaray/2023/05/02/samsung-bans-chatgpt-and-other-chatbots-for-employees-after-sensitive-code-leak/.

[3] Ateniese, G., Mancini, L. V., Spognardi, A. et al. Hacking smart machines with smarter ones: how to extract meaningful data from machine learning classifiers. International Journal of Security and Networks 10, 137–150 (2015). https://doi.org/10.48550/arXiv.1306.4447.

[4] Dinur, I. & Nissim, K. Revealing information while preserving privacy. Proc. 22nd ACM SIGMOD-SIGACT-SIGART Symp Principles Database Syst 202–210 (2003). https://doi.org/10.1145/773153.773173.

[5] Dwork, C. & Roth, A. The algorithmic foundations of differential privacy. Foundations and Trends in Theoretical Computer Science 9, 211–407 (2014). https://doi.org/10.1561/0400000042.

[6] Dwork, C., Kohli, N. & Mulligan, D. Differential privacy in practice: expose your epsilons! Journal of Privacy and Confidentiality 9 (2019). https://doi.org/10.29012/jpc.689.

[7] Mei, X., Liu, Z., Robson, P.M. et al. RadImageNet: an open radiologic deep learning research dataset for effective transfer learning. Radiol Artif Intell 4.5, e210315 (2022). https://doi.org/10.1148/ryai.210315.

[8] Tschandl, P., Rosendahl, C. & Kittler, H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci Data 5, 180161 (2018). https://doi.org/10.1038/sdata.2018.161.

[9] Antonelli, M., Reinke, A., Bakas, S. et al. The Medical Segmentation Decathlon. Nat Commun 13, 4128 (2022). https://doi.org/10.1038/s41467-022-30695-9.