For years, autoregressive models (ARMs) have dominated the field of large language models (LLMs). However, a new study challenges this paradigm by introducing a diffusion-based alternative called LLaDA. This article walks through the paper "Large Language Diffusion Models" step by step, explaining how LLaDA works, what its advantages are, and why it could redefine the future of AI.

Autoregressive models predict the next token sequentially, conditioning only on the tokens to the left. This makes them effective but limited in certain areas, such as bidirectional reasoning and parallel generation. LLaDA instead uses a masked diffusion approach. Rather than generating text one token at a time, it predicts all masked tokens simultaneously and repeats this over several steps, refining its predictions iteratively.
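The difference in conditioning can be written out explicitly. The notation below is a sketch using common conventions (a sequence $x$ of length $L$, a partially masked sequence $x_t$, and $\mathrm{M}$ for the mask token), not a reproduction of the paper's full derivation:

```latex
% Autoregressive factorization: token i is predicted from tokens 1..i-1 only
p_\theta(x) = \prod_{i=1}^{L} p_\theta\left(x^{i} \mid x^{1}, \dots, x^{i-1}\right)

% Masked diffusion: each masked position i is predicted from the whole,
% partially masked sequence x_t, i.e. from both left and right context
p_\theta\left(x_0^{i} \mid x_t\right), \qquad \text{for every } i \text{ with } x_t^{i} = \mathrm{M}
```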

LLaDA follows a two-step process:

- Forward Process: Text is progressively masked, with each token masked independently at a ratio that ranges from 0 (clean text) to 1 (fully masked).

- Reverse Process: A Transformer (the mask predictor) predicts the masked tokens from the surrounding context, gradually recovering text starting from a fully masked sequence.
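The forward process is simple enough to sketch directly. In the paper, a masking ratio t is drawn uniformly and each token is then replaced by the mask token independently with probability t. The minimal sketch below assumes whitespace tokens and an illustrative `"[MASK]"` string; the names `forward_mask` and `sample_training_input` are hypothetical, not taken from the authors' code.

```python
import random

MASK = "[MASK]"  # illustrative name for the special mask token M

def forward_mask(tokens, t, rng):
    """Forward process: mask each token independently with probability t."""
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    return [MASK if rng.random() < t else tok for tok in tokens]

def sample_training_input(tokens, rng):
    """Draw a masking ratio t uniformly and mask the sequence accordingly."""
    t = 1.0 - rng.random()  # in (0, 1]; t = 0 would leave nothing to predict
    return t, forward_mask(tokens, t, rng)

rng = random.Random(0)
x0 = "the cat sat on the mat".split()
print(forward_mask(x0, 0.0, rng))  # t = 0: nothing is masked
print(forward_mask(x0, 1.0, rng))  # t = 1: every token is masked
t, xt = sample_training_input(x0, rng)
print(f"t={t:.2f}", xt)            # on average, a fraction t of tokens is masked
```

Because every ratio between "almost clean" and "fully masked" is sampled during training, the same model learns to fill in gaps at every noise level it will meet while generating.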

By optimizing a bound on the likelihood, LLaDA provides a principled generative approach to modeling language data. This allows it to scale effectively and reach performance competitive with conventional ARMs.
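In the paper, the training objective is a cross-entropy computed only on the masked positions and scaled by 1/t, which upper-bounds the negative log-likelihood of the data. The sketch below estimates this loss for a single sample. `predict_prob` is a hypothetical stand-in for the Transformer's output probabilities, and the uniform predictor in the usage example is purely illustrative.

```python
import math

MASK = "[MASK]"

def masked_diffusion_loss(x0, xt, t, predict_prob):
    """Single-sample estimate of a LLaDA-style masked diffusion loss.

    x0: clean tokens; xt: the same sequence after masking with ratio t.
    predict_prob(xt, i, token): hypothetical model probability that
    position i of xt holds `token`, given the whole masked sequence xt.
    """
    if not 0.0 < t <= 1.0:
        raise ValueError("t must lie in (0, 1]")
    nll = 0.0
    for i, (clean, noisy) in enumerate(zip(x0, xt)):
        if noisy == MASK:  # only masked positions contribute to the loss
            nll -= math.log(predict_prob(xt, i, clean))
    return nll / t  # 1/t weighting of the masked-token cross-entropy

def uniform_predictor(xt, i, token, vocab_size=10):
    """Illustrative baseline: every token in a 10-word vocabulary is equally likely."""
    return 1.0 / vocab_size

x0 = "the cat sat on the mat".split()
xt = ["the", MASK, "sat", "on", MASK, "mat"]
print(masked_diffusion_loss(x0, xt, 0.5, uniform_predictor))  # 4 * ln(10), about 9.21
```

Two masked tokens each cost ln(10) under the uniform predictor, and dividing by t = 0.5 doubles the total. Lightly masked samples (small t) get a larger weight, which compensates for the fact that they contain few masked positions.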

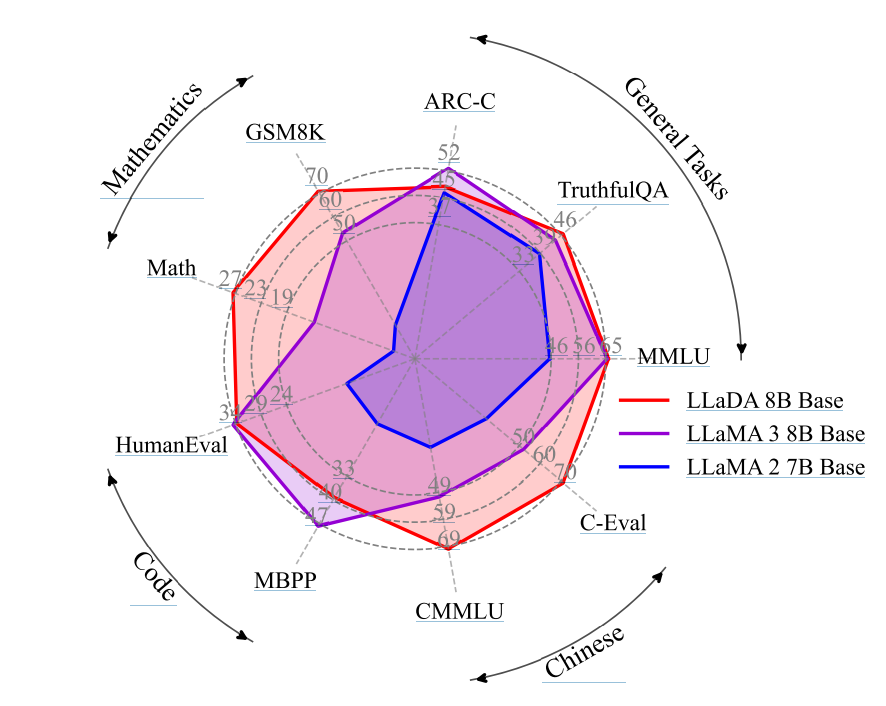

LLaDA has been evaluated across multiple benchmarks and compared with autoregressive models such as LLaMA 3 and GPT-4o. Key findings include:

- Scalability: LLaDA 8B performs comparably to LLaMA 3 8B, showing that diffusion models can scale effectively.

- In-Context Learning: LLaDA excels at zero- and few-shot tasks, surpassing LLaMA 2 7B.

- Instruction-Following: After supervised fine-tuning (SFT), LLaDA demonstrates impressive conversational abilities.

- Reversal Reasoning: Unlike conventional ARMs, LLaDA effectively breaks the ‘reversal curse,’ outperforming even GPT-4o on a reversal poem completion task.

These results challenge the assumption that ARMs are the only viable architecture for LLMs. LLaDA's success suggests that diffusion models can be a strong alternative, offering benefits such as:

- Bidirectional Context Utilization: Unlike ARMs, LLaDA can generate words based on both left and right context.

- Parallel Processing: Instead of generating words sequentially, it can predict multiple words at once, which opens the door to speed improvements.

- Better Handling of Complex Reasoning Tasks: Tasks that require non-sequential reasoning, such as completing text in reverse order, benefit from LLaDA's approach.
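Both bidirectional context and parallel prediction come together at sampling time. Generation starts from a fully masked sequence. At each step, the model predicts every masked position in one pass, then remasks part of those predictions; one strategy the paper describes, low-confidence remasking, keeps the most confident predictions. The sketch below assumes an even schedule (the same share of tokens unmasked per step), and `toy_predictor` is a hypothetical stand-in that looks answers up in a fixed target but is more confident when both neighbours are already visible. A real mask predictor would be a Transformer without a causal mask.

```python
import math

MASK = "[MASK]"
TARGET = "the cat sat on the mat".split()  # toy "ground truth" for the demo

def toy_predictor(x, i):
    """Hypothetical mask predictor returning (token, confidence) for position i.

    Confidence grows when the neighbours on BOTH sides are already unmasked,
    mimicking the use of left and right context.
    """
    left = i > 0 and x[i - 1] != MASK
    right = i < len(x) - 1 and x[i + 1] != MASK
    return TARGET[i], 0.5 + 0.2 * left + 0.2 * right

def sample(length, steps, predictor):
    """Iterative parallel decoding with low-confidence remasking (sketch)."""
    if steps < 1:
        raise ValueError("steps must be >= 1")
    x = [MASK] * length
    passes = 0
    for s in range(steps):
        masked = [i for i, tok in enumerate(x) if tok == MASK]
        if not masked:
            break
        passes += 1
        # One model call predicts every masked position at once.
        preds = {i: predictor(x, i) for i in masked}
        # Even schedule (assumed): unmask enough to finish within `steps`.
        k = math.ceil(len(masked) / (steps - s))
        # Keep the k most confident predictions; the rest stay masked.
        keep = sorted(masked, key=lambda i: preds[i][1], reverse=True)[:k]
        for i in keep:
            x[i] = preds[i][0]
    return x, passes

tokens, passes = sample(len(TARGET), steps=3, predictor=toy_predictor)
print(" ".join(tokens), passes)  # the cat sat on the mat 3
```

Six tokens are produced in three model calls rather than the six an autoregressive decoder would need. The step count is a knob that trades the number of calls against how many tokens must be committed per call.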

Despite its advantages, LLaDA is not without challenges:

- Computational Cost: Diffusion models require significantly more computation than ARMs.

- Inference Efficiency: The sampling process is currently slower than autoregressive decoding.

- Scaling Constraints: Although LLaDA has been scaled to 8B parameters, larger-scale training is needed to match top-tier models like GPT-4.
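A rough count helps reconcile "parallel processing" with "slower sampling". The sketch below assumes an autoregressive model with a KV cache (the prompt is encoded once, then one new position per generated token) and a masked diffusion sampler without such a cache, so each sampling step reprocesses the full prompt plus answer. Counting sequence positions is a deliberately crude proxy that ignores attention cost per position and hardware parallelism, and the function names and sizes are illustrative.

```python
def positions_processed_arm(prompt_len, gen_len):
    """Autoregressive decoding with a KV cache (assumed): the prompt is
    encoded once, then each generated token adds one new position."""
    return prompt_len + gen_len

def positions_processed_diffusion(prompt_len, gen_len, steps):
    """Masked-diffusion sampling without a KV cache (assumed): every
    sampling step runs the model over the full prompt + answer."""
    return steps * (prompt_len + gen_len)

prompt, answer = 100, 256
print(positions_processed_arm(prompt, answer))             # 356
print(positions_processed_diffusion(prompt, answer, 256))  # 91136
print(positions_processed_diffusion(prompt, answer, 64))   # 22784
```

Under these assumptions, the diffusion sampler makes fewer model calls whenever it uses fewer steps than answer tokens, yet each call covers the whole sequence, so total work scales with the number of steps. Reducing steps is therefore the main lever for faster sampling.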

Future work will focus on optimizing inference speed, refining alignment techniques, and integrating reinforcement learning methods to improve instruction-following abilities.

LLaDA represents a groundbreaking shift in how we think about language modeling. By showing that diffusion-based models can achieve results comparable to strong ARMs of similar size, this research paves the way for a new generation of LLMs that could overcome current limitations. While challenges remain, LLaDA's success signals that the future of AI may not be exclusively autoregressive; it may also be diffusive.