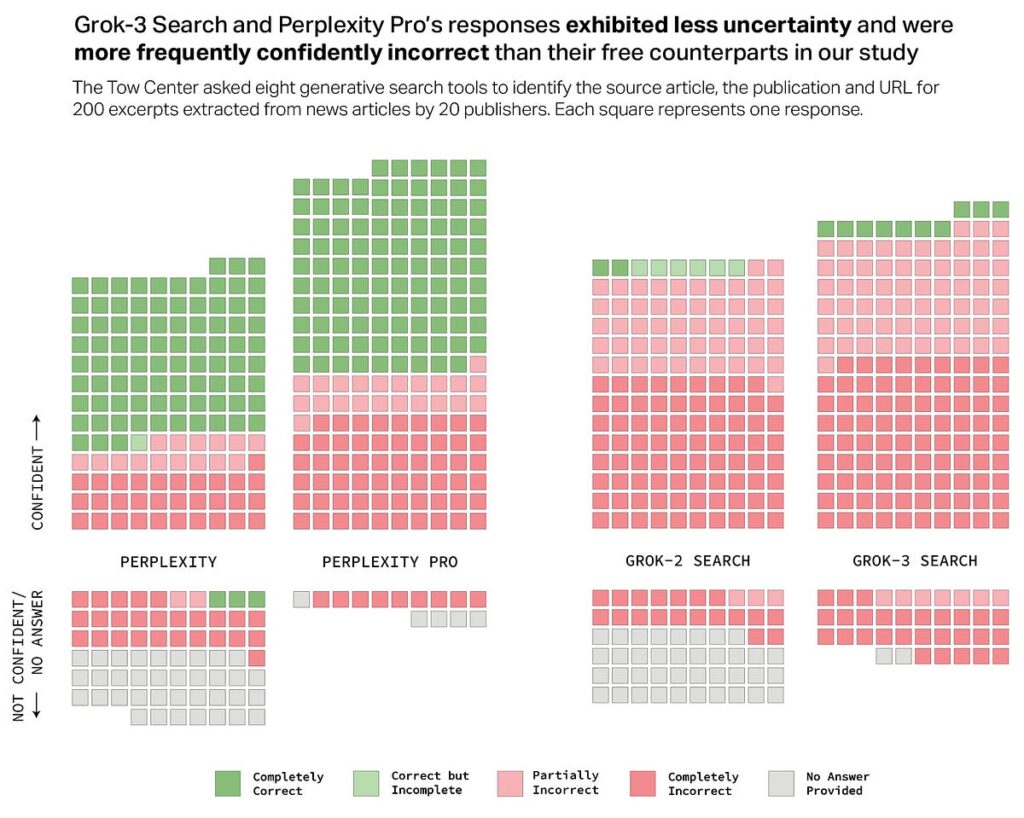

The Tow Center asked eight generative search tools to identify the source article, the publication, and the URL for 200 excerpts extracted from news articles by 20 publishers. Each response was evaluated on its accuracy and confidence level.

The test was designed to be easy!

Researchers deliberately chose excerpts that could be easily found in Google, with the original source appearing in the first three results. Yet the results were deeply concerning:

- Fewer than 40% of AI-generated search responses were correct on average

- The most accurate system, Perplexity, achieved only 63% accuracy

- X’s Grok-3 performed dismally with just 6% accuracy

This real-world demonstration shows how frontier AI models confidently provide incorrect information about easily verifiable facts.

One of the most counterintuitive flaws in today’s AI search tools is their overconfidence.

Ask them to find information, and you expect one of two things: either correct attribution or an admission of uncertainty. But most models don’t do that. Instead, they confidently generate completely incorrect answers, as if they were facts.

The Tow Center study found several troubling patterns:

- Premium chatbots provided more confidently incorrect answers than their free counterparts

- Most systems failed to decline answering when they couldn’t find accurate information

- AI tools routinely fabricated links and cited syndicated versions instead of originals
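To make the accuracy and confidence scoring concrete, here is a minimal sketch of how such a tally could be computed per tool. It is not the study's actual methodology: the `Response` fields, the `summarize` helper, and the sample data are all assumptions made up for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Response:
    tool: str                # hypothetical tool name
    correct: bool            # did the answer match the source article, publication, and URL?
    confident: bool          # was the answer stated without hedging?
    declined: bool = False   # did the tool say it could not find the source?


def summarize(responses):
    """Return accuracy, confidently-wrong rate, and decline rate per tool."""
    totals, right, confident_wrong, declined = Counter(), Counter(), Counter(), Counter()
    for r in responses:
        totals[r.tool] += 1
        if r.declined:
            declined[r.tool] += 1
        elif r.correct:
            right[r.tool] += 1
        elif r.confident:
            confident_wrong[r.tool] += 1
    return {
        tool: {
            "accuracy": right[tool] / n,
            "confidently_wrong": confident_wrong[tool] / n,
            "declined": declined[tool] / n,
        }
        for tool, n in totals.items()
    }


# Made-up sample data, for illustration only (not the study's results).
sample = [
    Response("tool_a", correct=True, confident=True),
    Response("tool_a", correct=False, confident=True),
    Response("tool_a", correct=False, confident=False),
    Response("tool_a", correct=False, confident=False, declined=True),
]
report = summarize(sample)
```

Tracking "confidently wrong" separately from plain accuracy matters here, because two tools with the same accuracy can differ sharply in how often they present a wrong answer as fact.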

Think about this for a moment:

The majority of what AI search tells you could be wrong, but you’d never know it from the confident presentation.

The research identified additional concerning behaviors that raise serious questions about these tools:

- Several chatbots bypassed Robot Exclusion Protocol preferences

- Content licensing deals with news sources provided no guarantee of accurate citation

- Several tools showed patterns of inventing information rather than admitting uncertainty
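For readers unfamiliar with the Robot Exclusion Protocol, the sketch below shows how a well-behaved crawler could check a publisher's `robots.txt` preferences before fetching a page, using Python's standard `urllib.robotparser`. The robots.txt contents, the `ExampleAIBot` user agent, and the `publisher.example` URL are hypothetical; the study does not describe how any specific tool handles these rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an imaginary publisher:
# one AI crawler is blocked, everyone else is allowed.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def may_fetch(user_agent, url, robots_txt=ROBOTS_TXT):
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


ai_bot_allowed = may_fetch("ExampleAIBot", "https://publisher.example/news/story")
other_bot_allowed = may_fetch("SomeOtherBot", "https://publisher.example/news/story")
```

The protocol is a stated preference rather than an access control: nothing technically stops a crawler from skipping this check, which is why bypassing it is a question of trust.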

These aren’t just theoretical concerns. The risks will far outweigh the rewards if businesses and consumers don’t understand these limitations before relying on AI search.

If the simple task of finding and attributing a direct quote proves so challenging for today’s leading AI search tools, how can we trust them with more complex information retrieval tasks?

For businesses, this isn’t a hypothetical concern; it’s a major liability. If your AI search tool confidently fabricates information about financial insights, medical recommendations, or policy decisions, the consequences could be catastrophic.

For AI search to truly move from hype to reality, it needs more than just massive datasets and high-speed inference. It needs integrity.

At Riafy, we try to solve these problems at the core. We built R10, a production-ready AI system, by fine-tuning Google’s Gemini, not just to be intelligent, but to be reliable and ethically responsible in information retrieval.

Here’s what makes R10 different:

- Multi-Perspective Intelligence: It considers context and ethics before responding, avoiding bias and improving factual accuracy.

- Acknowledging Uncertainty: If it doesn’t know an answer, it says so instead of making something up.

- Enhanced Security Measures: It’s designed to recognize the limits of its knowledge and present information with appropriate confidence levels.

This approach means that businesses using R10 don’t just get an AI that generates text; they get an AI that incorporates ethical considerations and acknowledges its limitations.

The world doesn’t need another AI that hallucinates facts, generates harmful content, or fakes confidence. It needs AI that recognizes its own limitations, makes decisions with appropriate caution, and truly earns user trust.

That’s exactly what we’re building at Riafy.

And if AI is going to define the future of information retrieval, let’s make sure it’s a future we can trust.