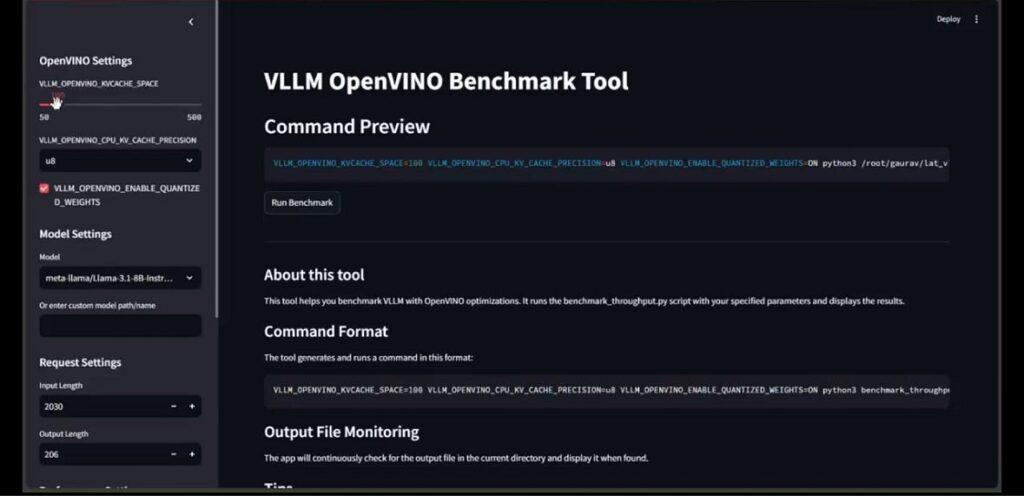

I was running vLLM with OpenVINO on several models to get benchmarking results. Doing this manually every time was getting repetitive, so I decided to automate the process!

I built a simple Streamlit app that lets you tweak parameters like:

🔹 Model

🔹 KV cache size

🔹 Quantized vs. unquantized

🔹 Data type

🔹 Input & output length

🔹 Prefill chunking strategy

…and more.

Once you run it, you can see all the results on your screen right away.
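To give a rough idea of how parameters like these could be turned into a benchmark run, here is a minimal sketch. It is not the app's actual code. The `BenchmarkConfig` class, the helper names, and the script path `benchmarks/benchmark_throughput.py` are assumptions for illustration. The environment variables and flags follow what I understand the vLLM OpenVINO backend and its throughput benchmark to accept, so check them against the vLLM version you have installed.

```python
import os
from dataclasses import dataclass


@dataclass
class BenchmarkConfig:
    """Hypothetical container for the values picked in the UI."""
    model: str
    kv_cache_gb: int           # KV cache size in GB
    quantized_weights: bool    # quantized vs. unquantized
    dtype: str                 # e.g. "bfloat16"
    input_len: int
    output_len: int
    chunked_prefill: bool
    max_batched_tokens: int = 256  # only used when chunked prefill is on


def build_env(cfg: BenchmarkConfig) -> dict:
    """Return a copy of the environment with OpenVINO backend settings.

    Assumption: the backend reads these VLLM_OPENVINO_* variables.
    """
    env = dict(os.environ)
    env["VLLM_OPENVINO_KVCACHE_SPACE"] = str(cfg.kv_cache_gb)
    if cfg.quantized_weights:
        env["VLLM_OPENVINO_ENABLE_QUANTIZED_WEIGHTS"] = "ON"
    else:
        # Leave the variable unset rather than guessing an "off" value.
        env.pop("VLLM_OPENVINO_ENABLE_QUANTIZED_WEIGHTS", None)
    return env


def build_command(cfg: BenchmarkConfig,
                  script: str = "benchmarks/benchmark_throughput.py") -> list:
    """Build the argument list for one benchmark run (script path is assumed)."""
    cmd = [
        "python", script,
        "--model", cfg.model,
        "--dtype", cfg.dtype,
        "--input-len", str(cfg.input_len),
        "--output-len", str(cfg.output_len),
    ]
    if cfg.chunked_prefill:
        cmd += ["--enable-chunked-prefill",
                "--max-num-batched-tokens", str(cfg.max_batched_tokens)]
    return cmd
```

In a Streamlit app, the fields of `BenchmarkConfig` would typically be filled from widgets such as `st.selectbox` and `st.number_input`, and the command would be launched with `subprocess.run(build_command(cfg), env=build_env(cfg), capture_output=True, text=True)` so the output can be shown on the page.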

This is just a first draft, and I’ll keep improving it over time. Right now, it works with OpenVINO on Intel hardware. Later, I may add IPEX as an option, which could leverage the AMX capabilities of the architecture.

The next step would be to examine the optimizations used in vLLM in detail, at both the model level and the inference server level.