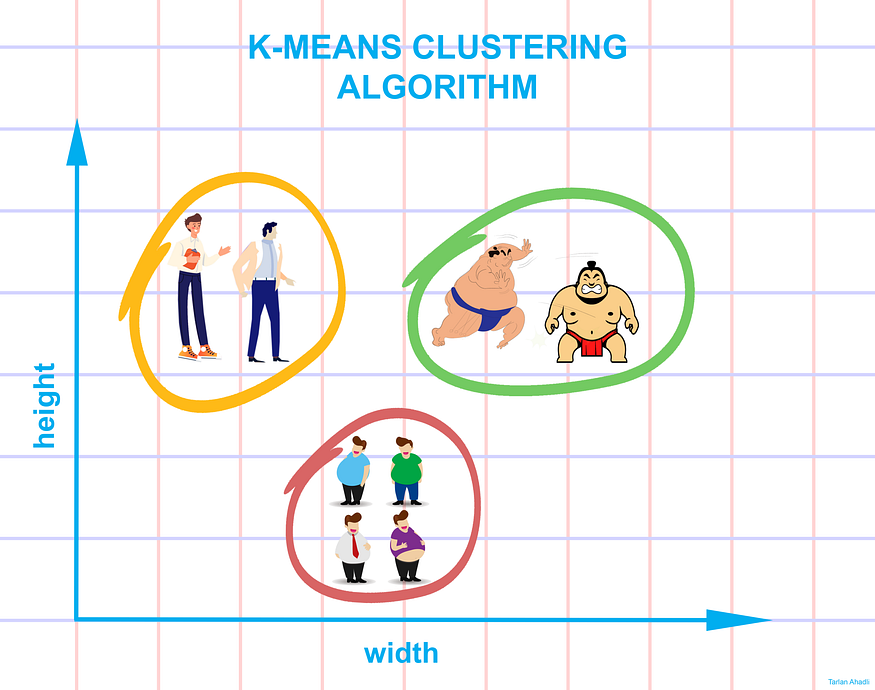

K-means is a data clustering method for unsupervised machine learning that can separate unlabeled data into a predetermined number of disjoint groups of equal variance (clusters) based on their similarities.

It’s a popular algorithm thanks to its ease of use and speed on large datasets. In this blog post, we look at its underlying principles, use cases, as well as benefits and limitations.

K-means is an iterative algorithm that splits a dataset into non-overlapping subgroups called clusters. The number of clusters created is determined by the value of k, a hyperparameter that’s chosen before running the algorithm.

First, the algorithm selects k initial points, where k is the value provided to the algorithm.

Each of these serves as an initial centroid for a cluster: a real or imaginary point that represents a cluster’s center. Then every other point in the dataset is assigned to the centroid that’s closest to it by distance.
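
To make the assignment step concrete, here is a minimal sketch in plain Python. It assumes Euclidean distance (the post only says "by distance"), and the function name `assign_to_nearest` and the sample points are made up for illustration.

```python
import math

def assign_to_nearest(points, centroids):
    """Return, for each point, the index of its closest centroid.

    Assumption: "closest" means smallest Euclidean distance.
    """
    labels = []
    for p in points:
        distances = [math.dist(p, c) for c in centroids]
        labels.append(distances.index(min(distances)))
    return labels

# Four toy points and two centroids picked from among them.
points = [(1.0, 1.0), (1.5, 2.0), (8.0, 8.0), (9.0, 9.5)]
centroids = [(1.0, 1.0), (9.0, 9.5)]
labels = assign_to_nearest(points, centroids)
print(labels)  # [0, 0, 1, 1]
```

The first two points land in cluster 0 and the last two in cluster 1, because each is far closer to one centroid than to the other.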

After that, we recalculate the locations of the centroids. The coordinate of the centroid is the mean value of all points of the cluster. You can use different mean functions for this, but a commonly used one is the arithmetic mean (the sum of all points, divided by the number of points).
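
A rough sketch of the recalculation with the arithmetic mean could look like this. The name `update_centroids` is hypothetical, and the sketch assumes every cluster has at least one point; empty clusters need extra handling that is left out here.

```python
def update_centroids(points, labels, k):
    """Return the arithmetic mean of the points assigned to each of k clusters.

    Assumption: no cluster is empty (an empty one would divide by zero).
    """
    dims = len(points[0])
    sums = [[0.0] * dims for _ in range(k)]
    counts = [0] * k
    for p, label in zip(points, labels):
        counts[label] += 1
        for d in range(dims):
            sums[label][d] += p[d]
    # Divide each coordinate sum by the number of points in that cluster.
    return [tuple(s / counts[i] for s in sums[i]) for i in range(k)]

points = [(1.0, 1.0), (3.0, 3.0), (10.0, 10.0)]
labels = [0, 0, 1]
new_centroids = update_centroids(points, labels, k=2)
print(new_centroids)  # [(2.0, 2.0), (10.0, 10.0)]
```

Note that the new centroid of cluster 0, (2.0, 2.0), is not one of the data points. That is what "imaginary point" means in practice.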

Once we have recalculated the centroid locations, we can reassign the points to the clusters based on their distance to the new locations.

The reassignment of points and the recalculation of centroids are repeated until a stopping condition has been satisfied.

Some common stopping conditions for k-means clustering are:

- The centroids don’t change location anymore.

- The data points don’t change clusters anymore.

- Finally, we can also terminate training after a set number of iterations.
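
The three conditions above can be combined into a single check. The sketch below is only one way to do it: the `tolerance` parameter is our own addition (centroids rarely stop moving exactly in floating-point arithmetic), and the function name and defaults are hypothetical.

```python
import math

def should_stop(old_centroids, new_centroids, old_labels, new_labels,
                iteration, max_iterations=100, tolerance=1e-6):
    """Return True if any of the three stopping conditions holds."""
    # 1. No centroid moved by more than `tolerance` (an assumed threshold).
    centroids_settled = all(
        math.dist(old, new) <= tolerance
        for old, new in zip(old_centroids, new_centroids)
    )
    # 2. No data point changed cluster.
    labels_settled = old_labels == new_labels
    # 3. The iteration budget is used up.
    out_of_budget = iteration >= max_iterations
    return centroids_settled or labels_settled or out_of_budget

# Centroid moved and a point switched cluster: keep going.
keep_going = should_stop([(0.0, 0.0)], [(1.0, 1.0)], [0], [1], iteration=1)
# Same situation, but the iteration budget is exhausted: stop.
budget_hit = should_stop([(0.0, 0.0)], [(1.0, 1.0)], [0], [1], iteration=100)
print(keep_going, budget_hit)  # False True
```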

To sum up, the process consists of the following steps:

1. Provide the number of clusters (k) the algorithm must generate.

2. Randomly select k data points and assign each as the centroid of a cluster.

3. Assign each data point to the cluster of its nearest centroid.

4. Compute the centroids of the resulting clusters.

5. Repeat steps 3 and 4 until you reach a stopping condition.
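
Put together, the steps above fit in a few lines of plain Python. Treat this as a sketch under stated assumptions rather than a reference implementation: it uses Euclidean distance, seeds the random choice so runs are repeatable, stops when no point changes cluster or after `max_iterations`, and keeps the old centroid if a cluster ends up empty. The name `k_means` and its parameters are our own.

```python
import math
import random

def k_means(points, k, max_iterations=100, seed=0):
    """Cluster `points` (a list of equal-length tuples) into k groups."""
    rng = random.Random(seed)
    # Step 2: pick k data points as the initial centroids.
    centroids = rng.sample(points, k)
    labels = None
    for _ in range(max_iterations):
        # Step 3: assign every point to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda i: math.dist(p, centroids[i]))
            for p in points
        ]
        # Stopping condition: no point changed cluster.
        if new_labels == labels:
            break
        labels = new_labels
        # Step 4: move each centroid to the mean of its assigned points.
        for i in range(k):
            members = [p for p, label in zip(points, labels) if label == i]
            if members:  # assumption: an empty cluster keeps its old centroid
                centroids[i] = tuple(sum(c) / len(members) for c in zip(*members))
    return centroids, labels

points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5),
          (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
centroids, labels = k_means(points, k=2)
print(centroids, labels)
```

On this toy dataset the first three points end up in one cluster and the last three in the other. Which of the two gets label 0 depends on the random seed.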

The end result of the algorithm depends on the number of clusters (k) that’s selected before running the algorithm. However, choosing the right k can be hard, with options varying based on the dataset and the user’s desired clustering resolution.

The smaller the clusters, the more homogeneous the data in each cluster. Increasing the k value leads to a reduced error rate in the resulting clustering. However, a big k can also lead to more calculation and model complexity. So we need to strike a balance between too many clusters and too few.

The most popular heuristic for this is the elbow method.

Below you can see a graphical representation of the elbow method. We calculate the variance explained by different k values while looking for an “elbow”: a value after which higher k values don’t influence the results significantly. This will be the best k value to use.

Most commonly, Within-Cluster Sum of Squares (WCSS) is used as the metric for explained variance in the elbow method. It is the sum of the squared distances from each point to the centroid of the cluster that point belongs to.
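
As a small illustration, WCSS can be computed directly from the points, the centroids, and the cluster labels. The function name `wcss` and the toy data are made up, and the two clusterings are written out by hand rather than produced by running k-means, so that the numbers are easy to check.

```python
def wcss(points, centroids, labels):
    """Sum of squared Euclidean distances from each point to its own centroid."""
    total = 0.0
    for p, label in zip(points, labels):
        c = centroids[label]
        total += sum((a - b) ** 2 for a, b in zip(p, c))
    return total

# Two visibly separate groups of two points each.
points = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0), (10.0, 2.0)]

# k = 1: a single centroid at the overall mean.
wcss_k1 = wcss(points, [(5.0, 1.0)], [0, 0, 0, 0])
# k = 2: one centroid at the mean of each group.
wcss_k2 = wcss(points, [(0.0, 1.0), (10.0, 1.0)], [0, 0, 1, 1])
print(wcss_k1, wcss_k2)  # 104.0 4.0
```

Going from k = 1 to k = 2 cuts WCSS from 104 to 4, while any further increase in k can only remove the remaining 4. That sharp drop followed by a flat tail is the "elbow" we look for.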

So, that was the gist of clustering and how clustering can be done with the K-means algorithm. I hope I was able to give you a general introduction to one of the simplest unsupervised learning methods. Thanks for your time. Hope you enjoyed this article. 😁