Auto-Tuning Large Language Models with Amazon SageMaker: A Deep Dive into LLMOps Optimization

Introduction

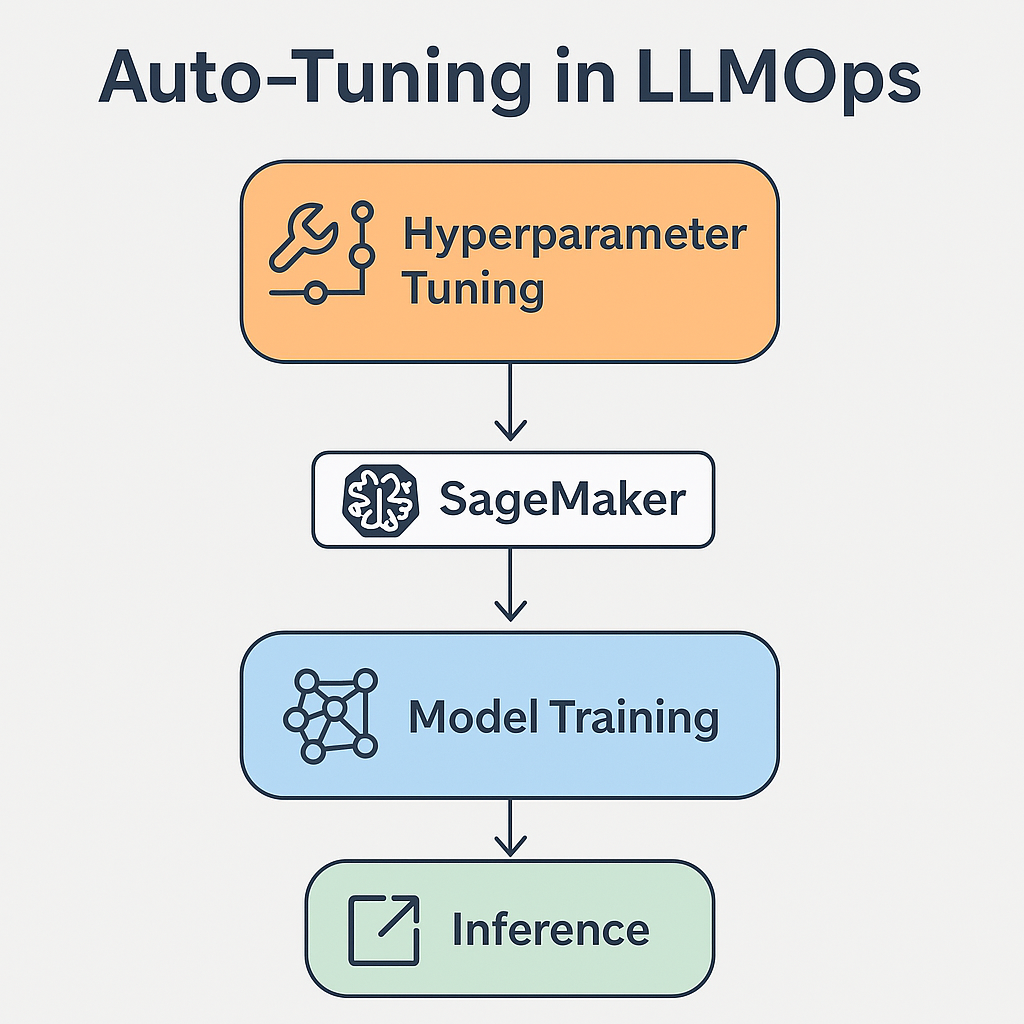

As Large Language Models (LLMs) become more prevalent in production environments, LLMOps (LLM Operations) is emerging as an important discipline for deploying, monitoring, and optimizing these models efficiently. One of the key challenges in LLMOps is hyperparameter tuning, a process that significantly affects model performance, cost, and latency. Amazon SageMaker provides an automated hyperparameter tuning capability that streamlines this process and optimizes models for specific workloads.

In this article, we explore Auto-Tuning with SageMaker, how it works, and best practices for maximizing the efficiency of fine-tuning and inference in large-scale LLM applications.

What’s Auto-Tuning in SageMaker?

Amazon SageMaker's Automatic Model Tuning (AMT), also known as hyperparameter optimization (HPO), automates the process of finding the best hyperparameter configuration for training a machine learning model.

For LLMs, this process involves optimizing parameters such as:

– Learning rate

– Batch size

– Sequence length

– Optimizer settings

– Dropout rates

– Gradient accumulation steps

Unlike manual tuning, which is time-consuming and computationally expensive, SageMaker's Auto-Tuning automates the search for the best hyperparameter combination based on a defined objective metric, such as minimizing validation loss or maximizing accuracy.

How Auto-Tuning Works in SageMaker

1. Defining the Hyperparameter Space

Users specify a range of values for each hyperparameter, and SageMaker explores these ranges to find the best-performing combination. The search space can include:

– Continuous values

– Integer (discrete) values

– Categorical values
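The three range types can be illustrated with a minimal, stdlib-only Python sketch that models a search space and draws random configurations from it. It mirrors the idea behind the SageMaker Python SDK's `ContinuousParameter`, `IntegerParameter`, and `CategoricalParameter` classes, but the `Continuous`, `Integer`, and `Categorical` dataclasses and the `sample_config` helper are hypothetical names used only for illustration, not an AWS API.

```python
import math
import random
from dataclasses import dataclass
from typing import Any, Dict, Sequence, Union


@dataclass(frozen=True)
class Continuous:
    """Real-valued range; log scaling suits parameters such as learning rate."""
    low: float
    high: float
    log_scale: bool = False

    def sample(self, rng: random.Random) -> float:
        if self.log_scale:
            # Uniform in log space, so each order of magnitude is equally likely.
            value = math.exp(rng.uniform(math.log(self.low), math.log(self.high)))
        else:
            value = rng.uniform(self.low, self.high)
        # Clamp to guard against floating-point round-off at the edges.
        return min(max(value, self.low), self.high)


@dataclass(frozen=True)
class Integer:
    """Inclusive integer range, e.g. gradient accumulation steps."""
    low: int
    high: int

    def sample(self, rng: random.Random) -> int:
        return rng.randint(self.low, self.high)


@dataclass(frozen=True)
class Categorical:
    """Unordered set of choices, e.g. optimizer names."""
    values: Sequence[Any]

    def sample(self, rng: random.Random) -> Any:
        return rng.choice(list(self.values))


Range = Union[Continuous, Integer, Categorical]


def sample_config(space: Dict[str, Range], rng: random.Random) -> Dict[str, Any]:
    """Draw one hyperparameter configuration from the search space."""
    return {name: param_range.sample(rng) for name, param_range in space.items()}


# Hypothetical search space covering all three range types.
search_space: Dict[str, Range] = {
    "learning_rate": Continuous(1e-5, 1e-3, log_scale=True),
    "gradient_accumulation_steps": Integer(1, 8),
    "optimizer": Categorical(("adamw", "sgd")),
}

rng = random.Random(0)
for _ in range(3):
    print(sample_config(search_space, rng))
```

Sampling the learning rate in log space is the design choice worth noting: with a plain uniform draw over [1e-5, 1e-3], about 91% of samples would land between 1e-4 and 1e-3, leaving the lower decade barely explored.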

2. Choosing an Optimization Strategy

SageMaker supports several search strategies, including:

– Bayesian Optimization (the default; recommended when each training job is expensive)

– Grid Search (exhaustive but costly; supports categorical parameters only)

– Random Search (fully parallelizable, but less sample-efficient)

– Hyperband (uses early stopping to shift budget toward promising configurations for fast convergence)
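Hyperband builds on successive halving: start many configurations on a small training budget, keep the best fraction, and give the survivors more budget. The sketch below implements only that inner successive-halving loop against a made-up `toy_loss` function; it is not SageMaker's Hyperband implementation, and the `eta = 3` reduction factor and epoch budgets are illustrative assumptions.

```python
import math
import random
from typing import Callable, Dict, List

Config = Dict[str, float]


def successive_halving(
    configs: List[Config],
    evaluate: Callable[[Config, int], float],
    min_epochs: int = 1,
    eta: int = 3,
) -> Config:
    """Return the last surviving configuration (lower score is better).

    Each round trains every survivor for `epochs` epochs, keeps the best
    1/eta of them, and multiplies the per-configuration budget by eta.
    """
    epochs = min_epochs
    survivors = list(configs)
    while len(survivors) > 1:
        survivors.sort(key=lambda cfg: evaluate(cfg, epochs))
        survivors = survivors[: max(1, len(survivors) // eta)]
        epochs *= eta
    return survivors[0]


def toy_loss(config: Config, epochs: int) -> float:
    """Hypothetical validation loss: best near lr=1e-4, and lower with more epochs."""
    return (math.log10(config["learning_rate"]) + 4) ** 2 + 1.0 / epochs


rng = random.Random(0)
candidates = [{"learning_rate": 10 ** rng.uniform(-5, -3)} for _ in range(27)]
winner = successive_halving(candidates, toy_loss)
print(f"selected learning rate: {winner['learning_rate']:.2e}")
```

With 27 candidates and `eta = 3`, the rounds keep 9, then 3, then 1 configuration, spending 27 + 27 + 27 = 81 epochs instead of the 243 needed to train all 27 for 9 epochs.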

3. Launching Training Jobs

SageMaker runs multiple training jobs, in parallel up to a configurable limit, across different configurations. With Bayesian optimization, it balances exploration and exploitation, using the results of completed jobs to refine the search over time.
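The job budget in this step can be approximated locally to make it concrete. The SageMaker Python SDK's `HyperparameterTuner` takes `max_jobs` (total training jobs) and `max_parallel_jobs` (jobs running at once); the sketch below reuses those two names but runs plain random search on a thread pool, with `train_and_score` as a hypothetical stand-in for a training job. Because random search ignores earlier results, it does not reproduce the exploration-exploitation trade-off that Bayesian optimization makes.

```python
import math
import random
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple

Result = Tuple[int, float, float]  # (trial id, learning rate, validation loss)


def train_and_score(trial_id: int, learning_rate: float) -> Result:
    """Hypothetical stand-in for one training job; lower loss is better."""
    val_loss = (math.log10(learning_rate) + 4) ** 2
    return trial_id, learning_rate, val_loss


def run_tuning(max_jobs: int, max_parallel_jobs: int, seed: int = 0) -> List[Result]:
    """Run `max_jobs` random-search trials, at most `max_parallel_jobs` at a time."""
    rng = random.Random(seed)
    # Random search can draw every configuration up front.
    learning_rates = [10 ** rng.uniform(-5, -3) for _ in range(max_jobs)]
    with ThreadPoolExecutor(max_workers=max_parallel_jobs) as pool:
        futures = [pool.submit(train_and_score, i, lr) for i, lr in enumerate(learning_rates)]
        return [future.result() for future in futures]


results = run_tuning(max_jobs=12, max_parallel_jobs=3)
best = min(results, key=lambda r: r[2])
print(f"best trial {best[0]}: lr={best[1]:.2e}, val_loss={best[2]:.4f}")
```

Raising `max_parallel_jobs` shortens wall-clock time, but under Bayesian optimization each job launched in parallel is chosen with less information from completed jobs.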

4. Evaluating Model Performance

When the training jobs finish, SageMaker identifies the best-performing training job based on the objective metric defined for the tuning job (e.g., BLEU score, perplexity, loss, or F1 score).
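For a custom training script, the tuner reads the objective value from the job's logs: each metric definition pairs a name with a regular expression, and the SageMaker SDK accepts these as a list of `{"Name": ..., "Regex": ...}` dictionaries. The following sketch imitates that log scraping and the final selection step locally. Taking the last matched value per job, the `val_loss=` log format, and the trial names are assumptions for illustration.

```python
import re
from typing import Dict, List, Optional

# Same shape as SageMaker metric definitions: a name plus a regex whose first
# capture group is the numeric value. The name and log format are hypothetical.
METRIC_DEFINITIONS = [
    {"Name": "validation:loss", "Regex": r"val_loss=([0-9\.]+)"},
]


def parse_metric(log_lines: List[str], regex: str) -> Optional[float]:
    """Return the last value matched by `regex` in a job's log, or None."""
    pattern = re.compile(regex)
    value = None
    for line in log_lines:
        match = pattern.search(line)
        if match:
            value = float(match.group(1))
    return value


def best_job(job_logs: Dict[str, List[str]], regex: str) -> str:
    """Pick the job with the lowest final objective value (minimization)."""
    scores = {job: parse_metric(lines, regex) for job, lines in job_logs.items()}
    scored = {job: s for job, s in scores.items() if s is not None}
    return min(scored, key=scored.get)


# Hypothetical log excerpts from three tuning trials.
job_logs = {
    "trial-001": ["epoch=1 val_loss=2.31", "epoch=2 val_loss=1.97"],
    "trial-002": ["epoch=1 val_loss=2.10", "epoch=2 val_loss=1.64"],
    "trial-003": ["epoch=1 val_loss=2.45", "job failed"],
}
regex = METRIC_DEFINITIONS[0]["Regex"]
print(best_job(job_logs, regex))  # trial-002
```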

5. Deploying the Best Model

The best-trained model can then be deployed to a SageMaker endpoint or integrated into a production pipeline.

Benefits of Auto-Tuning for LLMOps

1. Reduces Manual Effort – No need for manual trial-and-error tuning.

2. Optimizes Cost – Avoids running excessive training jobs by selecting promising configurations based on earlier results.

3. Accelerates Model Training – Identifies the best hyperparameters faster than traditional manual methods.

4. Improves Model Performance – Tunes hyperparameters toward the chosen objective metric, such as accuracy or validation loss.

5. Scales Seamlessly – Works across different AWS instance types, supporting GPU and distributed training.

Best Practices for Auto-Tuning LLMs in SageMaker

1. Use Warm Start to Reduce Cost

Reuse the results of previous tuning jobs to initialize a new hyperparameter tuning job instead of starting the search from scratch.
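SageMaker implements warm start through a configuration that names up to five parent tuning jobs (in the Python SDK, `WarmStartConfig` with a type such as `WarmStartTypes.IDENTICAL_DATA_AND_ALGORITHM` or `WarmStartTypes.TRANSFER_LEARNING`), and the new job uses the parents' results to inform its search. The stdlib sketch below captures only the basic intuition with a simpler, swapped-in technique: it re-runs a previous search's best configurations first and fills the rest of the budget with new random samples. The `warm_start_candidates` helper and the parent results are hypothetical.

```python
import random
from typing import Dict, List, Tuple

# (hyperparameters, final validation loss) for one finished trial.
Trial = Tuple[Dict[str, float], float]


def warm_start_candidates(
    parent_trials: List[Trial], top_k: int, n_new: int, seed: int = 0
) -> List[Dict[str, float]]:
    """Return the parent run's best configs first, followed by fresh random ones."""
    ranked = sorted(parent_trials, key=lambda trial: trial[1])  # lowest loss first
    reused = [dict(config) for config, _ in ranked[:top_k]]
    rng = random.Random(seed)
    # Fresh log-uniform learning rates keep some exploration in the new search.
    fresh = [{"learning_rate": 10 ** rng.uniform(-5, -3)} for _ in range(n_new)]
    return reused + fresh


# Hypothetical results from an earlier tuning run.
parent_trials = [
    ({"learning_rate": 3e-4}, 1.71),
    ({"learning_rate": 1e-5}, 2.40),
    ({"learning_rate": 8e-5}, 1.58),
]
candidates = warm_start_candidates(parent_trials, top_k=2, n_new=3)
print(candidates[:2])  # [{'learning_rate': 8e-05}, {'learning_rate': 0.0003}]
```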

2. Optimize Distributed Training

When fine-tuning large-scale LLMs, use SageMaker's distributed training to speed up each tuning trial.
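Distributed training changes what "batch size" means during tuning. Under data parallelism (assumed here; tensor or pipeline parallelism reduces the number of data-parallel replicas per GPU), each optimizer step sees per-device batch × data-parallel replicas × gradient accumulation steps samples. The helper names and the numbers below are illustrative.

```python
def global_batch_size(per_device_batch: int, data_parallel_degree: int, grad_accum_steps: int) -> int:
    """Samples consumed by one optimizer step under data-parallel training."""
    return per_device_batch * data_parallel_degree * grad_accum_steps


def tokens_per_step(
    per_device_batch: int, data_parallel_degree: int, grad_accum_steps: int, seq_len: int
) -> int:
    """Upper bound on tokens per optimizer step, assuming sequences padded or packed to seq_len."""
    return global_batch_size(per_device_batch, data_parallel_degree, grad_accum_steps) * seq_len


# Illustrative setup: 8 data-parallel GPUs, 4 sequences per GPU, 2 accumulation steps.
print(global_batch_size(4, 8, 2))      # 64
print(tokens_per_step(4, 8, 2, 1024))  # 65536
```

This is why batch size, gradient accumulation steps, and instance count are better tuned together (or with their product held fixed) than as independent knobs: changing the GPU count alone changes the effective batch and can shift the best learning rate.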

3. Set Early Stopping for Faster Convergence

Enable early stopping in Auto-Tuning to prevent unnecessary training on underperforming configurations.
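SageMaker's automatic early stopping (the `early_stopping_type="Auto"` option on the SDK's `HyperparameterTuner`) is documented as a median-based rule: a job is stopped when its objective metric at a given epoch is worse than the median of the running averages that previous jobs reached by the same epoch. The sketch below is a simplified local version of that rule; the function names and loss curves are hypothetical, and details such as how jobs of different lengths are handled are assumptions.

```python
from statistics import median
from typing import List


def running_average(values: List[float], epochs: int) -> float:
    """Mean of a job's metric over its first `epochs` epochs."""
    window = values[:epochs]
    return sum(window) / len(window)


def should_stop(current: List[float], previous_jobs: List[List[float]], minimize: bool = True) -> bool:
    """Apply the median rule at the current job's latest epoch."""
    epoch = len(current)
    # Only earlier jobs that reached this epoch are compared (an assumption).
    averages = [running_average(job, epoch) for job in previous_jobs if len(job) >= epoch]
    if not averages:
        return False  # nothing to compare against yet
    threshold = median(averages)
    latest = current[-1]
    return latest > threshold if minimize else latest < threshold


# Hypothetical validation-loss curves from three finished jobs.
previous_jobs = [[2.0, 1.6, 1.4], [2.2, 1.8, 1.5], [1.9, 1.5, 1.3]]
print(should_stop([2.4, 2.1], previous_jobs))  # True: 2.1 is worse than the median 1.8
print(should_stop([2.0, 1.6], previous_jobs))  # False
```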

4. Monitor Experiments with SageMaker Experiments

Track different training runs, hyperparameter choices, and model performance with SageMaker Experiments for reproducibility.

5. Optimize for Cost-Efficiency with Spot Instances

Use Managed Spot Training to reduce costs while running multiple trials in Auto-Tuning.
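The savings from Spot capacity depend on both the discount and the extra time lost to interruptions. The back-of-the-envelope helper below makes that trade-off explicit; the hourly prices and the 10% interruption overhead are placeholders, not AWS rates. In the SageMaker Python SDK, Managed Spot Training is enabled on the estimator with `use_spot_instances=True` and a `max_wait` limit, and setting `checkpoint_s3_uri` lets a training script that saves checkpoints resume after an interruption.

```python
def spot_savings(on_demand_hourly: float, spot_hourly: float, hours: float, overhead: float = 0.0) -> float:
    """Fraction of on-demand cost saved by running the same job on Spot.

    `overhead` is extra billable time from interruptions and restarts,
    e.g. 0.10 means the Spot run takes 10% longer than the on-demand run.
    """
    on_demand_cost = on_demand_hourly * hours
    spot_cost = spot_hourly * hours * (1 + overhead)
    return 1 - spot_cost / on_demand_cost


# Placeholder prices per instance-hour; replace with your region's actual rates.
saving = spot_savings(on_demand_hourly=10.0, spot_hourly=4.0, hours=20, overhead=0.10)
print(f"{saving:.0%}")  # 56%
```

Because a tuning job launches many training jobs, the same per-job saving applies to every trial, which is what makes Spot attractive for Auto-Tuning in particular.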

6. Automate with SageMaker Pipelines

Integrate Auto-Tuning into a full MLOps pipeline using SageMaker Pipelines, ensuring smooth transitions from training to deployment.

Real-World Use Case: Fine-Tuning an LLM with Auto-Tuning

Scenario

A company wants to fine-tune Llama 3 for enterprise document summarization while optimizing training costs.

Solution:

1. Define a SageMaker Auto-Tuning job with the following hyperparameters:

– Learning Rate: [1e-5, 1e-3]

– Batch Size: [16, 32, 64]

– Optimizer: [AdamW, SGD]

– Sequence Length: [512, 1024]

2. Use Bayesian Optimization to identify the best combination.

3. Leverage Spot Instances to reduce costs.

4. Run parallel tuning trials on multiple GPUs.

5. Deploy the best model to SageMaker Inference.
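Before launching the scenario's tuning job, it helps to size the search space. The sketch below enumerates the discrete choices from step 1 and measures the span of the learning-rate range; the dictionary keys are illustrative names, not required SageMaker hyperparameter names. In the SageMaker SDK, the learning rate would map to a `ContinuousParameter` (optionally with `scaling_type="Logarithmic"`) and the other three to `CategoricalParameter` ranges. Since SageMaker's grid strategy only accepts categorical ranges, the continuous learning rate is one reason Bayesian optimization fits this scenario.

```python
import itertools
import math

# Ranges from the scenario; learning rate is continuous, the rest are categorical.
learning_rate_range = (1e-5, 1e-3)
categorical = {
    "batch_size": [16, 32, 64],
    "optimizer": ["adamw", "sgd"],
    "sequence_length": [512, 1024],
}

# Every discrete combination; each would still need its own learning-rate search.
combos = [dict(zip(categorical, values)) for values in itertools.product(*categorical.values())]
print(len(combos))  # 12

# Orders of magnitude spanned by the learning-rate range (a case for log scaling).
decades = math.log10(learning_rate_range[1] / learning_rate_range[0])
print(round(decades))  # 2
```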

Result:

– Achieved a 20% improvement in summarization accuracy.

– Reduced training costs by 35% using Spot Training.

– Optimized hyperparameters automatically, without human intervention.

Future of Auto-Tuning in LLMOps

The evolution of AutoML and LLMOps is leading to more sophisticated hyperparameter tuning approaches, including:

– Reinforcement Learning-based Hyperparameter Optimization

– Meta-Learning for Adaptive Hyperparameter Selection

– Neural Architecture Search (NAS) for Automated Model Selection

– LLM-Specific Auto-Tuning Frameworks leveraging Amazon Bedrock

As enterprises continue to scale LLM deployments, Auto-Tuning with SageMaker will be a key driver of model efficiency, performance, and cost-effectiveness.

Conclusion

Amazon SageMaker's Auto-Tuning simplifies hyperparameter optimization, making it easier for LLMOps engineers to fine-tune and deploy large-scale language models efficiently. By leveraging Bayesian optimization, distributed training, and AWS automation tools, organizations can achieve higher model accuracy, lower costs, and faster development cycles.

As LLM adoption grows, automating optimization will be a game-changer, enabling businesses to train and deploy state-of-the-art language models with minimal manual effort.