(code and datasets may be explored on GitHub)

Language models excel at capturing general linguistic patterns, but consistently emulating a specific, stylistically nuanced voice remains difficult. This study investigates the feasibility of fine-tuning large language models, specifically GPT-2 and GPT-J, to fully adopt the distinctive speech patterns of Mr. Darcy from Jane Austen’s Pride and Prejudice, rather than imperfectly attempting imitation when asked to do so.

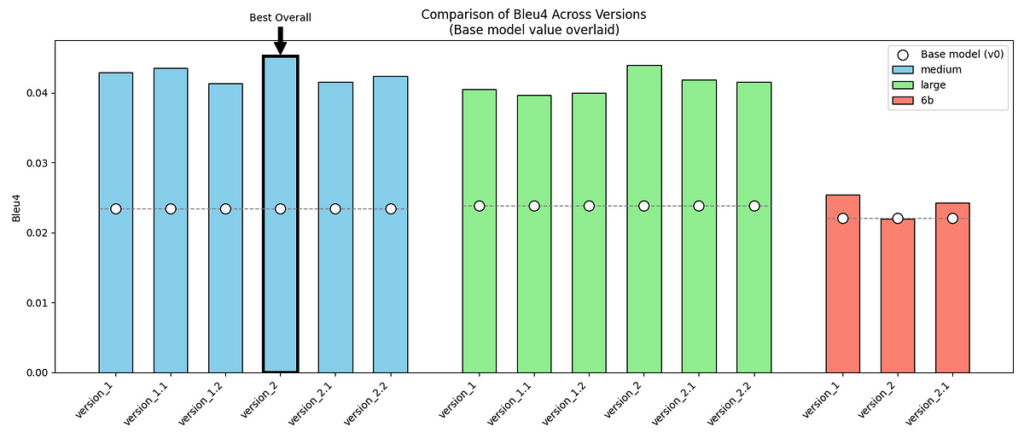

Using carefully curated datasets, I assess how effectively limited training data enables these models to adopt and sustain Darcy’s precise stylistic voice. Fine-tuned models achieved roughly 70% higher BLEU-4 scores than baseline models when evaluated against authentic Darcy dialogue, suggesting meaningful stylistic improvement. However, these results hinge critically on the reliability of BLEU-4 as an indicator of stylistic similarity, an assumption that introduces potential ambiguity. This limitation highlights inherent constraints in the targeted fine-tuning process and is addressed in greater detail later on.

A significant hurdle in achieving consistent adherence to a precisely defined speech pattern is the scarcity of dialogue directly attributable to Mr. Darcy, which raised concerns about both dataset quality and the risk of model overfitting, particularly to Austen’s historical context. To partially mitigate these issues, additional original dialogues were crafted, peer-reviewed¹, and included in the training set. These newly composed dialogues don’t completely resolve the fundamental problem of limited data, but they broaden Darcy’s linguistic representation, better equipping models to generalize stylistic coherence across diverse contexts.

Three distinct GPT models were fine-tuned using the Hugging Face Transformers library and the PyTorch deep learning framework:

- GPT2-medium: An intermediate-scale model from OpenAI’s GPT family.

- GPT2-large: A more robust variant offering increased model complexity.

- GPT-J-6B: An open-source alternative developed by EleutherAI, comparable in scale to the smaller GPT-3 variants.

Two datasets guided the fine-tuning:

- Dataset 1: This dataset comprises context-rich excerpts from Austen’s original text, encompassing dialogue, narrative elements, and perspectives from other characters.

- Dataset 2: This second, slightly smaller dataset is restricted to dialogue interactions, directly pairing either original or crafted prompts with Darcy’s responses.
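As a rough illustration of how a prompt-response pair from Dataset 2 could be flattened into a single training string for a causal language model, here is a minimal sketch. The `DialoguePair` type, the `Prompt:` and `Darcy:` labels, and the sample pair are all assumptions made for illustration; the formatting actually used in this study is not described here. The only fixed detail is `<|endoftext|>`, the end-of-text token of the GPT-2 tokenizer.

```python
from dataclasses import dataclass

# Hypothetical labels: the real dataset formatting is not specified.
PROMPT_TAG = "Prompt:"
RESPONSE_TAG = "Darcy:"
END_OF_TEXT = "<|endoftext|>"  # end-of-text token of the GPT-2 tokenizer


@dataclass
class DialoguePair:
    prompt: str    # original or crafted prompt
    response: str  # Darcy's reply


def to_training_text(pair: DialoguePair) -> str:
    """Flatten one pair into a single string for causal-LM fine-tuning."""
    return (
        f"{PROMPT_TAG} {pair.prompt.strip()}\n"
        f"{RESPONSE_TAG} {pair.response.strip()}{END_OF_TEXT}"
    )


# Illustrative pair only, not taken from the study's dataset.
example = DialoguePair(
    prompt="Do you forgive easily, sir?",
    response="My good opinion once lost is lost for ever.",
)
print(to_training_text(example))
```

Ending every example with the end-of-text token gives the model an explicit signal for where a reply stops, which is one plausible way to encourage short, self-contained answers.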

Models were fine-tuned using incremental training configurations across the two datasets. Versions beginning with ‘1’ refer to training on the context-rich Dataset 1, while versions beginning with ‘2’ reflect training on the more focused, dialogue-only Dataset 2.

- Initial Fine-Tuning (Version x): Began training at two epochs (two full passes through the data), with learning rates² adjusted according to model complexity.

- Gradient Accumulation (Version x.1): Implemented smoothed weight updates with refined learning rates³ and added regularization for stability.

- Extended Training (Version x.2): Expanded to three epochs, exploring potential improvements from additional exposure.
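The three configurations above can be sketched as plain data to show how gradient accumulation and extra epochs change the amount of optimization performed. Every number below other than the epoch counts is a hypothetical placeholder: the batch size, accumulation steps, and weight decay are assumptions, learning rates are omitted because they are not given in the text, and it is also an assumption that Version x.2 keeps the accumulation settings of Version x.1.

```python
import math
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class RunConfig:
    version: str
    epochs: int
    grad_accum_steps: int = 1  # 1 means a weight update after every batch
    weight_decay: float = 0.0  # stand-in for the "added regularization"
    batch_size: int = 4        # hypothetical per-step batch size

    def effective_batch_size(self) -> int:
        # Gradients from several small batches are summed before one update,
        # which smooths updates without needing more memory per step.
        return self.batch_size * self.grad_accum_steps

    def optimizer_steps(self, n_examples: int) -> int:
        # Approximate count of weight updates over the whole run.
        per_epoch = math.ceil(n_examples / self.effective_batch_size())
        return per_epoch * self.epochs


base = RunConfig(version="x", epochs=2)
accum = replace(base, version="x.1", grad_accum_steps=4, weight_decay=0.01)
extended = replace(accum, version="x.2", epochs=3)

for cfg in (base, accum, extended):
    print(cfg.version, cfg.effective_batch_size(), cfg.optimizer_steps(100))
```

With a small dataset, accumulation sharply reduces the number of weight updates per epoch, which is one reason learning rates usually need to be revisited when it is introduced.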

Evaluating the stylistic coherence of a fine-tuned model presents unique challenges, particularly because no single evaluation metric comprehensively captures speech patterns across all types of responses.

BLEU, ROUGE-L, and METEOR are standard LLM evaluation metrics traditionally applied to translation or descriptive tasks, and they are not designed to assess nuanced stylistic fidelity. This limitation becomes especially apparent when evaluating outputs on topics not present in Darcy’s dialogue, such as climate change or quantum physics. Additionally, shorter responses, characteristic of Darcy’s reserved and concise communication style, tend to yield lower scores across these metrics, regardless of their qualitative accuracy.

The central quantitative findings of this research rely on BLEU-4 scores, a metric that measures n-gram overlap between the model’s output and reference material, up to and including four-token sequences. Matching four-token sequences are inherently rare in spontaneous text generation tasks, especially when the test domain diverges significantly from the training data. The validity of interpreting these limited overlaps as indicators of a broader stylistic signature therefore warrants caution. Furthermore, due to the inherent scarcity of available dialogue data, this study evaluates models against dialogue included in their fine-tuning set, a methodological choice that risks inflating similarity scores and limits the generalizability of the results.
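To make the behavior of BLEU-4 concrete, the following is a minimal, unsmoothed, single-reference sketch in pure Python. It is not the scoring code used in this study: whitespace tokenization, lowercasing, and the absence of smoothing are simplifying assumptions, and library implementations typically offer smoothing options that would change the zero-score cases.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count every contiguous n-token sequence in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu4(candidate: str, reference: str) -> float:
    """Unsmoothed sentence-level BLEU-4 against a single reference."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, 5):
        cand_counts = ngrams(cand, n)
        ref_counts = ngrams(ref, n)
        total = sum(cand_counts.values())
        # Clipped matches: an n-gram counts at most as often as in the reference.
        matched = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        if total == 0 or matched == 0:
            return 0.0  # without smoothing, one empty order zeroes the score
        log_precision_sum += math.log(matched / total)
    # Brevity penalty: candidates shorter than the reference are scaled down.
    if len(cand) >= len(ref):
        brevity_penalty = 1.0
    else:
        brevity_penalty = math.exp(1 - len(ref) / len(cand))
    return brevity_penalty * math.exp(log_precision_sum / 4)


print(bleu4("a b c d", "a b c d"))      # identical four-token strings
print(bleu4("a b c", "a b c"))          # too short to contain any 4-gram
print(bleu4("a b c d", "a b c d e f"))  # perfect overlap, but shorter
```

The second call shows why very short replies are penalized: a three-token reply contains no four-token sequence at all, so it scores zero even when it matches the reference exactly. The third call shows the brevity penalty lowering an otherwise perfect match.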

Qualitative observations further underscore the incomplete nature of the fine-tuning process, highlighting a complex relationship between response structure and stylistic fidelity.

A frequent misinterpretation emerges when models mistakenly treat user prompts as integral parts of their generated statements. This often manifests as follow-up questions or informal, modern phrases such as “Let us know what you think in the comments!” These deviations contrast sharply with Darcy’s typically formal and succinct manner, negatively impacting both qualitative evaluations and quantitative metric averages. Notably, however, many outputs formatted as follow-up questions do successfully capture Darcy’s authentic stylistic nuances.

Prompt:

Pray, what is your opinion on the role of religion in public life?

Medium 2.6:

Do you believe that it should be a private matter between individual citizens?

BLEU-4:

0.26

Note:

This example, in which the output is a follow-up question, has one of the highest BLEU scores across all prompts: 6.5 times as high as the 0.04 average.

Prompt:

When might duty compel you to ruin, perhaps forever, the happiness of a most beloved sister?

Large 1.1:

Why are you afraid of the knowledge of his having been the cause of your uncle's death?

BLEU-4:

0.2

Note:

This high-scoring output was prompted using a direct quote from Pride and Prejudice, but there is no noticeable trend indicating that doing so tends to produce higher-scoring output than other kinds of prompts.

Occasionally, prompts delivered in language appropriate to the Georgian period resulted in dreamlike, third-person narratives concerning Mr. Darcy or his acquaintances.

Prompt:

What is your opinion of a woman's proper station in society, and how may she best exhibit those qualities most becoming of her sex?

Medium 1.1:

'Do you agree with your sister?' he went on. 'My own opinion, at least, has been, of course, rather too unfavourable.' Her face grew serious. 'I think you must be mistaken,' said Mr. Darcy. He hesitated, as he had never done so before.

BLEU-4:

0.03

Prompt:

Would you be so kind as to detail the education you have received in your youth?

Medium 1.1:

I looked at Mr. Darcy, whose smile was not too sad and who appeared rather tired now. Mr Darcesse seemed to be taking every step necessary for his welfare.

BLEU-4:

0.04

- GPT2-medium: Despite increased perplexity post-training, notable improvements in stylistic fidelity were evident, with the highest BLEU-4 scores observed across all models. This underscores the potential effectiveness of smaller models when fine-tuned on targeted datasets.

- GPT2-large: This model also showed heightened perplexity post-training, yet its stylistic improvements closely matched GPT2-medium in BLEU performance. This result suggests diminishing returns for larger model scales in tasks with constrained datasets.

- GPT-J-6B: While by far the most powerful in terms of raw capability, it showed the least improvement post-training and produced the lowest overall BLEU-4 scores. This aligns with expectations, as models of this scale typically require extensive training data and more customized fine-tuning strategies to meaningfully affect their output.

Prompt:

How does an understanding of historical context influence the interpretation of literature?

GPT-J-6B 2:

How can one understand literature without having any knowledge of the context in which it was written? The questions seem simple, yet in a time when the internet is accessible to anyone, and the world has become increasingly smaller, they have never been more relevant. One does not need to be a professional to write a good story...

BLEU-4:

0.02

Note:

The output continues after '...' up to the token limit set during testing. All GPT-J output reached the token limit and never exhibited any notable signs of Darcy's voice, producing low BLEU-4 scores despite remaining consistently coherent.

Across configurations, GPT2-medium (version 2) achieved the best stylistic match. When evaluated against the same random selection of authentic Darcy dialogue, fine-tuned models achieved improvements of 71% across BLEU-4 measures compared to baseline models, even though, at times, both were explicitly prompted to emulate Darcy’s voice. These findings emphasize the remarkable effectiveness of targeted fine-tuning, even when data availability is limited.
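For readers who want to see how a figure such as 71% is derived, here is a small sketch of a relative-improvement calculation. It assumes the comparison is made between mean BLEU-4 scores, which the text does not state, and the two scores passed in are made-up placeholders chosen only so the arithmetic lands near the reported figure. They are not results from this study.

```python
def relative_improvement(baseline: float, fine_tuned: float) -> float:
    """Percentage change of a fine-tuned score relative to a baseline score."""
    if baseline <= 0:
        raise ValueError("baseline score must be positive")
    return (fine_tuned - baseline) / baseline * 100.0


# Placeholder scores for illustration only, not measured values.
print(round(relative_improvement(0.100, 0.171)))  # prints 71
```

Because the result is a ratio, the same percentage can come from very small absolute differences when baseline scores are low, which is worth keeping in mind when reading relative gains on BLEU-4.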

Prompt:

Might you expound upon the role of wit and humor in forging enduring friendships?

Medium 2:

I am sure you will give us some hint to help us find it all again.

BLEU-4:

0.22

Note:

Like much of the output, this does not fit the context. However, it sounds remarkably like Mr. Darcy; a rare stylistic success.