In my previous article, we explored how Google's open-source Agent Development Kit (ADK) can act as a Model Context Protocol (MCP) client with minimal code changes, enabling seamless integration with external tools and APIs in a model-friendly way.

At Google Cloud Next '25, along with ADK, Google also announced the Agent-to-Agent (A2A) protocol, a powerful open standard that enables AI agents to communicate, collaborate, and coordinate actions across organizational boundaries. A2A enables secure and structured agent communication on top of diverse enterprise systems, regardless of the underlying agent framework.

1. MCP (Model Context Protocol)

In my previous article, we explored the core concepts behind MCP, how it compares with traditional API integration methods, and its key benefits, along with a full working code demonstration.

2. ADK (Agent Development Kit)

The earlier article also covered Google's open-source ADK toolkit, including the different agent categories and available tool types, along with a full hands-on example showcasing ADK as an MCP client and the Gemini LLM in action.

In this article, we'll focus on understanding the core concepts of the Agent2Agent (A2A) protocol and then dive deep into a code implementation.

The A2A (Agent-to-Agent) protocol is an open protocol developed by Google for communication between agents across organizational or technological boundaries.

Using A2A, agents accomplish tasks for end users without sharing memory, thoughts, or tools. Instead, the agents exchange context, status, instructions, and data in their native modalities.

- Simple: Reuse existing standards
- Enterprise Ready: Auth, Security, Privacy, Tracing, Monitoring
- Async First: (Very) long-running tasks and human-in-the-loop
- Modality Agnostic: text, audio/video, forms, iframe, etc.
- Opaque Execution: Agents do not have to share thoughts, plans, or tools.

- MCP makes enterprise APIs tool-agnostic and LLM-friendly.
- A2A enables distributed agents (built with ADK, LangChain, CrewAI, etc.) to talk to each other seamlessly.

A2A is an API for agents, and MCP is an API for tools.

The A2A (Agent-to-Agent) protocol is built around several fundamental concepts that enable seamless agent interoperability. In this article, we will use a multi-agent travel planner application to illustrate these core concepts.

1. Task-based Communication

Every interaction between agents is treated as a task: a clear unit of work with a defined start and end. This makes communication structured and trackable. Example:

Task-based Communication (Request)

{
  "jsonrpc": "2.0",
  "method": "tasks/send",
  "params": {
    "taskId": "20250615123456",
    "message": {
      "role": "user",
      "parts": [
        {
          "text": "Find flights from New York to Miami on 2025-06-15"
        }
      ]
    }
  },
  "id": "12345"
}

Task-based Communication (Response)

{
  "jsonrpc": "2.0",
  "id": "12345",
  "result": {
    "taskId": "20250615123456",
    "state": "completed",
    "messages": [
      {
        "role": "user",
        "parts": [
          {
            "text": "Find flights from New York to Miami on 2025-06-15"
          }
        ]
      },
      {
        "role": "agent",
        "parts": [
          {
            "text": "I found the following flights from New York to Miami on June 15, 2025:\n\n1. Delta Airlines DL1234: Departs JFK 08:00, Arrives MIA 11:00, $320\n2. American Airlines AA5678: Departs LGA 10:30, Arrives MIA 13:30, $290\n3. JetBlue B9101: Departs JFK 14:45, Arrives MIA 17:45, $275"
          }
        ]
      }
    ],
    "artifacts": []
  }
}
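As a minimal sketch of the exchange above, the request envelope can be assembled and the agent's reply pulled out of the response with two small helpers. The helper names (`build_send_task_request`, `last_agent_text`) are hypothetical, and the field names simply mirror the example JSON in this article rather than any official SDK.

```python
import json
import uuid


def build_send_task_request(task_id: str, text: str) -> dict:
    """Build a JSON-RPC 2.0 envelope shaped like the request example above."""
    return {
        "jsonrpc": "2.0",
        "method": "tasks/send",
        "params": {
            "taskId": task_id,
            "message": {"role": "user", "parts": [{"text": text}]},
        },
        # JSON-RPC request id; kept separate from the task id in this sketch.
        "id": str(uuid.uuid4()),
    }


def last_agent_text(response: dict) -> str:
    """Return the text of the most recent agent message, or '' if none exists."""
    messages = response.get("result", {}).get("messages", [])
    for message in reversed(messages):
        if message.get("role") == "agent":
            return "".join(p.get("text", "") for p in message.get("parts", []))
    return ""


request = build_send_task_request(
    "20250615123456", "Find flights from New York to Miami on 2025-06-15"
)
print(json.dumps(request, indent=2))
```

Keeping the envelope construction in one place makes it easier to adjust if the params layout differs between agent implementations.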

2. Agent Discovery

Agents can automatically discover what other agents do by reading their agent.json file from a standard location (/.well-known/agent.json). No manual setup is needed. Below is an example Agent Card.

- Purpose: Publicly accessible discovery endpoint
- Location: Standardized web-accessible path as per the A2A protocol
- Usage: External discovery by other agents/clients
- Function: Enables automated agent discovery

{
  "name": "Travel Itinerary Planner",
  "displayName": "Travel Itinerary Planner",
  "description": "An agent that coordinates flight and hotel information to create comprehensive travel itineraries",
  "version": "1.0.0",
  "contact": "code.aicloudlab@gmail.com",
  "endpointUrl": "http://localhost:8005",
  "authentication": {
    "type": "none"
  },
  "capabilities": [
    "streaming"
  ],
  "skills": [
    {
      "name": "createItinerary",
      "description": "Create a comprehensive travel itinerary including flights and accommodations",
      "inputs": [
        {
          "name": "origin",
          "type": "string",
          "description": "Origin city or airport code"
        },
        {
          "name": "destination",
          "type": "string",
          "description": "Destination city or area"
        },
        {
          "name": "departureDate",
          "type": "string",
          "description": "Departure date in YYYY-MM-DD format"
        },
        {
          "name": "returnDate",
          "type": "string",
          "description": "Return date in YYYY-MM-DD format (optional)"
        },
        {
          "name": "travelers",
          "type": "integer",
          "description": "Number of travelers"
        },
        {
          "name": "preferences",
          "type": "object",
          "description": "Additional preferences like budget, hotel amenities, etc."
        }
      ],
      "outputs": [
        {
          "name": "itinerary",
          "type": "object",
          "description": "Complete travel itinerary with flights, hotels, and schedule"
        }
      ]
    }
  ]
}

3. Framework-agnostic Interoperability

A2A works across different agent frameworks, such as ADK, CrewAI, and LangChain, so agents built with different tools can still work together.

4. Multi-modal Messaging

A2A supports various content types through the Parts system, allowing agents to exchange text, structured data, and files within a unified message format.

5. Standardized Message Structure

A2A uses a clean JSON-RPC style for sending and receiving messages, making implementation consistent and easy to parse.

6. Skills and Capabilities

Agents publish what they can do ("skills"), including the inputs they need and the outputs they provide, so others know how to work with them. Example:

// Skill declaration in agent card
"skills": [
  {
    "name": "createItinerary",
    "description": "Creates a travel itinerary",
    "inputs": [
      {"name": "origin", "type": "string", "description": "Origin city or airport"},
      {"name": "destination", "type": "string", "description": "Destination city or airport"},
      {"name": "departureDate", "type": "string", "description": "Date of departure (YYYY-MM-DD)"},
      {"name": "returnDate", "type": "string", "description": "Date of return (YYYY-MM-DD)"}
    ]
  }
]

// Skill invocation in a message
{
  "role": "user",
  "parts": [
    {
      "text": "Create an itinerary for my trip from New York to Miami"
    },
    {
      "type": "data",
      "data": {
        "skill": "createItinerary",
        "parameters": {
          "origin": "New York",
          "destination": "Miami",
          "departureDate": "2025-06-15",
          "returnDate": "2025-06-20"
        }
      }
    }
  ]
}

7. Task Lifecycle

Every task goes through well-defined stages: submitted → working → completed (or failed/canceled). We can always track which state a task is in.
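The lifecycle can be pictured as a small state machine. The sketch below uses the state names from the text; the table of allowed transitions is an illustrative assumption for this example, not a rule taken from the A2A specification, and `LifecycleState` and `advance` are hypothetical names.

```python
from enum import Enum


class LifecycleState(str, Enum):
    SUBMITTED = "submitted"
    WORKING = "working"
    COMPLETED = "completed"
    FAILED = "failed"
    CANCELED = "canceled"


# Assumed transition table: terminal states allow no further moves.
ALLOWED = {
    LifecycleState.SUBMITTED: {LifecycleState.WORKING, LifecycleState.FAILED, LifecycleState.CANCELED},
    LifecycleState.WORKING: {LifecycleState.COMPLETED, LifecycleState.FAILED, LifecycleState.CANCELED},
    LifecycleState.COMPLETED: set(),
    LifecycleState.FAILED: set(),
    LifecycleState.CANCELED: set(),
}


def advance(current: LifecycleState, new: LifecycleState) -> LifecycleState:
    """Return the new state, or raise ValueError if the move is not allowed."""
    if new not in ALLOWED[current]:
        raise ValueError(f"cannot move from {current.value} to {new.value}")
    return new


state = LifecycleState.SUBMITTED
state = advance(state, LifecycleState.WORKING)
state = advance(state, LifecycleState.COMPLETED)
print(state.value)
```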

8. Real-Time Updates (Streaming)

Long-running tasks can stream updates using Server-Sent Events (SSE), so agents can receive progress in real time.
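For readers unfamiliar with SSE, the wire format is a series of `data:` lines, with a blank line ending each event. A rough sketch of reading such a stream follows; the payload fields (`taskId`, `state`) are made up for illustration, and a production client would also handle `event:`, `id:`, and comment lines, which this example ignores.

```python
import json
from typing import Iterable, Iterator


def iter_sse_data(lines: Iterable[str]) -> Iterator[dict]:
    """Yield the JSON payload of each SSE event found in a stream of lines."""
    data_lines: list[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line.startswith("data:"):
            # The SSE format strips one optional space after the colon.
            data_lines.append(line[5:].removeprefix(" "))
        elif line == "" and data_lines:
            # A blank line closes the event; multi-line data is joined with \n.
            yield json.loads("\n".join(data_lines))
            data_lines = []


# Hypothetical stream of task status updates.
stream = [
    'data: {"taskId": "20250615123456", "state": "working"}\n',
    "\n",
    'data: {"taskId": "20250615123456", "state": "completed"}\n',
    "\n",
]

updates = list(iter_sse_data(stream))
print([u["state"] for u in updates])
```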

9. Push Notifications

Agents can proactively notify others about task updates using webhooks, with support for secure communication (JWT, OAuth, etc.).

10. Structured Forms

Agents can request or submit structured forms using DataPart, which makes handling inputs like JSON or configs easy.

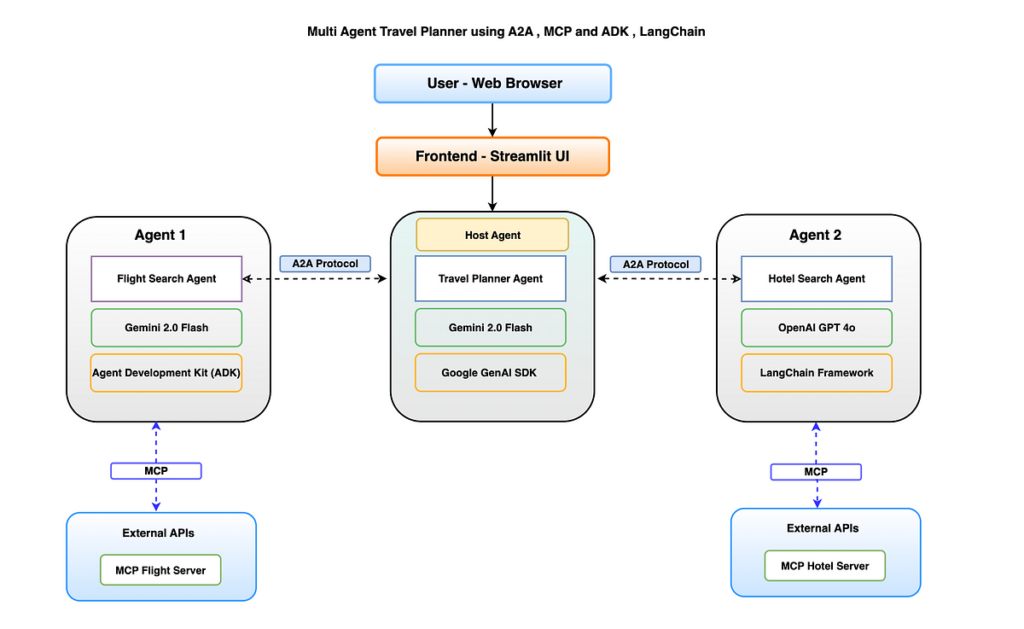

We will use the same Travel Planner architecture here, extended with the A2A and MCP protocols.

The demo below is for illustration purposes only, to help understand the A2A protocol between multiple agents.

The architecture above is a modular multi-agent AI system, where each agent is independently deployable and communicates using Google's A2A (Agent-to-Agent) protocol.

Core components in the architecture above:

- User Interface Layer: Sends HTTP requests to the frontend server
- Agent Layer: Coordination between the Host Agent, Agent 1, and Agent 2
- Protocol Layer: A2A protocol communication between agents
- External Data Layer: Uses MCP to access external APIs

Agent roles:

- Itinerary Planner Agent: Acts as the central orchestrator (the Host Agent), coordinating interactions between the user and the specialized agents.
- Flight Search Agent: A dedicated agent responsible for fetching flight options based on user input.
- Hotel Search Agent: A dedicated agent responsible for fetching hotel accommodations matching user preferences.

MCP implementation in this project:

Flight Search MCP Server

- Connection: Flight Search (Agent 1) connects to the MCP Flight Server
- Functionality: Connects to flight booking APIs and databases

Hotel Search MCP Server

- Connection: Hotel Search (Agent 2) connects to the MCP Hotel Server
- Functionality: Connects to hotel reservation systems and aggregators

A request flows through the system as follows:

- User submits a travel query via the Streamlit UI
- Travel Planner parses the query to extract key information
- Travel Planner requests flight information from the Flight Search Agent
- Flight Search Agent returns available flights by invoking the MCP Server
- Travel Planner extracts destination details
- Travel Planner requests hotel information from the Hotel Search Agent
- Hotel Search Agent returns accommodation options
- Travel Planner synthesizes all data into a comprehensive itinerary
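The steps above can be sketched as a small asynchronous orchestration. Everything here is a stand-in: `search_flights` and `search_hotels` are stubs in place of the real A2A calls, and `extract_destination` is a toy string parser, whereas the actual planner relies on an LLM to parse the query.

```python
import asyncio


async def search_flights(query: str) -> str:
    """Stub standing in for the A2A call to the Flight Search Agent."""
    return f"flights for: {query}"


async def search_hotels(destination: str) -> str:
    """Stub standing in for the A2A call to the Hotel Search Agent."""
    return f"hotels in: {destination}"


def extract_destination(query: str) -> str:
    """Toy parser: take whatever follows the last ' to ' in the query."""
    return query.rsplit(" to ", 1)[-1].strip()


async def plan_trip(query: str) -> dict:
    """Ask for flights, then hotels at the destination, then combine both."""
    flights = await search_flights(query)
    destination = extract_destination(query)
    hotels = await search_hotels(destination)
    return {"destination": destination, "flights": flights, "hotels": hotels}


itinerary = asyncio.run(plan_trip("Trip from New York to Miami"))
print(itinerary)
```

The hotel lookup waits for the destination to be known, which is why the two agent calls run one after the other in this sketch rather than concurrently.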

Let us dive into building this multi-agent system with ADK + MCP + Gemini AI by breaking it down into key implementation steps.

Prerequisites

1. Python 3.11+ installed
2. Google Gemini Generative AI access via API key
3. A valid SerpAPI key
4. A valid OpenAI GPT key

Project Folder Structure

├── common
│   ├── __init__.py
│   ├── client
│   │   ├── __init__.py
│   │   ├── card_resolver.py
│   │   └── client.py
│   ├── server
│   │   ├── __init__.py
│   │   ├── server.py
│   │   ├── task_manager.py
│   │   └── utils.py
│   ├── types.py
│   └── utils
│       ├── in_memory_cache.py
│       └── push_notification_auth.py
├── flight_search_app
│   ├── a2a_agent_card.json
│   ├── agent.py
│   ├── main.py
│   ├── static
│   │   └── .well-known
│   │       └── agent.json
│   └── streamlit_ui.py
├── hotel_search_app
│   ├── README.md
│   ├── a2a_agent_card.json
│   ├── langchain_agent.py
│   ├── langchain_server.py
│   ├── langchain_streamlit.py
│   ├── static
│   │   └── .well-known
│   │       └── agent.json
│   └── streamlit_ui.py
└── itinerary_planner
    ├── __init__.py
    ├── a2a
    │   ├── __init__.py
    │   └── a2a_client.py
    ├── a2a_agent_card.json
    ├── event_log.py
    ├── itinerary_agent.py
    ├── itinerary_server.py
    ├── run_all.py
    ├── static
    │   └── .well-known
    │       └── agent.json
    └── streamlit_ui.py

Step 1: Set up a virtual environment

Install the dependencies

# Set up virtual env
python -m venv .venv
# Activate venv
source .venv/bin/activate
# Install dependencies
pip install fastapi uvicorn streamlit httpx python-dotenv pydantic
pip install google-generativeai google-adk langchain langchain-openai

Step 2: Install the MCP server packages

MCP hotel server: https://pypi.org/project/mcp-hotel-search/

MCP flight server: https://pypi.org/project/mcp-flight-search/

# Install MCP hotel search
pip install mcp-hotel-search
# Install MCP flight search
pip install mcp-flight-search

Step 3: Set environment variables for Gemini, OpenAI, and SerpAPI

Set the environment variables using the keys from the prerequisites above.

GOOGLE_API_KEY=your_google_api_key

OPENAI_API_KEY=your_openai_api_key

SERP_API_KEY=your_serp_api_key
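Before starting the agents, it can help to confirm that all three keys are actually visible to the process. The check below is a small sketch; `missing_keys` is a hypothetical helper, and it assumes the variables have already been exported in the shell or loaded from a `.env` file (for example with the python-dotenv package installed in Step 1).

```python
import os
from typing import Mapping

REQUIRED_KEYS = ("GOOGLE_API_KEY", "OPENAI_API_KEY", "SERP_API_KEY")


def missing_keys(env: Mapping[str, str] = os.environ) -> list[str]:
    """Return the required variable names that are unset or empty in env."""
    return [key for key in REQUIRED_KEYS if not env.get(key)]


missing = missing_keys()
if missing:
    print("Missing environment variables: " + ", ".join(missing))
else:
    print("All API keys are set.")
```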

Step 4: Setting up the Flight Search agent using ADK as an MCP client with Gemini 2.0 Flash

The setup below is the same as in the previous article using ADK, with the addition of the A2A protocol and the reusable modules from https://github.com/google/A2A/tree/main/samples/python/common

├── common/                          # Shared A2A protocol components
│   ├── __init__.py
│   ├── client/                      # Client implementations
│   │   ├── __init__.py
│   │   └── client.py                # Base A2A client
│   ├── server/                      # Server implementations
│   │   ├── __init__.py
│   │   ├── server.py                # A2A Server implementation
│   │   └── task_manager.py          # Task management utilities
│   └── types.py                     # Shared type definitions for A2A
├── flight_search_app/               # Flight Search Agent (Agent 1)
│   ├── __init__.py
│   ├── a2a_agent_card.json          # Agent capabilities declaration
│   ├── agent.py                     # ADK agent implementation
│   ├── main.py                      # ADK server entry point (Gemini LLM)
│   └── static/                      # Static files
│       └── .well-known/             # Agent discovery directory
│           └── agent.json           # Standardized agent discovery file

ADK agent implementation as an MCP client that fetches tools from the MCP server

from google.adk.agents.llm_agent import LlmAgent
from google.adk.tools.mcp_tool.mcp_toolset import MCPToolset, StdioServerParameters
..
..
# Fetch tools from MCP Server
server_params = StdioServerParameters(
    command="mcp-flight-search",
    args=["--connection_type", "stdio"],
    env={"SERP_API_KEY": serp_api_key},
)
tools, exit_stack = await MCPToolset.from_server(
    connection_params=server_params)
..
..

ADK server entry point definition using the common A2A server components and types, together with the Google ADK runners, sessions, and Agent

from google.adk.runners import Runner
from google.adk.sessions import InMemorySessionService
from google.adk.agents import Agent
from .agent import get_agent_async

# Import common A2A server components and types
from common.server.server import A2AServer
from common.server.task_manager import InMemoryTaskManager
from common.types import (
    AgentCard,
    SendTaskRequest,
    SendTaskResponse,
    Task,
    TaskStatus,
    Message,
    TextPart,
    TaskState,
)

# --- Custom Task Manager for Flight Search ---
class FlightAgentTaskManager(InMemoryTaskManager):
    """Task manager specific to the ADK Flight Search agent."""

    def __init__(self, agent: Agent, runner: Runner, session_service: InMemorySessionService):
        super().__init__()
        self.agent = agent
        self.runner = runner
        self.session_service = session_service
        logger.info("FlightAgentTaskManager initialized.")
    ...
    ...

Create the A2A server instance using the Agent Card

# --- Main Execution Block ---
async def run_server():
    """Initializes services and starts the A2AServer."""
    logger.info("Starting Flight Search A2A Server initialization...")
    session_service = None
    exit_stack = None
    try:
        session_service = InMemorySessionService()
        agent, exit_stack = await get_agent_async()
        runner = Runner(
            app_name='flight_search_a2a_app',
            agent=agent,
            session_service=session_service,
        )
        # Create the specific task manager
        task_manager = FlightAgentTaskManager(
            agent=agent,
            runner=runner,
            session_service=session_service
        )
        # Define Agent Card
        port = int(os.getenv("PORT", "8000"))
        host = os.getenv("HOST", "localhost")
        listen_host = "0.0.0.0"
        agent_card = AgentCard(
            name="Flight Search Agent (A2A)",
            description="Provides flight information based on user queries.",
            url=f"http://{host}:{port}/",
            version="1.0.0",
            defaultInputModes=["text"],
            defaultOutputModes=["text"],
            capabilities={"streaming": False},
            skills=[
                {
                    "id": "search_flights",
                    "name": "Search Flights",
                    "description": "Searches for flights based on origin, destination, and date.",
                    "tags": ["flights", "travel"],
                    "examples": ["Find flights from JFK to LAX tomorrow"]
                }
            ]
        )
        # Create the A2AServer instance
        a2a_server = A2AServer(
            agent_card=agent_card,
            task_manager=task_manager,
            host=listen_host,
            port=port
        )
        # Configure Uvicorn programmatically
        config = uvicorn.Config(
            app=a2a_server.app,  # Pass the Starlette app from A2AServer
            host=listen_host,
            port=port,
            log_level="info"
        )
        server = uvicorn.Server(config)
        ...
        ...

Let's start the flight search app.

Step 5: Setting up the Hotel Search agent using LangChain as an MCP client with OpenAI (GPT-4o)

├── hotel_search_app/                # Hotel Search Agent (Agent 2)
│   ├── __init__.py
│   ├── a2a_agent_card.json          # Agent capabilities declaration
│   ├── langchain_agent.py           # LangChain agent implementation
│   ├── langchain_server.py          # Server entry point
│   └── static/                      # Static files
│       └── .well-known/             # Agent discovery directory
│           └── agent.json           # Standardized agent discovery file

LangChain agent implementation as an MCP client with an OpenAI LLM

import os

from langchain_openai import ChatOpenAI
from langchain.agents import AgentExecutor, create_openai_functions_agent
from langchain.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_mcp_adapters.client import MultiServerMCPClient

# MCP client configuration
MCP_CONFIG = {
    "hotel_search": {
        "command": "mcp-hotel-search",
        "args": ["--connection_type", "stdio"],
        "transport": "stdio",
        "env": {"SERP_API_KEY": os.getenv("SERP_API_KEY")},
    }
}

class HotelSearchAgent:
    """Hotel search agent using LangChain MCP adapters."""

    def __init__(self):
        self.llm = ChatOpenAI(model="gpt-4o", temperature=0)

    def _create_prompt(self):
        """Create a prompt template with our custom system message."""
        system_message = """You are a helpful hotel search assistant.
        """
        return ChatPromptTemplate.from_messages([
            ("system", system_message),
            ("human", "{input}"),
            MessagesPlaceholder(variable_name="agent_scratchpad"),
        ])
    ..
    ..
    async def process_query(self, query):
        ...
        # Create MCP client for this query
        async with MultiServerMCPClient(MCP_CONFIG) as client:
            # Get tools from this client instance
            tools = client.get_tools()
            # Create a prompt
            prompt = self._create_prompt()
            # Create an agent with these tools
            agent = create_openai_functions_agent(
                llm=self.llm,
                tools=tools,
                prompt=prompt
            )
            # Create an executor with these tools
            executor = AgentExecutor(
                agent=agent,
                tools=tools,
                verbose=True,
                handle_parsing_errors=True,
            )

Create the A2AServer instance using the common A2A server components and types, similar to Step 4.

Let us start the hotel search app (LangChain server), the server entry point that invokes the MCP server.

Step 6: Implement the Host Agent as an orchestrator between agents using the A2A protocol

The itinerary planner is the central component of the travel planning system above, using the A2A protocol to communicate with the flight and hotel services.

├── itinerary_planner/               # Travel Planner Host Agent (Agent 3)
│   ├── __init__.py
│   ├── a2a/                         # A2A client implementations
│   │   ├── __init__.py
│   │   └── a2a_client.py            # Clients for flight and hotel agents
│   ├── a2a_agent_card.json          # Agent capabilities declaration
│   ├── event_log.py                 # Event logging utilities
│   ├── itinerary_agent.py           # Main planner implementation
│   ├── itinerary_server.py          # FastAPI server
│   ├── run_all.py                   # Script to run all components
│   ├── static/                      # Static files
│   │   └── .well-known/             # Agent discovery directory
│   │       └── agent.json           # Standardized agent discovery file
│   └── streamlit_ui.py              # Main user interface

A2A protocol implementation using the Flight and Hotel API URLs

- Contains client code to communicate with other services
- Implements the Agent-to-Agent protocol
- Has modules for calling the flight and hotel search services

# Base URLs for the A2A compliant agent APIs
FLIGHT_SEARCH_API_URL = os.getenv("FLIGHT_SEARCH_API_URL", "http://localhost:8000")
HOTEL_SEARCH_API_URL = os.getenv("HOTEL_SEARCH_API_URL", "http://localhost:8003")

class A2AClientBase:
    """Base client for communicating with A2A-compliant agents via the root endpoint."""

    async def send_a2a_task(self, user_message: str, task_id: Optional[str] = None, agent_type: str = "generic") -> Dict[str, Any]:
        ...
        ....
        # Construct the JSON-RPC payload with the A2A method and corrected params structure
        payload = {
            "jsonrpc": "2.0",
            "method": "tasks/send",
            "params": {
                "id": task_id,
                "taskId": task_id,
                "message": {
                    "role": "user",
                    "parts": [
                        {"type": "text", "text": user_message}
                    ]
                }
            },
            "id": task_id
        }
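Once the payload has been posted, the client still has to tell a successful reply from a failure. In JSON-RPC 2.0 a response carries either a `result` member or an `error` object with `code` and `message`. The helper below is a hedged sketch of that check; `A2AError` and `unwrap_result` are hypothetical names and are not part of the common A2A sample modules.

```python
class A2AError(Exception):
    """Raised when an agent answers with a JSON-RPC error object."""


def unwrap_result(response: dict) -> dict:
    """Return the 'result' member of a JSON-RPC response or raise A2AError."""
    if "error" in response:
        err = response["error"]
        raise A2AError(f"{err.get('code')}: {err.get('message')}")
    if "result" not in response:
        raise A2AError("response has neither 'result' nor 'error'")
    return response["result"]


ok_response = {
    "jsonrpc": "2.0",
    "id": "12345",
    "result": {"taskId": "20250615123456", "state": "completed"},
}
print(unwrap_result(ok_response)["state"])
```

Raising a dedicated exception lets the orchestrator decide per agent whether to retry, fall back, or report the failure to the user.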

Agent Card: a JSON metadata file that describes an agent's capabilities, endpoints, authentication requirements, and skills. It is used for service discovery in the A2A protocol.

{
  "name": "Travel Itinerary Planner",
  "displayName": "Travel Itinerary Planner",
  "description": "An agent that coordinates flight and hotel information to create comprehensive travel itineraries",
  "version": "1.0.0",
  "contact": "code.aicloudlab@gmail.com",
  "endpointUrl": "http://localhost:8005",
  "authentication": {
    "type": "none"
  },
  "capabilities": [
    "streaming"
  ],
  "skills": [
    {
      "name": "createItinerary",
      "description": "Create a comprehensive travel itinerary including flights and accommodations",
      "inputs": [
        {
          "name": "origin",
          "type": "string",
          "description": "Origin city or airport code"
        },
        {
          "name": "destination",
          "type": "string",
          "description": "Destination city or area"
        },
        {
          "name": "departureDate",
          "type": "string",
          "description": "Departure date in YYYY-MM-DD format"
        },
        {
          "name": "returnDate",
          "type": "string",
          "description": "Return date in YYYY-MM-DD format (optional)"
        },
        {
          "name": "travelers",
          "type": "integer",
          "description": "Number of travelers"
        },
        {
          "name": "preferences",
          "type": "object",
          "description": "Additional preferences like budget, hotel amenities, etc."
        }
      ],
      "outputs": [
        {
          "name": "itinerary",
          "type": "object",
          "description": "Complete travel itinerary with flights, hotels, and schedule"
        }
      ]
    }
  ]
}

Itinerary agent: the central host agent of the system, which orchestrates communication with the flight and hotel search services and uses a language model to parse natural-language requests.

import google.generativeai as genai  # Use direct SDK
..
..
from itinerary_planner.a2a.a2a_client import FlightSearchClient, HotelSearchClient

# Configure the Google Generative AI SDK
genai.configure(api_key=api_key)

class ItineraryPlanner:
    """A planner that coordinates between flight and hotel search agents to create itineraries using the google.generativeai SDK."""

    def __init__(self):
        """Initialize the itinerary planner."""
        logger.info("Initializing Itinerary Planner with google.generativeai SDK")
        self.flight_client = FlightSearchClient()
        self.hotel_client = HotelSearchClient()
        # Create the Gemini model instance using the SDK
        self.model = genai.GenerativeModel(
            model_name="gemini-2.0-flash",
        )
    ..
    ..

Itinerary Server: a FastAPI server that exposes endpoints for the itinerary planner, handles incoming HTTP requests, and routes them to the itinerary agent.

streamlit_ui: the user interface, built with Streamlit, which provides forms for travel planning and displays results in a user-friendly format.

In separate terminals, start each server agent:

# Start Flight Search Agent - 1 Port 8000
python -m flight_search_app.main
# Start Hotel Search Agent - 2 Port 8003
python -m hotel_search_app.langchain_server
# Start Itinerary Host Agent - Port 8005
python -m itinerary_planner.itinerary_server
# Start frontend UI - Port 8501
streamlit run itinerary_planner/streamlit_ui.py

Flight Search logs with the Task ID initiated from the Host Agent

Hotel Search logs with tasks initiated from the Host Agent

Itinerary Planner: Host Agent with all requests and responses

Agent Event Logs

This demo implements the core principles of the Google A2A protocol, enabling agents to communicate in a structured, interoperable way. The following components were fully implemented in the demo above:

- Agent Cards: All agents expose .well-known/agent.json files for discovery.
- A2A Servers: Each agent runs as an A2A server: flight_search_app, hotel_search_app, and itinerary_planner.
- A2A Clients: The itinerary_planner contains dedicated A2A clients for the flight and hotel agents.
- Task Management: Each request/response is modeled as an A2A Task with states like submitted, working, and completed.
- Message Structure: Uses the standard JSON-RPC format with role (user/agent) and parts (primarily TextPart).

The components below were not implemented in our demo but can be added for enterprise-grade agents:

- Streaming (SSE): A2A supports Server-Sent Events for long-running tasks, but our demo uses simple request/response, which takes less than 3–5 seconds.
- Push Notifications: The webhook update mechanism is not used yet.
- Complex Parts: Only TextPart is used. Support for DataPart, FilePart, etc. can be added for richer payloads.
- Advanced Discovery: Basic .well-known/agent.json is implemented, but there are no advanced authentication, JWKS, or authorization flows.

In this article, we explored how to build a fully functional multi-agent system using reusable A2A components, ADK, LangChain, and MCP in a travel planning scenario. By combining these open-source tools and frameworks, our agents were able to:

- Discover and invoke each other dynamically using A2A
- Connect to external APIs in a model-friendly way via MCP
- Leverage modern frameworks like ADK and LangChain
- Communicate asynchronously with clear task lifecycles and structured results

The same principles can be extended to more domains like retail, customer service automation, operations workflows, and AI-assisted enterprise tooling.