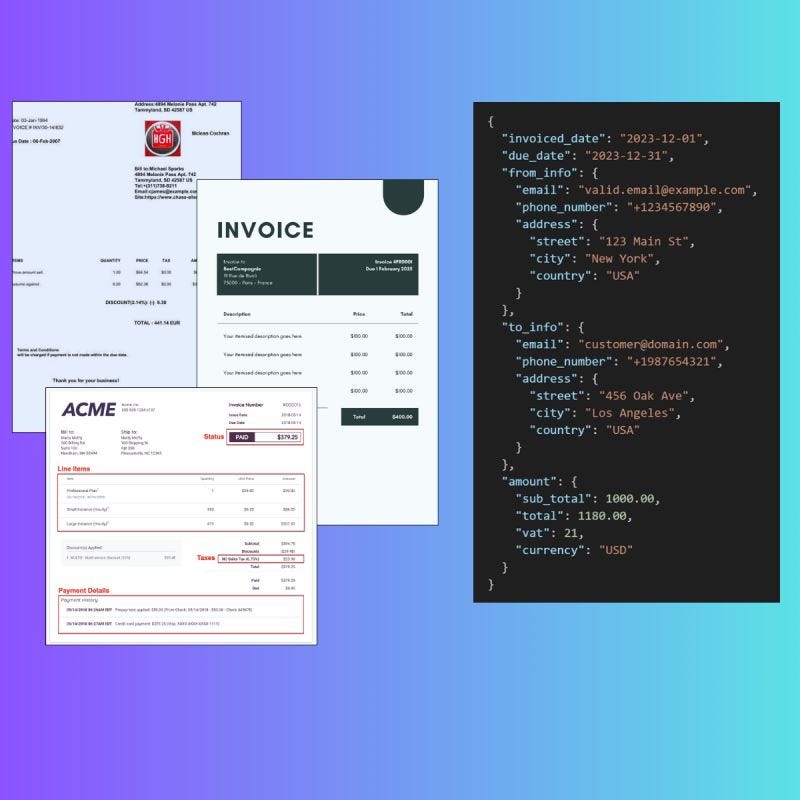

Since Qwen-2.5-VL has already been fine-tuned to return structured outputs, it is a great candidate for parsing documents.

vLLM to serve the VLM

vLLM is an LLM serving tool that drastically accelerates LLM inference. It relies on PagedAttention, a mechanism that stores the KV cache in fixed-size blocks allocated on demand, much like pages in virtual memory, so that little memory is wasted and many requests can be processed at the same time.
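To make the idea behind PagedAttention concrete, here is a toy, pure-Python model of paged KV-cache allocation. It is a simplified sketch, not vLLM's implementation: `BlockAllocator`, `BLOCK_SIZE` and the method names are hypothetical. Each sequence owns a block table listing its physical blocks, and a new block is taken from the free pool only when the current one is full, so at most one partially filled block per sequence is wasted.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 16  # tokens per KV-cache block (hypothetical value)


@dataclass
class BlockAllocator:
    """Toy paged KV-cache allocator: fixed-size blocks handed out on demand."""

    num_blocks: int
    free: list[int] = field(default_factory=list)
    tables: dict[str, list[int]] = field(default_factory=dict)  # seq -> physical blocks
    lengths: dict[str, int] = field(default_factory=dict)  # seq -> tokens stored

    def __post_init__(self) -> None:
        self.free = list(range(self.num_blocks))

    def append_token(self, seq_id: str) -> None:
        """Reserve room for one more token, allocating a block only when needed."""
        n = self.lengths.get(seq_id, 0)
        if n % BLOCK_SIZE == 0:  # current block is full (or the sequence is new)
            if not self.free:
                raise MemoryError("KV cache exhausted")
            self.tables.setdefault(seq_id, []).append(self.free.pop())
        self.lengths[seq_id] = n + 1

    def release(self, seq_id: str) -> None:
        """Return all blocks of a finished sequence to the free pool."""
        self.free.extend(self.tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


allocator = BlockAllocator(num_blocks=8)
for _ in range(20):  # 20 tokens -> 2 blocks of 16
    allocator.append_token("request-a")
for _ in range(5):  # 5 tokens -> 1 block
    allocator.append_token("request-b")
print(len(allocator.tables["request-a"]), len(allocator.tables["request-b"]), len(allocator.free))
# → 2 1 5
allocator.release("request-a")  # request-a finished: its 2 blocks go back to the pool
```

Because memory is committed block by block instead of being reserved up front for the maximum sequence length, more requests can share the same VRAM, and finished requests hand their blocks straight back to the pool.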

vLLM supports not only text-only models but also Vision Language Models, along with structured output generation, which makes it the perfect fit.

The best part: it's directly integrated with the Transformers library. This allows us to load any supported model from the Hugging Face platform, and vLLM automatically loads the model weights into GPU VRAM.

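There are 2 deployment configurations we can use with vLLM: online inference, where vLLM runs as a server answering requests, and offline inference, where we call vLLM directly from Python on a batch of inputs.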

In this article, we'll only focus on offline inference. We'll create a Python module that uses vLLM to process images into structured outputs such as JSON.

AWS Batch to run the inference

The feature we'll develop will be used as a step in an ETL (Extract, Transform, Load) pipeline. On a regular schedule, let's say once a day, the model will extract data from thousands of images, and the run stops once it is complete.

This is the perfect use case for batch deployment.

We’ll use AWS Batch to handle batch jobs in the cloud.

It is composed of the following building blocks:

🏗️ Jobs

The smallest entities. Based on Docker containers, a Job runs a module until its completion or failure.

Compared to serving, which runs computations 24/7, it's a cost-effective solution for time-bounded tasks.

🏗️ Job Definition

The job template. It defines the resources allocated to the task, the location of the Docker image, the number of retries, priorities, and the maximum run time.

Once defined, you can create and run as many jobs as you need.
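To illustrate what a Job Definition carries, the sketch below builds keyword arguments for boto3's `batch.register_job_definition` call. This article provisions the infrastructure with Terraform, so treat it only as an alternative view of the same settings; the definition name, image URI, role ARNs, and the resource and timeout values are hypothetical placeholders.

```python
def build_job_definition(image_uri: str, job_role_arn: str, execution_role_arn: str) -> dict:
    """Keyword arguments for boto3 `batch.register_job_definition` (values are placeholders)."""
    return {
        "jobDefinitionName": "demo-invoice-parser",  # hypothetical name
        "type": "container",
        "containerProperties": {
            "image": image_uri,  # location of the Docker image, e.g. in ECR
            "jobRoleArn": job_role_arn,  # role assumed by the running container
            "executionRoleArn": execution_role_arn,  # role used to pull the image and write logs
            "resourceRequirements": [  # resources allocated to the job
                {"type": "VCPU", "value": "4"},
                {"type": "MEMORY", "value": "14000"},  # MiB, must fit on the instance
                {"type": "GPU", "value": "1"},
            ],
        },
        "retryStrategy": {"attempts": 3},  # number of attempts before failing
        "timeout": {"attemptDurationSeconds": 3600},  # maximum run time per attempt
    }


job_definition = build_job_definition(
    image_uri="123456789012.dkr.ecr.eu-central-1.amazonaws.com/demo-invoice-structured-outputs:latest",
    job_role_arn="arn:aws:iam::123456789012:role/demo-batch-job-role",
    execution_role_arn="arn:aws:iam::123456789012:role/ecs_task_execution_role",
)
# With AWS credentials configured:
# boto3.client("batch").register_job_definition(**job_definition)
```

The `jobRoleArn` / `executionRoleArn` split mirrors the job role and the ECS task execution role that are created with Terraform in the IAM section.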

🏗️ Job queue

Responsible for orchestrating and prioritizing jobs based on resource availability and job priorities.

You can create multiple queues: high-priority ones for time-sensitive jobs, for example, or low-priority ones that can run on the cheapest resources.

Resources are allocated to each queue through the Compute Environment.
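The prioritization can be pictured with a toy scheduler. This is a simplified model, not AWS Batch's actual algorithm (which also accounts for whether a job fits on the available resources): among queues sharing a compute environment, the queue with the higher `priority` integer is served first, as in AWS Batch, and jobs are otherwise taken in the order they were added. Queue and job names are hypothetical.

```python
import heapq
from itertools import count


def dispatch_order(queues: dict[str, tuple[int, list[str]]]) -> list[str]:
    """Return job names in dispatch order.

    `queues` maps a queue name to (priority, jobs in submission order).
    Higher priority wins; ties are broken by the order jobs were added.
    """
    heap: list[tuple[int, int, str]] = []
    seq = count()  # global counter used as a tie-breaker
    for priority, jobs in queues.values():
        for job in jobs:
            # heapq is a min-heap, so negate the priority
            heapq.heappush(heap, (-priority, next(seq), job))
    return [heapq.heappop(heap)[2] for _ in range(len(heap))]


order = dispatch_order({
    "low-priority": (1, ["monthly-report"]),
    "high-priority": (10, ["invoice-batch-1", "invoice-batch-2"]),
})
print(order)  # ['invoice-batch-1', 'invoice-batch-2', 'monthly-report']
```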

🏗️ Compute Environment

It defines the resources available to all your job queues: GPUs for heavy computation tasks such as LLM training, or Spot Instances for fault-tolerant tasks.

The job queue automatically allocates the right amount of resources based on the Job Definition requirements.

AWS Batch is a powerful AWS service to run any type of job on EC2 resources, both CPU and GPU instances. You only pay for the running time: once the job is done, AWS Batch automatically scales the compute environment back down. This makes it the perfect candidate for our use case.

The VLM deployment will look like this:

- Thanks to its ability to generate structured output out of the box, we'll use Qwen-2.5-VL to perform data extraction from documents.

- To accelerate inference, we use vLLM to handle large batch inferences. vLLM efficiently handles the large number of tokens coming from instructions and embedded images thanks to the PagedAttention mechanism.

- The scripts and modules containing the vLLM offline inference will be packaged with uv and containerized with Docker. This makes the module deployable almost anywhere: AWS Batch, GCP Batch, Kubernetes.

- The Docker image will be stored on AWS ECR to keep the feature within the AWS ecosystem.

- We use AWS Batch to manage the job queues. We'll orchestrate the instances with EC2 instead of Fargate, because Fargate doesn't offer GPU resources, which are essential for running LLMs. The infrastructure will be managed and deployed with Terraform.

- We'll use AWS S3 as our storage solution to download documents and upload the extracted data as a dataset. The data can then be used in any ETL pipeline, for example to feed analytics dashboards.

Let’s begin!

The job module

We start by writing the job script.

The process goes as follows:

1. Documents, stored in an S3 bucket as images, are downloaded using the AWS SDK. We also identify each document by its S3 path, which is unique. This will help us match each data output with its respective document.

```python
# llm/parser/main.py
import logging
from io import BytesIO

import boto3
from botocore.exceptions import ClientError
from PIL import Image

LOGGER = logging.getLogger(__name__)


def load_images(
    s3_bucket: str,
    s3_images_folder_uri: str,
) -> tuple[list[str], list[Image.Image]]:
    try:
        s3 = boto3.client("s3")
        # Note: a single list_objects_v2 call returns at most 1,000 keys
        response = s3.list_objects_v2(Bucket=s3_bucket, Prefix=s3_images_folder_uri)
        images: list[Image.Image] = []
        filenames: list[str] = []
        # "Contents" is absent when no object matches the prefix
        for obj in response.get("Contents", []):
            key = obj["Key"]
            if key.endswith("/"):  # skip "folder" placeholder objects
                continue
            filenames.append(key)  # the S3 path doubles as a unique document ID
            obj_response = s3.get_object(Bucket=s3_bucket, Key=key)
            image_data = obj_response["Body"].read()
            images.append(Image.open(BytesIO(image_data)))
        return filenames, images
    except ClientError as e:
        LOGGER.error("Issue when loading images from S3: %s.", str(e))
        raise
    except Exception as e:
        LOGGER.error("Something went wrong when loading the images from AWS S3: %s", e)
        raise
```

2. We use vLLM to load the model from the Hugging Face platform and prepare it for the GPU.

It also comes with useful features, such as guided decoding, which can be configured through SamplingParams.

Under the hood, vLLM uses the xgrammar backend to constrain the generated text to a valid JSON format.

By providing the JSON schema of a Pydantic model describing the expected output, we can guide the generation into the right data format.
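Guided decoding works by masking, at every generation step, the tokens that cannot lead to an output accepted by the grammar. xgrammar does this efficiently for full JSON Schema grammars; the toy below only illustrates the principle with a tiny vocabulary and a "grammar" reduced to a fixed set of accepted strings. The vocabulary, the scores and both function names are hypothetical.

```python
def allowed_next_tokens(prefix: str, vocab: list[str], accepted: set[str]) -> list[str]:
    """Tokens that keep `prefix` a prefix of at least one accepted string."""
    return [t for t in vocab if any(s.startswith(prefix + t) for s in accepted)]


def constrained_greedy_decode(scores: dict[str, float], vocab: list[str], accepted: set[str]) -> str:
    """Greedy decoding where disallowed tokens are masked out before picking the best one."""
    text = ""
    while text not in accepted:
        candidates = allowed_next_tokens(text, vocab, accepted)
        # Masking: only grammar-compatible tokens compete, whatever their raw score
        text += max(candidates, key=lambda t: scores[t])
    return text


VOCAB = ['{"currency": ', '"USD"', '"EUR"', "USD", "}", "Sure! "]
# Raw model preferences: unconstrained, the chatty "Sure! " would be picked first
SCORES = {'{"currency": ': 0.3, '"USD"': 0.2, '"EUR"': 0.1, "USD": 0.5, "}": 0.1, "Sure! ": 0.9}
ACCEPTED = {'{"currency": "USD"}', '{"currency": "EUR"}'}

print(constrained_greedy_decode(SCORES, VOCAB, ACCEPTED))  # {"currency": "USD"}
```

A real engine applies the same idea to logits over the whole tokenizer vocabulary, which is why the output is valid by construction instead of being fixed up afterwards.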

```python
# llm/parser/schemas.py
import re

from pydantic import BaseModel, ValidationInfo, field_validator


class Address(BaseModel):
    street: str | None = None
    city: str | None = None
    country: str | None = None


class Info(BaseModel):
    email: str | None = None
    phone_number: str | None = None
    address: Address


class Amount(BaseModel):
    sub_total: float | None = None
    total: float | None = None
    vat: float | None = None
    currency: str | None = None


class Invoice(BaseModel):
    invoiced_date: str | None = None
    due_date: str | None = None
    from_info: Info
    to_info: Info
    amount: Amount
```

```python
# llm/parser/main.py
from pydantic import BaseModel
from vllm import LLM, SamplingParams
from vllm.sampling_params import GuidedDecodingParams  # may differ in other vLLM versions

from llm.settings import settings


def load_model(
    model_name: str, schema: type[BaseModel] | None
) -> tuple[LLM, SamplingParams]:
    llm = LLM(
        model=model_name,
        gpu_memory_utilization=settings.gpu_memory_utilisation,
        max_num_seqs=settings.max_num_seqs,
        max_model_len=settings.max_model_len,
        # Bounds on the image resolution (in pixels) seen by the Qwen2.5-VL processor
        mm_processor_kwargs={"min_pixels": 28 * 28, "max_pixels": 1280 * 28 * 28},
        disable_mm_preprocessor_cache=True,
    )
    sampling_params = SamplingParams(
        # Constrain generation to the schema's JSON Schema when one is provided
        guided_decoding=GuidedDecodingParams(json=schema.model_json_schema())
        if schema
        else None,
        max_tokens=settings.max_tokens,
        temperature=settings.temperature,
    )
    return llm, sampling_params
```
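The `mm_processor_kwargs` bound the image resolution, which in turn sets how many visual tokens each page costs. In Qwen2.5-VL, each visual token covers a 28×28 pixel area (14-pixel patches merged 2×2), so `max_pixels = 1280 * 28 * 28` caps an image at roughly 1,280 tokens. The function below is modeled on the `smart_resize` helper from Qwen's `qwen-vl-utils` package; treat it as an approximation of what the processor does, not as a reference implementation.

```python
import math

FACTOR = 28  # pixels per visual-token side (14-px patches merged 2x2)


def smart_resize(height: int, width: int, min_pixels: int = 28 * 28,
                 max_pixels: int = 1280 * 28 * 28) -> tuple[int, int]:
    """Resize to multiples of FACTOR, keeping height * width within [min_pixels, max_pixels]."""
    h = max(FACTOR, round(height / FACTOR) * FACTOR)
    w = max(FACTOR, round(width / FACTOR) * FACTOR)
    if h * w > max_pixels:
        # Shrink both sides by the same ratio, rounding down to stay under the cap
        beta = math.sqrt(height * width / max_pixels)
        h = max(FACTOR, math.floor(height / beta / FACTOR) * FACTOR)
        w = max(FACTOR, math.floor(width / beta / FACTOR) * FACTOR)
    elif h * w < min_pixels:
        # Enlarge both sides by the same ratio, rounding up to reach the minimum
        beta = math.sqrt(min_pixels / (height * width))
        h = math.ceil(height * beta / FACTOR) * FACTOR
        w = math.ceil(width * beta / FACTOR) * FACTOR
    return h, w


def visual_tokens(height: int, width: int) -> int:
    """Number of visual tokens for an image after resizing."""
    h, w = smart_resize(height, width)
    return (h // FACTOR) * (w // FACTOR)


print(visual_tokens(280, 280))    # 100: already within bounds
print(visual_tokens(3508, 2480))  # A4 page scanned at 300 dpi, capped below 1,280
```

This also explains why `max_model_len = 4096` leaves room for roughly 1,280 image tokens, the instruction, and up to `max_tokens = 2048` generated tokens.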

3. Once the model and images are loaded in memory, we can run the inference with vLLM.

Since we use guided decoding instead of vanilla inference, the process takes a bit more time in exchange for better data quality.

To help the text generation, we also provide an instruction guiding the LLM to return the valid schema. We need to fit the instruction into the prompt template used during Qwen-2.5-VL fine-tuning.

```python
# llm/parser/prompts.py
INSTRUCTION = """
Extract the data from this invoice.
Return your response as a valid JSON object. Here's an example of the expected JSON output:

{
    "invoiced_date": "09/04/2025", # format DD/MM/YYYY
    "due_date": "09/04/2025", # format DD/MM/YYYY
    "from_info": {
        "email": "jeremya@gmail.com",
        "phone_number": "+33645789564",
        "address": {
            "street": "Chemin des boulangers",
            "city": "Bourges",
            "country": "FR" # 2-letter country code
        }
    },
    "to_info": {
        "email": "igordosgor@gmail.com",
        "phone_number": "+33645789564",
        "address": {
            "street": "Chemin des boulangers",
            "city": "New York",
            "country": "US"
        }
    },
    "amount": {
        "sub_total": 1450.4, # Before taxes
        "total": 1740.48, # After taxes
        "vat": 0.2, # Percentage
        "currency": "USD" # 3-letter code (USD, EUR, ...)
    }
}
""".strip()

# Chat template used by Qwen-2.5-VL-Instruct, with a single image placeholder
QWEN_25_VL_INSTRUCT_PROMPT = (
    "<|im_start|>system\nYou are a helpful assistant.<|im_end|>\n"
    "<|im_start|>user\n<|vision_start|><|image_pad|><|vision_end|>"
    "{instruction}<|im_end|>\n"
    "<|im_start|>assistant\n"
)
```

```python
# llm/parser/main.py
from PIL import Image
from vllm import LLM, SamplingParams

from llm.parser import prompts
from llm.parser.schemas import Invoice
from llm.settings import settings


def run_inference(
    model: LLM, sampling_params: SamplingParams, images: list[Image.Image], prompt: str
) -> list[str]:
    """Generate text output for each image."""
    inputs = [
        {
            "prompt": prompt,
            "multi_modal_data": {"image": image},
        }
        for image in images
    ]
    # vLLM schedules and batches all requests; outputs keep the input order
    outputs = model.generate(inputs, sampling_params=sampling_params)
    return [output.outputs[0].text for output in outputs]


if __name__ == "__main__":
    filenames, images = load_images(
        s3_bucket=settings.s3_bucket,
        s3_images_folder_uri=settings.s3_preprocessed_images_dir_prefix,
    )
    model, sampling_params = load_model(settings.model_name, schema=Invoice)
    outputs = run_inference(
        model=model,
        sampling_params=sampling_params,
        images=images,
        prompt=prompts.QWEN_25_VL_INSTRUCT_PROMPT.format(
            instruction=prompts.INSTRUCTION
        ),
    )
```

4. Once the inference is over, we extract and validate the JSON from the generated text. Qwen-2.5-VL coupled with xgrammar already does a great job of avoiding any extra text!

To do so, we simply use Python's json library to decode the JSON:

```python
# llm/parser/main.py
import json
import logging
from typing import Any

LOGGER = logging.getLogger(__name__)


def extract_structured_outputs(outputs: list[str]) -> list[dict[str, Any]]:
    json_outputs: list[dict[str, Any]] = []
    for output in outputs:
        # Keep the text between the first "{" and the last "}"
        start = output.find("{")
        end = output.rfind("}") + 1  # +1 to include the closing brace
        json_str = output[start:end]
        try:
            json_outputs.append(json.loads(json_str))
        except json.JSONDecodeError as e:
            LOGGER.error("Issue with decoding JSON for LLM output: %s", e)
            # An empty dict keeps outputs aligned with their filenames
            json_outputs.append({})
    return json_outputs
```
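Slicing from the first `{` to the last `}` works when the model returns a single object, but fails as soon as a stray brace appears after the JSON. An alternative sketch uses the standard library's `json.JSONDecoder.raw_decode`, which parses one complete value starting at a given index and ignores whatever follows; the function name `first_json_object` is hypothetical.

```python
import json
from typing import Any

_DECODER = json.JSONDecoder()


def first_json_object(text: str) -> dict[str, Any]:
    """Decode the first JSON object found in `text`, or return {} if there is none."""
    start = text.find("{")
    while start != -1:
        try:
            obj, _end = _DECODER.raw_decode(text, start)
            if isinstance(obj, dict):
                return obj
        except json.JSONDecodeError:
            pass
        # No valid object starts at this brace: try the next one
        start = text.find("{", start + 1)
    return {}


print(first_json_object('Result: {"total": 1740.48} (see {notes})'))
# {'total': 1740.48}
```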

We also validate the returned JSON with Pydantic.

Here, Pydantic becomes very useful since it lets the module return a valid schema whatever the generation. LLMs tend to “hallucinate”, which could be damaging in a production application.

However, Pydantic doesn't come with an out-of-the-box “return default_value if invalid” option.

For this, we'll create our own validation with the @field_validator decorator. Check the Pydantic documentation to learn more about this feature.

```python
# llm/parser/schemas.py (Info model updated with a validator)
class Info(BaseModel):
    email: str | None = None
    phone_number: str | None = None
    address: Address

    @field_validator("email", mode="before")
    @classmethod
    def validate_email(cls, email: str | None, info: ValidationInfo) -> str | None:
        if not email:
            return None
        email_pattern = r"^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$"
        if not isinstance(email, str) or not re.match(email_pattern, email):
            # Fall back to the field default instead of raising a ValidationError
            return cls.model_fields[info.field_name].get_default()
        return email
```

5. Finally, the data is converted into a dataset, with a unique identifier for each element (we picked the S3 path of each document).
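The code for this step isn't shown here; one possible sketch, assuming a JSON Lines dataset where each record pairs the document's S3 key with its extracted fields, is below. The `to_jsonl` name and the `document_id`/`data` keys are hypothetical; the resulting string could then be uploaded under `s3_processed_dataset_prefix` with boto3's `put_object`.

```python
import json
from typing import Any


def to_jsonl(filenames: list[str], records: list[dict[str, Any]]) -> str:
    """One JSON object per line, keyed by the document's unique S3 path."""
    if len(filenames) != len(records):
        raise ValueError("Each document needs exactly one extracted record")
    return "".join(
        json.dumps({"document_id": name, "data": record}, ensure_ascii=False) + "\n"
        for name, record in zip(filenames, records)
    )


dataset = to_jsonl(
    ["invoices/a.png", "invoices/b.png"],
    [{"amount": {"total": 1740.48}}, {}],  # {} = an output that failed to decode
)
print(dataset)
```

Keeping failed extractions as empty records, rather than dropping them, makes it easy to spot which documents need a second look downstream.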

We use uv to manage the Python dependencies and package our application. Additionally, in pyproject.toml, we add the CLI command run-batch-job to run the job script.

```toml
# pyproject.toml
[project.scripts]
run-batch-job = "llm.__main__:main"
```

Once the package is built, we just need to run:

```shell
uv run run-batch-job
```

For the application settings, such as the S3 bucket name and the S3 prefixes, we use pydantic-settings instead of a basic Python class, because Pydantic automatically validates the job configuration, including the values read from environment variables.

```python
# llm/settings.py
from typing import Annotated

from pydantic import Field
from pydantic_settings import BaseSettings, SettingsConfigDict


class Settings(BaseSettings):
    model_config = SettingsConfigDict(
        env_file=".env", extra="ignore"
    )  # extra="ignore" for AWS credentials

    # Model config
    model_name: str = "Qwen/Qwen2.5-VL-3B-Instruct"
    gpu_memory_utilisation: Annotated[float, Field(gt=0, le=1)] = 0.9
    max_num_seqs: Annotated[int, Field(gt=0)] = 2
    max_model_len: Annotated[int, Field(multiple_of=8)] = 4096
    max_tokens: Annotated[int, Field(multiple_of=8)] = 2048
    temperature: Annotated[float, Field(ge=0, le=1)] = 0

    # AWS S3 (no defaults: must be provided by the environment)
    s3_bucket: str
    s3_preprocessed_images_dir_prefix: str
    s3_processed_dataset_prefix: str


settings = Settings()
```

The S3 settings are read at runtime, meaning we can modify them without rebuilding the package!
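For instance, AWS Batch lets you override a container's environment variables when submitting a job, and pydantic-settings would pick them up, since it matches field names case-insensitively by default. Below is a sketch of the keyword arguments for boto3's `batch.submit_job`; the job, queue and definition names and the prefix values are hypothetical.

```python
def build_submit_job_kwargs(s3_bucket: str, images_prefix: str, dataset_prefix: str) -> dict:
    """Keyword arguments for boto3 `batch.submit_job`, overriding the S3 settings at runtime."""
    overrides = {
        # Upper-case names map onto the Settings fields (case-insensitive by default)
        "S3_BUCKET": s3_bucket,
        "S3_PREPROCESSED_IMAGES_DIR_PREFIX": images_prefix,
        "S3_PROCESSED_DATASET_PREFIX": dataset_prefix,
    }
    return {
        "jobName": "invoice-extraction",  # hypothetical names
        "jobQueue": "demo-job-queue",
        "jobDefinition": "demo-job-definition",
        "containerOverrides": {
            "environment": [{"name": k, "value": v} for k, v in overrides.items()]
        },
    }


kwargs = build_submit_job_kwargs("demo-bucket", "invoices/2025-04-09/", "datasets/2025-04-09/")
# With AWS credentials configured: boto3.client("batch").submit_job(**kwargs)
```

Each daily run can then point at a new input prefix without touching the Docker image.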

Containerize the module with Docker

We use the official multi-stage build from the uv documentation.

(The Docker image is ~9 GB because of the PyTorch and CUDA libraries!)

```dockerfile
# An example using multi-stage image builds to create a final image without uv.
# First, build the application in the `/app` directory.
# See `Dockerfile` for details.
FROM ghcr.io/astral-sh/uv:python3.12-bookworm-slim AS builder
ENV UV_COMPILE_BYTECODE=1 UV_LINK_MODE=copy

# Disable Python downloads, because we want to use the system interpreter
# across both images. If using a managed Python version, it needs to be
# copied from the build image into the final image; see `standalone.Dockerfile`
# for an example.
ENV UV_PYTHON_DOWNLOADS=0

WORKDIR /app
# Install the dependencies first to benefit from Docker layer caching
RUN --mount=type=cache,target=/root/.cache/uv \
    --mount=type=bind,source=uv.lock,target=uv.lock \
    --mount=type=bind,source=pyproject.toml,target=pyproject.toml \
    uv sync --frozen --no-install-project --no-dev
ADD . /app
RUN --mount=type=cache,target=/root/.cache/uv \
    uv sync --frozen --no-dev

# Then, use a final image without uv
FROM python:3.12-slim-bookworm

# Create the `app` user referenced by --chown below
RUN groupadd --system app && useradd --system --gid app app

# Copy the application from the builder
COPY --from=builder --chown=app:app /app /app

# vLLM requires some basic compilation tools
RUN apt-get update && apt-get install -y --no-install-recommends build-essential \
    && rm -rf /var/lib/apt/lists/*

# Place executables in the environment at the front of the path
ENV PATH="/app/.venv/bin:$PATH"

# Executable package. Check pyproject.toml
CMD ["run-batch-job"]
```

Once built, we push the Docker image to ECR. We implement the logic directly in a Makefile to make the commands easy to run. This also helps document the project.

```makefile
AWS_REGION ?= eu-central-1
ECR_REPO_NAME ?= demo-invoice-structured-outputs
ECR_REGISTRY = ${ECR_ACCOUNT_ID}.dkr.ecr.${AWS_REGION}.amazonaws.com
ECR_URI = ${ECR_REGISTRY}/${ECR_REPO_NAME}

# Require ECR_ACCOUNT_ID (fail if not provided)
ifndef ECR_ACCOUNT_ID
$(error ECR_ACCOUNT_ID is not set. Please provide it, e.g., `make deploy ECR_ACCOUNT_ID=123456789012`)
endif

.PHONY: deploy ecr-login build tag push

deploy: ecr-login build tag push

ecr-login:
	@echo "[INFO] Logging in to ECR..."
	aws ecr get-login-password --region ${AWS_REGION} | docker login --username AWS --password-stdin ${ECR_REGISTRY}
	@echo "[INFO] ECR login successful"

build:
	@echo "[INFO] Building Docker image..."
	docker build -t ${ECR_REPO_NAME} .
	@echo "[INFO] Build completed"

tag:
	@echo "[INFO] Tagging image for ECR..."
	docker tag ${ECR_REPO_NAME}:latest ${ECR_URI}:latest
	@echo "[INFO] Tagging completed"

push:
	@echo "[INFO] Pushing image to ECR..."
	docker push ${ECR_URI}:latest
	@echo "[INFO] Push completed"
```

You can then run the following command:

```shell
make deploy ECR_ACCOUNT_ID=
```
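The Makefile composes the image URI from the account ID, the region and the repository name. A small Python equivalent can be handy if the same URI is needed elsewhere, for instance in a job definition. `ecr_image_uri` is a hypothetical helper, and the 12-digit check reflects the format of AWS account IDs.

```python
import re


def ecr_image_uri(account_id: str, region: str, repo: str, tag: str = "latest") -> str:
    """Build `<account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>`, as the tag/push targets do."""
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError("AWS account IDs are 12 digits")
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repo}:{tag}"


print(ecr_image_uri("123456789012", "eu-central-1", "demo-invoice-structured-outputs"))
# 123456789012.dkr.ecr.eu-central-1.amazonaws.com/demo-invoice-structured-outputs:latest
```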

AWS Batch offers 3 main ways to run jobs on AWS resources: EC2, Fargate, or EKS. Since Fargate doesn't support GPUs and EKS requires an existing running cluster, we opt for EC2 orchestration.

We'll walk through the deployment using Terraform, an Infrastructure as Code tool.

IAM Roles

First come the IAM permissions and roles.

In AWS, roles enable an AWS service to interact with other services. AWS Batch requires 4 roles: an ECS instance role, the Batch service role, an ECS task execution role, and a job role.

AWS Batch actually uses ECS under the hood to launch and run EC2 instances. Therefore, we need a role that the EC2 service can assume, with the managed ECS-for-EC2 policy attached, plus an instance profile wrapping this role, which is then assigned to the AWS Batch Compute Environment.

I know, why make it simple when we can make it complicated?

```hcl
# IAM Policy Document: lets EC2 instances assume the role
data "aws_iam_policy_document" "ec2_assume_role" {
  statement {
    effect = "Allow"

    principals {
      type        = "Service"
      identifiers = ["ec2.amazonaws.com"]
    }

    actions = ["sts:AssumeRole"]
  }
}

# IAM Role Creation
resource "aws_iam_role" "ecs_instance_role" {
  name               = "ecs_instance_role"
  assume_role_policy = data.aws_iam_policy_document.ec2_assume_role.json
}

# Policy Attachment
resource "aws_iam_role_policy_attachment" "ecs_instance_role" {
  role       = aws_iam_role.ecs_instance_role.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonEC2ContainerServiceforEC2Role"
}

# Instance Profile Creation. Used in the Batch Compute Environment
resource "aws_iam_instance_profile" "ecs_instance_role" {
  name = "ecs_instance_role"
  role = aws_iam_role.ecs_instance_role.name
}
```

Next comes the AWS Batch service role. Its managed policy allows Batch to access related services, including EC2, Auto Scaling, ECS (EC2 Container Service), CloudWatch Logs, and IAM.

It's the backbone of AWS Batch that connects the service to all other AWS services.

```hcl
data "aws_iam_policy_document" "batch_assume_role" {
  statement {
    effect = "Allow"

    principals {
      type        = "Service"
      identifiers = ["batch.amazonaws.com"]
    }

    actions = ["sts:AssumeRole"]
  }
}

resource "aws_iam_role" "batch_service_role" {
  name               = "aws_batch_service_role"
  assume_role_policy = data.aws_iam_policy_document.batch_assume_role.json
}

resource "aws_iam_role_policy_attachment" "batch_service_role" {
  role       = aws_iam_role.batch_service_role.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AWSBatchServiceRole"
}
```

Since AWS Batch runs jobs on an ECS cluster, it needs an ECS task execution role to access services on the job's behalf, such as pulling the container image from a private ECR repository.

```hcl
resource "aws_iam_role" "ecs_task_execution_role" {
  name = "ecs_task_execution_role"
  assume_role_policy = jsonencode({
    Version = "2012-10-17",
    Statement = [{
      Effect    = "Allow",
      Principal = { Service = "ecs-tasks.amazonaws.com" },
      Action    = "sts:AssumeRole"
    }]
  })
}

# Attach the standard ECS Task Execution policy
resource "aws_iam_role_policy_attachment" "ecs_task_execution_role" {
  role       = aws_iam_role.ecs_task_execution_role.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy"
}
```

Finally, if the job needs to call other AWS services, S3 in our case, we need to attach a role to the running container. This is a better and more secure way to handle AWS credentials than using environment variables.

Like the task execution role, this role is assumed by the `ecs-tasks.amazonaws.com` service principal, since ECS runs the container as a task.

We also limit the permissions to our bucket only, following the principle of least privilege.

```hcl
resource "aws_iam_role" "batch_job_role" {
  name = "demo-batch-job-role"
  assume_role_policy = jsonencode({
    Version = "2012-10-17",
    Statement = [{
      Effect    = "Allow",
      Principal = { Service = "ecs-tasks.amazonaws.com" },
      Action    = "sts:AssumeRole"
    }]
  })
}

# IAM Policy for S3 Access
resource "aws_iam_policy" "batch_s3_policy" {
  name        = "batch-s3-access"
  description = "Allow Batch jobs to access S3 buckets"
  policy = jsonencode({
    Version = "2012-10-17",
    Statement = [{
      Effect = "Allow",
      Action = [
        "s3:GetObject",
        "s3:PutObject",
        "s3:ListBucket"
      ],
      Resource = [
        "arn:aws:s3:::${var.s3_bucket}",  # the bucket itself (ListBucket)
        "arn:aws:s3:::${var.s3_bucket}/*" # the objects (Get/PutObject)
      ]
    }]
  })
}

# Attach the S3 policy to the job role
resource "aws_iam_role_policy_attachment" "batch_s3_policy" {
  role       = aws_iam_role.batch_job_role.name
  policy_arn = aws_iam_policy.batch_s3_policy.arn
}
```
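Why list both the bucket ARN and `bucket/*`? `s3:ListBucket` is authorized against the bucket itself, while `s3:GetObject` and `s3:PutObject` are authorized against object ARNs. The toy evaluator below is a deliberate simplification of IAM (Allow statements only, no conditions, no explicit denies, case-sensitive actions), using `fnmatchcase` to mimic IAM's `*` wildcard; `is_allowed` and the bucket name are hypothetical.

```python
from fnmatch import fnmatchcase

BUCKET = "demo-bucket"  # stands in for var.s3_bucket
STATEMENT = {
    "Effect": "Allow",
    "Action": ["s3:GetObject", "s3:PutObject", "s3:ListBucket"],
    "Resource": [f"arn:aws:s3:::{BUCKET}", f"arn:aws:s3:::{BUCKET}/*"],
}


def is_allowed(action: str, resource_arn: str, statement: dict = STATEMENT) -> bool:
    """Toy check: the action is listed and the ARN matches one Resource pattern."""
    return (
        statement["Effect"] == "Allow"
        and action in statement["Action"]
        and any(fnmatchcase(resource_arn, pattern) for pattern in statement["Resource"])
    )


print(is_allowed("s3:ListBucket", f"arn:aws:s3:::{BUCKET}"))                    # True
print(is_allowed("s3:GetObject", f"arn:aws:s3:::{BUCKET}/invoices/a.png"))      # True
print(is_allowed("s3:GetObject", "arn:aws:s3:::another-bucket/a.png"))          # False
print(is_allowed("s3:DeleteObject", f"arn:aws:s3:::{BUCKET}/invoices/a.png"))   # False
```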

Compute Environment

AWS Batch uses Compute Environments to launch AWS resources, either from EC2 or Fargate.

It also supports Spot Instances, which are cheaper than On-Demand resources but come with the risk of early termination. For batch jobs, this is an acceptable trade-off, as failed jobs can easily be retried.
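Retries are configured in the Job Definition's retry strategy. AWS Batch's `evaluateOnExit` rules let you retry only on specific failures; the pattern shown in the AWS documentation retries when the status reason starts with `Host EC2`, as happens when the underlying instance is terminated, and exits on anything else. The sketch below models how such rules could be matched in order with glob patterns. It is an illustration only: `decide` is a hypothetical helper, and the fallback when no rule matches is our assumption.

```python
from fnmatch import fnmatchcase

# Retry strategy in the shape used by AWS Batch job definitions
RETRY_STRATEGY = {
    "attempts": 3,
    "evaluateOnExit": [
        {"onStatusReason": "Host EC2*", "action": "RETRY"},  # instance was terminated
        {"onReason": "*", "action": "EXIT"},  # any other failure: give up
    ],
}


def decide(status_reason: str, reason: str, attempt: int, strategy: dict = RETRY_STRATEGY) -> str:
    """Toy model: the first matching rule wins, and retries stop after `attempts` tries."""
    if attempt >= strategy["attempts"]:
        return "EXIT"
    for rule in strategy["evaluateOnExit"]:
        status_ok = fnmatchcase(status_reason, rule.get("onStatusReason", "*"))
        reason_ok = fnmatchcase(reason, rule.get("onReason", "*"))
        if status_ok and reason_ok:
            return rule["action"]
    return "RETRY"  # assumption: no matching rule falls back to retrying


print(decide("Host EC2 (instance i-0abc) terminated.", "", attempt=1))                 # RETRY
print(decide("Essential container in task exited", "OutOfMemoryError", attempt=1))     # EXIT
print(decide("Host EC2 (instance i-0abc) terminated.", "", attempt=3))                 # EXIT
```

This way a reclaimed Spot Instance costs only a retry, while a genuine bug in the job fails fast instead of burning GPU hours.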

```hcl
resource "aws_batch_compute_environment" "batch_compute_env" {
  compute_environment_name = "demo-compute-environment"
  type                     = "MANAGED"
  service_role             = aws_iam_role.batch_service_role.arn # Role associated with Batch

  compute_resources {
    type                = "EC2"
    allocation_strategy = "BEST_FIT_PROGRESSIVE"
    instance_type       = var.instance_type
    min_vcpus           = var.min_vcpus
    desired_vcpus       = var.desired_vcpus
    max_vcpus           = var.max_vcpus
    security_group_ids  = [aws_security_group.batch_sg.id]
    subnets             = data.aws_subnets.default.ids
    instance_role       = aws_iam_instance_profile.ecs_instance_role.arn # Instance profile of the launched EC2 instances
  }

  depends_on = [aws_iam_role_policy_attachment.batch_service_role] # To prevent a race condition during environment deletion
}
```

The Batch Compute Environment automatically assigns jobs to resources within a VPC. In this case, we used our default AWS VPC.

Check the code to see the Terraform part for creating the VPC, subnets, and security groups.

We'll use a g6.xlarge EC2 instance, which comes with an NVIDIA L4 GPU (~24 GB of VRAM) and 16 GB of system RAM.
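A back-of-the-envelope check of how much room the L4 leaves for the KV cache: vLLM reserves `gpu_memory_utilization` of the GPU memory and, after loading the weights (activations are ignored here), uses the rest for the KV cache. The KV cache stores one key and one value vector per layer and per KV head, so its size per token is 2 × layers × kv_heads × head_dim × bytes per value. All model figures below (36 layers, 2 KV heads, head_dim 128, ~8 GB of bf16 weights) are illustrative assumptions; read the real values from the model's `config.json`.

```python
def kv_cache_bytes_per_token(layers: int, kv_heads: int, head_dim: int, dtype_bytes: int = 2) -> int:
    """Key + value vectors for one token across all layers (dtype_bytes=2 for bf16/fp16)."""
    return 2 * layers * kv_heads * head_dim * dtype_bytes


def kv_cache_capacity_tokens(gpu_gb: float, utilization: float, weights_gb: float,
                             bytes_per_token: int) -> int:
    """Tokens that fit in the memory left for the KV cache (activations ignored)."""
    budget_bytes = (gpu_gb * utilization - weights_gb) * 1e9
    return max(0, int(budget_bytes // bytes_per_token))


# Illustrative assumptions, not verified model specs
per_token = kv_cache_bytes_per_token(layers=36, kv_heads=2, head_dim=128)
capacity = kv_cache_capacity_tokens(gpu_gb=24, utilization=0.9, weights_gb=8, bytes_per_token=per_token)
print(per_token)         # 36864 bytes, about 36 KiB per token
print(capacity // 4096)  # how many full 4,096-token sequences could fit at once
```

Under these assumptions, the KV cache budget is far larger than what `max_num_seqs = 2` sequences of 4,096 tokens need, which suggests that `max_num_seqs` could likely be raised to process more documents in parallel.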