With the growing adoption of Machine Learning across projects, maintaining code quality and reusability becomes paramount. Packaging common logic into Python packages significantly improves maintainability, facilitates collaboration, and ensures consistency across different ML experiments and deployments. This approach is key to building robust and scalable ML systems.

In our scenario, we’re training multiple models within Azure Machine Learning studio. These models share common data processing and utility functions, which we’ve consolidated into a Python package published on an Azure Artifacts feed within the same Azure DevOps project. A critical requirement is to build reusable Azure ML environments that include this private package, ensuring our model training and deployment processes are standardized and reproducible.

Azure Machine Learning environments can be defined using Conda files, which specify Python dependencies. This allows for reproducible environments by listing exact package versions. Note that you need to explicitly define the address of your Azure Artifacts feed Python repository.

    name: training-environment
    channels:
      - anaconda
      - defaults
    dependencies:
      - python>=3.10
      - pip>=22.2.2
      - pip:
          - --extra-index-url https://pkgs.dev.azure.com/[..]
          - azure-ai-ml==1.13.0
          - azure-identity==1.15.0
          - our-cool-reusable-package==1.0

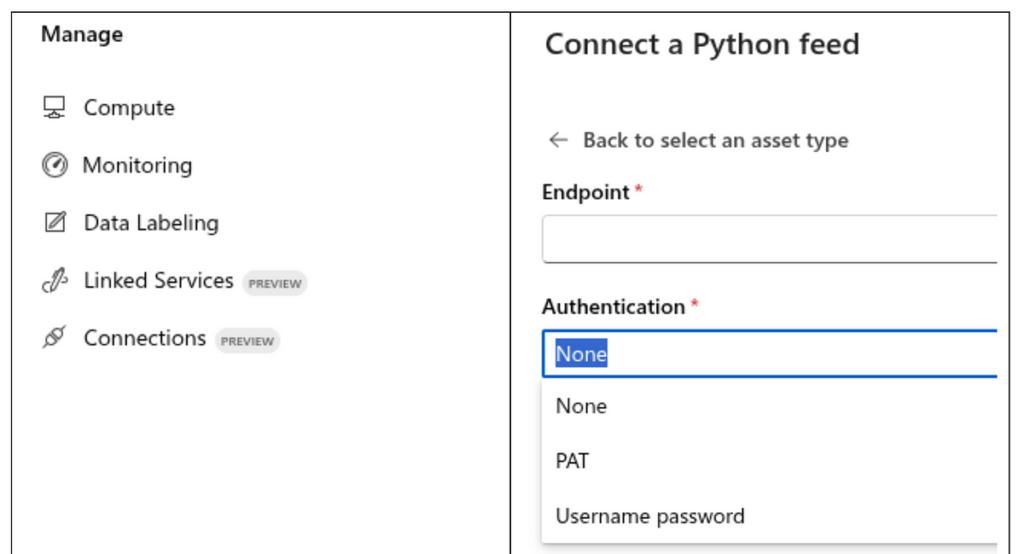

Initially, we explored using Azure ML Connections (a preview feature at the time of writing) to authorize access to the Azure Artifacts feed directly from within Azure ML. While this feature simplified connecting to external resources, it primarily supported authentication via Personal Access Tokens (PATs), which are less secure and harder to manage at scale than identity-based authentication methods.

To move beyond the limitations of PATs and adopt a more secure and sustainable authentication method, we aimed for an identity-based solution, similar to how Managed Identities are used elsewhere in Azure for service-to-service authentication.

Our first attempt involved assigning a Managed Identity to the Azure ML Compute Instance used for building environments. This identity was granted read access to the Azure DevOps feed. However, we discovered that while the identity worked correctly for authenticating package access at runtime within an environment, it did not automatically authenticate access during the build phase when Docker images were being built, even though the build process ran on the same compute. This approach proved unreliable and unsustainable for consistently building environments.

We also reached out to Microsoft Support to ask when, or whether, they will support Managed Identity for authentication in Connections, but we haven’t heard back from them.

The final, robust solution leverages an Azure DevOps Pipeline. Azure DevOps pipelines have built-in, secure mechanisms for authenticating to Azure Artifacts feeds within the same project, eliminating the need for PATs or complex identity configurations during pipeline execution. The pipeline’s role is to first securely download the required package version from the feed and then package it together with the environment definition files before sending everything to Azure ML for the environment build process.

    # Example pipeline, could be parameterized to support multiple environments with similar definition
    stages:
      - stage: DownloadPackages
        displayName: "Download packages from requirement"
        jobs:
          - job: DownloadPackages
            steps:
              - task: UsePythonVersion@0
                inputs:
                  versionSpec: "3.10" # In sync with AML environment
                  architecture: "x64"
                  addToPath: true
              - task: PipAuthenticate@1
                displayName: 'Pip Authenticate'
                inputs:
                  artifactFeeds: 'My DevOps Project/My Artifact Feed'
                  onlyAddExtraIndex: true # Force pip to fallback to external index
              - script: |
                  cd training_environment/docker-context
                  mkdir pip_packages
                  pip download --platform manylinux2014_x86_64 --only-binary=:all: --no-binary=:none: -r requirements.txt -d pip_packages
                  ls -lah pip_packages
                displayName: "Download environment dependencies"
              - script: |
                  python -m pip install -r requirements.txt
                displayName: "Install dependencies for publishing task to AML"
              - task: AzureCLI@2
                displayName: 'Create AML environment with Python SDK'
                continueOnError: false
                inputs:
                  azureSubscription: "AML Service connection"
                  scriptType: bash
                  workingDirectory: $(System.DefaultWorkingDirectory)/code
                  scriptLocation: inlineScript
                  inlineScript: |
                    python my_env_building_script.py

Note: When downloading the package within the pipeline, make sure to select a platform version that is compatible with the target platform and Python version specified for the Azure ML environment.

For defining the Azure ML environment itself, especially when including local files like a downloaded private package, using a Docker context is the most suitable approach. The Docker context includes the Dockerfile and all necessary files (like requirements.txt and the downloaded package) that are sent to Azure ML for building the custom Docker image that forms the environment.

Folder structure for our environment definition:

    training_environment/
    ├── docker-context/
    │   ├── Dockerfile
    │   ├── requirements.txt
    │   └── pip_packages/          # Downloaded by DevOps pipeline
    └── training-environment.yml   # AML environment definition pointing to Docker-context

Dockerfile:

    FROM mcr.microsoft.com/azureml/openmpi4.1.0-ubuntu22.04
    COPY pip_packages /pip_packages
    COPY requirements.txt requirements.txt
    RUN pip install --no-index --find-links /pip_packages -r requirements.txt
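Because the image build installs with `--no-index`, any requirement that has no file in `pip_packages` only fails once the build is running in Azure ML. A pre-flight check in the pipeline can surface that sooner. The sketch below is a minimal version of that idea. The helper names are hypothetical, it only understands simple requirement lines, and it checks the top-level requirements rather than their transitive dependencies.

```python
import re


def normalize(name: str) -> str:
    """Normalize a project name so that '-', '_' and '.' compare as equal."""
    return re.sub(r"[-_.]+", "-", name).lower()


def required_names(requirements_text: str) -> set:
    """Extract project names from simple requirement lines (name, name==1.0, name>=2)."""
    names = set()
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line or line.startswith("-"):  # skip blanks, comments and pip options
            continue
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match:
            names.add(normalize(match.group(0)))
    return names


def downloaded_names(filenames) -> set:
    """Project names of the wheel files; the name is the first dash-separated field."""
    return {normalize(f.split("-", 1)[0]) for f in filenames if f.endswith(".whl")}


def missing_packages(requirements_text: str, filenames) -> list:
    """Return the required project names that have no wheel among the given filenames."""
    return sorted(required_names(requirements_text) - downloaded_names(filenames))
```

For example, passing the text of requirements.txt and the names returned by `os.listdir("pip_packages")` yields the list of packages that still need to be downloaded; an empty list means every top-level requirement has a wheel.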

AML environment definition (training-environment.yml):

    $schema: https://azuremlschemas.azureedge.net/latest/environment.schema.json
    name: training_environment
    description: Dependencies for training
    build:
      path: docker-context

Simplified Python script for environment build (my_env_building_script.py):

    from azure.ai.ml import MLClient, load_environment
    from azure.identity import DefaultAzureCredential

    # Initialize the connection to the Azure ML workspace (the three strings are placeholders)
    client = MLClient(DefaultAzureCredential(), "<subscription-id>", "<resource-group>", "<workspace-name>")
    env = load_environment("training_environment/training-environment.yml")
    client.environments.create_or_update(env)
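To reuse the same script for several environments, the workspace details and the definition file can be passed in as arguments. The following is a sketch of one possible shape, not the script we actually run. The argument names are assumptions, and the Azure SDK imports are deferred so that argument parsing can be exercised without the SDK installed.

```python
import argparse


def parse_args(argv=None):
    """Parse the command-line options; all option names here are illustrative."""
    parser = argparse.ArgumentParser(
        description="Build an Azure ML environment from a YAML definition."
    )
    parser.add_argument("--env-file", required=True, help="Path to the AML environment YAML file")
    parser.add_argument("--subscription-id", required=True)
    parser.add_argument("--resource-group", required=True)
    parser.add_argument("--workspace", required=True)
    return parser.parse_args(argv)


def build_environment(args):
    """Create or update the environment described by args.env_file."""
    # Imported lazily so that parse_args can be used without the Azure SDK present.
    from azure.ai.ml import MLClient, load_environment
    from azure.identity import DefaultAzureCredential

    client = MLClient(
        DefaultAzureCredential(),
        subscription_id=args.subscription_id,
        resource_group_name=args.resource_group,
        workspace_name=args.workspace,
    )
    env = load_environment(args.env_file)
    return client.environments.create_or_update(env)


def main(argv=None):
    """Entry point: parse the arguments, then build the environment."""
    return build_environment(parse_args(argv))
```

With a call to `main()` at the end of the file, the pipeline's inline script could invoke it once per environment, passing a different `--env-file` each time.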

Standardizing the environment definitions and parameterizing the build script and pipeline can help you create a robust MLOps pipeline for continuously building and deploying your environments with private packages.