When working on a classification problem, one of the most important steps after training the model is model evaluation. Choosing the right evaluation metric can significantly affect how you interpret your model's performance and how you improve it. At the heart of most evaluation techniques lies the confusion matrix.

In this blog, we'll explore:

- What is a confusion matrix?

- Key metrics derived from it: Precision, Recall, F1 Score, Accuracy

- Type I and Type II Errors

- When to focus on which metric

- ROC Curve and AUC Score

- Example Python code

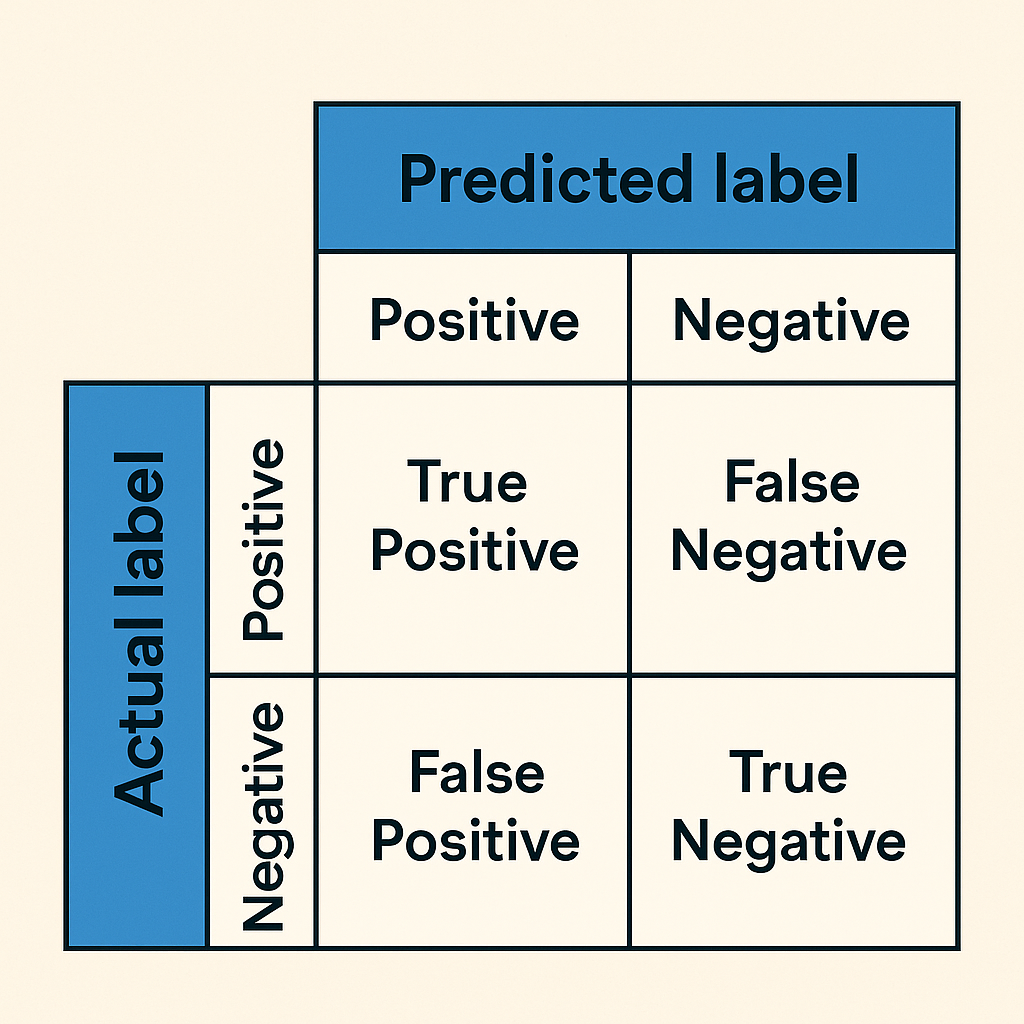

A confusion matrix is a table that summarizes the performance of a classification model on a set of test data for which the actual values are known.

For a binary classification problem:

| | Predicted Positive | Predicted Negative |
|---|---|---|
| Actual Positive | True Positive (TP) | False Negative (FN) |
| Actual Negative | False Positive (FP) | True Negative (TN) |

- True Positive (TP): The model predicted a positive outcome, and the actual label was also positive. (Example: The model correctly identifies a patient with a disease.)

- True Negative (TN): The model predicted a negative outcome, and the actual label was also negative. (Example: The model correctly identifies a healthy patient.)

- False Positive (FP): The model predicted a positive outcome, but the actual label was negative. (Example: The model wrongly says a healthy patient has a disease.)

- False Negative (FN): The model predicted a negative outcome, but the actual label was positive. (Example: The model wrongly says a sick patient is healthy.)
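To make the four cells concrete, here is a minimal sketch that counts them by hand. The helper `confusion_counts` and the sample labels are hypothetical, made up for illustration; it assumes binary labels where 1 is the positive class.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP, FN for binary labels (illustrative helper)."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # predicted positive, actually positive
            else:
                fp += 1  # predicted positive, actually negative
        else:
            if actual == positive:
                fn += 1  # predicted negative, actually positive
            else:
                tn += 1  # predicted negative, actually negative
    return tp, tn, fp, fn


# Hypothetical labels: 1 = has the disease, 0 = healthy
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]

tp, tn, fp, fn = confusion_counts(y_true, y_pred)
print(f"TP={tp} TN={tn} FP={fp} FN={fn}")  # TP=3 TN=3 FP=1 FN=1
```

Each prediction lands in exactly one cell, so the four counts always sum to the number of samples.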

From this matrix, several important evaluation metrics can be calculated.

- Definition: Accuracy = (TP + TN) / (TP + FP + FN + TN)

- Interpretation: How often the classifier is correct.

Drawback: Accuracy can be misleading if the dataset is imbalanced.
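A small sketch of why accuracy misleads on imbalanced data. The 95/5 class split and the always-negative "model" are assumptions chosen purely for illustration.

```python
# Hypothetical imbalanced dataset: 95 negatives, 5 positives
y_true = [0] * 95 + [1] * 5

# A trivial "model" that always predicts the negative class
y_pred = [0] * 100

correct = sum(a == p for a, p in zip(y_true, y_pred))
accuracy = correct / len(y_true)

# Recall: share of actual positives the model found
tp = sum(a == 1 and p == 1 for a, p in zip(y_true, y_pred))
fn = sum(a == 1 and p == 0 for a, p in zip(y_true, y_pred))
recall = tp / (tp + fn)

print(f"Accuracy: {accuracy:.2f}")  # Accuracy: 0.95
print(f"Recall:   {recall:.2f}")    # Recall:   0.00
```

The classifier looks strong at 95% accuracy, yet it never finds a single positive case.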

- Definition: Precision = TP / (TP + FP)

- Interpretation: When the model predicts the positive class, how often is it correct?

Real-world example: In spam detection, high precision means that emails classified as spam are indeed spam. Low precision would cause many important, legitimate emails to be wrongly filtered as spam (false positives).

High precision means few false positives among the predicted positives.

- Definition: Recall = TP / (TP + FN)

- Interpretation: Out of all actual positives, how many were correctly identified?

Real-world example: In cancer detection, high recall is crucial because we want to catch as many actual cancer cases as possible. Missing a positive case (false negative) can be dangerous.

High recall means a low false negative rate.

- Definition: F1 = 2 * (Precision * Recall) / (Precision + Recall)

- Interpretation: Harmonic mean of precision and recall.

F1 balances precision and recall, which is helpful when you have imbalanced classes.
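The three formulas above can be sketched directly from the confusion-matrix counts. The helper name `precision_recall_f1` and the example counts are hypothetical, and returning 0.0 when a denominator is zero is a convention assumed for this sketch, not a universal rule.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall and F1 from raw counts (illustrative helper)."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0  # assumed convention: 0.0 if undefined
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * (precision * recall) / (precision + recall)
    return precision, recall, f1


# Hypothetical counts: 8 true positives, 2 false positives, 8 false negatives
p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=8)
arithmetic_mean = (p + r) / 2

print(f"Precision: {p:.2f}")                      # Precision: 0.80
print(f"Recall:    {r:.2f}")                      # Recall:    0.50
print(f"F1:        {f1:.3f}")                     # F1:        0.615
print(f"Arithmetic mean: {arithmetic_mean:.2f}")  # Arithmetic mean: 0.65
```

Because F1 is a harmonic mean, it sits closer to the weaker of the two values than a plain average would, so a model cannot score well on F1 by excelling at only one of them.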

- Type I Error (False Positive): Incorrectly identifying a negative instance as positive.

  - Example: Flagging a legitimate email as spam.

- Type II Error (False Negative): Incorrectly identifying a positive instance as negative.

  - Example: Failing to detect a fraudulent transaction.

Understanding the cost of these errors is crucial. In some cases, such as fraud detection or disease screening, Type II errors are far more costly than Type I errors.
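One way to reason about unequal error costs is to weight each error type and compare totals. Everything in this sketch is an assumption for illustration: the helper `total_error_cost`, the two models' error counts, and the 50-to-1 cost ratio between a missed fraud and a false alarm.

```python
def total_error_cost(fp, fn, cost_fp, cost_fn):
    """Weighted cost of Type I (FP) and Type II (FN) errors (illustrative helper)."""
    return fp * cost_fp + fn * cost_fn


# Hypothetical costs: a missed fraud (FN) costs 50 times a false alarm (FP)
COST_FP = 1
COST_FN = 50

# Hypothetical error counts for two models on the same test set
model_a = {"fp": 40, "fn": 5}   # more false alarms, fewer misses
model_b = {"fp": 10, "fn": 15}  # fewer errors overall, but more misses

cost_a = total_error_cost(model_a["fp"], model_a["fn"], COST_FP, COST_FN)
cost_b = total_error_cost(model_b["fp"], model_b["fn"], COST_FP, COST_FN)

print(f"Model A: {model_a['fp'] + model_a['fn']} errors, cost {cost_a}")  # Model A: 45 errors, cost 290
print(f"Model B: {model_b['fp'] + model_b['fn']} errors, cost {cost_b}")  # Model B: 25 errors, cost 760
```

Under these assumed costs, the model with more total errors is the cheaper one, because it makes fewer of the expensive Type II errors.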

The Receiver Operating Characteristic (ROC) curve plots the True Positive Rate (also known as Recall) against the False Positive Rate (1 - Specificity) at various threshold settings.

- The closer the ROC curve is to the top-left corner, the better the model.

- The Area Under the Curve (AUC) quantifies the model's overall ability to discriminate between the positive and negative classes.

Interpretation:

- AUC = 1: Perfect model

- AUC = 0.5: No discrimination (random guess)
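To build intuition for these quantities, the sketch below computes one ROC point at a single threshold, then computes AUC through its ranking interpretation: the probability that a randomly chosen positive receives a higher score than a randomly chosen negative, with ties counted as half. The helpers `tpr_fpr` and `auc_by_ranking` and the four sample scores are hypothetical; in practice a library routine would normally do this.

```python
def tpr_fpr(y_true, scores, threshold):
    """Return (TPR, FPR) when scores >= threshold are predicted positive."""
    preds = [1 if s >= threshold else 0 for s in scores]
    tp = sum(y == 1 and p == 1 for y, p in zip(y_true, preds))
    fn = sum(y == 1 and p == 0 for y, p in zip(y_true, preds))
    fp = sum(y == 0 and p == 1 for y, p in zip(y_true, preds))
    tn = sum(y == 0 and p == 0 for y, p in zip(y_true, preds))
    return tp / (tp + fn), fp / (fp + tn)


def auc_by_ranking(y_true, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half
    return wins / (len(pos) * len(neg))


# Hypothetical labels and predicted probabilities for the positive class
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]

tpr, fpr = tpr_fpr(y_true, scores, threshold=0.5)
auc = auc_by_ranking(y_true, scores)

print(f"TPR={tpr:.2f}, FPR={fpr:.2f} at threshold 0.5")  # TPR=0.50, FPR=0.00 at threshold 0.5
print(f"AUC={auc:.2f}")                                  # AUC=0.75
```

Sweeping the threshold from high to low and plotting each (FPR, TPR) pair traces out the ROC curve. The pairwise loop is quadratic in the number of samples, so it is only suitable for small illustrations like this one.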

Let's train and evaluate a classification model using Scikit-learn.

```python
import matplotlib.pyplot as plt
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import (
    accuracy_score,
    confusion_matrix,
    f1_score,
    precision_score,
    recall_score,
    roc_auc_score,
    roc_curve,
)
from sklearn.model_selection import train_test_split

# Generate a dummy binary classification dataset
X, y = make_classification(n_samples=1000, n_features=20, n_classes=2, random_state=42)

# Split the dataset into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# Train a model (random_state fixed so results are reproducible)
clf = RandomForestClassifier(random_state=42)
clf.fit(X_train, y_train)

# Predict class labels on the test set
y_pred = clf.predict(X_test)

# Metrics
cm = confusion_matrix(y_test, y_pred)
print("Confusion Matrix:\n", cm)
print("Accuracy:", accuracy_score(y_test, y_pred))
print("Precision:", precision_score(y_test, y_pred))
print("Recall:", recall_score(y_test, y_pred))
print("F1 Score:", f1_score(y_test, y_pred))

# ROC curve
y_probs = clf.predict_proba(X_test)[:, 1]  # Probabilities for the positive class
fpr, tpr, thresholds = roc_curve(y_test, y_probs)
roc_auc = roc_auc_score(y_test, y_probs)

plt.figure()
plt.plot(fpr, tpr, color='darkorange', lw=2, label=f'ROC curve (area = {roc_auc:.2f})')
plt.plot([0, 1], [0, 1], color='navy', lw=2, linestyle='--')  # Random-guess baseline
plt.xlim([0.0, 1.0])
plt.ylim([0.0, 1.05])
plt.xlabel('False Positive Rate')
plt.ylabel('True Positive Rate')
plt.title('Receiver Operating Characteristic')
plt.legend(loc="lower right")
plt.show()
```

The confusion matrix is a powerful tool that shows not only how often your classifier is correct, but also which kinds of errors it makes.

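Choosing the right metric depends heavily on the use case: favor precision when false positives are costly (as in spam filtering), recall when false negatives are costly (as in cancer detection), and F1 when you need a balance between the two on imbalanced classes.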

Next time you work on a classification problem, go beyond accuracy. Look into confusion matrices, think about your use case, and choose the evaluation metric accordingly!

Happy Modeling! 🚀