Language models like GPT-4 and Claude are impressive, but they still have a major weakness: they can hallucinate, confidently making things up. If you're using them in real-world applications like customer support or document search, you probably want answers grounded in facts.

This is where RAG comes in.

In this guide, you'll learn how to build your own RAG system using LangChain, FAISS, and the Groq API. By the end, you'll have a working system that answers questions based on your own documents instead of just guessing.

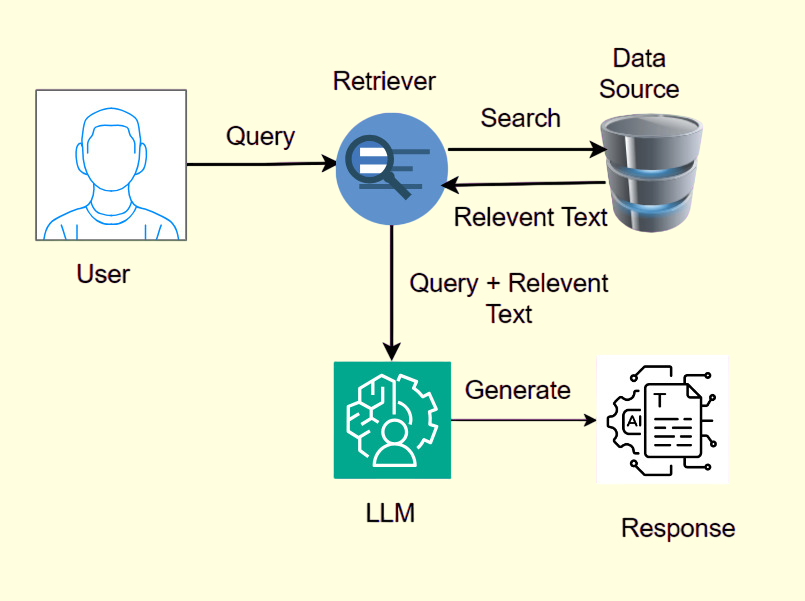

RAG stands for Retrieval-Augmented Generation. It improves language model responses by first retrieving relevant information from a document database and then passing that information to the model, so it can generate accurate, grounded answers.

Instead of asking a model to answer from memory, you help it “look things up” before it speaks. This has several benefits:

- It reduces hallucinations, or made-up answers.

- It lets you use your own data without retraining the model.

- It works well for question answering, document search, and chatbots backed by real data.
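To make the retrieve-then-generate idea concrete, here is a toy sketch in plain Python. It assumes a tiny in-memory list of documents and uses simple word-overlap scoring as a stand-in for real embeddings. `retrieve` and `build_prompt` are hypothetical helper names, and the final call to the language model is left out.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the query (toy stand-in for vector search)."""
    query_words = tokenize(query)
    ranked = sorted(documents, key=lambda doc: len(query_words & tokenize(doc)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, context_docs: list[str]) -> str:
    """Augment the question with the retrieved context before it is sent to the model."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )

documents = [
    "Our support line is open Monday to Friday, 9am to 5pm.",
    "Refunds are processed within 14 days of receiving the returned item.",
    "The office is closed on public holidays.",
]

query = "How long do refunds take?"
top_docs = retrieve(query, documents, k=1)
print(build_prompt(query, top_docs))
```

The real pipeline below follows the same two steps. It swaps word overlap for embedding similarity in FAISS and sends the augmented prompt to a Llama 3 model.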

Here’s what we’ll need to build the RAG system:

- LangChain: helps you organize the workflow.

- FAISS: a fast way to search for similar chunks of text.

- Groq API: gives access to powerful language models like Llama 3.

- OpenAI Embeddings: convert your text into searchable vectors.

- A simple text file: this will be your knowledge base.

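Make sure you have these packages installed (recent LangChain releases split integrations into `langchain-community` and `langchain-openai`, and the web interface at the end uses Streamlit):

```bash
pip install langchain langchain-community langchain-openai langchain-text-splitters openai faiss-cpu tiktoken requests streamlit
```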

First, load your document and split it into chunks so it’s easier to search.

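```python
from langchain_community.document_loaders import TextLoader
from langchain_text_splitters import RecursiveCharacterTextSplitter

# Load the raw text file as a list of LangChain Document objects
loader = TextLoader("your_file.txt")
docs = loader.load()

# Split into chunks of up to 500 characters, with 50 characters of overlap between neighbours
splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=50)
chunks = splitter.split_documents(docs)
```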
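To see what `chunk_size` and `chunk_overlap` mean, here is a simplified fixed-width character splitter. It is not how `RecursiveCharacterTextSplitter` works internally, because that class tries to break on paragraph, line, and word boundaries first. The sketch only illustrates how consecutive chunks share `chunk_overlap` characters, so a sentence cut at a boundary still appears whole in at least one chunk. `split_with_overlap` is a hypothetical helper.

```python
def split_with_overlap(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Cut text into windows of chunk_size characters, each starting chunk_size - chunk_overlap after the previous one."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    pieces = []
    for start in range(0, len(text), step):
        pieces.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return pieces

pieces = split_with_overlap("abcdefghij", chunk_size=4, chunk_overlap=1)
print(pieces)  # ['abcd', 'defg', 'ghij']
```

In this simplified model, the article's settings (`chunk_size=500`, `chunk_overlap=50`) would start each chunk 450 characters after the previous one.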

We convert the chunks into vectors using OpenAI’s embeddings, then store them in FAISS. `OpenAIEmbeddings` reads your key from the `OPENAI_API_KEY` environment variable.

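```python
from langchain_community.vectorstores import FAISS
from langchain_openai import OpenAIEmbeddings

# Uses the OPENAI_API_KEY environment variable for authentication
embeddings = OpenAIEmbeddings()

# Embed every chunk and build an in-memory FAISS index over the vectors
vectorstore = FAISS.from_documents(chunks, embeddings)
```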

This lets us search for the most relevant pieces of text later.
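Conceptually, an exact (flat) FAISS index answers a query by comparing the query vector against every stored vector. To our understanding, LangChain's FAISS wrapper uses such an index with Euclidean (L2) distance by default. The sketch below does the same by brute force on made-up 3-dimensional vectors. Real OpenAI embeddings have far more dimensions, and FAISS performs this search much faster. `nearest` is a hypothetical helper.

```python
import math

def l2_distance(a: list[float], b: list[float]) -> float:
    """Euclidean distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def nearest(query_vec: list[float], stored: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the keys of the k stored vectors closest to query_vec (exhaustive search)."""
    ranked = sorted(stored, key=lambda key: l2_distance(query_vec, stored[key]))
    return ranked[:k]

# Toy "embeddings" for three chunks; the numbers are invented for illustration
stored = {
    "chunk about refunds": [0.9, 0.1, 0.0],
    "chunk about shipping": [0.7, 0.3, 0.1],
    "chunk about holidays": [0.0, 0.2, 0.9],
}

print(nearest([0.8, 0.2, 0.0], stored, k=2))  # ['chunk about refunds', 'chunk about shipping']
```

With the real index, the equivalent lookup is `vectorstore.similarity_search("your question", k=2)`, which embeds the question and returns the closest `Document` chunks.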

Let’s define a simple wrapper for the Groq API (Llama 3):

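```python
import requests

def call_groq_llm(prompt, api_key):
    """Send a single-turn prompt to Groq's OpenAI-compatible chat endpoint and return the reply text."""
    url = "https://api.groq.com/openai/v1/chat/completions"
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    payload = {
        # Check Groq's current model list; older model IDs like this one can be retired
        "model": "llama3-70b-8192",
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,  # low temperature makes answers more deterministic
    }
    response = requests.post(url, headers=headers, json=payload, timeout=60)
    response.raise_for_status()  # fail loudly on HTTP errors instead of on a missing "choices" key
    return response.json()["choices"][0]["message"]["content"]
```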
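The wrapper’s last line assumes Groq returns an OpenAI-compatible chat-completion body. The sketch below parses a hand-written sample of that shape to show which keys `["choices"][0]["message"]["content"]` walks through. The field values are invented. `extract_reply` is a hypothetical helper that also raises a clearer error when `choices` is missing, for example when the body contains an `error` object instead.

```python
import json

def extract_reply(body: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style chat completion response."""
    choices = body.get("choices")
    if not choices:
        # Error bodies carry an "error" object instead of "choices"
        raise ValueError(f"Unexpected response: {body.get('error', body)}")
    return choices[0]["message"]["content"]

# Hand-written sample in the chat-completions shape; the values are invented
sample = json.loads("""
{
  "id": "example-id",
  "object": "chat.completion",
  "model": "llama3-70b-8192",
  "choices": [
    {
      "index": 0,
      "message": {"role": "assistant", "content": "RAG retrieves documents first."},
      "finish_reason": "stop"
    }
  ]
}
""")

print(extract_reply(sample))  # RAG retrieves documents first.
```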

Now we bring everything together into a simple question-answering system.

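```python
import os
from typing import Any, List, Optional

from langchain.chains import RetrievalQA
from langchain_core.language_models.llms import LLM

class GroqLLM(LLM):
    """Minimal LangChain LLM wrapper around call_groq_llm."""

    # LLM is a pydantic model, so settings are declared as fields rather than set in __init__
    api_key: str

    def _call(self, prompt: str, stop: Optional[List[str]] = None, run_manager: Any = None, **kwargs: Any) -> str:
        # Stop sequences are ignored in this minimal wrapper
        return call_groq_llm(prompt, self.api_key)

    @property
    def _llm_type(self) -> str:
        return "groq_llm"

# Read your Groq API key from the environment instead of hard-coding it
groq_api_key = os.environ["GROQ_API_KEY"]
llm = GroqLLM(api_key=groq_api_key)

# Expose the FAISS index as a retriever that returns the most relevant chunks
retriever = vectorstore.as_retriever()

# The default "stuff" chain type inserts all retrieved chunks into one prompt
qa = RetrievalQA.from_chain_type(llm=llm, retriever=retriever)

query = "What is retrieval-augmented generation?"
result = qa.invoke({"query": query})["result"]
print("Answer:", result)
```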

You now have a working RAG pipeline that can answer questions using your own data.

You can build a basic web interface with Streamlit:

```python
import streamlit as st

# Assumes the `qa` chain built above is defined earlier in this same app.py
st.title("Ask My Documents")

query = st.text_input("Enter your question:")

if query:
    result = qa.invoke({"query": query})["result"]
    st.write(result)
```

Run it with:

```bash
streamlit run app.py
```