by Grant Nitta, Nicholas Barsi-Rhyne, and Ada Zhang

This project explores a simplified method for image generation, inspired by the denoising process used in diffusion models. By corrupting clean training images with noise and training a neural network to reverse that corruption, we test how well a model can learn to "undo" noise and generate coherent images from scratch.

We chose the CIFAR-100 dataset as our training data because it provides a rich set of labeled images in 100 different classes, with 600 images per class. The images include vehicles, birds, plants, people, and more, each with an RGB shape of (3, 32, 32).

Our preprocessing pipeline included the following steps:

- Conversion to tensor: We converted images from PIL format (with pixel values ranging from 0 to 255) to PyTorch tensors with values in [0, 1].

- Normalization: We normalized each RGB channel to the range [-1, 1] to stabilize and speed up training.

- Batch loading: We used a PyTorch DataLoader to efficiently load batches of training data.

- Noise corruption: To simulate diffusion, we corrupted the images by mixing them with random noise. This was done using the following function:

```python
import torch


def corrupt(x, amount):
    """
    Corrupt input images by mixing them with random noise.

    Parameters
    ----------
    x : torch.Tensor
        Input images of shape (batch_size, channels, height, width).
    amount : torch.Tensor
        Tensor of shape (batch_size,) indicating the corruption level
        for each image in the batch. Values should be in [0, 1].

    Returns
    -------
    torch.Tensor
        Corrupted images of the same shape as `x`, where each image is
        interpolated between the original and random noise based on `amount`.
    """
    noise = torch.rand_like(x)  # uniform noise in [0, 1)
    amount = amount.view(-1, 1, 1, 1)  # reshape so it broadcasts over C, H, W
    return x * (1 - amount) + noise * amount
```
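The preprocessing and corruption steps above can be sketched end to end in plain PyTorch. This is a minimal sketch under stated assumptions: random tensors stand in for CIFAR-100 images (the real pipeline would load them through torchvision), the normalization `(x - 0.5) / 0.5` is written out by hand to show what a per-channel mean and standard deviation of 0.5 does, and `batch_size=64` is a hypothetical choice. The `corrupt` function is repeated so the block runs on its own.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def corrupt(x, amount):
    """Linearly interpolate each image toward uniform noise by `amount` in [0, 1]."""
    noise = torch.rand_like(x)
    amount = amount.view(-1, 1, 1, 1)  # one corruption level per image
    return x * (1 - amount) + noise * amount


# Assumption: random data standing in for images already converted to [0, 1].
images01 = torch.rand(256, 3, 32, 32)

# Normalize each channel from [0, 1] to [-1, 1] (mean 0.5, std 0.5 per channel).
images = (images01 - 0.5) / 0.5

# Batch loading; batch_size=64 is a hypothetical choice.
loader = DataLoader(TensorDataset(images), batch_size=64, shuffle=True)

(x,) = next(iter(loader))  # TensorDataset yields one-element tuples
amount = torch.linspace(0, 1, x.shape[0])  # corruption level ramps from 0 to 1
noisy = corrupt(x, amount)
```

Because `amount` starts at 0, the first image in `noisy` is identical to the clean input, and later images in the batch are increasingly dominated by noise.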

For this project, we used a U-Net architecture implemented via the UNet2DModel class from Hugging Face's diffusers library:

```python
import torch
from diffusers import UNet2DModel

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

net = UNet2DModel(
    sample_size=32,  # Input image size
    in_channels=3,  # RGB images
    out_channels=3,  # Reconstruct RGB images
    layers_per_block=2,  # 2 residual blocks per stage
    block_out_channels=(64, 128, 128),  # Output channels of each stage
    down_block_types=("DownBlock2D", "AttnDownBlock2D", "AttnDownBlock2D"),
    up_block_types=("AttnUpBlock2D", "AttnUpBlock2D", "UpBlock2D"),
)

net.to(device)
```
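To make this configuration concrete, the sketch below traces the number of channels and the spatial size at each of the three encoder stages. It assumes the usual diffusers behavior in which every down block except the last one halves the resolution; `stage_resolutions` is a hypothetical helper written for illustration, not part of diffusers.

```python
def stage_resolutions(sample_size, block_out_channels):
    """Return (channels, height/width) for each encoder stage.

    Assumes every down block except the final one ends with a 2x downsample,
    so the spatial size halves between consecutive stages.
    """
    stages = []
    size = sample_size
    for i, channels in enumerate(block_out_channels):
        stages.append((channels, size))
        is_final_block = i == len(block_out_channels) - 1
        if not is_final_block:
            size //= 2  # downsample before the next stage
    return stages


for channels, size in stage_resolutions(32, (64, 128, 128)):
    print(f"{channels} channels at {size}x{size}")
# 64 channels at 32x32
# 128 channels at 16x16
# 128 channels at 8x8
```

Under that assumption, the two `AttnDownBlock2D` stages apply self-attention only at the 16x16 and 8x8 resolutions, where it is far cheaper than at the full 32x32 input.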

U-Net is a well-known convolutional architecture originally developed for biomedical image segmentation, but it is also highly effective for image generation tasks. In our case, the model takes in a noisy image and learns to reconstruct the clean version. This fits a simplified diffusion setup, where images are progressively noised and then denoised.

We used mean squared error (MSE) loss with the Adam optimizer to minimize the pixel-wise difference between the predicted and original images. This teaches the model to "undo" noise and improve image quality over the course of training.
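A minimal training-loop sketch for the MSE-plus-Adam setup described above. To keep it self-contained, a tiny two-layer convolutional network (`TinyDenoiser`, hypothetical) stands in for the U-Net, a single random batch in [-1, 1] stands in for CIFAR-100 data, and the learning rate of 1e-3 and the 50 steps are assumed values. With `UNet2DModel`, whose forward pass also takes a timestep argument, the prediction line would typically read `net(noisy_x, 0).sample` instead.

```python
import torch
from torch import nn


def corrupt(x, amount):
    """Mix each image with uniform noise according to its `amount` in [0, 1]."""
    noise = torch.rand_like(x)
    amount = amount.view(-1, 1, 1, 1)
    return x * (1 - amount) + noise * amount


class TinyDenoiser(nn.Module):
    """Hypothetical stand-in for the U-Net: maps a noisy image to a clean estimate."""

    def __init__(self, channels=3, hidden=32):
        super().__init__()
        self.net = nn.Sequential(
            nn.Conv2d(channels, hidden, 3, padding=1),
            nn.ReLU(),
            nn.Conv2d(hidden, channels, 3, padding=1),
        )

    def forward(self, x):
        return self.net(x)


torch.manual_seed(0)
net = TinyDenoiser()
opt = torch.optim.Adam(net.parameters(), lr=1e-3)  # assumed learning rate
loss_fn = nn.MSELoss()

clean = torch.rand(16, 3, 32, 32) * 2 - 1  # stand-in batch, reused every step
losses = []
for step in range(50):
    amount = torch.rand(clean.shape[0])  # random corruption level per image
    noisy_x = corrupt(clean, amount)
    pred = net(noisy_x)  # predict the clean image directly
    loss = loss_fn(pred, clean)  # pixel-wise MSE against the original
    opt.zero_grad()
    loss.backward()
    opt.step()
    losses.append(loss.item())
```

In a real run, the inner loop would iterate over the DataLoader batches for several epochs, and the recorded losses are what a loss-over-epochs plot would summarize.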

The methodology consists of three main stages:

- Noise Simulation: Each clean CIFAR-100 image is corrupted with randomized uniform noise. This simulates the forward process in diffusion models.

- Denoising Model Training: A U-Net is trained to reverse this noise, learning to map noisy inputs back to the original clean images.

- Iterative Image Generation: To generate images from scratch, we start with random noise and iteratively refine it using the trained model, gradually moving from noise to a coherent image.
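The iterative generation stage can be sketched as follows. This assumes one simple schedule, not necessarily the one used in our notebook: at step `i` of `n_steps`, the current image is blended with the model's clean-image prediction using a mix factor of `1 / (n_steps - i)`, so each step leans more heavily on the prediction and the final step returns the prediction itself. `generate` and `n_steps=5` are hypothetical, and any callable that maps an image batch to a same-shaped prediction (for example the trained U-Net wrapped as `lambda x: net(x, 0).sample`) can be passed in as `model`.

```python
import torch


@torch.no_grad()
def generate(model, n_samples=8, n_steps=5, shape=(3, 32, 32)):
    """Start from uniform noise and repeatedly move toward the model's prediction."""
    x = torch.rand(n_samples, *shape)  # uniform noise, matching the corruption step
    for i in range(n_steps):
        pred = model(x)  # model's estimate of the clean image
        mix_factor = 1 / (n_steps - i)  # 1/5, 1/4, 1/3, 1/2, 1 for n_steps=5
        x = x * (1 - mix_factor) + pred * mix_factor
    return x


# Usage with a trivial stand-in "model" that always predicts a gray (all-zero) image.
samples = generate(lambda x: torch.zeros_like(x))
print(samples.shape)  # torch.Size([8, 3, 32, 32])
```

Taking several small steps rather than one large jump lets the model correct its earlier predictions as the image becomes less noisy.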

The entire project was implemented in PyTorch, using:

- diffusers for the U-Net architecture

- torchvision for CIFAR-100 data and preprocessing

- matplotlib and numpy for analysis and visualization

- Google Cloud Platform for GPU training

All code is fully reproducible and runs in a single notebook.

We trained on many different datasets, including CIFAR-10, PixelGen16x16, the Oxford 102 Flower Dataset, and MNIST.

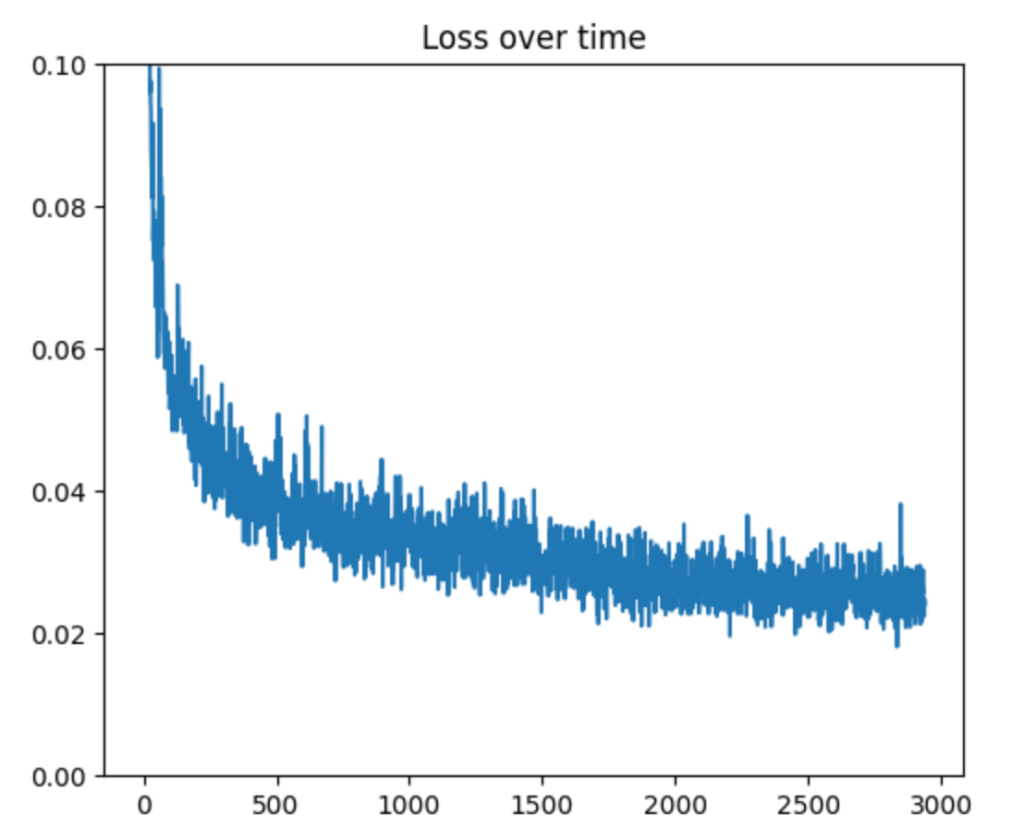

We found that the CIFAR-100 dataset produced the largest MSE loss over epochs:

These were the generated images:

We would like to note that training a model to generate images is very computationally expensive and requires a lot of training data. Therefore, we were not able to train as many models as we would have liked.

We found that training generative models is computationally intensive, especially without large-scale hardware. Due to time and resource constraints, we were not able to run long training cycles or extensive hyperparameter tuning, but the results still show promising signs that even a simplified denoising setup can generate coherent images.