Discover Absolute Zero, a groundbreaking AI paradigm where models learn complex reasoning through reinforced self-play without any external data. Learn how AZR achieves SOTA results, what it implies for AI scalability, and why it may mark the dawn of the “era of experience.”

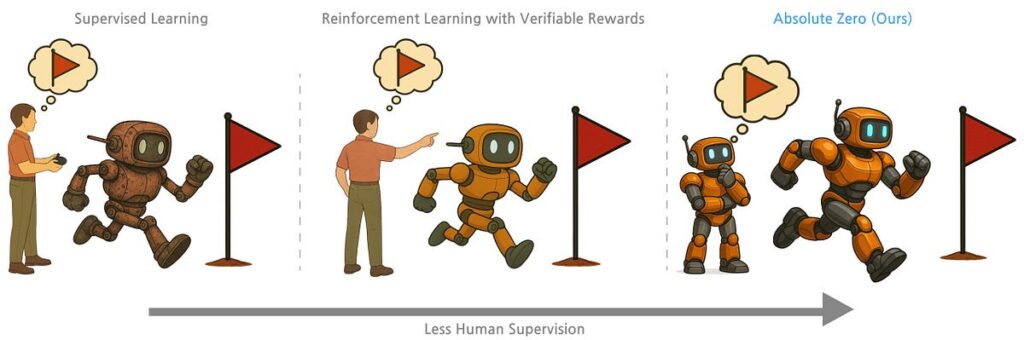

Large Language Models (LLMs) have become astonishingly adept at tasks requiring complex reasoning, from writing code to solving mathematical problems. We’ve seen rapid progress, largely fueled by techniques like Supervised Fine-Tuning (SFT) and, more recently, Reinforcement Learning with Verifiable Rewards (RLVR). SFT involves training models on vast datasets of human-generated examples (like question-answer pairs with step-by-step reasoning). RLVR goes a step further, learning from outcome-based rewards (e.g., did the code run correctly? Was the math answer right?), which reduces the need for perfectly labeled reasoning steps but still relies heavily on large collections of human-curated problems and their known answers.
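To make the idea of an outcome-based reward concrete, here is a minimal Python sketch of two verifiable reward checks, one for a math answer and one for generated code. The function names (`math_reward`, `code_reward`), the binary 0/1 reward, and the test-case format are illustrative assumptions, not the reward design of any particular RLVR system.

```python
from fractions import Fraction


def math_reward(model_answer: str, reference_answer: str) -> float:
    """Hypothetical check: 1.0 if the final answer equals the known answer, else 0.0."""
    try:
        # Compare as exact rationals so that "0.5" and "1/2" count as equal.
        same = Fraction(model_answer.strip()) == Fraction(reference_answer.strip())
        return float(same)
    except (ValueError, ZeroDivisionError):
        # Output that cannot be parsed as a number earns no reward.
        return 0.0


def code_reward(program: str, func_name: str, test_cases: list) -> float:
    """Hypothetical check: 1.0 if the generated function passes every test, else 0.0.

    test_cases is a list of (args_tuple, expected_output) pairs.
    """
    namespace: dict = {}
    try:
        # Assumption for brevity: a real pipeline would run this in a sandbox.
        exec(program, namespace)
        func = namespace[func_name]
        return float(all(func(*args) == expected for args, expected in test_cases))
    except Exception:
        # Syntax errors, missing functions, and runtime errors all earn no reward.
        return 0.0


if __name__ == "__main__":
    print(math_reward("0.5", "1/2"))
    print(code_reward("def add(a, b):\n    return a + b", "add", [((1, 2), 3)]))
```

Note that both checks still need a human-curated reference: the known answer in the first case and the test cases in the second. Only the intermediate reasoning goes unlabeled.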

This reliance on human-provided data presents a looming bottleneck. Creating high-quality datasets is expensive, time-consuming, and requires significant expertise. As models become more…