Creating and managing machine learning projects can be a complex process for modern data science teams. MLOps has emerged as a powerful approach to streamline this process and automate the workflow from model development to deployment. In this blog post, we will explore step by step how to build an effective MLOps workflow using tools like DagsHub and MLflow. By combining DagsHub's data and model management capabilities with MLflow's experiment tracking and deployment features, you can make your projects more efficient and scalable. Get ready to dive into the world of MLOps and discover how to optimize your workflow with these powerful tools.

DagsHub is a platform that integrates with GitHub, so we need to create a repository on GitHub. You can optionally add a .gitignore and a README. I've created a public repository named MLOPS-Demo, and I'll continue with this repository. Next, we clone the repository to our computer, create a Conda environment, and install the libraries listed in the requirements.txt file. Once everything is ready, we can start coding and connect our repository to DagsHub.
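Before moving on, it can help to confirm that the Conda environment actually contains the libraries this post uses. The snippet below is a minimal sketch that assumes the distribution names pandas, numpy, scikit-learn, mlflow, and dagshub (the exact contents of requirements.txt are not shown in this post); it only reads installed package metadata and does not import the libraries themselves.

```python
from importlib.metadata import PackageNotFoundError, version

# Assumed distribution names for the libraries imported in main.py
REQUIRED_PACKAGES = ["pandas", "numpy", "scikit-learn", "mlflow", "dagshub"]


def installed_versions(packages):
    """Map each package name to its installed version, or None if it is missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions(REQUIRED_PACKAGES).items():
        print(f"{name:<15} {ver or 'NOT INSTALLED'}")
```

Running it prints one line per package; any NOT INSTALLED entry is a package that still needs to be installed in the environment.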

You need a DagsHub account; you can log in with your GitHub or Google account. After logging in, you will be greeted by the DagsHub dashboard.

Click the "Create +" button in the top right corner.

In the window that opens, click the "Connect a repository" menu. You will then see two options: GitHub and Other. Select the GitHub option.

You will see a section like the one in the image above. Select the repository you created and click the Save button.

Finally, we are greeted by a section like this on DagsHub. That is the last step on the DagsHub side. Now, let's focus on the code. I'm creating a file named main.py, and first I need to import the required libraries.

```python
import os
import warnings
import sys
import logging
from urllib.parse import urlparse

import pandas as pd
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score
import mlflow
import mlflow.sklearn
import dagshub

# DagsHub integration: connects MLflow tracking to this repository
dagshub.init(repo_owner='hasan.asus1999', repo_name='MLOPS-Demo', mlflow=True)

logging.basicConfig(level=logging.WARN)
logger = logging.getLogger(__name__)
```

The line dagshub.init(repo_owner='hasan.asus1999', repo_name='MLOPS-Demo', mlflow=True) is essential, and DagsHub provides it for you.

On the DagsHub dashboard, click the "Remote" button, go to the "Experiments" menu, and copy the highlighted code line under "Using MLflow tracking".

```python
def eval_classification_metrics(actual, pred):
    # Weighted averages combine the per-class scores of the three Iris species
    acc = accuracy_score(actual, pred)
    prec = precision_score(actual, pred, average='weighted')
    rec = recall_score(actual, pred, average='weighted')
    f1 = f1_score(actual, pred, average='weighted')
    return acc, prec, rec, f1
```

The eval_classification_metrics function computes accuracy, precision, recall, and F1 score, which we will log and compare in MLflow.
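The average='weighted' argument is what makes precision, recall, and F1 work for the three Iris classes: scikit-learn computes the metric for each class and then averages the results, weighting each class by its number of true samples (its support). The sketch below reimplements weighted precision in plain Python purely to illustrate that calculation; weighted_precision is a hypothetical helper and is not needed in main.py.

```python
from collections import Counter


def weighted_precision(actual, pred):
    """Support-weighted mean of per-class precision (what average='weighted' computes)."""
    support = Counter(actual)  # number of true samples per class
    total = len(actual)
    score = 0.0
    for label, n_true in support.items():
        # True labels of every sample the model predicted as `label`
        predicted_as_label = [a for a, p in zip(actual, pred) if p == label]
        if predicted_as_label:
            correct = sum(1 for a in predicted_as_label if a == label)
            precision = correct / len(predicted_as_label)
        else:
            precision = 0.0  # scikit-learn also falls back to 0.0 here by default
        score += precision * n_true / total
    return score


print(weighted_precision(["a", "a", "b", "b"], ["a", "b", "b", "b"]))
```

In this example class a has precision 1.0 and class b has precision 2/3; both have a support of 2, so the weighted result is (1.0 + 2/3) / 2, roughly 0.833. precision_score(actual, pred, average='weighted') should return the same value for these inputs.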

```python
if __name__ == "__main__":
    warnings.filterwarnings("ignore")
    np.random.seed(42)

    # Dataset: Iris
    csv_url = "https://raw.githubusercontent.com/uiuc-cse/data-fa14/gh-pages/data/iris.csv"
    try:
        data = pd.read_csv(csv_url)
    except Exception as e:
        logger.exception("Unable to download CSV. Error: %s", e)
        sys.exit(1)  # nothing to train on without the data

    # Separate the features (independent variables) from the target (dependent variable)
    X = data.drop("species", axis=1)
    y = data["species"]

    # Train/test split
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=42)

    # Hyperparameters: optional command-line arguments with defaults
    n_estimators = int(sys.argv[1]) if len(sys.argv) > 1 else 100
    max_depth = int(sys.argv[2]) if len(sys.argv) > 2 else None

    # DagsHub URI, copied from the DagsHub dashboard. It is set before the run
    # starts so the run, its metrics, and the logged model share one tracking server.
    remote_server_uri = "https://dagshub.com/hasan.asus1999/MLflow-Test.mlflow"
    mlflow.set_tracking_uri(remote_server_uri)

    with mlflow.start_run():
        clf = RandomForestClassifier(n_estimators=n_estimators, max_depth=max_depth, random_state=42)
        clf.fit(X_train, y_train)
        y_pred = clf.predict(X_test)

        acc, prec, rec, f1 = eval_classification_metrics(y_test, y_pred)

        print(f"Random Forest (n_estimators={n_estimators}, max_depth={max_depth}):")
        print(f"  Accuracy: {acc}")
        print(f"  Precision: {prec}")
        print(f"  Recall: {rec}")
        print(f"  F1 Score: {f1}")

        # MLflow logs
        mlflow.log_param("n_estimators", n_estimators)
        mlflow.log_param("max_depth", max_depth)
        mlflow.log_metric("accuracy", acc)
        mlflow.log_metric("precision", prec)
        mlflow.log_metric("recall", rec)
        mlflow.log_metric("f1_score", f1)

        # The Model Registry is not available with a local file store
        tracking_url_type_store = urlparse(mlflow.get_tracking_uri()).scheme
        if tracking_url_type_store != "file":
            mlflow.sklearn.log_model(clf, "model", registered_model_name="RandomForestIrisModel")
        else:
            mlflow.sklearn.log_model(clf, "model")
```

I chose the Iris dataset for this study because it is a classic dataset for classification algorithms. The line csv_url = "https://raw.githubusercontent.com/uiuc-cse/data-fa14/gh-pages/data/iris.csv" loads the Iris dataset directly, so I don't need to download it from Kaggle or any other source. If you want, you can find more detailed information about the Iris dataset here. Another important point is the DagsHub URI, which is this line of code: remote_server_uri = "https://dagshub.com/hasan.asus1999/MLflow-Test.mlflow". This line is what connects us to MLflow. So where do we find it in the DagsHub dashboard?
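Both the DagsHub URI and the if/else at the end of main.py revolve around the tracking URI. The sketch below makes the two ideas explicit; the helper names are hypothetical. dagshub_tracking_uri simply follows the https://dagshub.com/{owner}/{repo}.mlflow pattern of the URI used in this post, and registers_model mirrors the scheme check that decides whether registered_model_name is passed to log_model.

```python
from urllib.parse import urlparse


def dagshub_tracking_uri(repo_owner, repo_name):
    """Build a tracking URI following the DagsHub pattern used in this post."""
    return f"https://dagshub.com/{repo_owner}/{repo_name}.mlflow"


def registers_model(tracking_uri):
    """Mirror main.py: any scheme other than "file" takes the registry branch."""
    return urlparse(tracking_uri).scheme != "file"


uri = dagshub_tracking_uri("hasan.asus1999", "MLOPS-Demo")
print(uri, registers_model(uri))
print(registers_model("file:///tmp/mlruns"))
```

One subtlety of this check: a bare local path such as mlruns has an empty scheme, so it would also take the registry branch. Comparing against both "file" and the empty string is one way to guard against that.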

On the DagsHub dashboard, click the "Remote" button, go to the "Experiments" menu, and copy the highlighted code line under "MLflow Tracking remote".

Now everything is ready. As shown in the image above, let's click the "Go to mlflow UI" button to open MLflow.

We are greeted by an empty screen; nothing is visible because I haven't run the Python code yet. To run it, type python main.py or python3 main.py.

If there are no errors in your DagsHub connection and code, you should see output like this in the console.

Let's go through the output line by line. Accessing as hasan.asus1999 means you are authenticated and accessing your DagsHub account correctly. Initialized MLflow to track repo "hasan.asus1999/MLOPS-Demo" means MLflow has been initialized to log all training runs, metrics, and models to your DagsHub repository named MLOPS-Demo. Random Forest (n_estimators=100, max_depth=None) indicates the model was trained with the default hyperparameters: if I don't pass any values to the script, n_estimators defaults to 100 and max_depth to None. We'll pass parameters in another example and compare the results in MLflow.

```python
# Hyperparameters
n_estimators = int(sys.argv[1]) if len(sys.argv) > 1 else 100
max_depth = int(sys.argv[2]) if len(sys.argv) > 2 else None
```

Do you remember this code from above? It lets us use the default values, but we will override them when we rerun the application shortly.
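The two sys.argv lines can be pulled into a small function to make the default and override behavior easy to check. parse_hyperparameters is a hypothetical refactoring of the same logic, not code from main.py.

```python
def parse_hyperparameters(argv):
    """Return (n_estimators, max_depth) from an argv-style list, defaulting to 100 and None."""
    n_estimators = int(argv[1]) if len(argv) > 1 else 100
    max_depth = int(argv[2]) if len(argv) > 2 else None
    return n_estimators, max_depth


print(parse_hyperparameters(["main.py"]))
print(parse_hyperparameters(["main.py", "150", "5"]))
```

The first call returns (100, None) and the second returns (150, 5), matching the python3 main.py 150 5 run later in this post. Passing only one argument overrides n_estimators and leaves max_depth at None; in main.py the list would be sys.argv.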

```text
Accuracy: 1.0
Precision: 1.0
Recall: 1.0
F1 Score: 1.0
```

These metrics show perfect scores (1.0), which means the model classified every test sample correctly. This is not surprising for the Iris dataset, which is small, clean, and well-balanced; with such a small test set, though, a perfect score says little about how the model would perform on new data.

Successfully registered model 'RandomForestIrisModel' means the trained model was registered in the MLflow Model Registry under the name RandomForestIrisModel.

Created version '1' of model 'RandomForestIrisModel' means this model is saved as version 1. If you train and log the model again (with different parameters), MLflow will create versions 2, 3, and so on.
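Once a version exists in the Model Registry, it can be loaded back by name and version number through MLflow's models:/{name}/{version} URI scheme. The sketch below assumes the same tracking setup as main.py (for example, after dagshub.init has run) and uses mlflow.pyfunc.load_model; both helper names are hypothetical.

```python
def registered_model_uri(name, version):
    """Build a Model Registry URI in MLflow's models:/<name>/<version> format."""
    return f"models:/{name}/{version}"


def load_registered_model(name, version):
    """Load a registered model version; requires MLflow and a configured tracking URI."""
    import mlflow.pyfunc  # imported lazily so the URI helper works without MLflow

    return mlflow.pyfunc.load_model(registered_model_uri(name, version))
```

For example, load_registered_model("RandomForestIrisModel", 1).predict(X_test) would score the test features from main.py with version 1 of the model.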

Now let's refresh the MLflow dashboard page and check that we see something like this.

As you can see, everything works. In the image above, clicking the Run Name field next to the orange icon opens the run details, such as parameters and metrics.

They match the values in our console exactly; that's great!

Now let's try different parameters for our RandomForest algorithm. This time I'll run the application as follows: python3 main.py 150 5

This means n_estimators will be 150 and max_depth will be 5.
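Instead of typing each combination by hand, a small driver script can launch main.py once per hyperparameter pair, producing one MLflow run (and one new model version) each time. This is a hypothetical helper, not part of the original project; it assumes main.py is in the current directory and reuses its positional-argument convention.

```python
import itertools
import subprocess
import sys


def build_commands(n_estimators_values, max_depth_values, script="main.py"):
    """Return one command (as an argument list) per hyperparameter combination."""
    return [
        [sys.executable, script, str(n), str(d)]
        for n, d in itertools.product(n_estimators_values, max_depth_values)
    ]


def run_sweep(commands):
    """Run each command in turn; check=True stops the sweep on the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

Calling run_sweep(build_commands([100, 150], [3, 5])) would train four models. Because main.py converts max_depth with int(), the max_depth=None case is only reachable by omitting the second argument, so it is not part of this grid.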

Now let's refresh the MLflow dashboard and check that we see something like the image below.

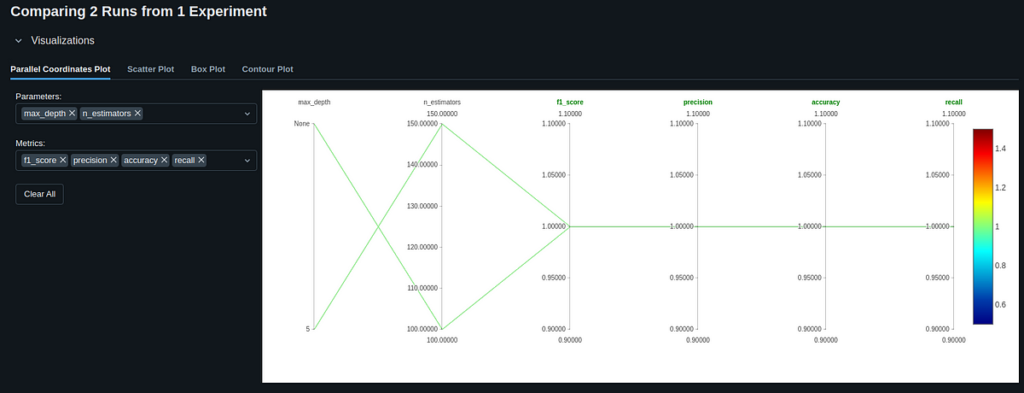

As you can see, we now have two different runs, each with its own model. We can compare them visually by selecting the checkboxes next to the runs and clicking the "Compare" button.

You can then build visualizations by selecting the parameters and metrics you are interested in. I selected all parameters and metrics; you can be more selective.
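The same comparison can also be done from code. mlflow.search_runs(output_format="list") returns Run objects whose data.params and data.metrics dictionaries hold the values logged in main.py; the sketch below (compare_runs is a hypothetical helper) flattens them into rows sorted by a chosen metric. It assumes the tracking URI is configured the same way as in main.py.

```python
def compare_runs(runs, metric="accuracy"):
    """Flatten MLflow Run objects into dicts, best `metric` first.

    MLflow returns params as strings (e.g. "150"), so they are left as-is.
    Runs that did not log `metric` are sorted last.
    """
    rows = [
        {
            "run_id": run.info.run_id,
            "n_estimators": run.data.params.get("n_estimators"),
            "max_depth": run.data.params.get("max_depth"),
            metric: run.data.metrics.get(metric),
        }
        for run in runs
    ]
    return sorted(
        rows,
        key=lambda row: row[metric] if row[metric] is not None else float("-inf"),
        reverse=True,
    )
```

With MLflow configured as in main.py, something like for row in compare_runs(mlflow.search_runs(output_format="list"), metric="f1_score"): print(row) prints one line per run, which is a quick way to check the dashboard's comparison view from the console.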