NVSwitch connects all GPUs in a node through a switch fabric, preserving NVLink’s point-to-point nature while providing uniform all-to-all communication.

NVSwitch Pros & Cons

Pros:

- Full bandwidth, all-to-all connections

- Uniform latency and no routing complexity

- Simplifies NCCL collective operations

- Enables efficient tensor-parallel/model-parallel training

- Important for DGX, HGX, and NVIDIA SuperPOD deployments

Cons:

- Costly and power-hungry

- Requires specialized hardware/chassis (e.g., DGX, NVIDIA’s high-end server with 8 GPUs connected via NVSwitch and optimized for large-scale deep learning)

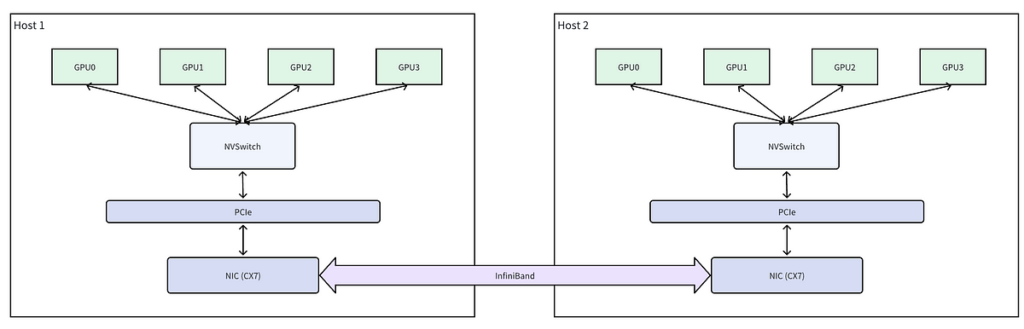

Multi-Host Setup with InfiniBand + NVLink

In a multi-host NVSwitch-based GPU system, the GPUs and the NVSwitch do not connect directly to the network interface. Instead, GPUs access the NIC (e.g., ConnectX-7) through the host’s PCIe subsystem. For inter-node communication, the NICs are connected via InfiniBand, forming a high-speed cluster fabric. GPUDirect RDMA allows the NIC to perform direct memory access (DMA) to and from GPU memory over PCIe, completely bypassing the CPU and system memory. This zero-copy transfer path significantly reduces communication latency and CPU overhead, enabling efficient, large-scale distributed training across multiple GPU servers. The combination of NVSwitch (intra-node) and GPUDirect RDMA (inter-node) provides a seamless, high-performance data path for collective operations such as NCCL’s all_reduce.

We demonstrate the all_reduce operation on 4 GPUs, each holding the vector [x0–x7], split into 4 chunks.

Each GPU starts with the full tensor of 8 elements, divided into 4 chunks:

- chunk0: indices [0,1]

- chunk1: indices [2,3]

- chunk2: indices [4,5]

- chunk3: indices [6,7]

Each GPU will reduce one unique chunk by summing that chunk from all GPUs.
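The reduce step above can be sketched as a small pure-Python simulation. This is only an illustration of the data movement, not NCCL code: the input values (GPU `g` holds `10*g + i` at index `i`) and the helper names `chunk_of` and `reduce_scatter` are assumptions made up for this example.

```python
NUM_GPUS = 4
CHUNK_SIZE = 2  # 8 elements split into 4 chunks

# Hypothetical input: GPU g holds the vector [10*g + 0, ..., 10*g + 7].
tensors = [[10 * g + i for i in range(8)] for g in range(NUM_GPUS)]

def chunk_of(vec, c):
    """Return chunk c (two consecutive elements) of a vector."""
    return vec[c * CHUNK_SIZE:(c + 1) * CHUNK_SIZE]

def reduce_scatter(tensors):
    """GPU r ends up owning the element-wise sum of chunk r across all GPUs."""
    owned = []
    for r in range(len(tensors)):
        # Collect chunk r from every GPU, then sum position by position.
        pieces = [chunk_of(t, r) for t in tensors]
        owned.append([sum(vals) for vals in zip(*pieces)])
    return owned

owned = reduce_scatter(tensors)
print(owned)  # [[60, 64], [68, 72], [76, 80], [84, 88]]
```

With these inputs, the sum at index `i` is `60 + 4*i`, so GPU 0 owns the reduced chunk0 `[60, 64]`, GPU 1 owns chunk1 `[68, 72]`, and so on.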

Each GPU now owns one fully reduced chunk. These chunks are shared with all other GPUs in a ring pattern over 3 steps.

All GPUs now hold the complete reduced tensor.
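The ring sharing phase can be sketched the same way. The simulation below assumes each GPU already owns one fully reduced chunk (the values in `owned` are hypothetical) and passes chunks to the next GPU in the ring for `N - 1 = 3` steps. The function name `ring_all_gather` and the send schedule are illustrative assumptions, not NCCL's actual implementation.

```python
def ring_all_gather(owned):
    """Simulate a ring all-gather: GPU r starts with chunk r only."""
    n = len(owned)
    # buffers[r][c] is GPU r's copy of chunk c (None until it arrives).
    buffers = [[owned[r] if c == r else None for c in range(n)]
               for r in range(n)]
    for step in range(n - 1):
        # Build all sends first so every GPU sends "at the same time".
        sends = []
        for r in range(n):
            c = (r - step) % n  # chunk that GPU r forwards in this step
            sends.append(((r + 1) % n, c, buffers[r][c]))
        for dst, c, data in sends:
            buffers[dst][c] = data
    # Flatten each GPU's chunks back into one vector.
    return [[x for chunk in buf for x in chunk] for buf in buffers]

# Hypothetical reduced chunks, one owned by each of the 4 GPUs.
owned = [[60, 64], [68, 72], [76, 80], [84, 88]]
result = ring_all_gather(owned)
print(result[0])  # [60, 64, 68, 72, 76, 80, 84, 88]
```

In step 0 each GPU sends its own chunk to its right-hand neighbor; in each later step it forwards the chunk it received in the previous step, so after 3 steps every GPU holds all 4 chunks.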