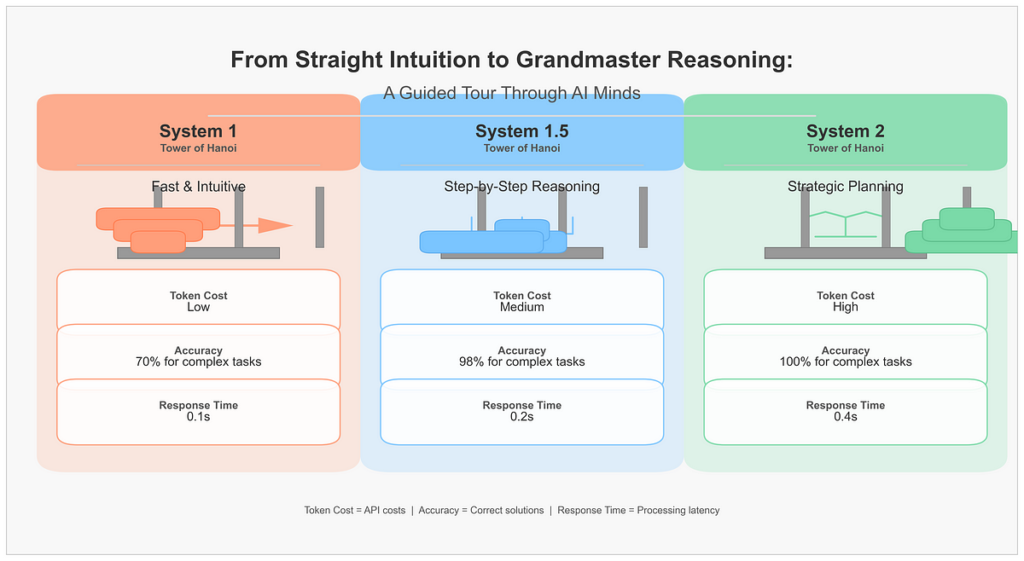

- 2–3 disks: All three nail it.

- 4 disks: System 1 stumbles (~70% correct); Systems 1.5 and 2 stay reliable.

- 5–6 disks: System 1 is a liability; System 2 is flawless; System 1.5 is the practical hero (98%+ accuracy).

    # Code to validate moves and check correctness
    def validate_moves(moves, num_disks):
        # Each peg is a stack; the last element is the top disk
        pegs = {"A": list(range(num_disks, 0, -1)), "B": [], "C": []}
        illegal_moves = 0
        for move in moves:
            # Parse a move like "Move disk 1 from A to C"
            disk, src, tgt = parse_move(move)
            # Check if the move is legal: the disk must be on top of the source peg
            if not pegs[src] or pegs[src][-1] != disk:
                illegal_moves += 1
                continue
            # A disk may never be placed on a smaller disk
            if pegs[tgt] and pegs[tgt][-1] < disk:
                illegal_moves += 1
                continue
            # Execute the move
            pegs[src].pop()
            pegs[tgt].append(disk)
        # Check if the puzzle is solved
        is_complete = pegs["C"] == list(range(num_disks, 0, -1))
        return is_complete and illegal_moves == 0, illegal_moves
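The validator above relies on a `parse_move` helper that the article does not show. Here is a minimal sketch of one, assuming each move is written as "Move disk N from X to Y", possibly with a step number in front or an annotation after it. The regular expression and the error handling are illustrative assumptions, not the benchmark's actual parser.

```python
import re

# Hypothetical helper: the article does not show its own parse_move.
# Assumes moves look like "Move disk 1 from A to C".
MOVE_PATTERN = re.compile(r"Move disk (\d+) from ([ABC]) to ([ABC])", re.IGNORECASE)


def parse_move(move):
    """Return (disk, source_peg, target_peg) parsed from one move string."""
    match = MOVE_PATTERN.search(move)
    if match is None:
        raise ValueError(f"Unrecognised move: {move!r}")
    disk, src, tgt = match.groups()
    return int(disk), src.upper(), tgt.upper()


print(parse_move("Move disk 1 from A to C"))
print(parse_move("3. Move disk 1 from C to B [group small disks]"))
```

Using `search` rather than a full-string match lets the same helper handle plain and annotated move lists; a stricter benchmark might prefer to reject anything that is not an exact match.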

You’d think “fast” always wins. But:

- System 1 uses few tokens, until you factor in re-asks, manual checks and bug-fix traffic.

- System 2 invests more tokens up front, then sails home error-free.

    # Simplified token efficiency calculation
    def calculate_total_cost(system, num_disks, retry_probability):
        # Initial request
        prompt = generate_prompt(system, num_disks)
        prompt_tokens = estimate_tokens(prompt)
        completion_tokens = estimate_completion_tokens(system, num_disks)
        # Base cost
        cost = estimate_cost(prompt_tokens, completion_tokens)
        # Add retry costs
        expected_retries = 0
        if system == "system1":
            # System 1 has higher retry rates as complexity increases
            expected_retries = retry_probability * (num_disks - 1)
        elif system == "system1.5":
            # System 1.5 has lower retry rates
            expected_retries = retry_probability * (num_disks - 3) if num_disks > 3 else 0
        # System 2 has near-zero retries
        # Total cost including retries
        total_cost = cost * (1 + expected_retries)
        return total_cost

Insight: It’s cheaper to “pay” for rigorous reasoning once than to pay the price of repeated failures.
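To make the arithmetic concrete, here is a small self-contained sketch of the same retry multiplier. Only the retry formulas come from the function above; the base costs and the 0.4 retry probability are made-up placeholder numbers, not benchmark results.

```python
def expected_retries(system, num_disks, retry_probability):
    """Retry multiplier using the same formulas as calculate_total_cost."""
    if system == "system1":
        return retry_probability * (num_disks - 1)
    if system == "system1.5":
        return retry_probability * (num_disks - 3) if num_disks > 3 else 0
    return 0  # System 2: near-zero retries, modelled here as zero


# Placeholder base costs per request (arbitrary units, NOT measured values).
ASSUMED_BASE_COST = {"system1": 1.0, "system1.5": 1.5, "system2": 2.5}


def total_cost(system, num_disks, retry_probability):
    """Base cost scaled by the expected number of retries."""
    base = ASSUMED_BASE_COST[system]
    return base * (1 + expected_retries(system, num_disks, retry_probability))


for disks in (3, 6):
    for system in ("system1", "system1.5", "system2"):
        print(disks, system, round(total_cost(system, disks, 0.4), 2))
```

With these placeholder numbers, System 2 goes from the most expensive option at 3 disks to the cheapest at 6, which is the shape of the argument rather than a measured result.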

Let us look at the actual data from our benchmarks:

Notice that as complexity increases, System 2’s higher initial token cost becomes justified by its perfect accuracy.

System 1:

    Move disk 1 from A to C
    Move disk 2 from A to B
    Move disk 1 from C to B
    Move disk 3 from A to C
    Move disk 1 from B to A
    Move disk 2 from B to C
    ...
    [stops at step 20, missing 11 moves]

System 1.5:

    1. Move disk 1 from A to C [clear path]
    2. Move disk 2 from A to B [free disk 3]
    3. Move disk 1 from C to B [group small disks]
    4. Move disk 3 from A to C [continue clearing larger disks]
    ...
    31. Move disk 5 from A to C [final move]

System 2:

    Strategy:
    - For n disks, we need (2^n - 1) moves
    - For 5 disks, we need 31 moves
    - We'll use recursion:
      1. Move 4 disks from A to B
      2. Move disk 5 from A to C
      3. Move 4 disks from B to C

    Moves:
    Move disk 1 from A to C
    Move disk 2 from A to B
    ...
    Move disk 5 from A to C
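The recursive strategy in the System 2 output above fits in a few lines of Python. This is a reference sketch for generating ground-truth moves to compare model output against. The function name `solve_hanoi` and the move string format are assumptions made here, not code from the benchmark repository.

```python
def solve_hanoi(n, src="A", aux="B", tgt="C"):
    """Return the optimal move list for n disks as a list of strings."""
    if n == 0:
        return []
    return (
        solve_hanoi(n - 1, src, tgt, aux)          # park n-1 disks on the spare peg
        + [f"Move disk {n} from {src} to {tgt}"]   # move the largest disk
        + solve_hanoi(n - 1, aux, src, tgt)        # bring the n-1 disks back on top
    )


moves = solve_hanoi(5)
print(len(moves))   # 2**5 - 1 = 31
print(moves[:3])
print(moves[15])    # the single move of disk 5, at step 16
```

Because the recursion moves the largest disk exactly once, disk 5 travels at step 16 of 31, and the last step of an optimal solution moves disk 1.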

Traditional prompt engineering = crafting the perfect one-liner. Flow engineering = designing a multi-stage workflow:

    # Flow engineering example with multi-step prompting
    def flow_engineering_approach(num_disks):
        # Each prompt includes the previous step's output, assuming call_llm is stateless
        # Step 1: Ask the model to understand the problem
        understand_prompt = f"Explain the Tower of Hanoi puzzle with {num_disks} disks and its rules."
        understanding = call_llm(understand_prompt)
        # Step 2: Ask for a solution strategy
        strategy_prompt = f"{understanding}\n\nBased on this understanding, outline a strategy to solve the Tower of Hanoi with {num_disks} disks."
        strategy = call_llm(strategy_prompt)
        # Step 3: Ask for execution
        execution_prompt = f"{strategy}\n\nUsing this strategy, list the exact moves to solve the Tower of Hanoi with {num_disks} disks."
        moves = call_llm(execution_prompt)
        # Step 4: Validate the solution
        validation_prompt = f"{moves}\n\nVerify if these moves correctly solve the Tower of Hanoi with {num_disks} disks according to the rules."
        validation = call_llm(validation_prompt)
        return {
            "understanding": understanding,
            "strategy": strategy,
            "moves": moves,
            "validation": validation
        }

- Comprehend: “Explain the rules.”

- Plan: “Outline your recursive strategy.”

- Execute: “List the moves.”

- Validate: “Check legality; fix errors.”

Those extra “plan” + “validate” steps are your System 1.5 in action, a tiny workflow that unlocks reliability.

- Code generation: quick scaffolds vs. tested modules vs. full architectural plans

    # Example of a System 2 approach for code generation
    def generate_code_with_system2(requirements):
        prompt = f"""You are an expert software engineer. Plan carefully before writing code.

    Requirements: {requirements}

    First, outline the architecture and main components.
    Then, identify potential edge cases and how to handle them.
    Finally, write clean, well-documented code that meets these requirements.
    Include error handling, tests, and comments explaining key decisions.
    """
        return call_llm(prompt)

- Medical diagnostics: symptom matching vs. differential reasoning vs. care-path design

- Legal due diligence: clause lookup vs. risk assessment vs. contract drafting

- Start simple (S1): for basic queries, keep prompts tight.

- Escalate smart (S1.5): chain-of-thought for medium-complexity tasks.

- Lock in (S2): when failure isn’t an option, build a flow with planning and validation.

    # Adaptive system selection based on error rates
    def adaptive_prompt_system(task, complexity):
        # Start with System 1
        system = "system1"
        max_attempts = 3
        attempts = 0
        while attempts < max_attempts:
            prompt = generate_prompt(system, task, complexity)
            response = call_llm(prompt)
            # Validate the response
            valid, errors = validate_response(response, task)
            if valid:
                return response
            # Escalate to more robust systems after failures
            attempts += 1
            if attempts == 1:
                system = "system1.5"
            elif attempts == 2:
                system = "system2"
        # Return best effort with System 2
        return response

Tip: Instrument your LLM calls. Track errors, retries and token usage. Automatically switch systems when accuracy dips below your threshold.
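One way to act on this tip is a small in-memory tracker that records each call's outcome and token count, then recommends escalation when rolling accuracy drops. The class name, the window size and the threshold below are illustrative assumptions. Real token counts would come from whatever your LLM client reports.

```python
from collections import deque

ESCALATION_ORDER = ["system1", "system1.5", "system2"]


class PromptMetrics:
    """Tracks rolling accuracy, retries and total tokens for one prompting system."""

    def __init__(self, window=20, threshold=0.9):
        self.outcomes = deque(maxlen=window)  # True for each valid response
        self.threshold = threshold
        self.total_tokens = 0
        self.retries = 0

    def record(self, valid, tokens, is_retry=False):
        self.outcomes.append(bool(valid))
        self.total_tokens += tokens
        self.retries += int(is_retry)

    def accuracy(self):
        if not self.outcomes:
            return 1.0  # no data yet, so do not escalate
        return sum(self.outcomes) / len(self.outcomes)

    def should_escalate(self):
        return self.accuracy() < self.threshold


def next_system(current):
    """Return the next more deliberate system, or the same one at the top."""
    index = ESCALATION_ORDER.index(current)
    return ESCALATION_ORDER[min(index + 1, len(ESCALATION_ORDER) - 1)]


metrics = PromptMetrics(window=5, threshold=0.8)
for valid in (True, True, False, False, True):
    metrics.record(valid, tokens=120)

system = "system1"
if metrics.should_escalate():
    system = next_system(system)
print(metrics.accuracy(), metrics.total_tokens, system)
```

A rolling window keeps the decision responsive to recent failures instead of averaging them away over a long history.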

Flow engineering is the seed of agentic AI: small agents executing micro-flows under human oversight, collaborating like a symphony.

    # Simple agent-based workflow
    class Agent:
        def __init__(self, name, system, specialization):
            self.name = name
            self.system = system
            self.specialization = specialization

        def process(self, task, context):
            # generate_prompt is not shown in the article; it is assumed to build
            # a prompt from the agent's system and specialization
            prompt = self.generate_prompt(task, context)
            return call_llm(prompt)

    def agentic_workflow(task):
        # Create specialised agents
        planner = Agent("Planner", "system2", "breaking down complex tasks")
        researcher = Agent("Researcher", "system1", "retrieving information")
        reasoner = Agent("Reasoner", "system1.5", "analyzing information")
        validator = Agent("Validator", "system2", "checking correctness")
        # Coordination workflow (assumes call_llm returns parsed dictionaries)
        plan = planner.process(task, {})
        research_results = researcher.process(plan["research_tasks"], {})
        analysis = reasoner.process(plan["analysis_tasks"], research_results)
        validation = validator.process(plan["validation_tasks"], analysis)
        return {
            "plan": plan,
            "research": research_results,
            "analysis": analysis,
            "validation": validation,
            "final_result": analysis if validation["is_valid"] else None
        }

One agent handles data ingestion, another reasons, another validates, all under a conductor (you or a master agent).

Want to see these results firsthand? Follow these steps:

- Clone the repository: git clone https://github.com/Bora-Bastab/tower-of-hanoi-llm-benchmark.git

- Install dependencies: pip install -r requirements.txt

- Run the benchmark: python hanoi_benchmark.py

The script will generate CSV results and all the visualisations mentioned in this article. Feel free to modify the parameters in hanoi_benchmark.py to test different scenarios.

While the Tower of Hanoi is a classic mathematical puzzle, its underlying principles have found practical applications across various domains:

1. Computer Science and Algorithms

The recursive nature of the Tower of Hanoi makes it an excellent tool for teaching and understanding recursion in computer science. It’s often used to illustrate how complex problems can be broken down into simpler subproblems.

2. Data Backup Strategies

In the realm of data management, the Tower of Hanoi algorithm has inspired backup rotation schemes. These schemes optimise the frequency and retention of backups, ensuring data integrity while minimising storage requirements.

3. Neuropsychological Assessments

Psychologists use the Tower of Hanoi task to assess executive functions, such as planning and problem-solving abilities. It’s particularly useful in evaluating individuals with frontal lobe impairments or cognitive disorders.

4. Artificial Intelligence and Machine Learning

The problem-solving strategies derived from the Tower of Hanoi have influenced AI algorithms, especially in areas requiring planning and sequential decision making. It serves as a benchmark for evaluating the efficiency of AI models in handling recursive tasks.
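The backup rotation link in item 2 can be made concrete. In an optimal Hanoi solution, the disk moved at step k is one more than the number of trailing zero bits in k, and the Tower of Hanoi rotation scheme reuses that pattern to pick a backup set for each day. A minimal sketch follows; the function names and the letter labels for backup sets are chosen here for illustration.

```python
def disk_moved_at_step(step):
    """Disk moved at the given 1-based step of an optimal solution."""
    # Lowest set bit of step; its bit length is (trailing zeros + 1).
    return (step & -step).bit_length()


def backup_set_for_day(day, num_sets):
    """Backup set label for a 1-based day, capped at the last available set."""
    # Assumes num_sets is at most 26 so a single letter can label each set.
    index = min(disk_moved_at_step(day), num_sets) - 1
    return "ABCDEFGHIJKLMNOPQRSTUVWXYZ"[index]


print([disk_moved_at_step(k) for k in range(1, 9)])
print("".join(backup_set_for_day(d, 3) for d in range(1, 9)))
```

Set A is reused every second day, set B every fourth, and the last set absorbs everything less frequent, so older backups are retained at exponentially wider intervals.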

To effectively apply the insights from the Tower of Hanoi and the prompting strategies discussed:

- Understand the Complexity: Recognise the nature of the problem at hand. Simple tasks may benefit from intuitive approaches (System 1), while complex problems may require deliberate, step-by-step strategies (System 2).

- Balance Efficiency and Accuracy: Aim for a hybrid approach (System 1.5) when appropriate, combining the speed of intuition with the accuracy of analytical thinking.

- Leverage Recursive Thinking: Break down complex problems into smaller, manageable parts, similar to the recursive steps in the Tower of Hanoi, to simplify and solve them effectively.

- Apply in Diverse Fields: Consider how these strategies can be adapted beyond theoretical exercises, such as in software development, data management, cognitive assessments and AI planning.

By integrating these approaches, we can enhance problem-solving capabilities and optimise decision-making processes across various applications. We need to remember that prompting is not just a hack; it’s a design. Our prompt is the interface between human intention and machine cognition.

- Experiment: Try 3–6 disks with each system.

- Measure: Track accuracy, cost and developer hours saved.

- Share: Post your results, riff on the workflow and join the conversation.

Remember: Sometimes the slow, deliberate path is the fastest way home. So next time, before you hit “send” on that one-liner, ask yourself: “Which system do I really need?”