Artificial Intelligence (AI) has become an integral part of modern technological ecosystems, powering innovations across sectors such as healthcare, finance, defense, and transportation. However, behind the scenes of sophisticated AI applications lies a complex and robust AI infrastructure: a foundational layer that enables data collection, storage, processing, model training, deployment, and monitoring. AI infrastructure is not merely a collection of hardware and software but a strategic enabler for operationalizing AI at scale with performance, efficiency, and ethical oversight.

This article explores the key components of AI infrastructure, its role in enabling scalable and ethical AI, the challenges in its deployment, and the future directions shaping its evolution.

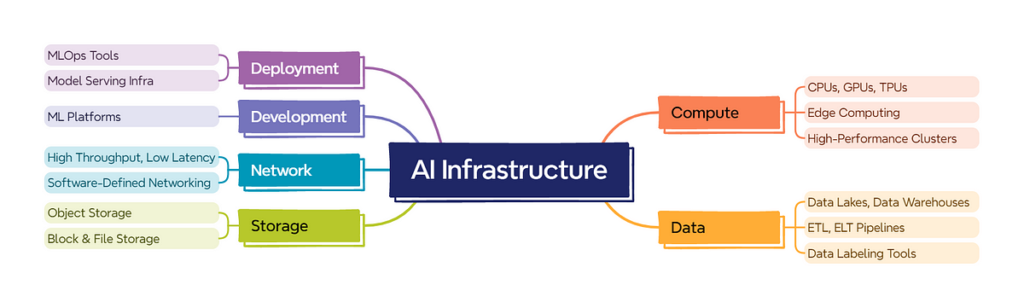

AI workloads, particularly those involving deep learning, require intensive computational resources. The compute infrastructure typically includes:

- CPUs, GPUs, TPUs: While CPUs are suitable for light workloads and orchestration tasks, GPUs and TPUs are essential for parallel processing in model training.

- Edge Computing: For real-time AI applications such as autonomous vehicles and IoT devices, edge computing infrastructure is deployed closer to data sources, reducing latency.

- High-Performance Computing (HPC) Clusters: For large-scale training of models like GPT or BERT, organizations rely on clustered compute nodes with high-speed interconnects.
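To make the role of clustered nodes and interconnects more concrete, the following is a minimal sketch of data-parallel gradient averaging, simulated in plain Python with no real cluster involved. The function names and the sample gradients are illustrative assumptions, not part of any specific framework. The traffic estimate uses the standard ring all-reduce cost, in which each worker sends roughly 2 × (N − 1) / N times the gradient payload.

```python
from typing import List


def all_reduce_mean(worker_grads: List[List[float]]) -> List[float]:
    """Average gradients element-wise across workers (the result of an all-reduce)."""
    if not worker_grads:
        raise ValueError("need at least one worker")
    n_workers = len(worker_grads)
    n_params = len(worker_grads[0])
    if any(len(g) != n_params for g in worker_grads):
        raise ValueError("all workers must hold gradients of the same length")
    return [sum(g[i] for g in worker_grads) / n_workers for i in range(n_params)]


def ring_all_reduce_bytes_per_worker(n_params: int, n_workers: int,
                                     bytes_per_param: int = 4) -> float:
    """Bytes each worker sends in one ring all-reduce: 2 * (N - 1) / N * payload."""
    if n_workers < 1:
        raise ValueError("n_workers must be at least 1")
    payload = n_params * bytes_per_param
    return 2 * (n_workers - 1) / n_workers * payload


# Hypothetical gradients from three workers for a two-parameter model.
grads = [[0.2, -0.4], [0.4, 0.0], [0.0, 0.1]]
averaged = all_reduce_mean(grads)

# Per-step traffic for a 1M-parameter model in 32-bit floats on 4 workers.
traffic = ring_all_reduce_bytes_per_worker(n_params=1_000_000, n_workers=4)
print(averaged, traffic)
```

Because this exchange happens on every training step, the per-worker traffic grows with model size, which is one reason high-speed interconnects matter for large models.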

AI models thrive on data. The data infrastructure must support the ingestion, cleansing, labeling, storage, and retrieval of large and diverse datasets.

- Data Lakes and Warehouses: Data lakes store raw, unstructured data, while warehouses house structured and refined data for model input.

- ETL/ELT Pipelines: Automated pipelines ensure data is extracted, transformed, and loaded efficiently to maintain model freshness.

- Data Labeling Tools: Human-in-the-loop or automated tools are essential for supervised learning models.
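The extract, transform, and load steps above can be sketched with the Python standard library alone. This is a toy illustration under stated assumptions: the CSV content, the `users` table, and the cleaning rules (drop rows with a missing age, normalize country codes) are all hypothetical, and an in-memory SQLite database stands in for a real warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; a real pipeline would read from files, queues, or APIs.
RAW_CSV = """user_id,age,country
1, 34 ,us
2,,DE
3,29,fr
"""


def extract(raw):
    """Parse raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(raw)))


def transform(rows):
    """Drop rows with a missing age and normalize types and country codes."""
    cleaned = []
    for row in rows:
        age = row["age"].strip()
        if not age:
            continue  # skip incomplete records
        cleaned.append((int(row["user_id"]), int(age), row["country"].strip().upper()))
    return cleaned


def load(conn, rows):
    """Write cleaned rows into the target table, replacing existing keys."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users "
        "(user_id INTEGER PRIMARY KEY, age INTEGER, country TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO users VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
loaded = conn.execute(
    "SELECT user_id, age, country FROM users ORDER BY user_id"
).fetchall()
print(loaded)
```

Using `INSERT OR REPLACE` keeps the load step idempotent, so re-running the pipeline on the same input does not duplicate rows.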

AI projects often deal with petabytes of data, necessitating scalable and fast storage systems.

- Object Storage (e.g., AWS S3): For storing unstructured datasets like images, videos, and logs.

- Block and File Storage: For use cases requiring low-latency access, such as during training or real-time inference.

AI workloads are bandwidth-intensive, especially during distributed training.

- High-throughput, Low-latency Networks: Required to transfer model parameters quickly during parallel processing.

- Software-Defined Networking (SDN): Enables flexible and programmable network management in cloud-native environments.

- ML Platforms (e.g., TensorFlow, PyTorch): Provide abstractions for building and training models.

- MLOps Tools (e.g., MLflow, Kubeflow): Enable version control, CI/CD, monitoring, and reproducibility.

- Model Serving Infrastructure: Facilitates deploying models as APIs or microservices using tools like TensorFlow Serving, TorchServe, or NVIDIA Triton.

AI infrastructure enables organizations to train models faster, iterate quickly, and scale deployments seamlessly. Distributed compute clusters, parallel processing, and automated pipelines reduce time-to-market and enable continuous experimentation.

Mature AI infrastructure supports versioning of datasets, models, and experiments, ensuring reproducibility, which is a cornerstone of scientific rigor and regulatory compliance. This is particularly critical in sectors like healthcare and finance, where decisions must be explainable and auditable.
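One simple way to picture dataset and experiment versioning is content hashing: identical inputs always yield the same version identifier, so a run can be traced back to exactly what produced it. The sketch below is an assumption-laden illustration rather than how any particular MLOps tool works; the record fields and the 12-character fingerprint length are arbitrary choices.

```python
import hashlib
import json


def fingerprint(payload: bytes) -> str:
    """Return a short, deterministic identifier derived from the content."""
    return hashlib.sha256(payload).hexdigest()[:12]


def experiment_record(dataset: bytes, params: dict, metrics: dict) -> dict:
    """Bundle content-derived versions with the parameters and results of a run."""
    # sort_keys makes the hash independent of dictionary insertion order.
    params_blob = json.dumps(params, sort_keys=True).encode("utf-8")
    return {
        "dataset_version": fingerprint(dataset),
        "params_version": fingerprint(params_blob),
        "params": params,
        "metrics": metrics,
    }


# Hypothetical runs: a and b share data and parameters; c uses changed data.
run_a = experiment_record(b"x,y\n1,2\n", {"lr": 0.01, "epochs": 5}, {"accuracy": 0.91})
run_b = experiment_record(b"x,y\n1,2\n", {"epochs": 5, "lr": 0.01}, {"accuracy": 0.91})
run_c = experiment_record(b"x,y\n1,3\n", {"lr": 0.01, "epochs": 5}, {"accuracy": 0.88})

print(run_a["dataset_version"] == run_b["dataset_version"])
print(run_a["dataset_version"] == run_c["dataset_version"])
```

An auditor comparing `run_a` and `run_c` can see from the differing `dataset_version` values that the change in accuracy coincides with a change in the data, not in the hyperparameters.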

A well-architected AI infrastructure incorporates mechanisms for bias detection, explainability, model drift monitoring, and access control. This aligns AI development with ethical frameworks and reduces the risk of unintended consequences or discriminatory outcomes.
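As one example of what a bias detection mechanism might compute, the sketch below measures the demographic parity difference: the gap between the highest and lowest positive-prediction rates across groups. It is only one of several fairness metrics, and the predictions and group labels here are made-up illustrative data.

```python
from collections import defaultdict


def positive_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group positive rates (0 means parity)."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical binary predictions for two groups of four people each.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap = demographic_parity_difference(preds, groups)
print(positive_rates(preds, groups), gap)
```

A monitoring job could compute this gap on each batch of predictions and raise an alert when it exceeds a threshold agreed with compliance or ethics reviewers.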

By optimizing compute allocation and storage utilization and by automating redundant tasks, infrastructure reduces the total cost of AI ownership. Cloud-native AI infrastructure also supports dynamic scaling based on usage.
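Dynamic scaling based on usage can be sketched as a proportional rule: scale the replica count by the ratio of observed to target utilization, then clamp it to configured bounds. This has the same shape as the rule documented for the Kubernetes Horizontal Pod Autoscaler, but the function below is a simplified illustration with hypothetical parameter names, not that system's implementation.

```python
import math


def desired_replicas(current_replicas, current_util_pct, target_util_pct,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling: ceil(current * observed / target), clamped to bounds.

    Utilization values are integer percentages to avoid float rounding surprises.
    """
    if target_util_pct <= 0:
        raise ValueError("target utilization must be positive")
    raw = math.ceil(current_replicas * current_util_pct / target_util_pct)
    return max(min_replicas, min(max_replicas, raw))


scale_up = desired_replicas(4, 90, 60)     # overloaded: add replicas
scale_down = desired_replicas(4, 30, 60)   # underused: remove replicas
capped = desired_replicas(8, 95, 50)       # demand exceeds the configured maximum
print(scale_up, scale_down, capped)
```

The upper bound acts as a cost guardrail: even under heavy load, spending cannot grow past what `max_replicas` allows.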

Setting up on-premises AI infrastructure is capital-intensive, requiring investment in specialized hardware (e.g., NVIDIA A100 GPUs), cooling systems, and network architecture. Even cloud-native infrastructure can accrue significant costs if not optimized properly.

Integrating the various components, including data pipelines, model training environments, CI/CD workflows, and monitoring tools, requires deep expertise. Misalignment among teams (Data Engineering, DevOps, Data Science) can lead to siloed systems and inefficiencies.

AI systems often handle sensitive data (PII, financial, or health records). Infrastructure must comply with regulations such as GDPR, HIPAA, and AI-specific laws (e.g., the EU AI Act). Encryption, access control, audit trails, and data masking are essential.
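Data masking can take many forms. The sketch below shows two common ones under simplifying assumptions: replacing email addresses in free text with a placeholder, and pseudonymizing an identifier with a keyed hash so records can still be joined without exposing the raw ID. The record fields, the secret key, and the deliberately simple email pattern are all hypothetical; a real deployment would need a vetted PII detector and proper key management.

```python
import hashlib
import hmac
import re

# Intentionally simple pattern for illustration; it will not catch every email form.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(value: str, key: str) -> str:
    """Map an identifier to a stable pseudonym using HMAC-SHA256 with a secret key."""
    digest = hmac.new(key.encode("utf-8"), value.encode("utf-8"), hashlib.sha256)
    return "user_" + digest.hexdigest()[:10]


def mask_emails(text: str) -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_RE.sub("[EMAIL]", text)


def mask_record(record: dict, key: str) -> dict:
    """Return a copy of the record that is safer to use in training pipelines."""
    return {
        "patient_id": pseudonymize(record["patient_id"], key),
        "note": mask_emails(record["note"]),
    }


record = {"patient_id": "P-1029", "note": "Follow up with jane.doe@example.com next week."}
masked = mask_record(record, key="demo-secret-key")
print(masked)
```

Pseudonymized data is generally still treated as personal data under regulations such as GDPR, so masking of this kind complements encryption and access control rather than replacing them.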

Model development doesn't end at deployment. Drift, adversarial attacks, and data changes necessitate continuous monitoring, retraining, and updates. Maintaining version control and traceability is a persistent challenge in many organizations.
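One widely used way to quantify drift in a single feature is the Population Stability Index (PSI), which compares the binned distribution of live data against a training-time baseline. The following is a minimal sketch, assuming fixed bin edges and small made-up samples; values outside the edges are ignored, and the smoothing constant `eps` is an arbitrary choice to avoid taking the log of zero.

```python
import math


def histogram(values, edges):
    """Count values per bin; bins are [lo, hi) except the last, which is [lo, hi]."""
    counts = [0] * (len(edges) - 1)
    last = len(edges) - 2
    for v in values:
        for i in range(len(edges) - 1):
            in_bin = edges[i] <= v < edges[i + 1]
            if in_bin or (i == last and v == edges[i + 1]):
                counts[i] += 1
                break
    return counts


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    e_counts = histogram(expected, edges)
    a_counts = histogram(actual, edges)
    e_total, a_total = sum(e_counts), sum(a_counts)
    if e_total == 0 or a_total == 0:
        raise ValueError("both samples need at least one value inside the bin edges")
    score = 0.0
    for e, a in zip(e_counts, a_counts):
        e_frac = max(e / e_total, eps)  # floor empty bins to keep log() defined
        a_frac = max(a / a_total, eps)
        score += (a_frac - e_frac) * math.log(a_frac / e_frac)
    return score


edges = [0.0, 0.25, 0.5, 0.75, 1.0]
baseline = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]   # hypothetical training data
shifted = [0.6, 0.7, 0.7, 0.8, 0.8, 0.9, 0.9, 0.9]    # hypothetical live data

no_drift = psi(baseline, baseline, edges)
drift = psi(baseline, shifted, edges)
print(no_drift, drift)
```

A monitoring service could compute this score on a schedule and trigger retraining when it crosses a chosen threshold; the threshold itself is a judgment call and should be tuned per feature.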

Building AI infrastructure requires cross-disciplinary expertise across software engineering, DevOps, data engineering, cybersecurity, and AI/ML. This talent is in high demand and short supply, often making staffing a bottleneck.

Cloud providers offer end-to-end AI infrastructure platforms (e.g., AWS SageMaker, Azure ML, Google Vertex AI), lowering the barrier to entry and enabling smaller organizations to leverage enterprise-grade tooling.

Open-source tools and ecosystems like Hugging Face, ONNX, and OpenVINO are giving the open-source community access to production-grade infrastructure. This democratization accelerates innovation and community-driven development.

Energy-efficient hardware (e.g., ARM-based chips), carbon-aware scheduling, and model compression techniques are gaining attention as ways to reduce the environmental impact of AI workloads.
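Model compression covers several techniques; one of the simplest to illustrate is post-training quantization, which stores weights as 8-bit integers plus a scale factor instead of 32-bit floats. The sketch below uses symmetric per-tensor quantization on a made-up weight list. It is a toy illustration, not the scheme used by any specific toolkit.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127] plus a scale."""
    if not weights:
        raise ValueError("weights must not be empty")
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.03, 1.0]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is bounded by half of one quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Storing one byte per weight instead of four cuts memory and bandwidth by roughly a factor of four, at the cost of the small reconstruction error measured by `max_error`.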

Emerging architectures enable AI training without centralizing data, using techniques like federated learning, differential privacy, and homomorphic encryption. This shift is critical in the healthcare, finance, and defense sectors.
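The core aggregation step of federated learning can be sketched as a weighted average: each client trains locally and sends only its model weights, which the server combines in proportion to each client's dataset size. The example below assumes two hypothetical clients with tiny two-parameter models, and it omits the local training loop, differential privacy noise, and encryption that a real system would add.

```python
def federated_average(client_weights, client_sizes):
    """Combine client model weights, weighting each client by its sample count."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Hypothetical locally trained weights; raw training data never leaves the clients.
clients = [[1.0, 2.0], [3.0, 4.0]]
sizes = [100, 300]

global_weights = federated_average(clients, sizes)
print(global_weights)
```

The second client contributes three times as many samples, so the global model lands closer to its weights than to the first client's.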

As quantum computing matures, hybrid architectures that offload certain workloads to quantum processors may revolutionize AI infrastructure, particularly for optimization and simulation tasks.

AI infrastructure is the backbone of modern AI systems, enabling not just the training and deployment of models but also ensuring scalability, efficiency, ethics, and resilience. As AI continues to evolve, so must its supporting infrastructure.

From on-premises GPU clusters to serverless inference, from manual pipelines to automated MLOps, the transformation is both rapid and relentless.

Organizations that invest strategically in AI infrastructure today are laying the groundwork for tomorrow's intelligent, ethical, and high-impact solutions.

- Hugging Face. (n.d.). Hugging Face. https://huggingface.co/

- Arm. (n.d.). Silicon IP: CPU products. https://www.arm.com/products/silicon-ip-cpu

- ONNX. (n.d.). Open Neural Network Exchange. https://onnx.ai/

- Intel Corporation. (2025). OpenVINO™ documentation, Version 2025. https://docs.openvino.ai/2025/index.html

- Google Cloud. (n.d.). Vertex AI. https://cloud.google.com/vertex-ai

- Future of Life Institute. (n.d.). EU Artificial Intelligence Act: Up-to-date developments and analyses of the EU AI Act. https://artificialintelligenceact.eu/

- HumanSignal, Inc. (n.d.). Label Studio. https://labelstud.io/

- Kreutz, D., Ramos, F. M. V., Verissimo, P. E., Rothenberg, C. E., Azodolmolky, S., & Uhlig, S. (2015). A survey on software-defined networking. IET Networks, 4(2), 1–15. https://doi.org/10.1049/iet-net.2018.5082

- Ajayi, D. (2020, December 3). How BERT and GPT models change the game for NLP. IBM. https://www.ibm.com/think/insights/how-bert-and-gpt-models-change-the-game-for-nlp