Wish to know what attracts me to soundscape evaluation?

It’s a discipline that mixes science, creativity, and exploration in a approach few others do. To begin with, your laboratory is wherever your toes take you — a forest path, a metropolis park, or a distant mountain path can all change into areas for scientific discovery and acoustic investigation. Secondly, monitoring a selected geographic space is all about creativity. Innovation is on the coronary heart of environmental audio analysis, whether or not it’s rigging up a customized system, hiding sensors in tree canopies, or utilizing solar energy for off-grid setups. Lastly, the sheer quantity of knowledge is actually unbelievable, and as we all know, in spatial evaluation, all strategies are truthful recreation. From hours of animal calls to the delicate hum of city equipment, the acoustic knowledge collected might be huge and complicated, and that opens the door to utilizing every thing from deep studying to geographical data methods (GIS) in making sense of all of it.

After my earlier adventures with soundscape analysis of one of Poland’s rivers, I made a decision to lift the bar and design and implement an answer able to analysing soundscapes in actual time. On this weblog publish, you’ll discover a description of the proposed technique, together with some code that powers your entire course of, primarily utilizing an Audio Spectrogram Transformer (AST) for sound classification.

Strategies

Setup

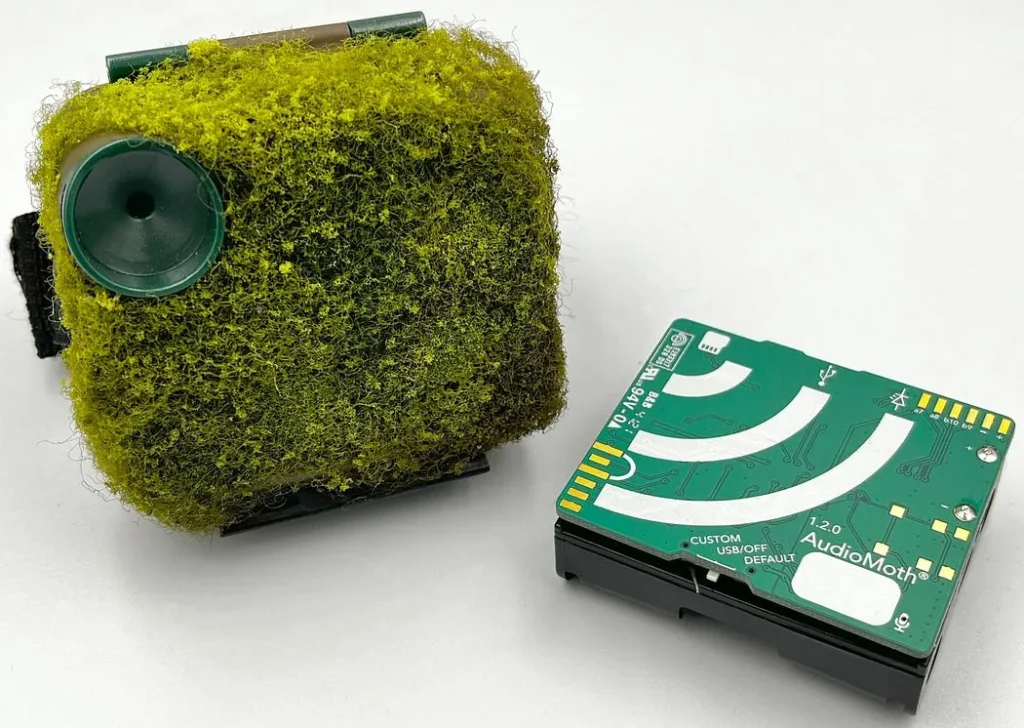

There are numerous the reason why, on this specific case, I selected to make use of a mixture of Raspberry Pi 4 and AudioMoth. Consider me, I examined a variety of gadgets — from much less power-hungry fashions of the Raspberry Pi household, by varied Arduino variations, together with the Portenta, all the best way to the Jetson Nano. And that was just the start. Choosing the proper microphone turned out to be much more sophisticated.

Finally, I went with the Pi 4 B (4GB RAM) due to its strong efficiency and comparatively low energy consumption (~700mAh when operating my code). Moreover, pairing it with the AudioMoth in USB microphone mode gave me quite a lot of flexibility throughout prototyping. AudioMoth is a robust system with a wealth of configuration choices, e.g. sampling fee from 8 kHz to gorgeous 384 kHz. I’ve a powerful feeling that — in the long term — this may show to be an ideal selection for my soundscape research.

Capturing sound

Capturing audio from a USB microphone utilizing Python turned out to be surprisingly troublesome. After fighting varied libraries for some time, I made a decision to fall again on the great outdated Linux arecord. The entire sound seize mechanism is encapsulated with the next command:

arecord -d 1 -D plughw:0,7 -f S16_LE -r 16000 -c 1 -q /tmp/audio.wavI’m intentionally utilizing a plug-in system to allow computerized conversion in case I want to introduce any modifications to the USB microphone configuration. AST is run on 16 kHz samples, so the recording and AudioMoth sampling are set to this worth.

Take note of the generator within the code. It’s essential that the system repeatedly captures audio on the time intervals I specify. I aimed to retailer solely the latest audio pattern on the system and discard it after the classification. This strategy will probably be particularly helpful later throughout larger-scale research in city areas, because it helps guarantee individuals’s privateness and aligns with GDPR compliance.

import asyncio

import re

import subprocess

from tempfile import TemporaryDirectory

from typing import Any, AsyncGenerator

import librosa

import numpy as np

class AudioDevice:

def __init__(

self,

identify: str,

channels: int,

sampling_rate: int,

format: str,

):

self.identify = self._match_device(identify)

self.channels = channels

self.sampling_rate = sampling_rate

self.format = format

@staticmethod

def _match_device(identify: str):

traces = subprocess.check_output(['arecord', '-l'], textual content=True).splitlines()

gadgets = [

f'plughw:{m.group(1)},{m.group(2)}'

for line in lines

if name.lower() in line.lower()

if (m := re.search(r'card (d+):.*device (d+):', line))

]

if len(gadgets) == 0:

increase ValueError(f'No gadgets discovered matching `{identify}`')

if len(gadgets) > 1:

increase ValueError(f'A number of gadgets discovered matching `{identify}` -> {gadgets}')

return gadgets[0]

async def continuous_capture(

self,

sample_duration: int = 1,

capture_delay: int = 0,

) -> AsyncGenerator[np.ndarray, Any]:

with TemporaryDirectory() as temp_dir:

temp_file = f'{temp_dir}/audio.wav'

command = (

f'arecord '

f'-d {sample_duration} '

f'-D {self.identify} '

f'-f {self.format} '

f'-r {self.sampling_rate} '

f'-c {self.channels} '

f'-q '

f'{temp_file}'

)

whereas True:

subprocess.check_call(command, shell=True)

knowledge, sr = librosa.load(

temp_file,

sr=self.sampling_rate,

)

await asyncio.sleep(capture_delay)

yield knowledgeClassification

Now for probably the most thrilling half.

Utilizing the Audio Spectrogram Transformer (AST) and the superb HuggingFace ecosystem, we are able to effectively analyse audio and classify detected segments into over 500 classes.

Word that I’ve ready the system to assist varied pre-trained fashions. By default, I exploit MIT/ast-finetuned-audioset-10–10–0.4593, because it delivers the perfect outcomes and runs nicely on the Raspberry Pi 4. Nonetheless, onnx-community/ast-finetuned-audioset-10–10–0.4593-ONNX can also be value exploring — particularly its quantised model, which requires much less reminiscence and serves the inference outcomes faster.

You could discover that I’m not limiting the mannequin to a single classification label, and that’s intentional. As a substitute of assuming that just one sound supply is current at any given time, I apply a sigmoid operate to the mannequin’s logits to acquire unbiased possibilities for every class. This enables the mannequin to specific confidence in a number of labels concurrently, which is essential for real-world soundscapes the place overlapping sources — like birds, wind, and distant site visitors — typically happen collectively. Taking the high 5 outcomes ensures that the system captures the most probably sound occasions within the pattern with out forcing a winner-takes-all resolution.

from pathlib import Path

from typing import Non-obligatory

import numpy as np

import pandas as pd

import torch

from optimum.onnxruntime import ORTModelForAudioClassification

from transformers import AutoFeatureExtractor, ASTForAudioClassification

class AudioClassifier:

def __init__(self, pretrained_ast: str, pretrained_ast_file_name: Non-obligatory[str] = None):

if pretrained_ast_file_name and Path(pretrained_ast_file_name).suffix == '.onnx':

self.mannequin = ORTModelForAudioClassification.from_pretrained(

pretrained_ast,

subfolder='onnx',

file_name=pretrained_ast_file_name,

)

self.feature_extractor = AutoFeatureExtractor.from_pretrained(

pretrained_ast,

file_name=pretrained_ast_file_name,

)

else:

self.mannequin = ASTForAudioClassification.from_pretrained(pretrained_ast)

self.feature_extractor = AutoFeatureExtractor.from_pretrained(pretrained_ast)

self.sampling_rate = self.feature_extractor.sampling_rate

async def predict(

self,

audio: np.array,

top_k: int = 5,

) -> pd.DataFrame:

with torch.no_grad():

inputs = self.feature_extractor(

audio,

sampling_rate=self.sampling_rate,

return_tensors='pt',

)

logits = self.mannequin(**inputs).logits[0]

proba = torch.sigmoid(logits)

top_k_indices = torch.argsort(proba)[-top_k:].flip(dims=(0,)).tolist()

return pd.DataFrame(

{

'label': [self.model.config.id2label[i] for i in top_k_indices],

'rating': proba[top_k_indices],

}

)To run the ONNX model of the mannequin, it’s essential add Optimum to your dependencies.

Sound strain stage

Together with the audio classification, I seize data on sound strain stage. This strategy not solely identifies what made the sound but in addition good points perception into how strongly every sound was current. In that approach, the mannequin captures a richer, extra sensible illustration of the acoustic scene and may ultimately be used to detect finer-grained noise air pollution data.

import numpy as np

from maad.spl import wav2dBSPL

from maad.util import mean_dB

async def calculate_sound_pressure_level(audio: np.ndarray, achieve=10 + 15, sensitivity=-18) -> np.ndarray:

x = wav2dBSPL(audio, achieve=achieve, sensitivity=sensitivity, Vadc=1.25)

return mean_dB(x, axis=0)The achieve (preamp + amp), sensitivity (dB/V), and Vadc (V) are set primarily for AudioMoth and confirmed experimentally. In case you are utilizing a distinct system, you will need to establish these values by referring to the technical specification.

Storage

Knowledge from every sensor is synchronised with a PostgreSQL database each 30 seconds. The present city soundscape monitor prototype makes use of an Ethernet connection; due to this fact, I’m not restricted by way of community load. The system for extra distant areas will synchronise the information every hour utilizing a GSM connection.

label rating system sync_id sync_time

Hum 0.43894055 yor 9531b89a-4b38-4a43-946b-43ae2f704961 2025-05-26 14:57:49.104271

Mains hum 0.3894045 yor 9531b89a-4b38-4a43-946b-43ae2f704961 2025-05-26 14:57:49.104271

Static 0.06389702 yor 9531b89a-4b38-4a43-946b-43ae2f704961 2025-05-26 14:57:49.104271

Buzz 0.047603738 yor 9531b89a-4b38-4a43-946b-43ae2f704961 2025-05-26 14:57:49.104271

White noise 0.03204195 yor 9531b89a-4b38-4a43-946b-43ae2f704961 2025-05-26 14:57:49.104271

Bee, wasp, and many others. 0.40881288 yor 8477e05c-0b52-41b2-b5e9-727a01b9ec87 2025-05-26 14:58:40.641071

Fly, housefly 0.38868183 yor 8477e05c-0b52-41b2-b5e9-727a01b9ec87 2025-05-26 14:58:40.641071

Insect 0.35616025 yor 8477e05c-0b52-41b2-b5e9-727a01b9ec87 2025-05-26 14:58:40.641071

Speech 0.23579548 yor 8477e05c-0b52-41b2-b5e9-727a01b9ec87 2025-05-26 14:58:40.641071

Buzz 0.105577625 yor 8477e05c-0b52-41b2-b5e9-727a01b9ec87 2025-05-26 14:58:40.641071Outcomes

A separate utility, constructed utilizing Streamlit and Plotly, accesses this knowledge. At the moment, it shows details about the system’s location, temporal SPL (sound strain stage), recognized sound lessons, and a spread of acoustic indices.

And now we’re good to go. The plan is to increase the sensor community and attain round 20 gadgets scattered round a number of locations in my city. Extra details about a bigger space sensor deployment will probably be obtainable quickly.

Furthermore, I’m gathering knowledge from a deployed sensor and plan to share the information package deal, dashboard, and evaluation in an upcoming weblog publish. I’ll use an attention-grabbing strategy that warrants a deeper dive into audio classification. The primary thought is to match completely different sound strain ranges to the detected audio lessons. I hope to discover a higher approach of describing noise air pollution. So keep tuned for a extra detailed breakdown quickly.

Within the meantime, you may learn the preliminary paper on my soundscapes research (headphones are compulsory).

This publish was proofread and edited utilizing Grammarly to enhance grammar and readability.