If you’ve used LLMs to code, debug, or explore new tools across multiple sessions, you’ve probably run into the same frustration I have: the model doesn’t remember anything. Every prompt feels like a clean slate. Even with prompt tuning or retrieval-based hacks, the lack of continuity shows up fast.

The root problem? LLMs don’t have persistent memory. Most of what we call “memory” in current setups is just temporary context. For AI to be truly useful in long-term workflows, especially ones that evolve over time, it needs to learn and adapt, not just react. That’s where something like SEAL (Self-Adapting Language Models), proposed by MIT researchers, starts getting interesting.

Current LLMs (like Claude, Gemini, GPT, etc.) are powerful but static. They’re trained once on massive datasets, and that knowledge is frozen after training. If you want them to incorporate something new (say, a framework update or an edge-case behavior), your options aren’t great:

- Finetuning is expensive and impractical for most use cases.

- Search-based retrieval helps, but doesn’t retain anything.

- In-context learning is limited by prompt length and doesn’t “stick.”

Compare this with humans: we take notes, rephrase, revisit, and retain. We adapt naturally. SEAL tries to mimic that process inside the model itself.

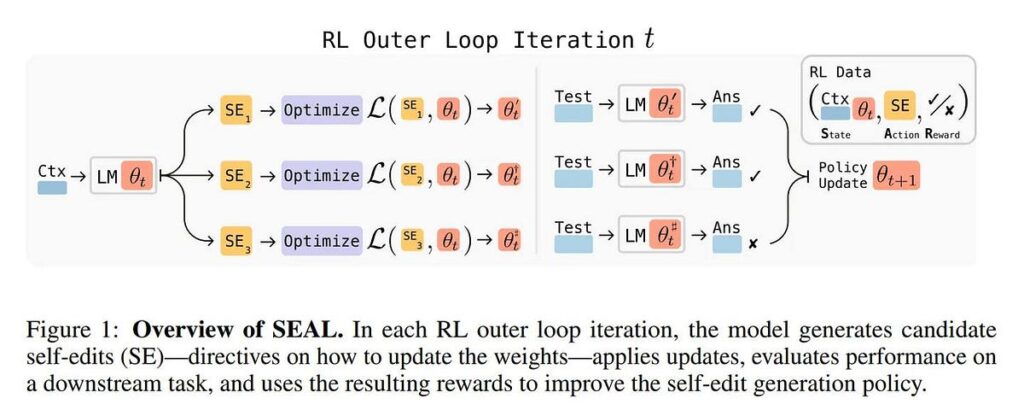

SEAL works by letting the model generate “self-edits” (rephrasings, examples, summaries) and then learn from them through reinforcement learning. It’s like letting the model create its own study material and figure out what actually helps it improve.

There are two loops involved:

- Inner loop: The model takes a task, produces a self-edit (e.g., a reworded fact or distilled key points), and updates its own weights by finetuning on that edit.

- Outer loop: It evaluates how much that update improved performance on the task, then reinforces the edits that helped and drops the rest.
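To make the two-loop structure concrete, here is a toy simulation. It is a minimal sketch under heavy assumptions, not SEAL’s actual implementation: the “model” is just a set of known facts, a “finetuning step” is a set union, and the self-edit policy is a weighted choice among three hypothetical strategies (`restate`, `summarize`, `implications`) instead of a language model writing text. What it does show is the control flow: sample self-edits, apply each as a temporary update, score the updated model on downstream questions, and reinforce only the edits that paid off.

```python
import random
from collections import Counter

# Hypothetical self-edit strategies (not from SEAL): each turns a passage
# (a list of facts) into training material; they differ in how much they keep.
def generate_self_edit(strategy: str, passage: list[str], rng: random.Random) -> list[str]:
    if strategy == "restate":        # keep a single sentence
        return passage[:1]
    if strategy == "summarize":      # keep a random half of the facts
        return rng.sample(passage, k=max(1, len(passage) // 2))
    if strategy == "implications":   # spell out every fact
        return list(passage)
    raise ValueError(f"unknown strategy: {strategy}")

def inner_update(knowledge: frozenset, self_edit: list[str]) -> frozenset:
    # Inner loop: a stand-in for a finetuning step; the "weights" absorb the edit.
    return knowledge | frozenset(self_edit)

def score(knowledge: frozenset, questions: list[str]) -> float:
    # Downstream evaluation: fraction of questions the updated model can answer.
    return sum(q in knowledge for q in questions) / len(questions)

def outer_loop(tasks, strategies, rounds=3, samples=4, seed=0):
    rng = random.Random(seed)
    policy = {s: 1.0 for s in strategies}  # sampling weight per strategy
    base = frozenset()                      # inner-loop updates are scored, then discarded
    for _ in range(rounds):
        kept = Counter()
        for passage, questions in tasks:
            for _ in range(samples):
                strategy = rng.choices(strategies, weights=[policy[s] for s in strategies])[0]
                updated = inner_update(base, generate_self_edit(strategy, passage, rng))
                # Binary reward: keep the edit only if the updated model answers everything.
                if score(updated, questions) == 1.0:
                    kept[strategy] += 1
        # Outer loop: reinforce strategies whose edits helped; the rest gain nothing.
        for s in strategies:
            policy[s] += kept[s]
    return policy

if __name__ == "__main__":
    tasks = [
        (["A is B", "B is C", "C is D"], ["A is B", "C is D"]),
        (["X has Y", "Y has Z"], ["X has Y", "Y has Z"]),
    ]
    print(outer_loop(tasks, ["restate", "summarize", "implications"]))
```

Over a few rounds, only `implications` edits earn a reward here, so its sampling weight grows while the others stay flat. One simplification worth calling out: in SEAL the same model both writes the self-edits and gets reinforced, whereas this toy keeps the policy in a separate dictionary of weights.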

This makes the model iteratively better, much like how we learn by rewriting notes or solving variations of the same problem.

In empirical tests, SEAL enabled smaller models to outperform even setups using GPT-4.1-generated data. That’s impressive. It showed gains in:

- Learning new facts from raw text (e.g., improving on SQuAD questions without the passage in context).

- Adapting to novel reasoning tasks from just a few examples (on the ARC dataset).

For developers, that could mean LLMs that stay current with changing APIs or evolving frameworks, without constant manual prompting or reliance on external tools. For researchers and educators, it opens up the idea of LLMs that evolve with use.