With the dataset properly split and stratified, the next step was to identify the most relevant predictors for modeling accident severity.

Effective feature selection is critical in this context: not only to improve model performance and reduce overfitting, but also to ensure that the resulting insights are interpretable and actionable for real-world road safety applications.

The feature selection process in this project involved three key components:

- Predictive Power Evaluation: The Information Value (IV) of each variable was calculated using supervised optimal binning, implemented via the OptBinning Python library (Navas-Palencia, 2022). This method leverages convex optimization to generate binning schemes that best separate the binary outcome classes. IV scores served as a quantitative measure of each variable's predictive power in distinguishing fatal from non-fatal outcomes.

- Redundancy & Multicollinearity Evaluation: To address redundancy and multicollinearity, Pearson correlation coefficients were computed for numerical features, while Normalized Mutual Information (NMI) was used for categorical ones. Where two variables were highly correlated or dependent, one of them was removed, with priority for retention given to the variable offering stronger predictive value and better interpretability.

- Forward Stepwise Selection: As a final refinement step, a forward stepwise feature selection process was applied. Guided by ROC-AUC performance, this approach incrementally added variables that contributed meaningfully to the model's discriminative ability, yielding a compact and effective feature set for subsequent modeling.

Part 1: Predictive Power Evaluation

The first phase of feature selection focused on evaluating the individual predictive power of each feature using Information Value (IV), a widely used metric in credit risk modeling. IV quantifies how well a variable separates "good" and "bad" outcomes based on the distribution of binned values, and is especially useful when combined with the Weight of Evidence (WOE) transformation for interpretability.
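For reference, the standard credit-scoring definitions of WOE and IV are shown below. The notation is introduced here for illustration and is not taken from the original analysis: for a variable split into k bins, g_i and b_i are the numbers of non-fatal ("good") and fatal ("bad") cases in bin i, and G and B are the class totals.

```latex
\mathrm{WOE}_i = \ln\!\left(\frac{g_i / G}{b_i / B}\right),
\qquad
\mathrm{IV} = \sum_{i=1}^{k} \left(\frac{g_i}{G} - \frac{b_i}{B}\right)\mathrm{WOE}_i
```

Each term of the sum is non-negative, because the difference and the logarithm always share the same sign, so IV is zero only when every bin has the same class proportions as the whole dataset. Swapping the roles of "good" and "bad" flips the sign of WOE but leaves IV unchanged.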

IV was computed for each of the initial 51 features in the dataset using supervised optimal binning, a technique that partitions variables in a way that maximizes class separation. Based on this analysis (Table 2), 41 out of 51 features showed IV values greater than 0.02, indicating at least weak predictive power. These were retained for the next stage of analysis.

The remaining 10 features, with IV values at or below 0.02, were excluded as non-predictive, consistent with the thresholds recommended by Siddiqi (2006). This helped reduce noise and focus the model on the features most likely to contribute meaningful insights.
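A minimal sketch of the IV computation and the 0.02 cut-off is given below, assuming a numeric feature matrix and a binary target coded 1 for fatal. To stay dependency-free it swaps in plain quantile bins instead of OptBinning's supervised optimal binning, so its IV values will generally differ from those reported in Table 2. The function names, `n_bins`, and the `eps` smoothing are illustrative choices, not part of the original pipeline.

```python
import numpy as np


def information_value(x, y, n_bins=5, eps=1e-6):
    """IV of a numeric feature x against a binary target y (1 = fatal / "bad").

    Uses simple quantile bins as a stand-in for supervised optimal binning.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=int)
    # Quantile edges; np.unique drops duplicate edges for low-cardinality features
    edges = np.unique(np.quantile(x, np.linspace(0, 1, n_bins + 1)))
    # Assign each value to a bin using only the interior edges
    bins = np.digitize(x, edges[1:-1], right=True)
    n_bad_total = y.sum()
    n_good_total = len(y) - n_bad_total
    iv = 0.0
    for b in np.unique(bins):
        in_bin = bins == b
        bad = y[in_bin].sum()
        good = in_bin.sum() - bad
        # eps guards against log(0) in bins that contain only one class
        dist_good = max(good / n_good_total, eps)
        dist_bad = max(bad / n_bad_total, eps)
        woe = np.log(dist_good / dist_bad)
        iv += (dist_good - dist_bad) * woe
    return float(iv)


def filter_by_iv(X, y, names, threshold=0.02):
    """Keep features whose IV is strictly above the threshold (Siddiqi's 0.02)."""
    ivs = {name: information_value(X[:, j], y) for j, name in enumerate(names)}
    kept = [name for name in names if ivs[name] > threshold]
    return kept, ivs
```

With OptBinning itself, the per-variable IV would instead be read from the binning table of a fitted `OptimalBinning` object.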

Part 2: Redundancy & Multicollinearity Evaluation

After filtering based on Information Value, the next step was to remove redundant features that could introduce multicollinearity, a problem that can distort model interpretation and inflate the variance of parameter estimates.

This phase treated numerical and categorical variables separately, using statistical measures suited to their data types:

- For numerical features, pairwise Pearson correlation coefficients were computed. Pairs with an absolute correlation |r| > 0.7 were flagged as highly correlated, a common threshold in predictive modeling practice.

- For categorical features, inter-variable dependence was assessed using Normalized Mutual Information (NMI), which captures any form of statistical dependence rather than only linear association. A threshold of NMI > 0.4 was used to identify strongly dependent pairs.

In both cases, when a highly dependent pair was identified, the feature with the lower IV score was removed, preserving the variables with higher predictive strength.
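One way to apply the IV-priority rule is a greedy pass, sketched below with NumPy only: features are visited in descending IV order, and a feature is kept only if its dependence on every already-kept feature is at or below the threshold (|r| = 0.7 for numerical features, NMI = 0.4 for categorical ones). This greedy variant is an assumption, since the text does not say how chains of dependent pairs were resolved, and all helper names are hypothetical. NMI here uses arithmetic-mean normalization, which is also the default of scikit-learn's `normalized_mutual_info_score`.

```python
import numpy as np


def entropy(labels):
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return -np.sum(p * np.log(p))


def mutual_information(a, b):
    # Build the joint distribution of the two categorical variables
    _, a_idx = np.unique(a, return_inverse=True)
    _, b_idx = np.unique(b, return_inverse=True)
    joint = np.zeros((a_idx.max() + 1, b_idx.max() + 1))
    np.add.at(joint, (a_idx, b_idx), 1)
    joint /= joint.sum()
    pa = joint.sum(axis=1, keepdims=True)
    pb = joint.sum(axis=0, keepdims=True)
    nz = joint > 0  # skip empty cells, where p * log(p) contributes 0
    return np.sum(joint[nz] * np.log(joint[nz] / (pa @ pb)[nz]))


def nmi(a, b):
    # Arithmetic-mean normalization; constant inputs are simply mapped to 0 here
    h = (entropy(a) + entropy(b)) / 2
    return 0.0 if h == 0 else float(mutual_information(a, b) / h)


def abs_pearson(a, b):
    return float(abs(np.corrcoef(a, b)[0, 1]))


def prune_redundant(features, iv, dependence, threshold):
    """Greedy IV-priority pruning.

    features: dict name -> 1-D array; iv: dict name -> IV score;
    dependence: callable(a, b) -> score; scores above threshold mean redundant.
    """
    kept = []
    # Strongest IV first: a feature is dropped if it is too dependent
    # on any stronger feature that has already been kept
    for name in sorted(features, key=iv.get, reverse=True):
        if all(dependence(features[name], features[k]) <= threshold for k in kept):
            kept.append(name)
    return kept
```

The same `prune_redundant` function serves both data types; only the dependence measure and threshold change.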

As a result of this process:

- 2 out of 17 numerical features were removed due to high Pearson correlation (see Figure 1).

- 6 out of 24 categorical features were excluded based on high NMI (see Figure 2).

This step ensured that the final feature set was not only predictive but also free of redundancy, helping to produce more stable and interpretable models.

Part 3: Forward Stepwise Feature Selection

To further refine the feature set, a forward stepwise selection strategy was employed, guided by model performance as measured by ROC-AUC. The goal of this phase was to identify a subset of features that meaningfully improved the model's ability to distinguish between fatal and non-fatal outcomes.

The procedure used 5-fold stratified cross-validation on the training dataset, ensuring that the proportion of fatal ("bad") and non-fatal ("good") cases remained consistent in each fold. Within each fold, features were added one at a time to a logistic regression model, in descending order of their Information Value (IV).

After each feature was added, the model was retrained and evaluated on the validation split, and the resulting ROC-AUC was recorded. This process was repeated across all 5 folds.
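The cross-validated AUC trajectory can be sketched as follows, assuming the feature columns are already ordered by descending IV. To keep the example self-contained it hand-rolls stratified folds, a gradient-descent logistic regression, and a rank-based AUC; in practice scikit-learn's `StratifiedKFold`, `LogisticRegression`, and `roc_auc_score` would be the usual choices. The learning rate, iteration count, L2 penalty, and per-fold standardization are illustrative assumptions, not settings reported in this analysis.

```python
import numpy as np


def stratified_folds(y, k=5, seed=0):
    """Assign each sample a fold id so every fold keeps the class proportions."""
    rng = np.random.default_rng(seed)
    fold = np.empty(len(y), dtype=int)
    for cls in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == cls))
        # Deal each class's shuffled indices round-robin across the k folds
        fold[idx] = np.arange(len(idx)) % k
    return fold


def fit_logistic(X, y, lr=0.1, n_iter=500, l2=1e-3):
    """Batch gradient descent on L2-penalized log-loss (illustrative, not tuned)."""
    Xb = np.column_stack([np.ones(len(X)), X])  # prepend an intercept column
    w = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-Xb @ w))
        penalty = l2 * np.r_[0.0, w[1:]]  # the intercept is not penalized
        w -= lr * (Xb.T @ (p - y) / len(y) + penalty)
    return w


def predict_proba(w, X):
    return 1.0 / (1.0 + np.exp(-(w[0] + X @ w[1:])))


def average_ranks(a):
    """1-based ranks, with tied values sharing their average rank."""
    sorter = np.argsort(a, kind="mergesort")
    inv = np.empty_like(sorter)
    inv[sorter] = np.arange(len(a))
    a_sorted = a[sorter]
    is_new = np.r_[True, a_sorted[1:] != a_sorted[:-1]]
    group = is_new.cumsum()[inv]  # 1-based tie-group id of each element
    bounds = np.r_[np.flatnonzero(is_new), len(a)]
    return 0.5 * (bounds[group - 1] + 1 + bounds[group])


def roc_auc(y, score):
    """ROC-AUC via the Mann-Whitney U statistic."""
    y = np.asarray(y)
    ranks = average_ranks(np.asarray(score, dtype=float))
    n_pos = int(y.sum())
    n_neg = len(y) - n_pos
    return (ranks[y == 1].sum() - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


def auc_trajectory(X, y, ordered_cols, k=5, seed=0):
    """Mean validation AUC across folds after adding each column in order."""
    fold = stratified_folds(y, k, seed)
    aucs = np.zeros((k, len(ordered_cols)))
    for f in range(k):
        tr, va = fold != f, fold == f
        for step in range(1, len(ordered_cols) + 1):
            cols = list(ordered_cols[:step])
            # Standardize with training-fold statistics only, to avoid leakage
            mu = X[tr][:, cols].mean(axis=0)
            sd = X[tr][:, cols].std(axis=0) + 1e-12
            w = fit_logistic((X[tr][:, cols] - mu) / sd, y[tr])
            scores = predict_proba(w, (X[va][:, cols] - mu) / sd)
            aucs[f, step - 1] = roc_auc(y[va], scores)
    return aucs.mean(axis=0)
```

The returned array has one mean AUC per step, which is exactly what a trajectory plot needs on its y-axis.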

To evaluate the results, an AUC trajectory plot was generated (Figure 3), showing the average ROC-AUC score across folds as features were incrementally added. This plot helped visualize how each feature influenced the model's performance as the feature set grew.

Rather than relying strictly on the order of addition, final feature selection was guided by visual inspection of the AUC trajectory. Features that produced notable upward shifts in performance were prioritized, allowing a more flexible, judgment-driven inclusion process that balanced both early- and late-stage predictors.
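The selection here relied on visual inspection. A simple numeric aid, which is not part of the original method, is to list the marginal gain in mean AUC contributed by each added feature. The `min_gain` cut-off below is a hypothetical value that would need tuning, and the first feature's gain is measured against a no-skill AUC of 0.5.

```python
import numpy as np


def marginal_gains(mean_auc, names, min_gain=0.005):
    """Return (name, gain) for features whose addition raised mean CV AUC by >= min_gain."""
    mean_auc = np.asarray(mean_auc, dtype=float)
    # Prepend the no-skill baseline so the first feature also gets a gain
    gains = np.diff(np.r_[0.5, mean_auc])
    return [(name, float(g)) for name, g in zip(names, gains) if g >= min_gain]
```

Such a list can only shortlist candidates; small gains late in the trajectory may still be worth keeping for interpretability, which is where judgment comes in.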

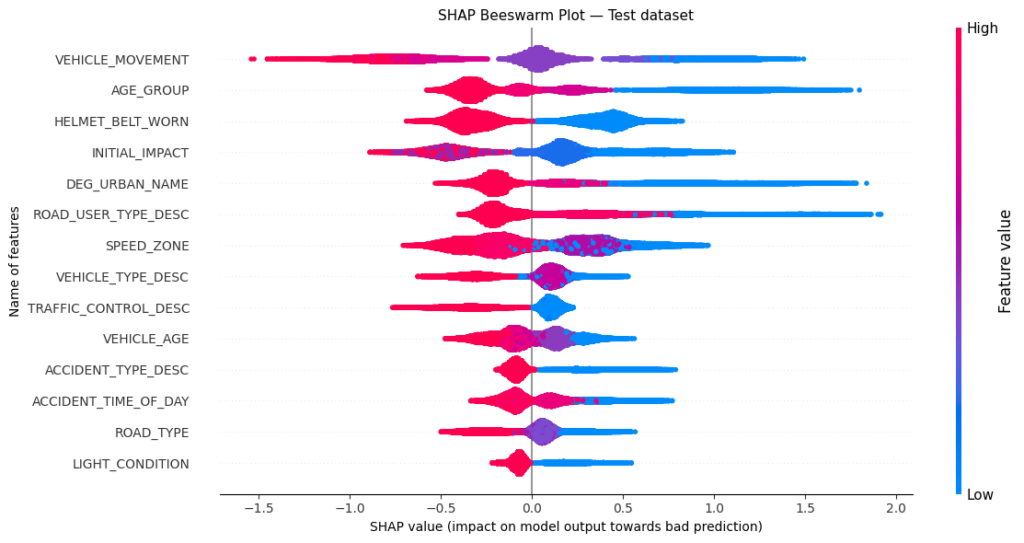

As a result of this phase, the following 14 features were selected for use in subsequent modeling stages: