If you're building or deploying large language models (LLMs), this post is your wake-up call.

Generative AI is changing the game, but it's also introducing a whole new class of threats. Forget SQL injection and CSRF for a moment. Now you have to deal with adversarial prompts, rogue tool invocations, jailbreak personas, prompt injection, and even hidden code inside SVGs.

For one of my assignments, I spent the past week diving deep into how attackers exploit LLM-powered apps and how to defend them. Here, I'll share what I learned about the evolving landscape of LLM security.

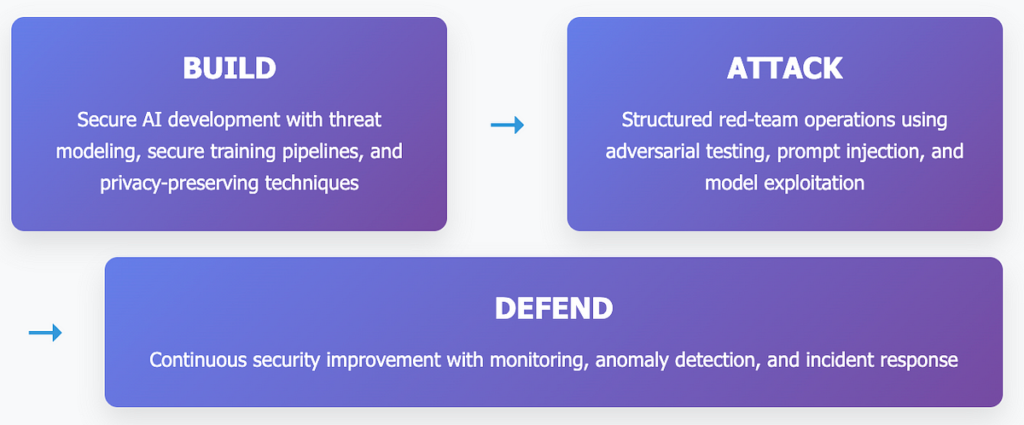

We'll explore the unique threats posed by these powerful models, detail various attack vectors, and outline practical strategies for red teaming and defense. Our goal is to give cybersecurity professionals, red-team operators, security auditors, and AI developers a comprehensive understanding of how to proactively identify, exploit, and mitigate weaknesses in LLM-powered applications.

Why LLM and Model Security Matter Now More Than Ever

As the adoption of Large Language Models (LLMs) accelerates across industries, securing these systems has become critical and urgent. We're no longer in the experimentation phase: LLMs are now embedded in customer-facing applications, internal tools, and decision-making workflows.