Series: Learning ML, The Right Way. A beginner-friendly collection of blogs exploring core machine learning concepts with clarity, depth, and enthusiasm.

Mastering ROC Curves, AUC, and Real-World Threshold Tuning

Keywords: ROC Curve, AUC, Threshold Tuning, Precision-Recall Curve, Imbalanced Data, Classification Metrics, Machine Learning

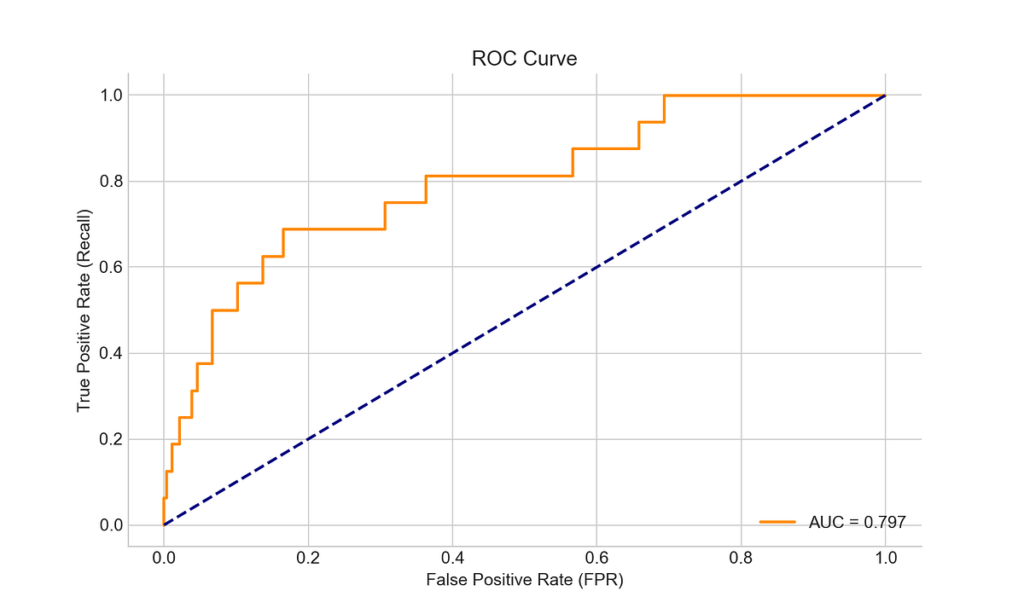

Recap from Part 1: We debunked the myth of “95% accuracy,” explored the confusion matrix, and dived into precision, recall, and F1-score. But what if your model’s predictions are probabilistic? How do you handle imbalanced data where 99% of cases are “False” and 1% are “True”? Enter ROC curves and AUC, the dynamic duo for evaluating model performance beyond fixed thresholds.

What the ROC curve tells you:

- How well your model separates classes (e.g., “True” vs. “False”) at every possible threshold.

Core Components:

- True Positive Rate (TPR/Recall): TP / (TP + FN). Example: “Out of all True cases, what % did we catch?”

- False Positive Rate (FPR): FP / (FP + TN). Example: “Out of all False cases, what % did we falsely flag as True?”
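The two rates above can be computed by hand for any threshold. The snippet below is a minimal sketch in plain Python, assuming a tiny made-up dataset (`y_true`, `y_score`) and a hypothetical helper `tpr_fpr`; none of these names come from the original post. Sweeping the threshold yields the (FPR, TPR) points that make up a ROC curve.

```python
def tpr_fpr(y_true, y_score, threshold):
    """Return (TPR, FPR) when scores >= threshold are predicted as True."""
    tp = fp = fn = tn = 0
    for label, score in zip(y_true, y_score):
        predicted_true = score >= threshold
        if label == 1 and predicted_true:
            tp += 1
        elif label == 1:
            fn += 1
        elif predicted_true:
            fp += 1
        else:
            tn += 1
    tpr = tp / (tp + fn) if (tp + fn) else 0.0
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return tpr, fpr


# Illustrative data only: 3 "True" cases and 5 "False" cases.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_score = [0.9, 0.7, 0.4, 0.8, 0.3, 0.2, 0.1, 0.05]

# Each threshold gives one point on the ROC curve.
roc_points = [tpr_fpr(y_true, y_score, t) for t in (0.85, 0.5, 0.25)]
for threshold, (tpr, fpr) in zip((0.85, 0.5, 0.25), roc_points):
    print(f"threshold={threshold:.2f}  TPR={tpr:.2f}  FPR={fpr:.2f}")
```

Lowering the threshold moves the point up and to the right: more True cases are caught, and more False cases are flagged by mistake.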

How to Read the Curve:

- Top-left corner (0,1): Perfect classifier

- Diagonal line: Random guessing

- Your model’s curve: The closer it gets to the top-left, the better

What AUC tells you:

- The probability that your model ranks a random “True” instance higher than a random “False” one.
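This ranking interpretation can be checked directly by comparing every (True, False) pair of scores. The snippet below is a rough sketch, assuming made-up data and a hypothetical helper `auc_by_ranking`; ties are counted as half a win, which is a common convention rather than something stated in this post. The pairwise loop is quadratic, so it is meant for illustration, not for large datasets.

```python
def auc_by_ranking(y_true, y_score):
    """AUC as the fraction of (True, False) pairs where the True case scores higher."""
    pos = [s for y, s in zip(y_true, y_score) if y == 1]
    neg = [s for y, s in zip(y_true, y_score) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # True case ranked above the False case
            elif p == n:
                wins += 0.5   # tie counts as half (assumed convention)
    return wins / (len(pos) * len(neg))


# Illustrative data only.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_score = [0.9, 0.7, 0.4, 0.8, 0.3, 0.2, 0.1, 0.05]

auc = auc_by_ranking(y_true, y_score)
print(f"AUC = {auc:.3f}")  # 13 of the 15 pairs are ranked correctly
```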

Key Insight (AUC score, as a rough rule of thumb):

- 0.9–1.0 = Excellent discrimination

- 0.8–0.9 = Good

- 0.7–0.8 = Fair

- 0.5–0.7 = Poor

- Random guessing classifier: AUC = 0.5 (diagonal line)

Why it’s powerful:

- Threshold-agnostic: Evaluates performance across all thresholds.

- Great for imbalanced data: Measures discriminative power, not raw accuracy.

When ROC/AUC isn’t enough:

- In scenarios with rare “True” cases (e.g., 99% False, 1% True), FPR can be misleading: with so many true negatives, FPR stays low even when false positives outnumber true positives. PR curves focus on the positive (“True”) class.

How it works:

- X-axis: Recall (How many “True” cases did we catch?)

- Y-axis: Precision (When we predict “True”, how often are we right?)

- Baseline: Horizontal line at the % of True cases in the data.

Example:

Lowering the threshold catches more “True” cases (↑ recall) but increases false alarms (↓ precision).
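The trade-off can be seen on a toy example. This is a minimal sketch, assuming invented scores and a hypothetical helper `precision_recall`; treating precision as 1.0 when nothing is predicted True is an assumption made here to avoid dividing by zero.

```python
def precision_recall(y_true, y_score, threshold):
    """Return (precision, recall) when scores >= threshold are predicted as True."""
    tp = sum(1 for y, s in zip(y_true, y_score) if y == 1 and s >= threshold)
    fp = sum(1 for y, s in zip(y_true, y_score) if y == 0 and s >= threshold)
    fn = sum(1 for y, s in zip(y_true, y_score) if y == 1 and s < threshold)
    precision = tp / (tp + fp) if (tp + fp) else 1.0  # assumed convention
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Illustrative data only: 3 "True" cases out of 10.
y_true = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
y_score = [0.95, 0.80, 0.70, 0.45, 0.40, 0.30, 0.20, 0.15, 0.10, 0.05]

high = precision_recall(y_true, y_score, 0.75)  # strict threshold
low = precision_recall(y_true, y_score, 0.35)   # lenient threshold
baseline = sum(y_true) / len(y_true)            # share of True cases

print(f"threshold 0.75: precision={high[0]:.2f}, recall={high[1]:.2f}")
print(f"threshold 0.35: precision={low[0]:.2f}, recall={low[1]:.2f}")
print(f"PR baseline (share of True cases): {baseline:.2f}")
```

With the strict threshold every flagged case is correct but one True case is missed; with the lenient threshold all True cases are caught at the price of two false alarms.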

Why Accuracy Fails Here:

Default thresholds (e.g., 0.5) rarely align with business costs:

- False Negative cost: Missing a “True” case (e.g., $100k loss).

- False Positive cost: Wrongly flagging “False” as “True” (e.g., $5 cost).

Ways to Optimize Thresholds:

- Cost-based tuning: Minimize Total Cost = (FN × C_FN) + (FP × C_FP).

- Youden’s J Statistic: Maximize J = TPR - FPR.

- Target recall/precision: E.g., medical diagnosis: “Ensure 95% recall”; spam detection: “Maintain 90% precision.”
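The first two strategies can be sketched as a simple search over candidate thresholds. The code below is illustrative only: the data is invented, the helper names (`confusion_counts`, `best_threshold_by_cost`, `best_threshold_by_youden`) are hypothetical, and using each distinct score as a candidate threshold is an assumption. The costs reuse the $100k and $5 figures from the example above.

```python
def confusion_counts(y_true, y_score, threshold):
    """Return (tp, fp, fn, tn) when scores >= threshold are predicted as True."""
    tp = fp = fn = tn = 0
    for label, score in zip(y_true, y_score):
        predicted_true = score >= threshold
        if label == 1 and predicted_true:
            tp += 1
        elif label == 1:
            fn += 1
        elif predicted_true:
            fp += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def best_threshold_by_cost(y_true, y_score, c_fn, c_fp):
    """Pick the candidate threshold minimizing FN * c_fn + FP * c_fp."""
    best = None
    for t in sorted(set(y_score)):
        _, fp, fn, _ = confusion_counts(y_true, y_score, t)
        cost = fn * c_fn + fp * c_fp
        if best is None or cost < best[1]:
            best = (t, cost)
    return best


def best_threshold_by_youden(y_true, y_score):
    """Pick the candidate threshold maximizing J = TPR - FPR."""
    best = None
    for t in sorted(set(y_score)):
        tp, fp, fn, tn = confusion_counts(y_true, y_score, t)
        j = tp / (tp + fn) - fp / (fp + tn)
        if best is None or j > best[1]:
            best = (t, j)
    return best


# Illustrative data only: 4 "True" cases, one of them with a low score.
y_true = [1, 1, 0, 1, 0, 0, 0, 1, 0, 0]
y_score = [0.95, 0.80, 0.70, 0.45, 0.40, 0.30, 0.20, 0.15, 0.10, 0.05]

cost_choice = best_threshold_by_cost(y_true, y_score, c_fn=100_000, c_fp=5)
youden_choice = best_threshold_by_youden(y_true, y_score)
print("cost-based:", cost_choice)
print("Youden's J:", youden_choice)
```

On this toy data the two criteria disagree: when a miss costs $100k, the cost-based search accepts several false alarms to catch the low-scoring True case, while Youden's J, which weighs TPR and FPR equally, settles on a higher threshold.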

- Start with ROC/AUC: Check class separation (especially for balanced data).

- Switch to PR curves when:

  * The positive class is < 10% of the data.

  * False positives are costly.

  * You care more about the minority class.

- Tune thresholds: Base them on business costs, not default values.

- Monitor in production: Metrics drift as data evolves!

“A model with 0.99 AUC can still fail if thresholds ignore business realities.”

ROC, AUC, and PR curves arm you against imbalanced data and probabilistic predictions. But the journey doesn’t end here:

- Log loss, calibration, and multi-class metrics are also a part of this series.

Remember: Metrics are conversations with your model. Ask the right questions.

PART 1

- “How do YOU choose thresholds in production? What challenges have you faced?” Discuss in the comments.