under uncertainty. Not just once, but in a sequence over time. We rely on our past experiences and expectations of the future to make the most informed and optimal choices possible.

Consider a business that offers multiple products. These products are procured at a cost and sold for a profit. However, unsold inventory may incur a restocking fee, may carry salvage value, or in some cases, must be scrapped entirely.

Businesses, therefore, face a crucial question: how much to stock? This decision must often be made before demand is fully known; that is, under censored demand. If the business overstocks, it observes the full demand, since all customer requests are fulfilled. But if it understocks, it only sees that demand exceeded supply, and the actual demand remains unknown, making it a censored observation.

This type of problem is often referred to as the Newsvendor Model. In fields such as operations research and applied mathematics, the optimal stocking decision has been studied by framing it as a classic newspaper stocking problem; hence the name.

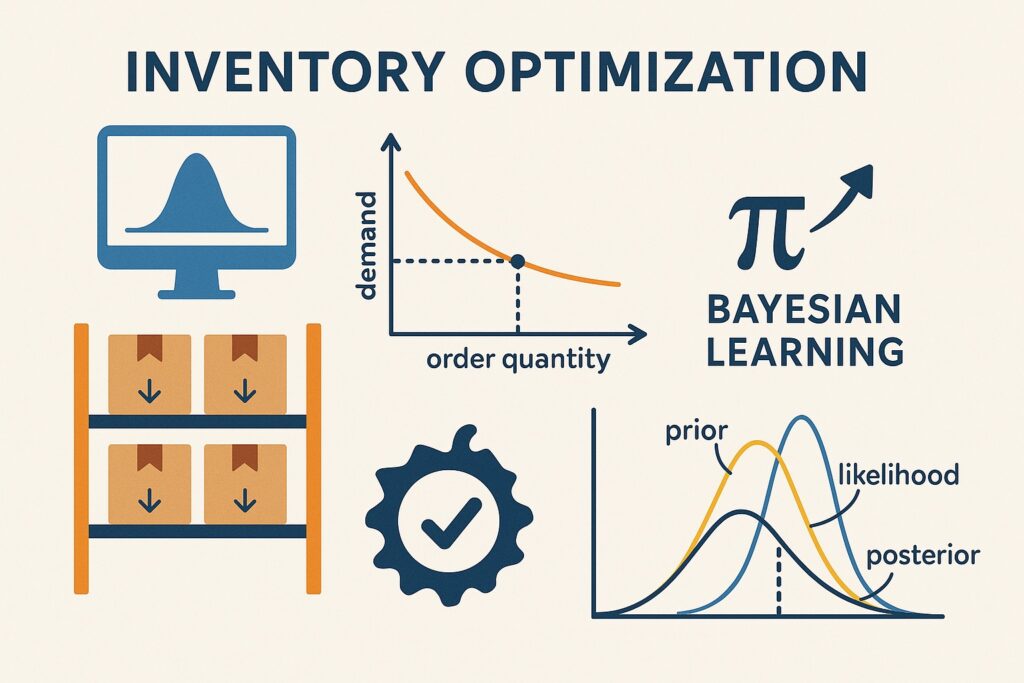

In this article, we explore a Sequential Decision-Making framework for the stocking problem under uncertainty and develop a dynamic optimization algorithm using Bayesian learning.

Our approach closely follows the framework laid out by Warren B. Powell in Reinforcement Learning and Stochastic Optimization (2019) and implements the paper by Negoescu, Powell, and Frazier (2011), Optimal Learning Policies for the Newsvendor Problem with Censored Demand and Unobservable Lost Sales, published in Operations Research.

Problem Setup

Following a setup similar to that of Negoescu et al., we frame the problem as optimizing the inventory level for a single item over a sequence of time steps. The cost and selling price are considered fixed. Unsold inventory is discarded with no salvage value, while each unit sold generates revenue. Demand is unknown, and when the available stock is less than actual demand, the demand observation is considered censored.

For simulation purposes, demand \( W \) in each period is drawn from an Exponential distribution with an unknown rate parameter.

\[
\begin{aligned}
x &\in \mathbb{R}_+ &&: \text{Order quantity (decision variable)} \\
W &\sim \mathrm{Exponential}(\lambda) &&: \text{Random demand with unknown rate parameter } \lambda \\
\lambda &&&: \text{Demand rate (unknown, to be estimated)} \\
c &&&: \text{Unit cost to procure or produce the item} \\
p &&&: \text{Unit selling price (assume } p > c \text{ for profitability)}
\end{aligned}
\]

The parameter \( \lambda \) in the Exponential distribution represents the rate of demand; that is, how quickly demand events occur. The average demand is given by \( \mathbb{E}[W] = \frac{1}{\lambda} \).

We can observe from the Probability Density Function (PDF) of the Exponential distribution that higher values of demand \( W \) become less likely. Thus, the Exponential distribution serves as a suitable choice for demand modeling.
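As a quick sanity check of these two properties, the sketch below samples Exponential demand with NumPy and compares the sample mean with \( 1/\lambda \). The rate value and sample size are illustrative assumptions, not values taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
lam = 0.25  # assumed demand rate, chosen only for this illustration

# NumPy parameterizes the Exponential by its scale, which is 1 / rate.
demand = rng.exponential(scale=1.0 / lam, size=200_000)

sample_mean = demand.mean()                      # theory: 1 / lam = 4
share_above_mean = (demand > 1.0 / lam).mean()   # theory: exp(-1), roughly 0.37

print(sample_mean, share_above_mean)
```

Most of the probability mass sits below the mean, which matches the statement that large demand values are comparatively rare.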

Sequential Decision Formulation

We formulate the inventory control problem as a sequential decision process under uncertainty. The goal is to maximize total expected profit over a finite time horizon \( N \), while learning the unknown demand rate by applying Bayesian learning principles.

We define a model with an initial state and a probabilistic model that represents its belief about future states over time. At each time step, the model makes a decision based on a policy that maps its current belief to an action. The goal is to find the optimal policy that maximizes a predefined reward function.

After taking an action, the model observes the resulting state and updates its belief accordingly, continuing this cycle of decision, observation, and belief update.

1) State Variable

We model demand in each period as a random variable drawn from an Exponential distribution with an unknown rate parameter \( \lambda \). Since \( \lambda \) is not directly observable, we encode our uncertainty about its value using a Gamma prior:

\[
\lambda \sim \mathrm{Gamma}(a_0, b_0)
\]

The parameters \( a_0 \) and \( b_0 \) define the shape and rate of our initial belief about the demand rate. These two parameters serve as our state variables. At each time step, they summarize all past information and are updated as new demand observations become available.

As we collect more data, the posterior distribution over \( \lambda \) evolves from a wide and uncertain shape to a narrower and more confident one, gradually concentrating around the true demand rate.

This process is captured naturally by the Gamma distribution, which flexibly adjusts its shape based on the amount of data we have seen. Early on, the distribution is diffuse, signaling high uncertainty. As observations accumulate, the belief becomes sharper, allowing for more reliable and responsive decision-making. The Probability Density Function (PDF) of the Gamma distribution can be seen below:

We will later define a transition function that updates the state, that is, how \( (a_n, b_n) \) evolves to \( (a_{n+1}, b_{n+1}) \), based on newly observed data. This allows the model to continually refine its belief about demand and make more informed inventory decisions over time.

Note that the expected value of the Gamma distribution is defined as:

\[
\mathbb{E}[\lambda] = \frac{a}{b}
\]
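To make the "wide to narrow" behaviour concrete, the following sketch compares two Gamma beliefs with the same mean but different amounts of accumulated evidence. It relies on the standard facts that a Gamma distribution with shape \( a \) and rate \( b \) has mean \( a/b \) and variance \( a/b^2 \); the helper name and the numbers are assumptions made for illustration.

```python
from typing import Tuple


def gamma_mean_and_std(a: float, b: float) -> Tuple[float, float]:
    """Mean and standard deviation of a Gamma(shape=a, rate=b) belief."""
    return a / b, (a ** 0.5) / b


# Same mean (0.25), but the second belief reflects far more observations.
early_mean, early_std = gamma_mean_and_std(2.0, 8.0)
late_mean, late_std = gamma_mean_and_std(50.0, 200.0)

print(early_mean, early_std)
print(late_mean, late_std)
```

Both beliefs point at the same demand rate, but the standard deviation of the second is several times smaller, which is what "a sharper belief" means here.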

2) Decision Variable

The decision variable at time \( n \) is the stocking level:

\[
x_n \in \mathbb{R}_+
\]

This is the number of units to order before demand \( W_{n+1} \) is realized. The decision depends only on the current belief \( (a_n, b_n) \).

3) Exogenous Information

After deciding on \( x_n \), demand \( W_{n+1} \) is revealed:

\[
W_{n+1} \sim \mathrm{Exp}(\lambda)
\]

Demand is random, and its rate \( \lambda \) is unknown. Observations are:

- Uncensored if \( W_{n+1} < x_n \) (we observe the exact demand)

- Censored if \( W_{n+1} \ge x_n \) (we only know that demand reached or exceeded the stocking level)

This censoring limits the information available for belief updating. Even though the full demand is not observed, the censored observation still carries valuable information and should not be ignored in our modeling approach.
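The distinction between the two cases can be sketched as a small helper that turns the true demand into what the business actually sees. The function name `observe_sales` is hypothetical and is not part of the article's code.

```python
from typing import Tuple


def observe_sales(x_n: float, true_demand: float) -> Tuple[float, bool]:
    """Return (observed quantity, censored flag) for one period.

    When demand reaches the stocking level, only the stocking level is
    observed and the observation is flagged as censored.
    """
    censored = true_demand >= x_n
    observed = min(x_n, true_demand)
    return observed, censored


print(observe_sales(5.0, 3.0))   # demand below stock: fully observed
print(observe_sales(5.0, 7.5))   # demand above stock: only the stock level is seen
```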

4) Transition Function

The transition function defines how the model's belief, represented by the state variables, is updated over time. It maps the prior state to the next state given a new observation, and in our case, this update is governed by Bayesian learning.

Bayesian Uncertainty Modelling

Bayes' theorem combines prior belief with observed data to form a posterior distribution. This updated distribution reflects both prior knowledge and the newly observed information.

\[
p_{n+1}(\lambda \mid w_{n+1}) = \frac{p(w_{n+1} \mid \lambda) \cdot p_n(\lambda)}{p(w_{n+1})}
\]

Where:

- \( p(w_{n+1} \mid \lambda) \): Likelihood of the new observation at time \( n+1 \)

- \( p_n(\lambda) \): Prior at time \( n \)

- \( p(w_{n+1}) \): Marginal likelihood (normalizing constant) at time \( n+1 \)

- \( p_{n+1}(\lambda \mid w_{n+1}) \): Posterior after observing \( w_{n+1} \)

We set up our problem such that in each period, demand \( W \) is drawn from an Exponential distribution, and the prior belief over \( \lambda \) is modelled using a Gamma distribution. Substituting both densities into Bayes' theorem gives:

\[
p_{n+1}(\lambda \mid w_{n+1})
=
\frac{
\underbrace{\lambda e^{-\lambda w_{n+1}}}_{\text{Likelihood}}
\cdot
\underbrace{\frac{b_n^{a_n}}{\Gamma(a_n)} \lambda^{a_n - 1} e^{-b_n \lambda}}_{\text{Prior (Gamma)}}
}{
\underbrace{
\int_0^\infty \lambda e^{-\lambda w_{n+1}} \cdot \frac{b_n^{a_n}}{\Gamma(a_n)} \lambda^{a_n - 1} e^{-b_n \lambda} \, d\lambda
}_{\text{Marginal (evidence)}}
}
\]

The Gamma and Exponential distributions form a well-known conjugate pair in Bayesian statistics. When using a Gamma prior and an Exponential likelihood, the resulting posterior is also a Gamma distribution. This property of the prior and posterior belonging to the same distributional family is what defines a conjugate prior, and it simplifies Bayesian updating considerably.

For reference, closed-form conjugate updates like this can be found in standard conjugate prior tables, such as the one on Wikipedia. Using this reference, we can formulate the posterior as follows.

Let:

\[
\lambda \sim \mathrm{Gamma}(a_0, b_0) \quad : \text{ Prior}
\]

\[
w \sim \mathrm{Exp}(\lambda) \quad : \text{ Likelihood}
\]

For \( n \) independent observations \( w_1, \dots, w_n \), the Gamma prior and Exponential likelihood result in a Gamma posterior:

\[
\lambda \mid w_1, \dots, w_n \sim \mathrm{Gamma}\left(a_0 + n, b_0 + \sum_{i=1}^n w_i\right)
\]

After observing a single (uncensored) demand \( w \), the posterior simplifies to:

\[
\lambda \mid w \sim \mathrm{Gamma}(a_0 + 1, b_0 + w)
\]

- The shape parameter increases by 1 because one new data point has been observed.

- The rate parameter increases by \( w \) because the Exponential likelihood includes the term \( e^{-\lambda w} \), which combines with the prior's exponential term and adds to the total exponent.
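Both bullet points can be read off by multiplying the likelihood and the prior and dropping every factor that does not depend on \( \lambda \). A short sketch of that step, in the notation used above:

```latex
\[
\begin{aligned}
p(\lambda \mid w)
  &\propto \lambda e^{-\lambda w} \cdot \lambda^{a_0 - 1} e^{-b_0 \lambda} \\
  &= \lambda^{(a_0 + 1) - 1} \, e^{-(b_0 + w)\lambda}
\end{aligned}
\]
```

The last line is the kernel of a \( \mathrm{Gamma}(a_0 + 1, b_0 + w) \) density, so the normalizing constant does not need to be computed explicitly.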

The Update Function

The posterior parameters (state variables) are updated based on the nature of the observation:

- Uncensored (\( W_{n+1} < x_n \)):

\[
a_{n+1} = a_n + 1, \quad b_{n+1} = b_n + W_{n+1}
\]

- Censored (\( W_{n+1} \ge x_n \)):

\[
a_{n+1} = a_n, \quad b_{n+1} = b_n + x_n
\]

These updates reflect how each observation, full or partial, informs the posterior belief over \( \lambda \).
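The censored case may look surprising, because the shape parameter does not change. One way to see why, sketched here under the same Gamma and Exponential assumptions, is that a censored observation only tells us that demand reached \( x_n \), and the probability of that event is the Exponential survival function:

```latex
\[
\begin{aligned}
P(W_{n+1} \ge x_n \mid \lambda) &= e^{-\lambda x_n} \\
p(\lambda \mid W_{n+1} \ge x_n)
  &\propto e^{-\lambda x_n} \cdot \lambda^{a_n - 1} e^{-b_n \lambda}
   = \lambda^{a_n - 1} \, e^{-(b_n + x_n)\lambda}
\end{aligned}
\]
```

The likelihood contributes no extra power of \( \lambda \), so the shape stays at \( a_n \), while the exponent picks up \( x_n \), giving \( \mathrm{Gamma}(a_n, b_n + x_n) \).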

We can define the transition function in Python as below:

    from typing import Tuple

    def transition_a_b(
        a_n: float,
        b_n: float,
        x_n: float,
        W_n1: float
    ) -> Tuple[float, float]:
        """
        Updates the posterior parameters (a, b) after observing demand.

        Args:
            a_n (float): Current shape parameter of Gamma prior.
            b_n (float): Current rate parameter of Gamma prior.
            x_n (float): Order quantity at time n.
            W_n1 (float): Observed demand at time n+1 (may be censored).

        Returns:
            Tuple[float, float]: Updated (a_{n+1}, b_{n+1}) values.
        """
        if W_n1 < x_n:
            # Uncensored: full demand observed
            a_n1 = a_n + 1
            b_n1 = b_n + W_n1
        else:
            # Censored: only know that W >= x
            a_n1 = a_n
            b_n1 = b_n + x_n
        return a_n1, b_n1

5) Objective Function

The model seeks a policy \( \pi \), mapping beliefs to stocking decisions, in order to maximize total expected profit.

- Profit from ordering \( x_n \) units and facing demand \( W_{n+1} \):

\[
F(x_n, W_{n+1}) = p \cdot \min(x_n, W_{n+1}) - c \cdot x_n
\]

- The cumulative objective is:

\[
\max_\pi \mathbb{E} \left[ \sum_{n=0}^{N-1} F(x_n, W_{n+1}) \right]
\]

- \( \pi \) maps \( (a_n, b_n) \) to \( x_n \)

- \( p \) is the selling price per unit sold

- \( c \) is the unit cost of ordering

- Unsold units are discarded with no salvage value

Note that this objective function accounts only for the expected immediate reward in each period of the time horizon. In a later section, we introduce an expanded version that incorporates the value of future learning. This encourages the model to explore, accounting for the information that censored demand can reveal over time.

We can define the profit function in Python as below:

    def profit_function(x: float, W: float, p: float, c: float) -> float:
        """
        Profit function defined as:

            F(x, W) = p * min(x, W) - c * x

        This represents the reward received when fulfilling demand W with inventory x,
        earning price p per unit sold and incurring cost c per unit ordered.

        Args:
            x (float): Inventory level / decision variable.
            W (float): Realized demand.
            p (float): Unit selling price.
            c (float): Unit cost.

        Returns:
            float: The profit (reward) for this period.
        """
        return p * min(x, W) - c * x

Policy Functions

We will define several policy functions as described by Negoescu et al., which determine the stocking level \( x_n \) based on our current belief state \( (a_n, b_n) \).

1) Point Estimate Policy

Under this policy, the model estimates the unknown demand rate \( \lambda \) using the current posterior and chooses an order quantity \( x_n \) to maximize the immediate expected profit.

At time \( n \), the current posterior is \( \lambda \sim \mathrm{Gamma}(a_n, b_n) \), and its mean serves as the point estimate:

\[
\hat{\lambda}_n = \frac{a_n}{b_n}
\]

We treat this estimate as the "true" value of \( \lambda \) and assume demand \( W \sim \mathrm{Exp}(\hat{\lambda}_n) \).

Expected Value

The profit for order quantity \( x \) and realized demand \( W \) is:

\[
F(x, W) = p \cdot \min(x, W) - c \cdot x
\]

We seek to maximize the expected profit:

\[
\max_{x \geq 0} \quad \mathbb{E}_W \left[ p \min(x, W) - c x \right]
\]

The expected value of a continuous random variable \( X \) with density \( f \) is:

\[
\mathbb{E}[X] = \int_{-\infty}^{\infty} x \cdot f(x) \, dx
\]

Thus, the objective function can be written as:

\[
\max_{x \geq 0} \left[ p \left( \int_0^x w f_W(w) \, dw + x \int_x^\infty f_W(w) \, dw \right) - c x \right]
\]

Where:

- \( f_W(w) \): Probability density function (PDF) of demand evaluated at \( w \)

The PDF of \( \mathrm{Exponential}(\hat{\lambda}_n) \) is:

\[
f_W(w) = \hat{\lambda}_n e^{-\hat{\lambda}_n w}
\]

Evaluating the integrals gives:

\[
\mathbb{E}[F(x, W)] = p \cdot \frac{1 - e^{-\hat{\lambda}_n x}}{\hat{\lambda}_n} - c x
\]
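The closed form for expected sales, \( \mathbb{E}[\min(x, W)] = (1 - e^{-\hat{\lambda}_n x}) / \hat{\lambda}_n \), can be checked numerically. The sketch below compares it with a plain Monte Carlo average; the rate, order quantity, and sample size are illustrative assumptions.

```python
import numpy as np


def expected_sales_closed_form(x: float, lam: float) -> float:
    """E[min(x, W)] for W ~ Exponential(rate=lam)."""
    return (1.0 - np.exp(-lam * x)) / lam


rng = np.random.default_rng(1)
lam_hat, x = 2.0, 0.4  # illustrative values only

# Simulate demand, cap it at the order quantity, and average the sales.
w = rng.exponential(scale=1.0 / lam_hat, size=400_000)
mc_estimate = np.minimum(x, w).mean()
closed_form = expected_sales_closed_form(x, lam_hat)

print(mc_estimate, closed_form)
```

The two numbers should agree to within Monte Carlo noise, which supports using the closed form inside the optimization instead of simulating.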

First Order Optimality Condition

We set the derivative of the expected profit function to zero and solve for \( x \) to find the stocking level that maximizes the expected profit:

\[
\frac{d}{dx} \mathbb{E}[F(x, W)] = p e^{-\hat{\lambda}_n x} - c = 0
\]

\[
e^{-\hat{\lambda}_n x^*} = \frac{c}{p}
\quad \Rightarrow \quad
x^* = \frac{1}{\hat{\lambda}_n} \log\left( \frac{p}{c} \right)
\]

Substituting \( \hat{\lambda}_n = \frac{a_n}{b_n} \):

\[
x_n = \frac{b_n}{a_n} \log\left( \frac{p}{c} \right)
\]
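As a hedged sanity check of this first-order condition, the sketch below maximizes the expected profit on a fine grid and compares the result with the closed-form stocking level. The belief and price values are illustrative, and the grid search is only a verification device, not part of the policy.

```python
import math

import numpy as np

a_n, b_n, p, c = 10.0, 5.0, 26.0, 20.0  # illustrative belief and prices
lam_hat = a_n / b_n


def expected_profit(x):
    """Expected one-period profit when demand is Exp(lam_hat)."""
    return p * (1.0 - np.exp(-lam_hat * x)) / lam_hat - c * x


x_closed = (b_n / a_n) * math.log(p / c)

# Brute-force maximization over a fine grid of candidate order quantities.
grid = np.linspace(0.0, 1.0, 100_001)
x_grid = grid[np.argmax(expected_profit(grid))]

print(x_closed, x_grid)
```

Because the expected profit is concave in \( x \), the grid maximizer lands next to the closed-form value, up to the grid spacing.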

Python implementation:

    import math

    def point_estimate_policy(
        a_n: float,
        b_n: float,
        p: float,
        c: float
    ) -> float:
        """
        Point Estimate Policy, chooses x_n based on posterior mean at time n.

        Args:
            a_n (float): Gamma shape parameter at time n.
            b_n (float): Gamma rate parameter at time n.
            p (float): Selling price per unit.
            c (float): Unit cost.

        Returns:
            float: Stocking level x_n
        """
        lambda_hat = a_n / b_n
        return (1 / lambda_hat) * math.log(p / c)

2) Distribution Policy

The Distribution Policy optimizes the expected immediate profit by integrating over the entire current belief distribution of the demand rate \( \lambda \). Unlike the Point Estimate Policy, it does not collapse the posterior to a single value.

At time \( n \), the belief about \( \lambda \) is:

\[
\lambda \sim \mathrm{Gamma}(a_n, b_n)
\]

Demand is modelled as:

\[
W \sim \mathrm{Exp}(\lambda)
\]

This policy chooses the order quantity \( x_n \) by maximizing the expected immediate profit, averaged over both the uncertainty in demand and the uncertainty in \( \lambda \):

\[
x_n = \arg\max_{x \ge 0} \mathbb{E}_{\lambda \sim \mathrm{Gamma}(a_n, b_n)} \left[ \mathbb{E}_{W \sim \mathrm{Exp}(\lambda)} \left[ p \cdot \min(x, W) - c x \right] \right]
\]

Expected Value

From the previous policy, we know that for a given rate \( \lambda \):

\[
\mathbb{E}_{W \mid \lambda}[\min(x, W)] = \frac{1 - e^{-\lambda x}}{\lambda}
\]

Thus:

\[
\mathbb{E}_{\lambda} \left[ \mathbb{E}_{W \mid \lambda}[\min(x, W)] \right]
= \mathbb{E}_{\lambda} \left[ \frac{1 - e^{-\lambda x}}{\lambda} \right]
\]

If we denote the Gamma density as:

\[
f(\lambda) = \frac{b^a}{\Gamma(a)} \lambda^{a - 1} e^{-b \lambda}
\]

Then the expectation becomes:

\[
\mathbb{E}_\lambda \left[ \frac{1 - e^{-\lambda x}}{\lambda} \right]
= \int_0^\infty \frac{1 - e^{-\lambda x}}{\lambda} f(\lambda) \, d\lambda
= \frac{b^a}{\Gamma(a)} \int_0^\infty (1 - e^{-\lambda x}) \lambda^{a - 2} e^{-b \lambda} \, d\lambda
\]

Without going over the full proof, the expected profit becomes:

\[
\mathbb{E}[\text{Profit}] = p \cdot \mathbb{E}_{\lambda} \left[ \frac{1 - e^{-\lambda x}}{\lambda} \right] - c x
= p \cdot \frac{b}{a - 1} \left(1 - \left( \frac{b}{b + x} \right)^{a - 1} \right) - c x
\]
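For readers who want the intermediate step, one way to evaluate the integral (a sketch, assuming \( a > 1 \) so that the integrals converge) is to use the Gamma integral \( \int_0^\infty \lambda^{k-1} e^{-s\lambda} \, d\lambda = \Gamma(k) / s^k \) with \( k = a - 1 \), once with \( s = b \) and once with \( s = b + x \):

```latex
\[
\begin{aligned}
\frac{b^a}{\Gamma(a)} \int_0^\infty \left(1 - e^{-\lambda x}\right) \lambda^{a-2} e^{-b\lambda} \, d\lambda
  &= \frac{b^a}{\Gamma(a)} \left[ \frac{\Gamma(a-1)}{b^{a-1}} - \frac{\Gamma(a-1)}{(b+x)^{a-1}} \right] \\
  &= \frac{b}{a-1} \left( 1 - \left( \frac{b}{b+x} \right)^{a-1} \right)
\end{aligned}
\]
```

The second line uses \( \Gamma(a) = (a - 1)\,\Gamma(a - 1) \).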

First Order Optimality Condition

Again, we set the derivative of the expected profit function to zero and solve for \( x \) to find the stocking level that maximizes the expected profit:

\[
\frac{d}{dx} \mathbb{E}[\text{Profit}]
= \frac{d}{dx} \left[ p \cdot \frac{b}{a - 1} \left(1 - \left( \frac{b}{b + x} \right)^{a - 1} \right) - c x \right] = 0
\]

Without going over the proof, the closed-form expression based on the paper by Negoescu et al. is:

\[
x_n = b_n \left( \left( \frac{p}{c} \right)^{1/a_n} - 1 \right)
\]
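Differentiating the expected profit gives \( p \left( \frac{b}{b+x} \right)^{a} - c = 0 \), which rearranges to the expression above. To get a feel for how this differs from the Point Estimate Policy, the sketch below evaluates both closed forms for beliefs with the same mean but increasing confidence. The helper names and the numbers are assumptions chosen for illustration.

```python
import math


def point_estimate_x(a: float, b: float, p: float, c: float) -> float:
    """Closed-form order quantity when lambda is fixed at its posterior mean."""
    return (b / a) * math.log(p / c)


def distribution_x(a: float, b: float, p: float, c: float) -> float:
    """Closed-form order quantity when averaging over the Gamma(a, b) belief."""
    return b * ((p / c) ** (1.0 / a) - 1.0)


p, c = 26.0, 20.0
gaps = []
for a in (2.0, 10.0, 100.0):
    b = a / 0.25  # keep the posterior mean a / b fixed at 0.25
    gap = distribution_x(a, b, p, c) - point_estimate_x(a, b, p, c)
    gaps.append(gap)
    print(a, b, gap)
```

In this example the Distribution Policy orders slightly more than the Point Estimate Policy, and the gap shrinks as the belief tightens, which is consistent with the two policies coinciding in the limit of a fully known demand rate.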

Python implementation:

    def distribution_policy(
        a_n: float,
        b_n: float,
        p: float,
        c: float
    ) -> float:
        """
        Distribution Policy, chooses x_n by integrating over full posterior at time n.

        Args:
            a_n (float): Gamma shape parameter at time n.
            b_n (float): Gamma rate parameter at time n.
            p (float): Selling price per unit.
            c (float): Unit cost.

        Returns:
            float: Stocking level x_n
        """
        return b_n * ((p / c) ** (1 / a_n) - 1)

3) Knowledge Gradient (KG) Policy

The Knowledge Gradient (KG) policy is a Bayesian learning policy that balances exploitation (maximizing immediate profit) and exploration (ordering to gain information about demand for future decisions).

Instead of just maximizing today's profit, KG chooses the order quantity that maximizes:

Profit now + Value of information gained for the future

\[
x_n = \arg\max_x \mathbb{E}\left[ p \cdot \min(x, W_{n+1}) - c x + V(a_{n+1}, b_{n+1}) \mid a_n, b_n, x \right]
\]

Where:

- \( W_{n+1} \sim \mathrm{Exp}(\lambda) \) (with \( \lambda \sim \mathrm{Gamma}(a_n, b_n) \))

- \( V(a_{n+1}, b_{n+1}) \) is the value of expected future profits under updated beliefs after observing \( W_{n+1} \)

We do not know \( (a_{n+1}, b_{n+1}) \) at time \( n \) because we have not yet observed demand. So, we take the expectation over the possible observation outcomes (censored vs. uncensored).

The KG policy then evaluates each candidate stocking quantity \( x \) by:

- Simulating its effect on posterior beliefs

- Computing the immediate profit

- Computing the value of future learning based on belief updates
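The first of these steps can be sketched in a few lines. Under a \( \mathrm{Gamma}(a_n, b_n) \) belief, the probability that demand reaches the stocking level is \( \mathbb{E}_\lambda[e^{-\lambda x}] = \left( \frac{b_n}{b_n + x} \right)^{a_n} \), so each candidate \( x \) determines how likely the next observation is to be censored. The helper names below are hypothetical and only illustrate the idea; they are not taken from the paper.

```python
from typing import Tuple


def censoring_probability(a_n: float, b_n: float, x: float) -> float:
    """P(W >= x) when lambda ~ Gamma(a_n, b_n) and W | lambda ~ Exp(lambda)."""
    return (b_n / (b_n + x)) ** a_n


def censored_next_state(a_n: float, b_n: float, x: float) -> Tuple[float, float]:
    """Belief after a censored observation at stocking level x."""
    return a_n, b_n + x


a_n, b_n = 10.0, 5.0  # illustrative belief
for x in (0.1, 0.5, 2.0):
    print(x, censoring_probability(a_n, b_n, x), censored_next_state(a_n, b_n, x))
```

Ordering more lowers the chance of a censored observation, which is the mechanism through which a larger order can buy information about demand.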

Objective Function

We define the total value of choosing \( x \) at time \( n \) as:

\[
F_{\text{KG}}(x) = \underbrace{\mathbb{E}[p \cdot \min(x, W) - c x]}_{\text{Immediate profit}} + \underbrace{(N - n) \cdot \mathbb{E}_{\text{posterior}} \left[ \max_{x'} \mathbb{E}_{\lambda \sim \text{posterior}}[ p \cdot \min(x', W) - c x' ] \right]}_{\text{Value of learning}}
\]

- The first term is just the expected immediate profit.

- The second term accounts for how this choice improves future profit by sharpening our belief about \( \lambda \).

- Horizon Factor \( (N - n) \): We will make \( (N - n) \) more decisions in the future, so the value of better decisions due to learning today gets multiplied by this factor.

- Posterior Averaging \( \mathbb{E}_{\text{posterior}}[\cdot] \): We average over all the possible posterior beliefs we might end up with after observing the outcome of demand. Because demand is random and possibly censored, we will not get perfect information, but we will update our belief.

The paper uses the previously discussed Distribution Policy as a proxy for estimating the future value function. Thus:

\[
V(a, b) = \frac{b p}{a - 1} \left( 1 - \left( \frac{b}{b + x^*} \right)^{a - 1} \right) - c x^*,
\quad \text{where} \quad
x^*(a, b) = b \left( \left( \frac{p}{c} \right)^{1/a} - 1 \right)
\]

Expected Value

The expected value of \( V \) is expressed as below per Negoescu et al. As the proof of this equation is quite involved, we will not go over the details.

\[
\begin{aligned}
\mathbb{E}[V] &= \mathbb{E} \left[ \mathbb{E} \left[ b_{n+1} \left( \frac{p}{a_{n+1} - 1} \left( 1 - \left( \frac{c}{p} \right)^{1 - \frac{1}{a_{n+1}}} \right) - c \left( \left( \frac{c}{p} \right)^{-\frac{1}{a_{n+1}}} - 1 \right) \right) \Big| \lambda \right] \Big| a_n, b_n, x_n \right] \\
&= \mathbb{E} \left[ \int_0^{x_n} \left( b_n + y \right) \left( \frac{p}{a_n} \left( 1 - \left( \frac{c}{p} \right)^{1 - \frac{1}{a_{n+1}}} \right) - c \left( \left( \frac{c}{p} \right)^{-\frac{1}{a_{n+1}}} - 1 \right) \right) \lambda e^{-\lambda y} \, dy \right. \\
&\quad + \left. \int_{x_n}^{\infty} \left( b_n + x_n \right) \left( \frac{p}{a_n - 1} \left( 1 - \left( \frac{c}{p} \right)^{1 - \frac{1}{a_n}} \right) - c \left( \left( \frac{c}{p} \right)^{-\frac{1}{a_n}} - 1 \right) \right) \lambda e^{-\lambda y} \, dy \right].
\end{aligned}
\]

In the first (uncensored) integral, \( a_{n+1} = a_n + 1 \) and \( b_{n+1} = b_n + y \); in the second (censored) integral, \( a_{n+1} = a_n \) and \( b_{n+1} = b_n + x_n \), following the update function defined earlier.

As we already know the expected value of the immediate profit function from the earlier policies, we can express the expected value of the KG objective as the sum of the two terms. As this equation is quite long, we will not go over the details, but it can be found in the paper.

First Order Optimality Condition

For this policy as well, we set the derivative of the expected value function to zero and solve for \( x \) to find the stocking level that maximizes it. The closed-form solution based on the paper is:

\[
x_n = b_n \left[ \left( \frac{r}{1 + (N - n) \cdot \left( 1 + \frac{a_n r}{a_n - 1} - \frac{(a_n + 1) r}{a_n} \right)} \right)^{-1 / a_n} - 1 \right]
\]

Where:

- \( r = \frac{c}{p} \): Cost-to-price ratio
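For comparison, the displayed expression can be transcribed literally into code. The sketch below does only that: it has not been checked against the paper, the function name is hypothetical, and it assumes \( a_n > 1 \) so that the denominator \( a_n - 1 \) is positive. Note that the article's own Python implementation, which follows, uses a different, clipped adjustment of \( r \) rather than this expression.

```python
def kg_closed_form_as_displayed(
    a_n: float, b_n: float, p: float, c: float, n: int, N: int
) -> float:
    """Literal transcription of the displayed KG expression (assumes a_n > 1)."""
    r = c / p
    inner = 1.0 + a_n * r / (a_n - 1.0) - (a_n + 1.0) * r / a_n
    denominator = 1.0 + (N - n) * inner
    return b_n * ((r / denominator) ** (-1.0 / a_n) - 1.0)


a_n, b_n, p, c = 10.0, 5.0, 26.0, 20.0  # illustrative values
x_no_future = kg_closed_form_as_displayed(a_n, b_n, p, c, n=100, N=100)
x_with_future = kg_closed_form_as_displayed(a_n, b_n, p, c, n=0, N=100)
x_distribution = b_n * ((p / c) ** (1.0 / a_n) - 1.0)

print(x_no_future, x_with_future, x_distribution)
```

When no periods remain (\( N - n = 0 \)) the expression collapses to the Distribution Policy, and with periods remaining it orders more, which matches the intuition that exploration is worth more early in the horizon.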

Python implementation:

    def knowledge_gradient_policy(
        a_n: float,
        b_n: float,
        p: float,
        c: float,
        n: int,
        N: int
    ) -> float:
        """
        Knowledge Gradient Policy, one-step lookahead policy for exponential demand
        with Gamma(a_n, b_n) posterior.

        Args:
            a_n (float): Gamma shape parameter at time n.
            b_n (float): Gamma rate parameter at time n.
            p (float): Selling price per unit.
            c (float): Unit cost.
            n (int): Current period index (0-based).
            N (int): Total number of periods in the horizon.

        Returns:
            float: Stocking level x_n
        """
        # Note: the steps below apply a simplified, clipped adjustment to the
        # cost ratio; they are not a literal transcription of the closed-form
        # expression shown above.
        a = max(a_n, 1.001)  # Avoid division by zero for small shape values
        r = c / p
        future_factor = (N - (n + 1)) / N  # Share of the horizon still ahead
        adjustment = 1.0 - future_factor * (1.0 / a)
        adjusted_r = min(max(r * adjustment, 1e-4), 0.99)
        return b_n * ((1 / adjusted_r) ** (1 / a) - 1)

Monte Carlo Policy Evaluation

To evaluate a policy \( \pi \) in a stochastic environment, we simulate its performance over multiple sample demand paths.

Let:

- \( M \) be the number of independent simulations (demand paths), each denoted \( \omega^m \) for \( m = 1, 2, \dots, M \)

- \( N \) be the time horizon

- \( W_{n+1}(\omega^m) \) be the simulated demand revealed after the decision at time \( n \) on path \( m \)

- \( x_n(\omega^m) \) be the decision taken at time \( n \) under policy \( \pi \) on path \( m \)

Cumulative Reward on a Single Path

For each sample path \( \omega^m \), compute the total reward:

\[
\hat{F}^\pi(\omega^m) = \sum_{n=0}^{N-1} \left[ p \cdot \min\left(x_n(\omega^m), W_{n+1}(\omega^m)\right) - c \cdot x_n(\omega^m) \right]
\]

This represents the realized value of the policy \( \pi \) along that specific trajectory.

Python implementation:

    import numpy as np

    def simulate_policy(
        N: int,
        a_0: float,
        b_0: float,
        lambda_true: float,
        policy_name: str,
        p: float,
        c: float,
        seed: int = 42
    ) -> float:
        """
        Simulates the sequential inventory decision-making process using a specified policy.

        Args:
            N (int): Number of time periods.
            a_0 (float): Initial shape parameter of Gamma prior.
            b_0 (float): Initial rate parameter of Gamma prior.
            lambda_true (float): True exponential demand rate.
            policy_name (str): One of {'point_estimate', 'distribution', 'knowledge_gradient'}.
            p (float): Selling price per unit.
            c (float): Procurement cost per unit.
            seed (int): Random seed for reproducibility.

        Returns:
            float: Total cumulative reward over N periods.
        """
        np.random.seed(seed)
        a_n, b_n = a_0, b_0
        rewards = []

        for n in range(N):
            # Choose order quantity based on specified policy
            if policy_name == "point_estimate":
                x_n = point_estimate_policy(a_n=a_n, b_n=b_n, p=p, c=c)
            elif policy_name == "distribution":
                x_n = distribution_policy(a_n=a_n, b_n=b_n, p=p, c=c)
            elif policy_name == "knowledge_gradient":
                x_n = knowledge_gradient_policy(a_n=a_n, b_n=b_n, p=p, c=c, n=n, N=N)
            else:
                raise ValueError(f"Unknown policy: {policy_name}")

            # Sample demand (NumPy takes the scale, i.e. 1 / rate)
            W_n1 = np.random.exponential(1 / lambda_true)

            # Compute profit and update belief
            reward = profit_function(x_n, W_n1, p, c)
            rewards.append(reward)
            a_n, b_n = transition_a_b(a_n, b_n, x_n, W_n1)

        return sum(rewards)

Estimate Expected Value by Averaging

The expected reward of policy \( \pi \) is approximated using the sample average across all \( M \) simulations:

\[
\bar{F}^\pi = \frac{1}{M} \sum_{m=1}^{M} \hat{F}^\pi(\omega^m)
\]

This \( \bar{F}^\pi \) is an unbiased estimator of the true expected reward under policy \( \pi \).
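Because \( \bar{F}^\pi \) is itself a random quantity, it can help to report its standard error alongside the mean when comparing policies. The sketch below shows one common way to do that; the helper name and the toy rewards are assumptions for illustration and are not part of the article's pipeline.

```python
import numpy as np


def mean_and_standard_error(path_rewards):
    """Sample mean and its standard error over independent simulated paths."""
    rewards = np.asarray(path_rewards, dtype=float)
    mean = rewards.mean()
    # ddof=1 gives the unbiased sample variance; dividing by sqrt(M)
    # converts the spread of single paths into the spread of the average.
    std_err = rewards.std(ddof=1) / np.sqrt(rewards.size)
    return mean, std_err


mean, std_err = mean_and_standard_error([10.0, 12.0, 8.0, 14.0, 6.0])
print(mean, std_err)
```

A difference between two policies that is small relative to their standard errors would call for more simulated paths before drawing conclusions.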

Python implementation:

    import numpy as np

    def policy_monte_carlo(
        N_sim: int,
        N: int,
        a_0: float,
        b_0: float,
        lambda_true: float,
        policy_name: str,
        p: float = 10.0,
        c: float = 4.0,
        base_seed: int = 42
    ) -> float:
        """
        Runs multiple Monte Carlo simulations to evaluate the average cumulative reward
        for a given inventory policy under exponential demand.

        Args:
            N_sim (int): Number of Monte Carlo simulations to run.
            N (int): Number of time steps in each simulation.
            a_0 (float): Initial Gamma shape parameter.
            b_0 (float): Initial Gamma rate parameter.
            lambda_true (float): True rate of exponential demand.
            policy_name (str): Name of the policy to use: {"point_estimate", "distribution", "knowledge_gradient"}.
            p (float): Selling price per unit.
            c (float): Procurement cost per unit.
            base_seed (int): Seed offset for reproducibility across simulations.

        Returns:
            float: Average cumulative reward across all simulations.
        """
        total_rewards = []
        for i in range(N_sim):
            reward = simulate_policy(
                N=N,
                a_0=a_0,
                b_0=b_0,
                lambda_true=lambda_true,
                policy_name=policy_name,
                p=p,
                c=c,
                seed=base_seed + i
            )
            total_rewards.append(reward)

        return np.mean(total_rewards)

    # Parameters
    N_sim = 10000       # Number of simulations
    N = 100             # Number of time periods
    a_0 = 10.0          # Initial shape parameter of Gamma prior
    b_0 = 5.0           # Initial rate parameter of Gamma prior
    lambda_true = 0.25  # True rate of exponential demand
    p = 26.0            # Selling price per unit
    c = 20.0            # Unit cost
    base_seed = 1234    # Base seed for reproducibility

    results = {
        policy: policy_monte_carlo(
            N_sim=N_sim,
            N=N,
            a_0=a_0,
            b_0=b_0,
            lambda_true=lambda_true,
            policy_name=policy,
            p=p,
            c=c,
            base_seed=base_seed
        )
        for policy in ["point_estimate", "distribution", "knowledge_gradient"]
    }

    print(results)

Results

The left plot shows how average cumulative profit evolves over time, while the right plot shows the average reward per time step. From this simulation, we observe that the Knowledge Gradient (KG) policy significantly outperforms the other two, as it optimizes not only immediate rewards but also the future value of cumulative rewards. Both the Point Estimate and Distribution policies perform similarly.

We can observe from the plots above that the Bayesian learning algorithm gradually converges to the true mean of demand \( W \).

These findings highlight the importance of incorporating the value of information in sequential decision making under uncertainty. While simpler heuristics like the Point Estimate and Distribution policies focus solely on immediate gains, the Knowledge Gradient policy leverages future learning potential, yielding superior long-term performance.