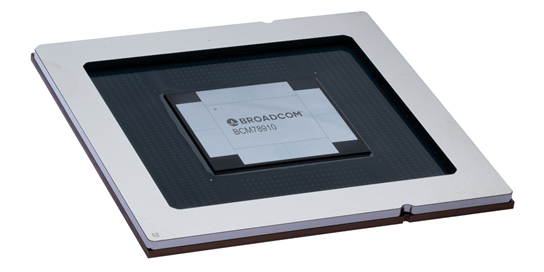

PALO ALTO, Calif., July 15, 2025 -- Broadcom Inc. (NASDAQ:AVGO), a global leader in semiconductor and infrastructure software solutions, today announced the shipment of its breakthrough Ethernet switch, the Tomahawk Ultra. Engineered to transform the Ethernet switch for high-performance computing (HPC) and AI workloads, Tomahawk Ultra delivers industry-leading ultra-low latency, massive throughput, and lossless networking.

“Tomahawk Ultra is a testament to innovation, involving a multi-year effort by hundreds of engineers who reimagined every aspect of the Ethernet switch,” said Ram Velaga, senior vice president and general manager of Broadcom’s Core Switching Group. “This highlights Broadcom’s commitment to invest in advancing Ethernet for high-performance networking and AI scale-up.”

Built from the ground up to meet the extreme demands of HPC environments and tightly coupled AI clusters, Tomahawk Ultra redefines what an Ethernet switch can deliver. Long perceived as higher-latency and lossy, Ethernet takes on a new role:

- Ultra-low latency: Achieves 250ns switch latency at full 51.2 Tbps throughput.

- High performance: Delivers line-rate switching performance even at minimum packet sizes of 64 bytes, supporting up to 77 billion packets per second.

- Adaptable, optimized Ethernet headers: Reduces header overhead from 46 bytes down to as low as 10 bytes while maintaining full Ethernet compliance, boosting network efficiency and enabling flexible, application-specific optimizations.

- Lossless fabric: Implements Link Layer Retry (LLR) and Credit-Based Flow Control (CBFC) to eliminate packet loss and ensure reliability.
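The packet-rate figure in the list above can be sanity-checked with simple arithmetic. The sketch below assumes the standard Ethernet per-frame wire overhead of 20 bytes (8-byte preamble and start-of-frame delimiter plus a 12-byte inter-packet gap); that overhead value and the helper name `max_packets_per_second` are assumptions for illustration, not details taken from the announcement.

```python
# Assumption: each frame on the wire carries 20 extra bytes
# (8-byte preamble/SFD + 12-byte inter-packet gap), as in standard Ethernet.
WIRE_OVERHEAD_BYTES = 20


def max_packets_per_second(throughput_bps: float,
                           frame_bytes: int,
                           wire_overhead_bytes: int = WIRE_OVERHEAD_BYTES) -> float:
    """Upper bound on packet rate for a given aggregate throughput and frame size."""
    bits_per_frame_on_wire = (frame_bytes + wire_overhead_bytes) * 8
    return throughput_bps / bits_per_frame_on_wire


# 51.2 Tbps of aggregate throughput with minimum-size 64-byte frames.
rate = max_packets_per_second(51.2e12, 64)
print(f"{rate / 1e9:.1f} billion packets per second")
```

Under these assumptions the bound comes out at roughly 76 billion packets per second, in the same range as the "up to 77 billion" quoted above; the exact accounting behind the published figure is not stated here and may differ.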

“AI and HPC workloads are converging into tightly coupled accelerator clusters that demand supercomputer-class latency, critical for inference, reliability, and in-network intelligence from the fabric itself,” said Kunjan Sobhani, lead semiconductor analyst, Bloomberg Intelligence. “Demonstrating that open-standards Ethernet can now deliver sub-microsecond switching, lossless transport, and on-chip collectives marks a pivotal step toward meeting these demands of an AI scale-up stack, projected to be double digit billions in a few years.”

Tomahawk Ultra is optimized for the tightly coupled, low-latency communication patterns found in both high-performance computing systems and AI clusters. With ultra-low latency switching and adaptable optimized Ethernet headers, it provides predictable, high-efficiency performance for large-scale simulations, scientific computing, and synchronized AI model training and inference.

When deployed with Scale-Up Ethernet (SUE specification available here), Tomahawk Ultra enables sub-400ns XPU-to-XPU communication latency, including the switch transit time, setting a new benchmark for tightly synchronized AI compute at scale.

By reducing Ethernet header overhead from 46 bytes to just 10 bytes, while maintaining full Ethernet compliance, Tomahawk Ultra dramatically improves network efficiency. This optimized header is adaptable per application, offering both flexibility and performance gains across diverse HPC and AI workloads.
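To give a feel for what the header reduction means, the following minimal sketch computes the share of transmitted bytes that is payload when the per-packet header overhead is 46 bytes versus 10 bytes. The payload sizes and the helper name `payload_efficiency` are illustrative assumptions, and the model deliberately ignores preamble and inter-packet gap.

```python
def payload_efficiency(payload_bytes: int, header_overhead_bytes: int) -> float:
    """Fraction of transmitted bytes that is payload (header overhead only)."""
    return payload_bytes / (payload_bytes + header_overhead_bytes)


# Illustrative payload sizes; small messages benefit the most.
for payload in (64, 256, 1024):
    standard = payload_efficiency(payload, 46)   # 46-byte header overhead
    optimized = payload_efficiency(payload, 10)  # 10-byte optimized header
    print(f"{payload:>5} B payload: {standard:.1%} -> {optimized:.1%}")
```

In this simplified model a 64-byte payload goes from about 58% to about 86% efficiency, while the gain shrinks as payloads grow, which is consistent with the emphasis on small, latency-sensitive messages in HPC and AI scale-up traffic.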

Tomahawk Ultra incorporates lossless fabric technology that eliminates packet drops during high-volume data transfer. With LLR, the switch detects link errors using Forward Error Correction and automatically retransmits packets, avoiding drops at the wire level. At the same time, CBFC prevents the buffer overflows that traditionally caused packet loss. Together, these mechanisms create a truly lossless Ethernet fabric, delivering the level of reliability demanded by today’s most data-intensive workloads.
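The two mechanisms can be illustrated with a toy model. This is a conceptual sketch only, not Broadcom's implementation or the wire protocol: the class `CreditLink`, the function `send_with_link_retry`, and the way corruption is simulated are all hypothetical names and simplifications introduced here.

```python
from collections import deque


class CreditLink:
    """Toy credit-based flow control: the sender transmits only while it holds credits."""

    def __init__(self, receiver_buffer_slots: int):
        self.capacity = receiver_buffer_slots
        self.credits = receiver_buffer_slots  # one credit per free receiver slot
        self.rx_buffer = deque()

    def try_send(self, packet) -> bool:
        if self.credits == 0:
            return False  # sender stalls instead of overflowing the receiver
        self.credits -= 1
        self.rx_buffer.append(packet)
        return True

    def drain_one(self):
        packet = self.rx_buffer.popleft()
        self.credits += 1  # the freed slot is returned to the sender as a credit
        return packet


def send_with_link_retry(packets, corrupt_first_attempt):
    """Toy link-layer retry: replay a frame on the same link until it arrives intact.

    corrupt_first_attempt is a set of sequence numbers whose first
    transmission is treated as corrupted (simulated link error).
    """
    delivered, transmissions = [], 0
    for seq, packet in enumerate(packets):
        attempt = 0
        while True:
            transmissions += 1
            corrupted = seq in corrupt_first_attempt and attempt == 0
            if not corrupted:
                delivered.append(packet)
                break
            attempt += 1  # error detected; replay from the sender's retry buffer
    return delivered, transmissions


link = CreditLink(receiver_buffer_slots=2)
results = [link.try_send(p) for p in ("a", "b", "c")]  # third send has no credit
delivered, transmissions = send_with_link_retry(["p0", "p1", "p2"], {1})
```

In the toy model the third send is refused rather than dropped, and the corrupted frame costs one extra transmission on the link instead of surfacing as a loss to the endpoints.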

Tomahawk Ultra also accelerates performance through In-Network Collectives, solving one of the most persistent bottlenecks in AI and machine learning workloads. Rather than burdening XPUs with collective operations like AllReduce, Broadcast, or AllGather, Tomahawk Ultra executes these directly within the switch chip. This can reduce job completion time and improve utilization of expensive compute resources. Importantly, this capability is endpoint-agnostic, enabling immediate adoption across a wide range of system architectures and vendor ecosystems.
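Conceptually, an in-network AllReduce means each endpoint sends its vector to the switch once, the switch combines the vectors, and every endpoint receives the combined result. The sketch below models only that data flow with a sum reduction; the function name `switch_allreduce` is hypothetical and nothing here reflects how the switch chip actually implements collectives.

```python
def switch_allreduce(contributions):
    """Sum equal-length vectors element-wise 'in the switch'.

    Returns one copy of the reduced vector per contributing endpoint.
    """
    if not contributions:
        return []
    length = len(contributions[0])
    if any(len(vector) != length for vector in contributions):
        raise ValueError("all endpoints must contribute vectors of the same length")
    # Element-wise sum across endpoints, done once at the switch.
    reduced = [sum(column) for column in zip(*contributions)]
    # Every endpoint receives its own copy of the result.
    return [list(reduced) for _ in contributions]


# Three endpoints (XPUs), each contributing a two-element gradient vector.
outputs = switch_allreduce([[1, 2], [3, 4], [5, 6]])
print(outputs)
```

In this model each endpoint sends one vector and receives one vector, and the reduction work itself is done outside the XPUs, which is the offload described above.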

Designed with innovations in topology-aware routing to support advanced HPC topologies including Dragonfly, Mesh and Torus, Tomahawk Ultra is also compliant with the UEC standard and embraces the openness and rich ecosystem of Ethernet networking.

As part of Broadcom’s Ethernet-forward strategy for AI scale-up, the company has introduced SUE-Lite, an optimized version of the SUE specification tailored for power- and area-sensitive accelerator applications. SUE-Lite retains the key low-latency and lossless characteristics of full SUE, while further reducing the silicon footprint and power consumption of Ethernet interfaces on AI XPUs and CPUs.

This lightweight variant enables easier integration of standards-compliant Ethernet fabrics in AI platforms, promoting broader adoption of Ethernet as the interconnect of choice in scale-up architectures.

Together with the 102.4 Tbps Tomahawk 6, Tomahawk Ultra forms the foundation of a unified Ethernet architecture: enabling scale-up Ethernet for AI, and scale-out Ethernet for HPC and distributed workloads.

Tomahawk Ultra is 100% pin-compatible with Tomahawk 5, ensuring a very fast time-to-market. It is shipping now for deployment in rack-scale AI training clusters and supercomputing environments.