Context engineering is the science of providing LLMs with the correct context to maximize performance. When you work with LLMs, you typically create a system prompt, asking the LLM to perform a certain task. However, when working with LLMs from a programmer’s perspective, there are more elements to consider. You have to determine what other data you can feed your LLM to improve its ability to perform the task you asked it to do.

In this article, I’ll discuss the science of context engineering and how you can apply context engineering techniques to improve your LLM’s performance.

You can also read my articles on Reliability for LLM Applications and Document QA using Multimodal LLMs.

Definition

Before I start, it’s important to define the term context engineering. Context engineering is essentially the science of deciding what to feed into your LLM. This can, for example, be:

- The system prompt, which tells the LLM how to act

- Document data fetched using RAG vector search

- Few-shot examples

- Tools

The closest previous description of this has been the term prompt engineering. However, prompt engineering is a less descriptive term, considering it implies only changing the system prompt you are feeding to the LLM. To get maximum performance out of your LLM, you have to consider all the context you are feeding into it, not only the system prompt.

Motivation

My initial motivation for this article came from reading this Tweet by Andrej Karpathy.

I really agreed with the point Andrej made in this tweet. Prompt engineering is definitely an important science when working with LLMs. However, prompt engineering doesn’t cover everything we input into LLMs. In addition to the system prompt you write, you also have to consider elements such as:

- Which data should you insert into your prompt

- How do you fetch that data

- How to only provide relevant information to the LLM

- Etc.

I’ll discuss all of these points throughout this article.

API vs Console usage

One important distinction to clarify is whether you are using LLMs through an API (calling them with code) or via the console (for example, via the ChatGPT website or application). Context engineering is definitely important when working with LLMs through the console; however, my focus in this article will be on API usage. The reason is that when using an API, you have more options for dynamically changing the context you are feeding the LLM. For example, you can do RAG, where you first perform a vector search and feed the LLM only the most important bits of information, rather than the entire database.

These dynamic changes aren’t available in the same way when interacting with LLMs through the console; thus, I’ll focus on using LLMs through an API.

Context engineering techniques

Zero-shot prompting

Zero-shot prompting is the baseline for context engineering. Doing a task zero-shot means the LLM performs the task without having seen any examples of it in the prompt. You’re essentially only providing a task description as context for the LLM. For example, you provide an LLM with a long text and ask it to classify the text into class A or B, according to some definition of the classes. The context (prompt) you are feeding the LLM could look something like this:

You are an expert text classifier, tasked with classifying texts into

class A or class B.

- Class A: The text contains a positive sentiment

- Class B: The text contains a negative sentiment

Classify the text: {text}

Depending on the task, this could work very well. LLMs are generalists and are able to perform most simple text-based tasks. Classifying a text into one of two classes will usually be a simple task, and zero-shot prompting will thus usually work quite well.
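Here is a minimal sketch of how such a zero-shot prompt could be assembled in code before it is sent through an API. The helper name `build_zero_shot_messages` and the system/user message layout are assumptions for illustration, not a specific provider’s SDK.

```python
# Hypothetical helper: builds a zero-shot classification request.
# The "role"/"content" message layout is an assumption; adapt it to your API.

SYSTEM_PROMPT = (
    "You are an expert text classifier, tasked with classifying texts into "
    "class A or class B.\n"
    "- Class A: The text contains a positive sentiment\n"
    "- Class B: The text contains a negative sentiment"
)


def build_zero_shot_messages(text: str) -> list[dict[str, str]]:
    """Return the task description plus the text to classify, with no examples."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": f"Classify the text: {text}"},
    ]


messages = build_zero_shot_messages("I loved this movie!")
```

The resulting list can then be passed to whichever chat-style API you use.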

Few-shot prompting

This infographic highlights how to perform few-shot prompting:

The follow-up from zero-shot prompting is few-shot prompting. With few-shot prompting, you provide the LLM with a prompt similar to the one above, but you also provide it with examples of the task it will perform. This added context will help the LLM improve at performing the task. Following up on the prompt above, a few-shot prompt could look like:

You are an expert text classifier, tasked with classifying texts into

class A or class B.

- Class A: The text contains a positive sentiment

- Class B: The text contains a negative sentiment

{text 1} -> Class A

{text 2} -> Class B

Classify the text: {text}

You can see I’ve provided the model some examples wrapped in

Few-shot prompting works well because you are providing the model with examples of the task you are asking it to perform. This usually increases performance.
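A few-shot prompt like the one above could be built in code roughly as follows. This is only a sketch: the function name `build_few_shot_prompt` and the example texts are made up for illustration.

```python
# Hypothetical helper: appends labeled examples to the task description.

TASK_DESCRIPTION = (
    "You are an expert text classifier, tasked with classifying texts into "
    "class A or class B.\n"
    "- Class A: The text contains a positive sentiment\n"
    "- Class B: The text contains a negative sentiment"
)


def build_few_shot_prompt(examples: list[tuple[str, str]], text: str) -> str:
    """Combine the task description, labeled examples, and the new text."""
    example_lines = [
        f"{example_text} -> Class {label}" for example_text, label in examples
    ]
    parts = [TASK_DESCRIPTION, "", *example_lines, "", f"Classify the text: {text}"]
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    [("What a great day", "A"), ("This is awful", "B")],
    "I really enjoyed it",
)
```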

You can imagine this works well on humans as well. If you ask a human to do a task they’ve never done before, just by describing the task, they might perform decently (of course, depending on the difficulty of the task). However, if you also provide the human with examples, their performance will usually improve.

Overall, I find it useful to think about LLM prompts as if I’m asking a human to perform a task. Imagine that instead of prompting an LLM, you simply provide the text to a human, and you ask yourself the question:

Given this prompt, and no other context, will the human be able to perform the task?

If the answer is no, you should work on clarifying and improving your prompt.

I also want to mention dynamic few-shot prompting, considering it’s a technique I’ve had a lot of success with. Traditionally, with few-shot prompting, you have a fixed list of examples you feed into every prompt. However, you can often achieve higher performance using dynamic few-shot prompting.

Dynamic few-shot prompting means selecting the few-shot examples dynamically when creating the prompt for a task. For example, suppose you are asked to classify a text into classes A and B, and you already have a list of 200 texts and their corresponding labels. You can then perform a similarity search between the new text you are classifying and the example texts you already have: measure the vector similarity between the texts and choose only the most similar texts (out of the 200) to feed into your prompt as context. This way, you’re providing the model with more relevant examples of how to perform the task.
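The selection step can be sketched as below. Note the assumption: `embed` is a toy word-count vector standing in for a real embedding model, and `select_examples` is a hypothetical helper name. With a real setup you would swap in embeddings from your model of choice.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (word counts); a stand-in for a real embedding model."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def select_examples(
    new_text: str, labeled_examples: list[tuple[str, str]], k: int = 2
) -> list[tuple[str, str]]:
    """Pick the k labeled examples most similar to the text being classified."""
    query = embed(new_text)
    ranked = sorted(
        labeled_examples,
        key=lambda example: cosine_similarity(query, embed(example[0])),
        reverse=True,
    )
    return ranked[:k]


labeled_examples = [
    ("the food was great", "A"),
    ("the service was terrible", "B"),
    ("my flight was delayed again", "B"),
    ("what a lovely sunny day", "A"),
]
selected = select_examples("the food was terrible", labeled_examples, k=2)
```

The selected examples are then inserted into the prompt in place of the fixed example list.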

RAG

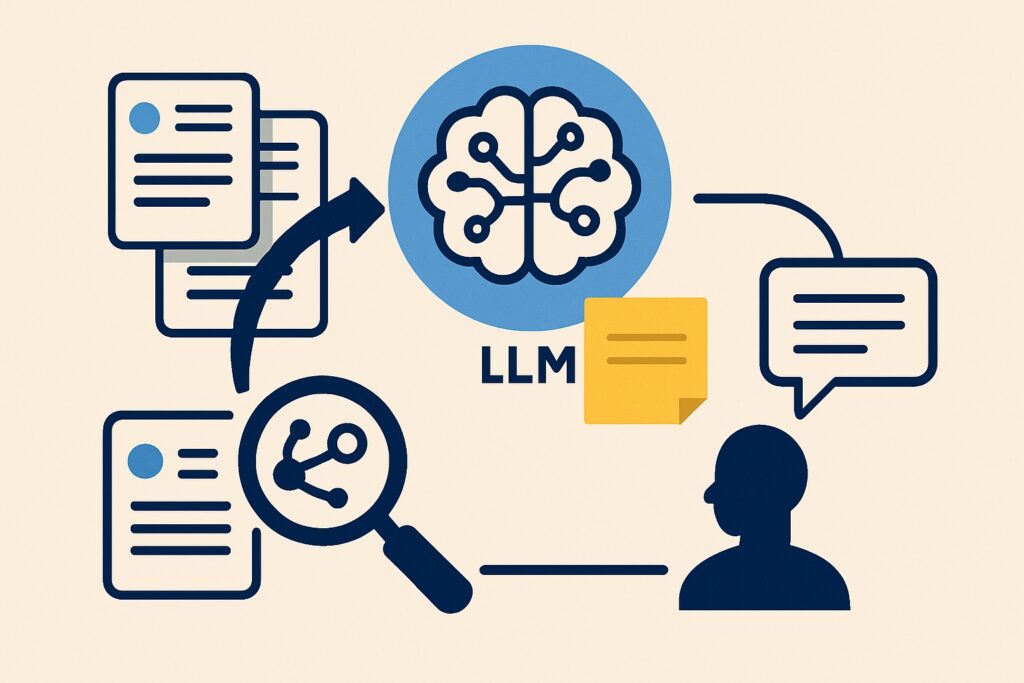

Retrieval augmented generation is a well-known technique for increasing the knowledge of LLMs. Assume you already have a database consisting of thousands of documents. You now receive a question from a user, and have to answer it, given the knowledge inside your database.

Unfortunately, you can’t feed the entire database into the LLM. Even though we have LLMs such as Llama 4 Scout with a 10-million-token context window, databases are usually much larger. You therefore have to find the most relevant information in the database to feed into your LLM. RAG does this similarly to dynamic few-shot prompting:

- Perform a vector search

- Find the most similar documents to the user question (most similar documents are assumed to be most relevant)

- Ask the LLM to answer the question, given the most relevant documents

By performing RAG, you are doing context engineering by only providing the LLM with the most relevant data for performing its task. To improve the performance of the LLM, you can work on the context engineering by improving your RAG search. This can, for example, be done by improving the search to find only the most relevant documents.
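The three RAG steps above can be sketched as follows. This is a simplified illustration under stated assumptions: `embed` is a toy word-count vector instead of a real embedding model, the documents live in a plain list instead of a vector database, and `retrieve` and `build_rag_prompt` are hypothetical helper names.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (word counts without basic punctuation); not a real model."""
    cleaned = text.lower().replace("?", "").replace(".", "")
    return Counter(cleaned.split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Steps 1 and 2: score every document against the question, keep the top k."""
    query_vector = embed(question)
    scored = [(cosine_similarity(query_vector, embed(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_rag_prompt(question: str, documents: list[str], k: int = 2) -> str:
    """Step 3: ask the LLM to answer using only the retrieved documents."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer the question using only the documents below.\n"
        f"Documents:\n{context}\n"
        f"Question: {question}"
    )


documents = [
    "The office is closed on public holidays.",
    "Invoices are sent on the first day of each month.",
    "The cafeteria serves lunch from 11 to 14.",
]
rag_prompt = build_rag_prompt("When are invoices sent?", documents, k=1)
```

Only the invoice document ends up in the prompt here; the other two are left out of the context.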

You can read more about RAG in my article about creating a RAG system for your personal data:

Tools (MCP)

You can also provide the LLM with tools to call, which is an important part of context engineering, especially now that we see the rise of AI agents. Tool calling today is often done using the Model Context Protocol (MCP), a protocol introduced by Anthropic.

AI agents are LLMs capable of calling tools and thus performing actions. An example of this could be a weather agent. If you ask an LLM without access to tools about the weather in New York, it will not be able to provide an accurate response. The reason is that information about the weather needs to be fetched in real time. To do this, you can, for example, give the LLM a tool such as:

@tool
def get_weather(city):
    # code to retrieve the current weather for a city
    return weather

If you give the LLM access to this tool and ask it about the weather, it can then look up the weather for a city and give you an accurate response.
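To make the mechanics more concrete, here is a small runnable sketch of what happens around such a tool. Everything in it is an assumption for illustration: the `tool` decorator is a hand-written registry (not the decorator from any particular framework), the JSON tool-call format is invented, and the weather data is hard-coded. Real frameworks and MCP servers define and transport tool calls in their own way.

```python
import json

# Hypothetical tool registry, keyed by function name.
TOOLS = {}


def tool(func):
    """Register a function so the application can run it when the model asks."""
    TOOLS[func.__name__] = func
    return func


@tool
def get_weather(city: str) -> str:
    # Placeholder data; a real tool would call a weather service here.
    fake_weather = {"New York": "cloudy, 18 degrees Celsius"}
    return fake_weather.get(city, "unknown")


def run_tool_call(tool_call_json: str) -> str:
    """Execute a tool call that the model emitted as JSON (format assumed)."""
    call = json.loads(tool_call_json)
    func = TOOLS[call["name"]]
    return func(**call["arguments"])


# Pretend the model answered with this tool call instead of plain text.
model_output = '{"name": "get_weather", "arguments": {"city": "New York"}}'
tool_result = run_tool_call(model_output)
```

The tool result is then fed back to the LLM as additional context, so it can phrase the final answer.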

Providing tools for LLMs is incredibly important, as it significantly enhances the abilities of the LLM. Other examples of tools are:

- Search the internet

- A calculator

- Search via Twitter API

Topics to consider

In this section, I make a few notes on what you should consider when creating the context to feed into your LLM.

Utilization of context length

The context length of an LLM is an important consideration. As of July 2025, you can feed most frontier LLMs with over 100,000 input tokens. This provides you with a lot of options for how to utilize this context. You have to consider the tradeoff between:

- Including a lot of information in a prompt, thus risking some of the information getting lost in the context

- Missing some important information in the prompt, thus risking the LLM not having the required context to perform a specific task

Usually, the only way to figure out the balance is to test your LLM’s performance. For example, with a classification task, you can check the accuracy given different prompts.
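Such a comparison could look roughly like this. The sketch assumes a small labeled test set and uses `fake_llm_classify`, a keyword-based stub, in place of a real LLM call so that it runs on its own; the template names and texts are made up.

```python
from typing import Callable


def accuracy(
    classify: Callable[[str, str], str],
    prompt_template: str,
    labeled_texts: list[tuple[str, str]],
) -> float:
    """Fraction of texts for which the predicted label matches the true label."""
    correct = sum(
        1 for text, label in labeled_texts if classify(prompt_template, text) == label
    )
    return correct / len(labeled_texts)


def fake_llm_classify(prompt_template: str, text: str) -> str:
    """Stub for an LLM call: it only finds class B if the prompt defines it."""
    prompt = prompt_template.format(text=text)
    if "negative" in prompt and "terrible" in text:
        return "B"
    return "A"


labeled_texts = [
    ("great product", "A"),
    ("terrible support", "B"),
    ("really great", "A"),
    ("terrible delay", "B"),
]
templates = {
    "short": "Classify: {text}",
    "detailed": "Class A is positive, class B is negative. Classify: {text}",
}
scores = {
    name: accuracy(fake_llm_classify, template, labeled_texts)
    for name, template in templates.items()
}
```

With a real model, you would replace the stub with an API call and run the same loop over each candidate prompt.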

If I discover the context to be too long for the LLM to work effectively, I sometimes split a task into several prompts. For example, one prompt summarizes a text, and a second prompt classifies the text summary. This can help the LLM utilize its context effectively and thus improve performance.
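A minimal sketch of this two-step setup is shown below. The `call_llm` parameter is an assumption: it stands for whatever function sends a prompt to your model and returns its reply. Here it is replaced by a stub so the example runs without API access.

```python
from typing import Callable


def summarize_then_classify(text: str, call_llm: Callable[[str], str]) -> str:
    """First prompt shortens the text, second prompt classifies the summary."""
    summary = call_llm(f"Summarize the following text in two sentences:\n{text}")
    return call_llm(
        "Classify the text into class A (positive) or class B (negative):\n"
        f"{summary}"
    )


def fake_llm(prompt: str) -> str:
    # Stub so the sketch runs without any API access.
    if prompt.startswith("Summarize"):
        return "The customer was happy."
    return "A" if "happy" in prompt else "B"


calls: list[str] = []


def recording_llm(prompt: str) -> str:
    """Wrapper that records each prompt, to show what the second call receives."""
    calls.append(prompt)
    return fake_llm(prompt)


label = summarize_then_classify("A very long review ...", recording_llm)
```

The second prompt only contains the summary, not the original long text, which is the point of the split.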

Furthermore, providing too much context to the model can have a significant downside, as I describe in the next section:

Context rot

Last week, I read an interesting article about context rot. The article was about the fact that increasing the context length lowers LLM performance, even though the task difficulty doesn’t increase. This implies that:

Providing an LLM with irrelevant information will decrease its ability to perform tasks successfully, even when task difficulty doesn’t increase.

The point here is essentially that you should only provide relevant information to your LLM. Providing other information decreases LLM performance (i.e., performance is not neutral to input length).

Conclusion

In this article, I’ve discussed the topic of context engineering, which is the process of providing an LLM with the right context to perform its task effectively. There are a lot of techniques you can utilize to fill up the context, such as few-shot prompting, RAG, and tools. These are all powerful techniques you can use to significantly improve an LLM’s ability to perform a task effectively. Furthermore, you also have to consider the fact that providing an LLM with too much context has downsides. Increasing the number of input tokens can reduce performance, as you can read about in the article about context rot.
