TL;DR

- Small language models (SLMs) are optimized generative AI solutions that offer cheaper and faster alternatives to massive AI systems, like ChatGPT.

- Enterprises adopt SLMs as their entry point to generative AI due to lower training costs, reduced infrastructure requirements, and quicker ROI. These models also provide enhanced data security and more reliable outputs.

- Small language models deliver targeted value across key business functions, from 24/7 customer support to offline multilingual assistance, smart ticket prioritization, secure document processing, and other similar tasks.

Everyone wants AI, but few know where to start.

Enterprises face analysis paralysis when implementing AI, and massive, expensive models feel like overkill for routine tasks. Why deploy a $10 million solution just to answer FAQs or process documents? The truth is, most businesses don’t need boundless AI creativity; they need focused, reliable, and cost-efficient automation.

That’s where small language models (SLMs) shine. They deliver quick wins, including faster deployment, tighter data control, and measurable return on investment (ROI), without the complexity or risk of oversized AI.

Let’s uncover what SLMs are, how they can help your business, and how to proceed with implementation.

What are small language models?

So, what does SLM mean?

Small language models are optimized generative AI (Gen AI) tools that deliver fast, cost-efficient results for specific business tasks, such as customer service or document processing, without the complexity of massive systems like ChatGPT. SLMs run affordably on your existing infrastructure, allowing you to maintain security and control while offering focused performance where you need it most.

How SLMs work, and what makes them small

Small language models are designed to deliver high-performance results with minimal resources. Their compact size comes from these strategic optimizations:

- Focused training data. Small language models train on curated datasets from your business domain, such as industry-specific content and internal documents, rather than the entire internet. This targeted approach eliminates irrelevant noise and sharpens performance on your exact use cases.

- Optimized architecture. Each SLM is engineered for a specific business function. The model has just enough layers and connections to excel at its designated tasks, which can help it match or outperform bulkier alternatives on those tasks.

- Knowledge distillation. Small language models capture the essence of larger AI systems through a “teacher-student” learning process. They take only the most impactful patterns from expansive LLMs, preserving what matters for their designated tasks.

- Dedicated training techniques. Two main techniques help SLMs stay focused and compact:

  - Pruning removes unnecessary parts of the model. It systematically trims underutilized connections from the AI’s neural network. Much like pruning a fruit tree to boost yield, this process strengthens the model’s core capabilities while removing wasteful elements.

  - Quantization simplifies calculations, making the model run faster without demanding expensive, powerful hardware. It converts the model’s mathematical operations so that they use whole numbers instead of fractions.
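To make pruning and quantization more concrete, here is a minimal Python sketch on a toy list of weights. It is an illustration under simplifying assumptions, not how any particular SLM is built: the function names (`prune_by_magnitude`, `quantize_int8`, `dequantize`) and the sample weights are hypothetical, pruning is done by plain magnitude, and quantization uses one shared scale that maps values to whole numbers between -127 and 127. Real toolkits typically work per layer or per channel and often fine-tune the model afterwards.

```python
def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest magnitude."""
    n_prune = int(len(weights) * fraction)
    # Indices ordered from the smallest to the largest absolute value
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


# Toy weights for illustration only (not taken from a real model)
weights = [0.82, -0.03, 0.41, 0.002, -1.27, 0.09]

pruned = prune_by_magnitude(weights, fraction=0.5)
quantized, scale = quantize_int8(pruned)
restored = dequantize(quantized, scale)

print("pruned:   ", pruned)
print("quantized:", quantized)
print("restored: ", restored)
```

In this toy run, half of the weights are zeroed out and the rest are stored as small integers plus a single scale factor, which is where the memory and speed savings come from. The restored values differ from the pruned ones only by a small rounding error.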

Small language model examples

Established tech giants like Microsoft, Google, IBM, and Meta have built their own small language models. One SLM example is DistilBERT. This model is based on Google’s BERT foundation model. DistilBERT is 40% smaller and 60% faster than its parent model while retaining 97% of its language understanding capabilities.

Other small language model examples include:

- Gemma is Google’s family of lightweight open models, built on the same research and technology as Gemini

- GPT-4o mini is a distilled model of GPT-4o

- Phi is an SLM suite from Microsoft that contains several small language models

- Granite is IBM’s large language model series that also contains domain-specific SLMs

SLM vs. LLM

You probably hear about large language models (LLMs) more often than small language models. So, how are they different? And when should you use each one?

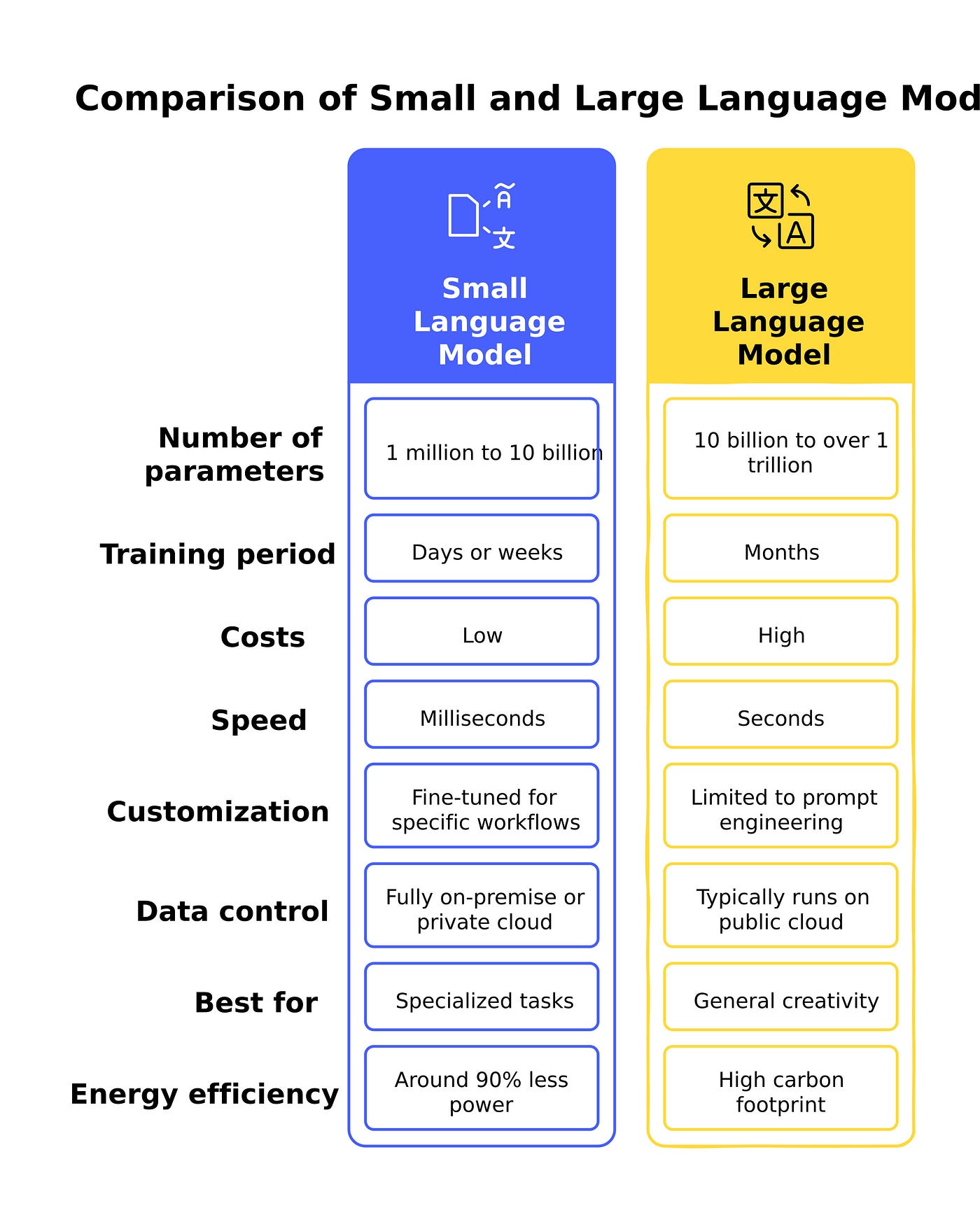

As presented in the table below, LLMs are much larger and pricier than SLMs. They’re expensive to train and use, and their carbon footprint is very high. By one widely cited estimate, a single ChatGPT query consumes as much energy as ten Google searches.

Moreover, LLMs have a history of exposing companies to embarrassing data leaks. For instance, Samsung prohibited employees from using ChatGPT after staff exposed the company’s internal source code by submitting it to the chatbot.

An LLM is a Swiss Army knife: versatile but bulky. An SLM is a scalpel: smaller, sharper, and perfect for precise jobs.

The table below presents an SLM vs. LLM comparison.

Is one language model better than the other? The answer is no. It all depends on your business needs. SLMs allow you to score quick wins. They’re faster, cheaper to deploy and maintain, and easier to control. Large language models, on the other hand, enable you to scale your business when your use cases justify it. But if companies use LLMs for every task that requires Gen AI, they’re running a supercomputer where a workstation will do.

Why use small language models in business?

Many forward-thinking companies are adopting small language models as their first step into generative AI. These models align well with business needs for efficiency, security, and measurable results. Decision-makers choose SLMs because of:

- Lower entry barriers. Small language models require minimal infrastructure, as they can run on existing company hardware. This reduces or eliminates the need for costly GPU clusters or cloud fees.

- Faster ROI. With deployment timelines measured in weeks rather than months, SLMs deliver tangible value quickly. Their lean architecture also enables rapid iterations based on real-world feedback.

- Data privacy and compliance. Unlike cloud-based LLMs, small language models can operate entirely on-premises or in private cloud environments, keeping sensitive data within corporate control and simplifying regulatory compliance.

- Task-specific optimization. Trained for focused use cases, SLMs can outperform general-purpose models in accuracy on those tasks, as they don’t carry irrelevant capabilities that could compromise performance.

- Future-proofing. Starting with small language models builds internal AI expertise without overcommitment. As needs grow, these models can be augmented or integrated with larger systems.

- Reduced hallucination. Their narrow training scope makes small language models less prone to producing false information.

When should you use small language models?

SLMs offer numerous tangible benefits, and many companies prefer to use them as their gateway to generative AI. But there are scenarios where small language models are not a good fit. For instance, a task that requires creativity and multidisciplinary knowledge will benefit more from LLMs, especially if the budget allows it.

If you still have doubts about whether an SLM is suitable for the task at hand, consider the image below.

Key small language model use cases

SLMs are the ideal solution when businesses need cost-effective AI for specialized tasks where precision, data control, and rapid deployment matter most. Here are five use cases where small language models are a great fit:

- Customer support automation. SLMs handle frequently asked questions, ticket routing, and routine customer interactions across email, chat, and voice channels. They reduce the workload on human staff while responding to customers 24/7.

- Internal knowledge base assistance. Trained on company documentation, policies, and procedures, small language models serve as on-demand assistants for employees. They provide quick access to accurate information for HR, IT, and other departments.

- Support ticket classification and prioritization. Unlike generic models, small language models fine-tuned on historical support tickets can categorize and prioritize issues more effectively. They make sure that critical tickets are processed promptly, reducing response times and increasing user satisfaction.

- Multilingual support in resource-constrained environments. SLMs enable basic translation where cloud-based AI may be impractical, such as offline or low-connectivity settings like manufacturing sites or remote offices.

- Regulatory document processing. Industries with strict compliance requirements use small language models to review contracts, extract key clauses, and generate reports. Their ability to operate on-premises makes them ideal for handling sensitive legal and financial documentation.

Real-life examples of companies using SLMs

Forward-thinking enterprises across different sectors are already experimenting with small language models and seeing results. Take a look at these examples for inspiration:

Rockwell Automation

This US-based industrial automation leader deployed Microsoft’s Phi-3 small language model to give machine operators instant access to manufacturing expertise. By querying the model in natural language, technicians quickly troubleshoot equipment and access procedural knowledge, all without leaving their workstations.

Cerence Inc.

Cerence Inc., a software development company specializing in AI-assisted interaction technologies for the automotive sector, has recently announced CaLLM Edge. It is a small language model embedded into Cerence’s automotive software that drivers can access without cloud connectivity. It can react to a driver’s commands, search for places, and assist with navigation.

Bayer

This life science giant built its own small language model for agriculture, E.L.Y. This SLM can answer difficult agronomic questions and help farmers make decisions in real time. Many agricultural professionals already use E.L.Y. in their daily tasks with tangible productivity gains. They report saving up to four hours per week and achieving a 40% improvement in decision accuracy.

Epic Systems

Epic Systems, a major healthcare software provider, reports adopting Phi-3 in its patient support system. This SLM operates on premises, keeping sensitive health information secure and supporting HIPAA compliance.

How to adopt small language models: a step-by-step guide for enterprises

To reiterate, for enterprises looking to harness AI without excessive complexity or cost, SLMs provide a practical, results-driven pathway. This section offers a strategic framework for successful SLM adoption, from initial assessment to organization-wide scaling.

Step 1: Align AI strategy with business value

Before diving into implementation, align your AI strategy with clear business goals.

- Identify high-impact use cases. Focus on repetitive, rules-based tasks where specialized AI excels, such as customer support ticket routing, contract clause extraction, and HR policy queries. Avoid over-engineering; start with processes that have measurable inefficiencies.

- Evaluate data readiness. SLMs require clean, structured datasets. Review the quality and accessibility of your data, such as support tickets and other internal content. If your knowledge base is fragmented, prioritize cleanup before model training. If your company lacks internal expertise, consider hiring an external data consultant to help you craft an effective data strategy. This investment will pay off in the future.

- Secure stakeholder buy-in. Engage department heads early to identify pain points and define success metrics.

Step 2: Pilot strategically

A focused pilot minimizes risk while demonstrating early ROI.

- Launch in a controlled environment. Deploy a small language model for a single workflow, like automating employee onboarding questions, where errors are low-stakes but savings are tangible. Use pre-trained models, such as Microsoft Phi-3 and Mistral 7B, to accelerate deployment.

- Prioritize compliance and security. Opt for private SLM deployments to keep sensitive data in-house. Involve legal and compliance teams from the start to address GDPR, HIPAA, and AI transparency requirements.

- Set clear KPIs. Track measurable metrics like query resolution speed, cost per interaction, and user satisfaction.
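As a rough illustration of the KPI tracking described above, the following Python sketch computes resolution speed, cost per interaction, and user satisfaction from a small interaction log. The `Interaction` schema, the `pilot_kpis` helper, and the sample values are all hypothetical; a real pilot would read these fields from your own ticketing or analytics system.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Interaction:
    """One logged pilot interaction (hypothetical schema)."""
    resolution_seconds: float
    cost_usd: float
    satisfaction: int  # survey score from 1 (poor) to 5 (great)
    resolved: bool     # True if the SLM answered without human help


def pilot_kpis(interactions):
    """Summarize the pilot metrics from a list of logged interactions."""
    if not interactions:
        raise ValueError("need at least one interaction")
    resolved = [i for i in interactions if i.resolved]
    return {
        "resolution_rate": len(resolved) / len(interactions),
        # Speed is averaged only over queries the SLM resolved itself
        "avg_resolution_seconds": (
            mean(i.resolution_seconds for i in resolved) if resolved else None
        ),
        "cost_per_interaction_usd": mean(i.cost_usd for i in interactions),
        "avg_satisfaction": mean(i.satisfaction for i in interactions),
    }


# Made-up sample data for illustration only
log = [
    Interaction(30.0, 0.02, 5, True),
    Interaction(50.0, 0.02, 4, True),
    Interaction(120.0, 0.05, 2, False),
    Interaction(40.0, 0.03, 5, True),
]
kpis = pilot_kpis(log)
for name, value in kpis.items():
    print(f"{name}: {value}")
```

Agreeing on these definitions before the pilot starts (for example, whether unresolved queries count toward average resolution time) makes the results easier to compare against the pre-pilot baseline.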

You can also begin with AI proof-of-concept (PoC) development. It allows you to validate your hypothesis on an even smaller scale. You can find more information in our guide on how AI PoC can help you succeed.

Step 3: Scale strategically

With pilot success confirmed, expand small language model adoption systematically.

- Expand to adjacent functions. Once validated in one domain, apply the model to other related tasks.

- Build hybrid AI systems. Create an AI system where different AI-powered tools cooperate. If your small language model can accomplish 80% of the tasks, reroute the remaining traffic to cloud-based LLMs or other AI tools.

- Support your employees. Train teams to fine-tune prompts, update datasets, and monitor outputs. Empowering non-technical staff with no-code tools or dashboards accelerates adoption across departments.
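The hybrid setup mentioned above can be sketched as a simple router: the SLM answers first, and a query is escalated to a larger model only when the SLM is not confident enough. This is a minimal Python sketch under stated assumptions. The names `SlmResult`, `route`, `toy_slm`, and `toy_llm` are hypothetical stand-ins, the confidence score is assumed to come from your own SLM wrapper, and the 0.8 threshold is an arbitrary example value, not a recommendation.

```python
from typing import Callable, NamedTuple, Tuple


class SlmResult(NamedTuple):
    answer: str
    confidence: float  # 0.0 to 1.0, assumed to be reported by the SLM wrapper


def route(
    query: str,
    slm: Callable[[str], SlmResult],
    llm: Callable[[str], str],
    threshold: float = 0.8,
) -> Tuple[str, str]:
    """Answer with the SLM when it is confident, otherwise escalate to the LLM."""
    result = slm(query)
    if result.confidence >= threshold:
        return "slm", result.answer
    return "llm", llm(query)


def toy_slm(query: str) -> SlmResult:
    """Stand-in for a fine-tuned SLM: it only knows one FAQ entry."""
    faq = {"reset password": "Use the self-service portal."}
    for key, answer in faq.items():
        if key in query.lower():
            return SlmResult(answer, 0.95)
    return SlmResult("", 0.1)


def toy_llm(query: str) -> str:
    """Stand-in for a call to a cloud-hosted LLM."""
    return f"[escalated to LLM] {query}"


routine = route("How do I reset password?", toy_slm, toy_llm)
complex_case = route("Draft a memo on our merger options", toy_slm, toy_llm)
print(routine)
print(complex_case)
```

Logging which path each query takes also shows what share of traffic the SLM really handles, which is useful when deciding whether to retrain it or widen its scope.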

Step 4: Optimize for lasting impact

Treat your SLM deployment as a living system, not a one-off project.

- Monitor performance rigorously. Track error rates, user feedback, and cost efficiency. Use A/B testing to compare small language model outputs to human or LLM benchmarks.

- Retrain with new datasets. Update models regularly with new documents, terminology, support tickets, or any other relevant data. This helps prevent “concept drift” by keeping the SLM aligned with evolving business language.

- Elicit feedback. Encourage employees and customers to flag inaccuracies or suggest model improvements.
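One simple way to act on the monitoring and retraining advice above is to keep a rolling error rate and flag the model for review when it climbs past an agreed limit. The Python sketch below is an assumption-laden illustration: the `ErrorRateMonitor` class, the window size, and the baseline and tolerance values are hypothetical, and "error" here means whatever your team decides to count, such as an answer that a user flagged as wrong.

```python
from collections import deque


class ErrorRateMonitor:
    """Track the error rate over the most recent interactions."""

    def __init__(self, window=100, baseline=0.05, tolerance=0.05):
        self.outcomes = deque(maxlen=window)  # oldest entries drop off automatically
        self.baseline = baseline              # error rate measured during the pilot
        self.tolerance = tolerance            # allowed increase before raising a flag

    def record(self, was_error):
        self.outcomes.append(1 if was_error else 0)

    @property
    def error_rate(self):
        if not self.outcomes:
            return 0.0
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        # Only judge once the window is full, to avoid reacting to a few samples
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.error_rate > self.baseline + self.tolerance


monitor = ErrorRateMonitor(window=10, baseline=0.1, tolerance=0.1)

# First ten interactions: one flagged error, still within tolerance
for flagged in [False] * 9 + [True]:
    monitor.record(flagged)
healthy = monitor.needs_retraining()

# Three more flagged errors push the rolling rate above the limit
for _ in range(3):
    monitor.record(True)
drifted = monitor.needs_retraining()

print(healthy, drifted, monitor.error_rate)
```

A flag like this is only a trigger for human review; whether to retrain, adjust prompts, or update the source documents still depends on why the errors increased.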

Conclusion: smart AI starts small

Small language models represent the most pragmatic entry point for enterprises exploring generative AI. As this article shows, SLMs deliver targeted, cost-effective, and secure AI capabilities without the overhead of massive language models.

For the adoption process to go smoothly, it’s important to team up with a reliable generative AI development partner.

What makes ITRex your ideal AI partner?

ITRex is an AI-native company that uses the technology to speed up production and delivery cycles. We pride ourselves on using AI to enhance our team’s efficiency while maintaining client confidentiality.

We differentiate ourselves via:

- Offering a team of professionals with diverse expertise. We have Gen AI consultants, software engineers, data governance specialists, and an innovative R&D team.

- Building transparent, auditable AI models. With ITRex, you won’t get stuck with a “black box” solution. We develop explainable AI models that justify their output and comply with regulatory standards like GDPR and HIPAA.

- Delivering future-ready architecture. We design small language models that seamlessly integrate with LLMs or multimodal AI when your needs evolve, with no rip-and-replace required.

Schedule your discovery call today, and we’ll identify your highest-impact opportunities. Next, you’ll receive a tailored proposal with clear timelines, milestones, and a cost breakdown, enabling us to launch your AI project immediately upon approval.

FAQs

- How do small language models differ from large language models?

Small language models are optimized for specific tasks, while large language models handle broad, creative tasks. SLMs don’t need specialized infrastructure, as they run efficiently on existing hardware, while LLMs need a cloud connection or GPU clusters. SLMs offer stronger data control via on-premises deployment, unlike cloud-dependent LLMs. Their focused training reduces hallucinations, making SLMs more reliable for structured workflows like document processing.

- What are the main use cases for small language models?

SLMs excel in repetitive tasks, including but not limited to customer support automation, such as FAQ handling and ticket routing; internal knowledge assistance, like HR and IT queries; and regulatory document review. They’re well suited to multilingual support in offline environments (e.g., manufacturing sites). Industries like healthcare use small language models for HIPAA-compliant patient data processing.

- Is it possible to use both small and large language models together?

Yes, hybrid AI systems combine SLMs for routine tasks with LLMs for complex exceptions. For example, small language models can handle standard customer queries, escalating only nuanced issues to an LLM. This approach balances cost and flexibility.

Ready for an SLM that actually works for your business? Let ITRex design your precision AI model. Get in touch today.

Originally published at https://itrexgroup.com on May 14, 2025.

The post Small language models (SLMs): a Smarter Way to Get Started with Generative AI appeared first on Datafloq.