In recent years, transformer-based models have revolutionized natural language processing. GPT-2, for example, stands out as one of the most widely used models for language generation tasks. Developed by OpenAI, GPT-2 is based on the transformer architecture and excels at generating contextually relevant and coherent text. However, there are always opportunities to improve and extend the capabilities of these models. One such opportunity involves adding a Future Prediction Layer, which leverages hidden states from all layers of the model to improve text generation and prediction. I will be using GPT-2 because of its compact architecture and small file size.

In this article, we'll explore how introducing this additional layer, FutureTransformerEncoder, can improve GPT-2's ability to predict future words or sequences, enhancing its output and performance. We'll also discuss the process of extracting hidden states from all layers of the model and how this approach contributes to more informed and accurate predictions.

Before we dive into the modifications, it's important to understand what hidden states are and why they're useful in transformer-based models like GPT-2.

In a transformer model, each layer processes the input sequence, producing intermediate representations called hidden states. These hidden states capture information from the input and its context at various levels of abstraction, from simple token-level relationships in the earlier layers to more complex semantic structures in the deeper layers. GPT-2, as a deep transformer network, produces a hidden state at each of its layers.

By the end of the model's processing, the hidden state at the final layer holds the most contextually informed representation, which is used to generate the next token in a sequence. However, this final hidden state does not directly expose the richer information captured by earlier layers. The innovation in our approach is to use hidden states from all layers and pass them into a new Future Prediction Layer, thereby improving the model's ability to predict the next token more accurately.
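In the Hugging Face `transformers` library, passing `output_hidden_states=True` makes GPT-2 return these per-layer hidden states alongside its logits. The sketch below uses a tiny, randomly initialised configuration, an assumption made only so it runs without downloading weights. With the real 12-layer `gpt2` checkpoint the tuple has 13 entries of width 768: the embedding output plus one per transformer block. In this implementation the last entry is taken after GPT-2's final layer norm.

```python
import torch
from transformers import GPT2Config, GPT2LMHeadModel

# Tiny random GPT-2 so the example runs offline
# (GPT2LMHeadModel.from_pretrained("gpt2") gives the real 12-layer, 768-dim model)
config = GPT2Config(vocab_size=100, n_positions=64, n_embd=32, n_layer=4, n_head=4)
model = GPT2LMHeadModel(config)
model.eval()

input_ids = torch.randint(0, config.vocab_size, (1, 10))  # (batch, seq_len)
with torch.no_grad():
    outputs = model(input_ids, output_hidden_states=True)

# Embedding output plus one tensor per transformer block
hidden_states = outputs.hidden_states
print(len(hidden_states))       # 5  (n_layer + 1)
print(hidden_states[-1].shape)  # torch.Size([1, 10, 32])
```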

The Future Prediction Layer is an innovative modification designed to enhance the predictive capabilities of transformer-based models such as GPT-2. This layer works by leveraging the hidden states generated by all layers of the GPT-2 model, as opposed to relying only on the final hidden state. By incorporating information from multiple layers, the Future Prediction Layer helps the model make more informed and accurate predictions, which ultimately improves text generation and sequence prediction tasks.

Let's break down how the Future Prediction Layer works in detail:

1. Extracting Hidden States from Each Layer

In a standard transformer architecture like GPT-2, the model processes the input sequence through multiple layers. Each of these layers generates hidden states (also called activations or intermediate representations) that capture different levels of information about the input sequence. These hidden states are representations of the input, but at varying levels of abstraction, depending on which layer they come from.

- Early layers of the transformer model tend to focus on more local token relationships (e.g., syntax or grammar), capturing fine-grained interactions between tokens in the input sequence.

- Deeper layers, on the other hand, capture more abstract, semantic relationships (e.g., meaning, context, discourse structure).

In a typical transformer-based model, only the hidden state from the final layer is used to make predictions about the next token in the sequence. This is because the final hidden state is believed to carry the most contextually informed representation. However, this approach ignores the rich contextual information that may exist in the earlier layers. Hidden states from all layers provide a richer, more nuanced view of the input sequence, as each layer builds on the previous one in its representation of the input.

In our approach, we extract hidden states from all layers of GPT-2 rather than just from the final one. This allows us to use the contextual knowledge from each layer to improve predictions. For example:

- Layer 1 might focus on syntactic details, such as grammar and token-level interactions.

- Layer 6 might begin capturing sentence structure and longer-range dependencies between words.

- Layer 12 might encapsulate more abstract knowledge about the overall meaning of the sentence, including context beyond the sentence itself.

By using all these hidden states, we capture a comprehensive view of the input sequence, enabling the model to make better predictions.

2. Passing Hidden States right into a Transformer Encoder

Once we have extracted the hidden states from every layer, we pass them into the FutureTransformerEncoder, a custom layer designed specifically for this purpose. The FutureTransformerEncoder is essentially another transformer encoder that processes the sequence of hidden states, learns from them, and predicts future tokens or sequences.

- Why an encoder? A transformer encoder is well suited for this task because it is designed to process sequences and build complex, context-sensitive representations. It uses self-attention to capture long-range dependencies in the data, allowing it to focus on the most relevant parts of the input sequence, no matter how far apart the tokens are.

- How does it work? The FutureTransformerEncoder takes the extracted hidden states from GPT-2 and applies its own self-attention mechanism. It learns to prioritize different layers' representations of the input depending on the context and the task at hand. For example, it might give more weight to earlier layers for syntactic information and to deeper layers for semantic understanding.

After processing the hidden states, the FutureTransformerEncoder generates future predictions based on this enriched context. These predictions are essentially the model's informed guesses about the next token or sequence of tokens, taking into account the combined insights from all layers.
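The article does not spell out how the encoder attends across layers, so here is a minimal sketch of one possible design, assuming PyTorch. For every token, the per-layer hidden states are stacked into a short sequence of length L, a standard `nn.TransformerEncoder` applies self-attention across that layer axis, and the result is mean-pooled into one vector per token. The class name `LayerAttentionFusion` and the mean-pooling step are illustrative assumptions, not the author's implementation.

```python
import torch
import torch.nn as nn


class LayerAttentionFusion(nn.Module):
    """Hypothetical sketch: fuse per-layer hidden states with self-attention over layers."""

    def __init__(self, embed_dim: int, num_heads: int, num_encoder_layers: int = 1):
        super().__init__()
        layer = nn.TransformerEncoderLayer(d_model=embed_dim, nhead=num_heads, batch_first=True)
        self.encoder = nn.TransformerEncoder(layer, num_layers=num_encoder_layers)

    def forward(self, hidden_states):
        # hidden_states: sequence of L tensors, each (batch, seq_len, embed_dim)
        stacked = torch.stack(tuple(hidden_states), dim=2)  # (batch, seq_len, L, embed_dim)
        batch, seq_len, num_states, dim = stacked.shape
        # Each token's L layer vectors become one short "sequence" for the encoder
        per_token = stacked.reshape(batch * seq_len, num_states, dim)
        mixed = self.encoder(per_token)  # self-attention across the layer axis only
        fused = mixed.mean(dim=1)        # pool the L layer positions into one vector
        return fused.reshape(batch, seq_len, dim)


# Stand-in tensors shaped like GPT-2 small's 13 hidden states (embeddings + 12 layers)
fusion = LayerAttentionFusion(embed_dim=768, num_heads=12)
fake_states = [torch.randn(2, 7, 768) for _ in range(13)]
print(fusion(fake_states).shape)  # torch.Size([2, 7, 768])
```

Because attention here runs only over the layer axis of a single token, this fusion never mixes in information from later positions, which keeps it compatible with left-to-right next-token prediction.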

3. Improved Predictions

One of the core benefits of this approach is that we're not relying solely on the final layer's hidden state. By incorporating information from all layers of the model, the FutureTransformerEncoder allows the model to learn from a more comprehensive set of features.

- Richer Context: The hidden states from the deeper layers are better at capturing complex, high-level relationships in the text, while the shallower layers capture more localized dependencies. By using both, the model can make predictions that reflect both fine-grained details and the broader context.

- Capturing Nuanced Patterns: Each layer has different strengths in how it understands the input. Some layers may be more sensitive to syntax (e.g., the placement of commas or the structure of phrases), while others are better at understanding long-range dependencies or abstract meaning. By aggregating information from all these layers, the Future Prediction Layer can capture a wider range of patterns, which improves its accuracy in generating text or predicting future tokens.

- More Robust Predictions: The FutureTransformerEncoder can combine this rich information in a way that improves the robustness of the model's predictions. For instance, if one layer captures a potential ambiguity in the text, another layer may help disambiguate it based on broader context, leading to a more accurate prediction of the next word.
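A lighter-weight way to aggregate layers, swapped in here for comparison rather than taken from the article, is an ELMo-style "scalar mix": one softmax-normalised learned weight per layer plus a global scale. The sketch assumes PyTorch; `ScalarMix` is a hypothetical name.

```python
import torch
import torch.nn as nn


class ScalarMix(nn.Module):
    """Learned softmax-weighted sum of per-layer hidden states (ELMo-style)."""

    def __init__(self, num_states: int):
        super().__init__()
        self.layer_logits = nn.Parameter(torch.zeros(num_states))  # one weight per layer
        self.gamma = nn.Parameter(torch.ones(1))                     # global scale

    def forward(self, hidden_states):
        stacked = torch.stack(tuple(hidden_states), dim=0)  # (L, batch, seq_len, dim)
        weights = torch.softmax(self.layer_logits, dim=0)   # (L,), sums to 1
        mixed = (weights.view(-1, 1, 1, 1) * stacked).sum(dim=0)
        return self.gamma * mixed


states = [torch.randn(1, 4, 8) for _ in range(13)]
mix = ScalarMix(num_states=13)
out = mix(states)
print(out.shape)  # torch.Size([1, 4, 8])
# Zero-initialised logits give uniform weights, so the output starts as the plain layer average
print(torch.allclose(out, torch.stack(states).mean(dim=0), atol=1e-6))  # True
```

After training, inspecting `torch.softmax(mix.layer_logits, 0)` shows how much each layer contributes, which is a cheap way to test the article's claim that different layers carry different useful information.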

In Abstract:

The Future Prediction Layer enhances GPT-2's ability to predict future tokens by using the hidden states from all layers of the transformer model. The process works as follows:

- Hidden states are extracted from all layers of GPT-2, capturing a comprehensive representation of the input sequence at various levels of abstraction.

- These hidden states are passed into a transformer encoder (the FutureTransformerEncoder), which processes them to make more informed predictions.

- By using hidden states from multiple layers, the model can learn from a wider array of patterns and contexts, resulting in more accurate and robust predictions.

This approach gives the model access to richer context when making predictions, ultimately improving its performance in generating coherent, contextually relevant, and accurate text. The main benefits are:

- Improved Contextual Awareness: By combining the strengths of all layers, the model better understands the input and its context, leading to improved text generation quality.

- Better Handling of Ambiguity: The model can resolve ambiguities by combining the insights from multiple layers, leading to more nuanced and coherent predictions.

- Flexibility: This approach is not limited to GPT-2; in principle it can be applied to other transformer models, including GPT-3, BERT, and T5, to further enhance their performance on various NLP tasks.

This incorporation of hidden states from every layer lets transformer models go beyond the limitations of conventional methods, opening the door to more advanced and robust natural language understanding and generation.

Below is the Python code that demonstrates how we implement the GPT-2 model with an additional Future Prediction Layer. The wrapper asks GPT-2 to return its hidden states (`output_hidden_states=True`) and feeds them through the Future Prediction Layer to make predictions based on this context.

```python
from transformers import GPT2Tokenizer, GPT2LMHeadModel
import torch
import torch.nn as nn

tokenizer = GPT2Tokenizer.from_pretrained("gpt2")
model = GPT2LMHeadModel.from_pretrained("gpt2")


class FutureTransformerEncoder(nn.Module):
    def __init__(self, embed_dim, num_heads, num_layers):
        super(FutureTransformerEncoder, self).__init__()
        # batch_first=True so inputs are (batch, seq_len, embed_dim), matching GPT-2's hidden states
        self.encoder_layer = nn.TransformerEncoderLayer(d_model=embed_dim, nhead=num_heads, batch_first=True)
        self.transformer_encoder = nn.TransformerEncoder(self.encoder_layer, num_layers=num_layers)
        # Projects the encoder output into the "future" feature space
        self.fc_out = nn.Linear(embed_dim, embed_dim)

    def forward(self, x):
        transformer_output = self.transformer_encoder(x)
        future_predictions = self.fc_out(transformer_output)
        return future_predictions, transformer_output


class GPT2WithFuture(nn.Module):
    def __init__(self, model, embed_dim, num_heads, num_layers):
        super(GPT2WithFuture, self).__init__()
        self.gpt2_model = model
        self.future_predictor = FutureTransformerEncoder(embed_dim, num_heads, num_layers)

    def forward(self, input_ids, labels=None):
        gpt2_outputs = self.gpt2_model(input_ids=input_ids, labels=labels, output_hidden_states=True)
        # hidden_states = (embedding output, layer 1, ..., layer 12);
        # only the final layer's hidden state (batch, seq_len, 768) is used here
        current_features = gpt2_outputs.hidden_states[-1]
        future_features, transformer_output = self.future_predictor(current_features)
        if labels is not None:
            loss = gpt2_outputs.loss  # GPT-2's own language-modeling loss
            return future_features, loss, transformer_output
        else:
            return future_features, transformer_output


embed_dim = 768  # GPT-2 small hidden size
num_heads = 12
num_layers = 12

gpt2_with_future_model = GPT2WithFuture(model, embed_dim=embed_dim, num_heads=num_heads, num_layers=num_layers)

input_text = "What's the capital of France?"
input_ids = tokenizer(input_text, return_tensors="pt").input_ids

# Pass labels so forward() returns three values (features, loss, encoder output)
future_features, loss, transformer_output = gpt2_with_future_model(input_ids, labels=input_ids)

# Text generation below uses the base GPT-2 model only.
# top_k/top_p only take effect when do_sample=True; with plain beam search they are ignored.
generated_output = model.generate(input_ids=input_ids, max_length=50, num_beams=5, no_repeat_ngram_size=2, top_k=50, top_p=0.95)
decoded_output = tokenizer.decode(generated_output[0], skip_special_tokens=True)

print("Generated Text:", decoded_output)
print("\nFuture Features Output Shape:", future_features.shape)
print("Transformer Output Shape:", transformer_output.shape)
```

1. GPT-2 Model Loading:

- The GPT-2 model is loaded using the `GPT2LMHeadModel.from_pretrained("gpt2")` method, and the corresponding tokenizer is loaded with `GPT2Tokenizer.from_pretrained("gpt2")`.

2. Future Transformer Encoder:

- This custom layer processes hidden states from GPT-2 and makes predictions about future states, with the aim of helping the model learn from multiple levels of abstraction and improving overall prediction quality. As written, the listing passes it only the final layer's hidden state (`gpt2_outputs.hidden_states[-1]`); using the other layers means combining the full `hidden_states` tuple before the encoder.

3. GPT-2 with Future Prediction:

- We integrate the FutureTransformerEncoder with the GPT-2 model in the `GPT2WithFuture` class. This class combines the existing GPT-2 architecture with the added future prediction mechanism, allowing us to capture richer context and improve predictions.
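One caveat worth checking: `nn.TransformerEncoder` applies no attention mask unless one is passed, and the listing calls it without a mask, so each position can attend to later positions of the same input. If the encoder is trained to predict upcoming tokens, that would let it see the answer. The minimal check below, assuming PyTorch and toy sizes, builds an additive causal mask by hand and shows that with the mask, changing the last position leaves earlier outputs untouched, while without it they change.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
embed_dim, num_heads, seq_len = 16, 4, 6

layer = nn.TransformerEncoderLayer(d_model=embed_dim, nhead=num_heads, batch_first=True)
encoder = nn.TransformerEncoder(layer, num_layers=2)
encoder.eval()  # disable dropout so the runs are comparable

# Additive mask: -inf above the diagonal, so position i only attends to positions <= i
causal_mask = torch.triu(torch.full((seq_len, seq_len), float("-inf")), diagonal=1)

x = torch.randn(1, seq_len, embed_dim)
x_changed = x.clone()
x_changed[0, -1] = torch.randn(embed_dim)  # alter only the last position

with torch.no_grad():
    out = encoder(x, mask=causal_mask)
    out_changed = encoder(x_changed, mask=causal_mask)
    out_unmasked = encoder(x)
    out_unmasked_changed = encoder(x_changed)

# With the mask, earlier positions cannot see the change; without it, they can
print(torch.allclose(out[:, :-1], out_changed[:, :-1], atol=1e-5))
print(torch.allclose(out_unmasked[:, :-1], out_unmasked_changed[:, :-1], atol=1e-5))
```

In the wrapper above, the same idea would mean passing such a mask as `mask=` when calling `self.transformer_encoder`.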

To assess the impact of adding the Future Prediction Layer, we need to evaluate the model's performance against a benchmark. I used perplexity as the evaluation metric. Here is what perplexity is:

- Perplexity is a common metric used to evaluate language models, particularly in natural language processing (NLP). It measures how well a language model predicts a sample. A lower perplexity indicates that the model is making better predictions, while a higher perplexity suggests poorer performance.

- In simpler terms, perplexity can be thought of as a measure of the uncertainty or surprise the model has when predicting the next word in a sequence. Here's how it works:

- Perplexity and Probabilities: Perplexity is closely tied to the likelihood of the predicted words under the model. If a model assigns high probability to the correct next word, the perplexity will be low. If it assigns low probability, the perplexity will be high.

- Mathematical Definition: Mathematically, perplexity is the exponential of the average negative log-likelihood of the predicted words. For a sequence of $N$ tokens $w_1, \dots, w_N$:

$$\mathrm{PPL} = \exp\left(-\frac{1}{N}\sum_{i=1}^{N} \log p(w_i \mid w_{<i})\right)$$

With natural logarithms (as in PyTorch's cross-entropy loss) this is $\mathrm{PPL} = e^{\text{cross-entropy loss}}$; if the cross-entropy is measured in bits (base-2 logarithms), the equivalent form is $\mathrm{PPL} = 2^{\text{cross-entropy loss}}$.

- Interpretation: A lower perplexity means the model is more confident in its predictions and better at modeling the underlying distribution of the text. A higher perplexity indicates more confusion or uncertainty in predictions, implying the model is not performing well.

In summary, a lower perplexity means better performance, as the model's predictions are more accurate and confident.
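The definition can be checked with a few made-up per-token probabilities (illustrative numbers, not benchmark data). The sketch computes perplexity once with natural logarithms and once with base-2 logarithms to show that the $e^{\text{loss}}$ and $2^{\text{loss}}$ forms agree.

```python
import math


def perplexity_from_probs(token_probs):
    """Perplexity from the probabilities a model assigned to each correct next token (nats)."""
    nll_nats = [-math.log(p) for p in token_probs]
    return math.exp(sum(nll_nats) / len(nll_nats))


def perplexity_from_bits(token_probs):
    """Same quantity, computed with base-2 logs (cross-entropy in bits)."""
    nll_bits = [-math.log2(p) for p in token_probs]
    return 2 ** (sum(nll_bits) / len(nll_bits))


probs = [0.5, 0.25, 0.125]
print(perplexity_from_probs(probs))  # ≈ 4.0
print(perplexity_from_bits(probs))   # 4.0

# A model that is uniformly unsure over 50 tokens has perplexity 50
print(perplexity_from_probs([1 / 50] * 10))  # ≈ 50.0
```

The uniform case gives a useful intuition: a perplexity of $k$ means the model is, on average, about as uncertain as if it were choosing uniformly among $k$ tokens.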

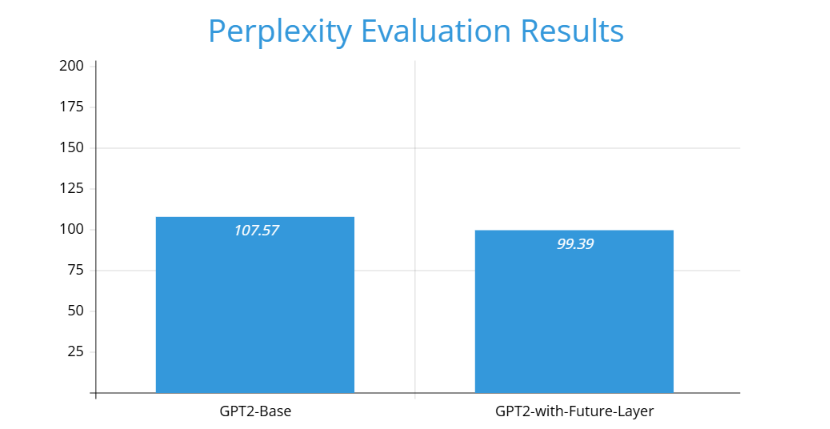

Benchmark was made on a pattern knowledge from wikitext dataset’s wikitext-2-raw-v1 take a look at part. Listed here are the outcomes:

As we are able to see on the chart, GPT2-with-Future-Layer has a greater benchmark consequence.
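The article does not show its evaluation script. One common way to compute corpus perplexity with a Hugging Face causal LM is to split the token stream into fixed-length chunks, weight each chunk's mean loss by the number of predicted tokens, and exponentiate the overall average; a sliding window with overlapping context is a common alternative. In the sketch below, `chunked_perplexity` is a hypothetical helper, and the tiny randomly initialised model only stands in for `GPT2LMHeadModel.from_pretrained("gpt2")` so the example runs offline. Loading and tokenizing the wikitext test split is left out.

```python
import math

import torch
from transformers import GPT2Config, GPT2LMHeadModel


@torch.no_grad()
def chunked_perplexity(model, token_ids: torch.Tensor, chunk_len: int = 128) -> float:
    """Perplexity of a 1-D tensor of token ids, scored in non-overlapping chunks."""
    model.eval()
    total_nll, total_predicted = 0.0, 0
    for start in range(0, token_ids.size(0), chunk_len):
        chunk = token_ids[start:start + chunk_len].unsqueeze(0)  # (1, <= chunk_len)
        if chunk.size(1) < 2:
            continue  # a single token has no next-token target
        # With labels=input_ids the model shifts targets internally and returns the mean loss
        loss = model(input_ids=chunk, labels=chunk).loss
        n_predicted = chunk.size(1) - 1
        total_nll += loss.item() * n_predicted
        total_predicted += n_predicted
    return math.exp(total_nll / total_predicted)


# Offline stand-in for the real checkpoint
config = GPT2Config(vocab_size=50, n_positions=128, n_embd=32, n_layer=2, n_head=2)
tiny_model = GPT2LMHeadModel(config)
tokens = torch.randint(0, config.vocab_size, (300,))
print(chunked_perplexity(tiny_model, tokens, chunk_len=64))
```

Because `GPT2WithFuture.forward` returns a tuple rather than a model output object, scoring it this way would require adapting the helper to read the loss from that tuple.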

By using hidden states from all layers of GPT-2, we can build a stronger language model. The Future Prediction Layer makes the model more robust by considering not just the last layer's context but all available context from across the layers. This results in better-informed predictions, leading to more coherent and accurate text generation.

This approach demonstrates that even existing models like GPT-2 can be enhanced by exploiting all available layers of information. As transformer models continue to evolve, such modifications can open the door to even more advanced language understanding and generation techniques.

GitHub link: https://github.com/suayptalha/GPT2-with-Future-Layer

-Şuayp Talha Kocabay