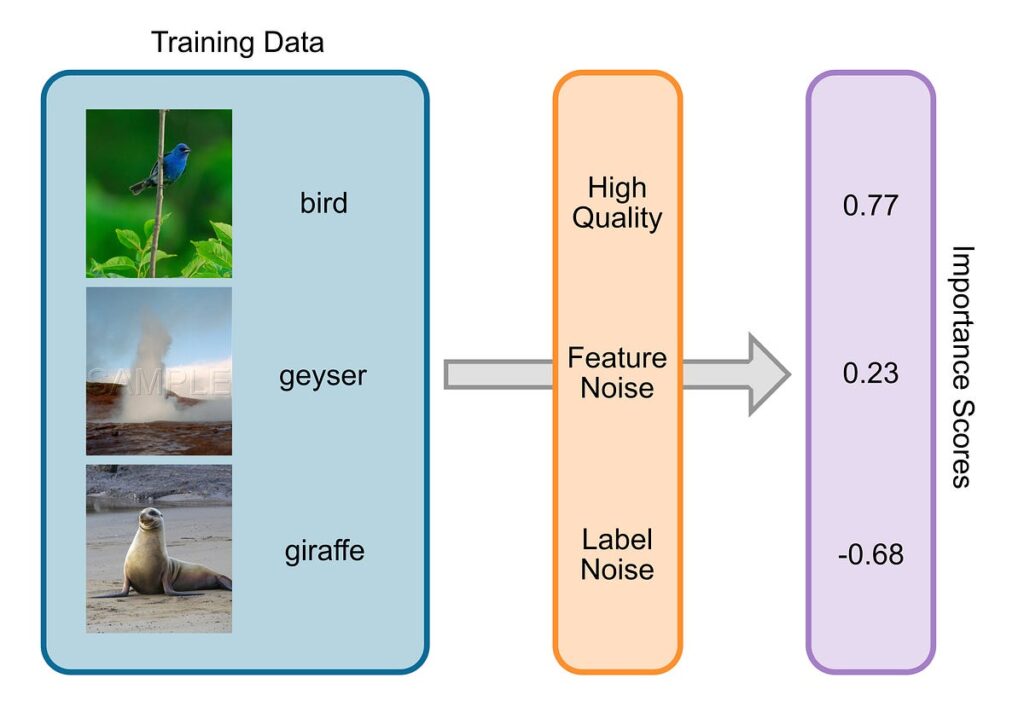

ChatGPT and similar LLMs were trained on enormous amounts of data. OpenAI and Co. scraped the internet, collecting books, articles, and social media posts to train their models. It’s easy to imagine that some of the texts (like scientific or news articles) were more important than others (such as random Tweets). This is true for almost any dataset used to train machine learning models: they almost always contain noisy samples, wrong labels, or misleading information.

The process that tries to understand how important different training samples are for the training of a machine learning model is called Data Valuation. Data Valuation is also known as Data Attribution, Data Influence Analysis, and Representer Points. There are many different approaches and applications, some of which I’ll discuss in this article.

Data Markets

AI will become an important economic factor in the coming years, but AI models are hungry for data. High-quality data is indispensable for training them, which makes it a valuable commodity. This leads to the concept of data markets, where buyers and sellers can trade data for money. Data Valuation is the basis for pricing the data, but there’s a catch: sellers want to keep their data private until someone buys it, while buyers find it hard to judge how important a seller’s data will be without having seen it. To dive deeper into this topic, consider having a look at the papers “A Marketplace for Data: An Algorithmic Solution” and “A Theory of Pricing Private Data”.

Data Poisoning

Data poisoning poses a threat to AI models: bad actors could try to corrupt training data in a way that harms the machine learning training process. This can be done by subtly altering training samples in a way that is invisible to humans but very harmful to AI models. Data Valuation methods can counter this because they naturally assign a very low importance score to harmful samples (no matter whether they occur naturally or by malice).

Explainability

In recent years, explainable AI has gained a lot of traction. The EU’s High-Level Expert Group on AI even calls for the explainability of AI as foundational for creating trustworthy AI. Understanding how important different training samples are for an AI system, or for a specific prediction of an AI system, is important for explaining its behaviour.

Active Learning

If we can better understand how important the individual training samples of a machine learning model are, then we can use this knowledge to acquire new training samples that are more informative for our model. Say you are training a new large language model and find out that articles from the Portuguese Wikipedia are super important for your LLM. Then it’s a natural next step to try to acquire more of those articles for your model. In a similar fashion, we used Data Valuation in our paper on “LossVal” to acquire new vehicle crash tests to improve the passive safety systems of cars.

Now we know how useful Data Valuation is for different applications. Next, we will look at how Data Valuation works. As described in our paper, Data Valuation methods can be roughly divided into three main branches, plus a catch-all category for everything else:

- Retraining-Based Approaches

- Gradient-Based Approaches

- Data-Based Approaches

- “Others”

Retraining-Based Approaches

The common scheme of retraining-based approaches is that they train a machine learning model multiple times to gain insight into the training dynamics of the model and, ultimately, into the importance of each training sample. The most basic approach (which was introduced in 1977 by Dennis Cook) simply retrains the machine learning model without a data point to determine the importance of that point. If removing the data point decreases the performance of the model on a validation dataset, then we know that the data point was good (or informative) for the model. Conversely, if the model’s performance on the validation set increases after the removal, we know that the data point was harmful for the model. Repeat the retraining for each data point, and you have importance scores for your complete dataset. This kind of score is called the Leave-One-Out error (LOO). Completely retraining your machine learning model for every single data point is very inefficient, but viable for simple models and small datasets.
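The leave-one-out procedure can be sketched in a few lines. This is a minimal illustration, assuming a least-squares linear model as a stand-in for "the machine learning model" and mean squared error on a validation set as the performance measure. The function names and the synthetic data are made up for this example. The score of a sample is the validation error without it minus the validation error with all samples, so helpful samples get positive scores and harmful samples get negative ones.

```python
import numpy as np

def fit_least_squares(X, y):
    """Fit a linear model; a stand-in for any training routine."""
    theta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return theta

def validation_mse(theta, X_val, y_val):
    return float(np.mean((X_val @ theta - y_val) ** 2))

def leave_one_out_scores(X, y, X_val, y_val):
    """score[i] = validation error without sample i - validation error with all samples."""
    base = validation_mse(fit_least_squares(X, y), X_val, y_val)
    scores = np.empty(len(X))
    for i in range(len(X)):
        keep = np.arange(len(X)) != i          # drop sample i, keep the rest
        theta = fit_least_squares(X[keep], y[keep])
        scores[i] = validation_mse(theta, X_val, y_val) - base
    return scores

# Synthetic data (hypothetical): 30 noisy training samples, clean validation set.
rng = np.random.default_rng(0)
true_theta = np.array([2.0, -1.0])
X = rng.normal(size=(30, 2))
X[0] = [1.0, 1.0]                              # fix the features of sample 0
y = X @ true_theta + 0.1 * rng.normal(size=30)
y[0] += 10.0                                   # corrupt the label of sample 0 on purpose
X_val = rng.normal(size=(200, 2))
y_val = X_val @ true_theta

loo_scores = leave_one_out_scores(X, y, X_val, y_val)
print("lowest-scoring sample:", int(np.argmin(loo_scores)))
```

The loop trains one model per sample, which is why this only scales to small datasets and cheap models.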

Data Shapley extends this idea using the Shapley value. The idea was published concurrently by both Ghorbani & Zou and by Jia et al. in 2019. The Shapley value is a construct from game theory that tells you how much each player of a coalition contributed to the payout. A closer-to-life example is the following: imagine you share a taxi with your friends Bob and Alice on the way home from a party. Alice lives very close to your starting point, but Bob lives much farther away, and you’re somewhere in between. Of course, it wouldn’t be fair if each of you paid an equal share of the final price, since you and Bob ride a longer distance than Alice. The Shapley value solves this by looking at all the sub-coalitions: What if only you and Alice shared the taxi? What if Bob rode alone? And so on. This way, the Shapley value can help all three of you pay a fair share of the final taxi price. The same can be applied to data: retrain a machine learning model on different subsets of the training data to fairly assign an “importance” to each of the training samples. Unfortunately, this is extremely inefficient: calculating the exact Shapley values requires O(2ⁿ) retrainings of your machine learning model. However, Data Shapley can be approximated much more efficiently using Monte Carlo methods.
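To make the taxi example concrete, here is a small sketch that computes Shapley values by averaging each player's marginal contribution over orderings of the players. Passing all orderings gives the exact value; passing a random sample of orderings gives a Monte Carlo estimate. The fares are hypothetical, and the cost function assumes that all three live along the same route, so a shared ride costs as much as the ride of the passenger who lives farthest away. For Data Shapley, the players would be training samples and the value function would retrain the model on a subset and return its validation performance.

```python
from itertools import permutations
import random

def shapley_from_orderings(players, value, orderings):
    """Average each player's marginal contribution over the given orderings."""
    totals = {p: 0.0 for p in players}
    count = 0
    for order in orderings:
        coalition = set()
        previous = value(coalition)
        for p in order:
            coalition.add(p)
            current = value(coalition)
            totals[p] += current - previous    # what p adds when joining
            previous = current
        count += 1
    return {p: total / count for p, total in totals.items()}

# Hypothetical fares: what the meter would show if each person rode home alone.
FARE = {"Alice": 6.0, "You": 15.0, "Bob": 30.0}

def taxi_cost(coalition):
    # Assumption: same route for everyone, so the farthest passenger sets the price.
    return max((FARE[p] for p in coalition), default=0.0)

players = list(FARE)

# Exact Shapley values: all 3! = 6 orderings.
exact = shapley_from_orderings(players, taxi_cost, permutations(players))
print(exact)  # Alice 2.0, You 6.5, Bob 21.5

# Monte Carlo estimate: 2000 randomly sampled orderings.
rng = random.Random(0)

def random_orderings(n_samples):
    for _ in range(n_samples):
        yield rng.sample(players, k=len(players))

estimate = shapley_from_orderings(players, taxi_cost, random_orderings(2000))
print(estimate)
```

The shares add up to the full fare of 30. With three players, enumerating all orderings is trivial, but the number of orderings grows factorially with the number of players, which is why sampling is the practical route for datasets.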

Many alternative methods have been proposed, for example, Data-OOB and Average Marginal Effect (AME). Retraining-based approaches struggle with large training sets because of the repeated retraining. Importance scores calculated using retraining can also be imprecise because of the effect of randomness in neural network training.

Gradient-Based Approaches

Gradient-based approaches only work for machine learning algorithms that are trained with gradients, such as artificial neural networks or linear and logistic regression.

Influence functions are a staple in statistics and were proposed by Dennis Cook, who was already mentioned above. Influence functions use the Hessian matrix (or an approximation of it) to estimate how the model’s performance would change if a certain training sample were left out. With influence functions, there is no need to retrain the model. This works for simple regression models, but also for neural networks. Calculating influence functions exactly is quite expensive, but approximations have been proposed.
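For a model with a closed-form Hessian, the idea fits in a few lines. The sketch below assumes ridge regression with the objective (1/n) Σ ½(xᵢᵀθ − yᵢ)² + (λ/2)‖θ‖², and estimates the change in validation loss caused by removing sample i as ∇L_val(θ)ᵀ H⁻¹ ∇ℓᵢ(θ) / n. The function names, the regularization strength, and the synthetic data are chosen for this example only. To check the estimate, the code also does the actual leave-one-out retraining and compares both.

```python
import numpy as np

LAM = 0.1  # ridge strength (hypothetical choice for this example)

def ridge_fit(X, y, n_total, lam=LAM):
    """Minimize (1/n_total) * sum 0.5*(x.theta - y)^2 + lam/2 * |theta|^2."""
    H = X.T @ X / n_total + lam * np.eye(X.shape[1])  # Hessian of the objective
    theta = np.linalg.solve(H, X.T @ y / n_total)
    return theta, H

def val_loss(theta, X_val, y_val):
    return 0.5 * float(np.mean((X_val @ theta - y_val) ** 2))

def influence_scores(X, y, X_val, y_val):
    """Estimated change in validation loss if a training sample were removed."""
    n = len(X)
    theta, H = ridge_fit(X, y, n)
    per_sample_grads = (X @ theta - y)[:, None] * X            # shape (n, d)
    val_grad = X_val.T @ (X_val @ theta - y_val) / len(X_val)  # shape (d,)
    # Removing sample i shifts the parameters by roughly H^{-1} g_i / n.
    return per_sample_grads @ np.linalg.solve(H, val_grad) / n

# Synthetic data (hypothetical).
rng = np.random.default_rng(1)
true_theta = np.array([1.0, -2.0, 0.5])
X = rng.normal(size=(200, 3))
y = X @ true_theta + 0.5 * rng.normal(size=200)
X_val = rng.normal(size=(100, 3))
y_val = X_val @ true_theta + 0.5 * rng.normal(size=100)

estimated = influence_scores(X, y, X_val, y_val)

# Ground truth by actually retraining without each sample (only feasible here
# because the model is tiny).
theta_full, _ = ridge_fit(X, y, len(X))
actual = np.empty(len(X))
for i in range(len(X)):
    keep = np.arange(len(X)) != i
    theta_i, _ = ridge_fit(X[keep], y[keep], len(X))
    actual[i] = val_loss(theta_i, X_val, y_val) - val_loss(theta_full, X_val, y_val)

print("correlation with retraining:", np.corrcoef(estimated, actual)[0, 1])
```

A positive score means that removing the sample is expected to raise the validation loss, so the sample was helpful. For neural networks the Hessian cannot be formed or inverted this directly, which is where the approximations come in.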

Other approaches, like TracIn and TRAK, track the gradient updates during the training of the machine learning model. They use this information to understand how important a data point is for the training, without needing to retrain the model. Gradient Similarity is another method that tracks the gradients, but uses them to compare the similarity of training and validation gradients.
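The gradient-tracking idea can be illustrated with a heavily simplified sketch. It assumes a linear regression model trained by full-batch gradient descent and treats every step as a checkpoint: at each step, a training sample is credited with the learning rate times the dot product between its own loss gradient and the validation gradient. This follows the spirit of gradient-tracking methods but is not the reference implementation of any of the methods named above. All names and hyperparameters are made up for this example.

```python
import numpy as np

def gradient_tracking_scores(X, y, X_val, y_val, lr=0.02, steps=300):
    """Accumulate lr * <grad of sample i, validation gradient> over training."""
    n, d = X.shape
    theta = np.zeros(d)
    scores = np.zeros(n)
    for _ in range(steps):
        per_sample_grads = (X @ theta - y)[:, None] * X             # shape (n, d)
        val_grad = X_val.T @ (X_val @ theta - y_val) / len(X_val)   # shape (d,)
        scores += lr * per_sample_grads @ val_grad
        theta -= lr * per_sample_grads.mean(axis=0)                 # gradient descent step
    return scores, theta

def val_loss(theta, X_val, y_val):
    return 0.5 * float(np.mean((X_val @ theta - y_val) ** 2))

# Synthetic data (hypothetical).
rng = np.random.default_rng(2)
true_theta = np.array([1.0, -2.0, 0.5])
X = rng.normal(size=(100, 3))
y = X @ true_theta + 0.1 * rng.normal(size=100)
X_val = rng.normal(size=(50, 3))
y_val = X_val @ true_theta + 0.1 * rng.normal(size=50)

tracking_scores, theta_final = gradient_tracking_scores(X, y, X_val, y_val)

# Sanity check: to first order, the average score equals the total drop in
# validation loss achieved by training.
loss_drop = val_loss(np.zeros(3), X_val, y_val) - val_loss(theta_final, X_val, y_val)
print("mean score:", tracking_scores.mean(), "validation loss drop:", loss_drop)
```

Samples with high scores pushed the model in a direction that lowered the validation loss, while samples with low or negative scores worked against it. Only one training run is needed, at the cost of computing per-sample gradients along the way.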

For my master’s thesis, I worked on a new gradient-based Data Valuation method, called LossVal, that exploits gradient information in the loss function. We introduced a self-weighting mechanism into standard loss functions like mean squared error and cross-entropy loss. This makes it possible to assign importance scores to training samples during the first training run, making gradient tracking, Hessian matrix calculation, and retraining unnecessary, while still achieving state-of-the-art results.

Data-Based Approaches

All methods we touched on above are centered around a machine learning model. This has the advantage that they tell you how important training samples are for your specific use case and your specific machine learning model. However, some applications (like Data Markets) can profit from “model-agnostic” importance scores that are not based on a specific machine learning model, but instead build only upon the data.

This can be done in different ways. For example, one can analyze the distance between the training set and a clean validation set or use a volume measure to quantify the diversity of the data.
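Both ideas can be illustrated without training any model. The sketch below is a simplification under stated assumptions: the volume is taken as the square root of the determinant of XᵀX, and the "distance" is a plain nearest-neighbour distance from each training sample to the validation set, which is a crude stand-in for the more sophisticated set-level distances used in the literature. Function names and data are hypothetical.

```python
import numpy as np

def volume(X):
    """Diversity of a dataset, measured as sqrt(det(X^T X))."""
    gram = X.T @ X
    return float(np.sqrt(max(np.linalg.det(gram), 0.0)))

def distance_to_validation(X_train, X_val):
    """Euclidean distance from each training sample to its nearest validation sample."""
    diffs = X_train[:, None, :] - X_val[None, :, :]   # shape (n_train, n_val, d)
    return np.linalg.norm(diffs, axis=2).min(axis=1)

# Two tiny datasets: one with diverse rows, one with nearly identical rows.
diverse = np.array([[1.0, 0.0], [0.0, 1.0]])
redundant = np.array([[1.0, 0.0], [1.0, 0.01]])
print(volume(diverse), volume(redundant))

# A training sample that matches the validation data versus one far away from it.
X_val = np.array([[0.0, 0.0], [1.0, 1.0]])
X_train = np.array([[0.0, 0.0], [5.0, 5.0]])
distances = distance_to_validation(X_train, X_val)
print(distances)
```

The diverse dataset has a volume of 1, while the redundant one has a volume of about 0.01. The second training sample lies far from every validation sample, so a distance-based score would rate it as less relevant to the validation distribution.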

“Others”

Under this category, I subsume all methods that do not fit into the other categories. For example, using K-nearest neighbors (KNN) allows a much more efficient computation of Shapley values without retraining. Sub-networks that result from zero-masking can be analyzed to understand the importance of different data points. DAVINZ analyzes the change in performance when the training data changes by looking at the generalization bound. Simfluence runs simulated training runs and, based on those, estimates how important each training sample is. Reinforcement learning and evolutionary algorithms can also be used for Data Valuation.
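The KNN shortcut is worth a closer look, because it turns an exponential computation into a sort followed by a single pass. The sketch below follows the recursion published for the unweighted KNN classifier with a single test point: sort the training samples by distance to the test point, give the farthest sample the value 1[label matches] / N, and then walk towards the nearest sample. The function name and the toy data are made up for this example.

```python
import numpy as np

def knn_shapley(X_train, y_train, x_test, y_test, k):
    """Exact Shapley values of training samples for a k-NN classifier and one test point."""
    n = len(X_train)
    order = np.argsort(np.linalg.norm(X_train - x_test, axis=1))  # nearest first
    match = (y_train[order] == y_test).astype(float)

    sorted_values = np.zeros(n)
    sorted_values[n - 1] = match[n - 1] / n        # farthest sample
    for i in range(n - 2, -1, -1):                 # walk from far to near
        rank = i + 1                               # 1-based position in the sorted list
        sorted_values[i] = (
            sorted_values[i + 1]
            + (match[i] - match[i + 1]) / k * min(k, rank) / rank
        )

    values = np.zeros(n)
    values[order] = sorted_values                  # back to the original sample order
    return values

# Toy data (hypothetical): four 1-D training samples with alternating labels.
X_train = np.array([[0.0], [1.0], [2.0], [3.0]])
y_train = np.array([1, 0, 1, 0])
knn_values = knn_shapley(X_train, y_train, x_test=np.array([0.2]), y_test=1, k=2)
print(knn_values)
```

The values sum to 0.5, which is the utility of the full training set here: one of the two nearest neighbours carries the correct label. The nearby sample with the wrong label receives a negative value.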

Currently, there are research trends in several directions. Some research is being conducted to bring other game-theoretic concepts, like the Banzhaf value or the Winter value, to Data Valuation. Other approaches try to create joint importance scores that include other aspects of the learning process in the valuation, such as the learning algorithm. Further approaches work on private Data Valuation (where the data does not have to be disclosed) and personalized Data Valuation (where metadata is used to enrich the data).

Data Valuation is a growing topic, and many other Data Valuation methods were not mentioned in this article. Data Valuation is a valuable tool for better understanding and interpreting machine learning models. If you want to learn more about Data Valuation, I can recommend the following articles: