Hey fellow machine learners,

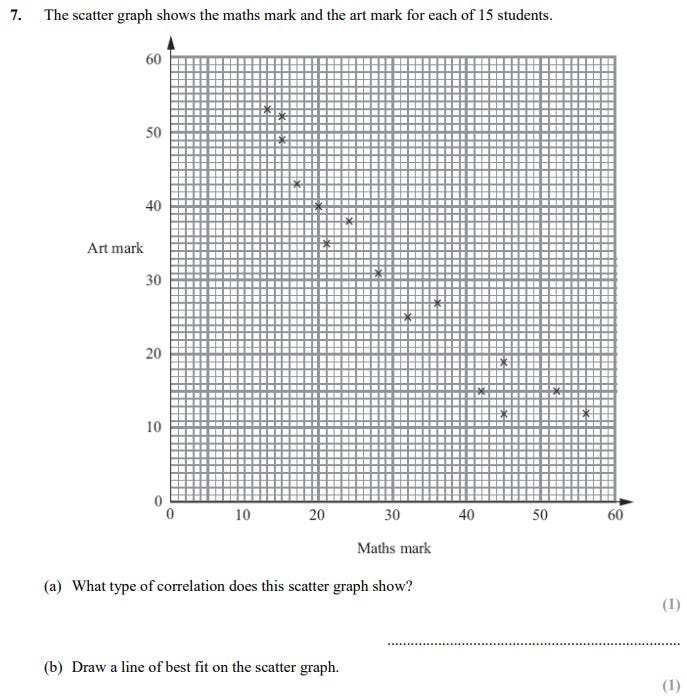

I’d like you to cast your mind back to your secondary school science lessons. At some point, you were most likely given a scatter plot and asked to draw a line of best fit.

The line of best fit is useful because it lets us infer a y-coordinate value given an x-coordinate value (or vice versa). This means you don’t need both coordinates of a new data point to estimate where it should be plotted on the graph.

Hmm, wait a sec… predicting a y value from an x value that was not necessarily present in the original dataset… don’t we have a flavour of machine learning here?

The typical machine learning model workflow has two steps. First, train a model on existing data. Then use the trained model to make predictions on new inputs.

In this example, the training phase is the computer drawing a line of fit (which we hope really is the ‘best’ fit). The prediction phase is computing y-coordinates for the unseen x-coordinates it is given.

So, if the ‘line of best fit’ exercise is something you did at school, then congratulations! Perhaps without realising it, you have already used some ideas from machine learning. The technical term for this is linear regression.

In the secondary school exercises, we humans carry out this ‘training’ step visually. I imagine you just looked at the points, got your ruler out and drew the line, bagging yourself a few easy marks in your GCSE chemistry/physics/biology/maths exam.

But how would a computer go about such a task? Computers don’t see the data points on the graph like we do; all they see are the coordinates of each point.

Is there a way to define the ‘line of best fit’ problem mathematically?

In machine learning, the problem is usually set up mathematically. For linear regression, this lets us rigorously define what makes a line of ‘good’ fit, a line of ‘bad’ fit and, most importantly, a line of ‘best’ fit.

We can model a generic straight line as y = ax + b, where ‘a’ is the gradient of the line and ‘b’ is its y-intercept. In full generality, our dataset of n points is of the form

$$\{(x_1, y_1), (x_2, y_2), \dots, (x_n, y_n)\}.$$

We aim to optimise the values of a and b so that our line fits the data as well as possible. To do this, we will try to quantify how bad our line is at its job. We will call this value the loss of our line. The optimal parameters (a, b) are then those that minimise the loss. Now for the formula for the loss:

$$L(a, b) = \sum_{i=1}^{n} \big(y_i - (a x_i + b)\big)^2$$

Let’s unpack where this formula came from:

- For each datapoint (x_i, y_i) in the dataset, take the difference between the actual y_i value and the value the regression model predicts. The model’s prediction is ax_i + b. This difference is called the residual.

- We compute the square of the residual. (We’ll get back to this in a sec.)

- We then sum the error incurred at each datapoint, as indicated by the summation from i = 1 to n. This gives us the total error.
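The three steps above translate almost line for line into code. Here is a minimal plain-Python sketch of the loss. The function name `squared_loss` is my own choice, not something from a library:

```python
def squared_loss(a, b, xs, ys):
    """Sum of squared residuals L(a, b) for the line y = a*x + b."""
    total = 0.0
    for x_i, y_i in zip(xs, ys):
        prediction = a * x_i + b     # the model's prediction for this point
        residual = y_i - prediction  # actual value minus predicted value
        total += residual ** 2       # square it, then add to the running total
    return total


# The points (0, 1), (1, 3), (2, 5) lie exactly on y = 2x + 1, so the loss is zero there
print(squared_loss(2.0, 1.0, [0, 1, 2], [1, 3, 5]))  # 0.0
# The flat line y = 0 misses every point: 1^2 + 3^2 + 5^2
print(squared_loss(0.0, 0.0, [0, 1, 2], [1, 3, 5]))  # 35.0
```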

So why square the residuals? Well, if we just summed the raw residuals (y_i-(ax_i+b)), minimising the loss would not guarantee that the proposed parameters give us the ‘best’ line.

Consider the example where the dataset consists of just two points: {(0,1), (1,0)}.

Without any calculations, it’s clear that the line of best fit is simply y = 1 - x.

Using the non-squared residuals, the loss for this line is (1 - 1) + (0 - 0) = 0.

But what if we use the line y = x + 1? The loss for this line is (1 - 1) + (0 - 2) = -2.

And -2 < 0, so according to the algorithm, the second line fits the data better because it incurs a smaller loss.

This setup is illustrated below:

Hmm… what went wrong?

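Well, since the residuals weren’t squared, a negative residual counts as smaller than any positive one. Errors in opposite directions can then cancel out, and a big miss can even lower the loss. Worse still, we could keep shifting a line upwards to make the loss as negative as we like, so no ‘best’ line would exist at all.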

Square numbers can’t be negative, which solves the problem shown in the image. Indeed, with squaring we get losses of 0 and 4 for the blue and green lines respectively, and 0 < 4 ✅
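To make the two-point example concrete, here is a short plain-Python sketch that computes both versions of the loss for the two lines. The helper names `raw_loss` and `sq_loss` are illustrative. The last line shows why the un-squared loss is broken: shifting y = x + 1 up to y = x + 100 makes it look even ‘better’.

```python
# The two-point dataset from the example above
xs = [0, 1]
ys = [1, 0]


def raw_loss(a, b):
    """Sum of the un-squared residuals y_i - (a*x_i + b)."""
    return sum(y - (a * x + b) for x, y in zip(xs, ys))


def sq_loss(a, b):
    """Sum of the squared residuals."""
    return sum((y - (a * x + b)) ** 2 for x, y in zip(xs, ys))


# y = 1 - x is (a, b) = (-1, 1); y = x + 1 is (a, b) = (1, 1)
print(raw_loss(-1, 1), raw_loss(1, 1))  # 0 -2
print(sq_loss(-1, 1), sq_loss(1, 1))    # 0 4

# Without squaring, pushing the line further up keeps "improving" the loss
print(raw_loss(1, 100))  # -200
```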

With the usual squared-residuals formula, the lowest value L(a, b) can take is zero, which happens only when every term in the sum is zero. The algorithm could only reach L(a, b) = 0 if our data points formed an exact straight line, which is almost never the case with real-world data.

Nice! We now have a formula that tells us how bad our line is. Next step: how do we make our line ‘less bad’?

The loss L depends on the parameters a and b. So the best fit line comes from the gradient-intercept pair (a, b) that minimises L.

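I claim that the solution is given by:

$$a = \frac{\overline{xy} - \bar{x}\,\bar{y}}{\overline{x^2} - \bar{x}^2}, \qquad b = \bar{y} - a\,\bar{x}.$$

In the above, the bar notation denotes the empirical average, i.e.

$$\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i, \qquad \bar{y} = \frac{1}{n}\sum_{i=1}^{n} y_i, \qquad \overline{xy} = \frac{1}{n}\sum_{i=1}^{n} x_i y_i, \qquad \overline{x^2} = \frac{1}{n}\sum_{i=1}^{n} x_i^2.$$

Pay attention to where the bar goes. In particular, notice the difference between these two expressions:

$$\overline{x^2} = \frac{1}{n}\sum_{i=1}^{n} x_i^2 \qquad \text{versus} \qquad \bar{x}^2 = \left(\frac{1}{n}\sum_{i=1}^{n} x_i\right)^2.$$

The first averages the squares; the second squares the average.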
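A quick numerical check shows why bar placement matters: the mean of the squares is not the same as the square of the mean. The gap between them is the average squared distance of the x-values from their mean. It is never negative, and it is zero only when all the x-values are equal. The three sample values below are made up for illustration:

```python
xs = [1.0, 2.0, 3.0]
n = len(xs)

mean_of_squares = sum(x ** 2 for x in xs) / n  # square first, then average
square_of_mean = (sum(xs) / n) ** 2            # average first, then square

print(mean_of_squares)  # 4.666666666666667
print(square_of_mean)   # 4.0
```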

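We follow the derivation given in The Book of Statistical Proofs, modified slightly here to account for some small differences. The chain rule yields

$$\frac{\partial L}{\partial a} = -2\sum_{i=1}^{n} x_i\big(y_i - a x_i - b\big)$$

and

$$\frac{\partial L}{\partial b} = -2\sum_{i=1}^{n} \big(y_i - a x_i - b\big).$$

Setting the second partial derivative equal to zero and rearranging, we get

$$\sum_{i=1}^{n} y_i = a\sum_{i=1}^{n} x_i + n b,$$

and dividing through by n leaves us with the optimal value for b of

$$b = \bar{y} - a\,\bar{x}.$$

We repeat the process for a. Setting the first partial derivative equal to zero and dividing through by n gives

$$\overline{xy} = a\,\overline{x^2} + b\,\bar{x}.$$

Substituting in our explicit value for b, we get

$$\overline{xy} = a\,\overline{x^2} + \big(\bar{y} - a\,\bar{x}\big)\bar{x},$$

which we can rearrange to get the optimal value for a of

$$a = \frac{\overline{xy} - \bar{x}\,\bar{y}}{\overline{x^2} - \bar{x}^2}.$$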

Derivation complete ✅
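Here is a minimal NumPy sketch of the closed-form solution in the bar-average form derived above. The function name `fit_line` is my own. As a sanity check, it is compared against `np.polyfit` with degree 1, which also fits a least-squares straight line. The noisy data is randomly generated purely for the test.

```python
import numpy as np


def fit_line(x, y):
    """Closed-form least-squares gradient a and intercept b for y = a*x + b."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_bar, y_bar = x.mean(), y.mean()
    xy_bar = (x * y).mean()   # average of the products
    x2_bar = (x ** 2).mean()  # average of the squares
    a = (xy_bar - x_bar * y_bar) / (x2_bar - x_bar ** 2)
    b = y_bar - a * x_bar
    return float(a), float(b)


# The two-point example recovers y = 1 - x
print(fit_line([0, 1], [1, 0]))  # (-1.0, 1.0)

# Compare with NumPy's own least-squares polynomial fit on noisy synthetic data
rng = np.random.default_rng(0)
x = rng.uniform(0, 10, size=50)
y = 3.0 * x - 2.0 + rng.normal(scale=1.0, size=50)
a, b = fit_line(x, y)
a_np, b_np = np.polyfit(x, y, deg=1)  # coefficients come highest power first
print(np.allclose([a, b], [a_np, b_np]))  # True
```

One design note: this form is compact, but if the x-values are large and tightly clustered, subtracting two nearly equal numbers in the denominator can lose floating-point precision. Centring x and y on their means first is a common, more numerically stable alternative.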

Now you know how linear regression models obtain their optimal gradient and y-intercept values. Great stuff!

For what follows, we will use the diabetes dataset provided in the scikit-learn docs.

Bear in mind the following from the documentation:

“Ten baseline variables, age, sex, body mass index, average blood pressure, and six blood serum measurements were obtained for each of n = 442 diabetes patients, as well as the response of interest, a quantitative measure of disease progression one year after baseline.

Note: Each of these 10 feature variables have been mean centered and scaled by the standard deviation times the square root of n_samples (i.e. the sum of squares of each column totals 1).”

For this article, we will stick with the standardised values. If you want to work with the raw values instead, you can scrape them yourself from the source.
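If you’d like to check that note for yourself, here is a short sketch using scikit-learn’s `load_diabetes` loader, which returns the standardised features by default:

```python
import numpy as np
from sklearn.datasets import load_diabetes

# Standardised features (the loader's default) and the disease-progression target
X, y = load_diabetes(return_X_y=True)
print(X.shape)  # (442, 10)

# Each feature column is mean-centred...
print(np.allclose(X.mean(axis=0), 0.0))        # True
# ...and scaled so that its sum of squares is 1
print(np.allclose((X ** 2).sum(axis=0), 1.0))  # True
```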

Let’s see how the ‘standardised’ BMI correlates with disease progression:

Using scikit-learn’s built-in linear_model module, we can plot the line of best fit and compute the sum of the squared residuals.

The proposed line of best fit certainly looks reasonable. But yikes, that’s a large error value.

It makes sense, though, given the scale of the y-axis and the fact that our points, while showing some positive correlation, are by no means collinear.

As per the title, here are the two lines of code I used for the model:

Of course, there’s a bit of data pre-processing involved beforehand.

Here is a link to the full Python notebook.
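The notebook’s exact code isn’t reproduced here. A minimal sketch of this kind of fit might look like the following. It assumes BMI is column index 2 of the feature matrix, matching the order listed in the dataset description (age, sex, BMI, blood pressure, then the six serum measurements), and it skips any extra pre-processing. It also cross-checks scikit-learn’s answer against the closed-form formulas from the derivation:

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.linear_model import LinearRegression

X, y = load_diabetes(return_X_y=True)

# BMI column, kept 2-D because scikit-learn expects a (n_samples, n_features) array
bmi = X[:, [2]]

model = LinearRegression().fit(bmi, y)
a, b = model.coef_[0], model.intercept_

# Sum of the squared residuals, i.e. the loss L(a, b) at the fitted parameters
residuals = y - model.predict(bmi)
loss = float(np.sum(residuals ** 2))
print(f"gradient a = {a:.2f}, intercept b = {b:.2f}, L(a, b) = {loss:.0f}")

# The closed-form solution should agree with scikit-learn's fit
x = bmi.ravel()
a_cf = (np.mean(x * y) - x.mean() * y.mean()) / (np.mean(x ** 2) - x.mean() ** 2)
b_cf = y.mean() - a_cf * x.mean()
print(np.allclose([a, b], [a_cf, b_cf]))  # True
```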

Play around with the code yourself. For example, can you make regression plots for the other features in the dataset?

But wait, we didn’t actually need the derivation in the code, right?

True, but that’s because we used the scikit-learn package, which handles the algorithm for us. If you wanted to code the model from scratch, you would need to know the closed-form solution, if not its derivation.

Otherwise, you’ll just be blindly trusting software packages that others have written. And where’s the fun in that?

Linear regression is a simple ML technique. But we will soon cover models with different formulations and, in particular, different use cases. You won’t be able to critically evaluate model choices unless you have at least a rough understanding of what’s going on under the hood.

And if my maths background has taught me anything, it’s this: you should always be able to justify why you’re doing what you’re doing, at least in part. This, I believe, is what sets a ‘great’ data scientist apart from a ‘good’ one.

Or rather, it’s what distinguishes a data scientist of ‘good’ fit from a data scientist of ‘best’ fit 😉

Perhaps the full mathematical derivation isn’t essential.

But the underlying ideas will take you a long way.

Plus, I find it fun to explore the formulas that underpin all this ‘machine learning’ business.

And this is my attempt at putting the ‘Unpacked’ in ‘Machine Learning Algorithms Unpacked’.

Let’s summarise what we’ve learned this week:

- The line of best fit exercise is, believe it or not, a bona fide example of machine learning in action. We were all budding ML engineers back in our GCSE science days; who would’ve guessed?

- Training a linear regression model means minimising the sum of the squared residuals with respect to the gradient and intercept of the line. The line that minimises this loss is the line of best fit. Keep the idea of optimisation in mind, as we will come across it again.

- We square the residuals before summing them so that errors in opposite directions can’t cancel each other out.

- You don’t need to memorise any formulas as such, but you should now be able to explain to another machine learner how a linear regression model obtains its optimal parameters.

I really hope you enjoyed reading!

There’s a bit more going on in this article than in the previous ones, so do leave a comment if you think I’ve made any mistakes, caused confusion, etc.

Until next Sunday,

Ameer