Having worked on 25+ full-stack Machine Learning projects, I can say this has to be one of the coolest ones so far! In this blog, we'll discuss how we can develop a Sign Language Translator app using Streamlit and the YOLOv5 base model in less than an hour. So let's get started. 🙂

1. Data Collection

To train the YOLOv5 model for sign language detection, the first step is to get a dataset of hand signs and gestures. You can refer to this YouTube video to learn some basic hand gestures in American Sign Language. I used my webcam to capture real-time images of hand gestures, varying the lighting, background, and angle to ensure a diverse dataset, using the following Python script:

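```python
import os
import time
import uuid

import cv2

IMAGE_PATH = "CollectedImages"
labels = ['Hello', 'Yes', 'No', 'Thanks', 'IloveYou', 'Please', 'Help', 'What', 'Repeat', 'Eat', 'Fine', 'Sorry']
number_of_images = 20

for label in labels:
    img_path = os.path.join(IMAGE_PATH, label)
    os.makedirs(img_path, exist_ok=True)  # one folder per sign; safe to re-run
    cap = cv2.VideoCapture(0)  # open the default webcam
    print('Collecting images for {}'.format(label))
    time.sleep(5)  # time to get the gesture into position
    for imgnum in range(number_of_images):
        ret, frame = cap.read()
        if not ret:  # skip this shot if the camera didn't return a frame
            continue
        imagename = os.path.join(img_path, label + '.' + '{}.jpg'.format(str(uuid.uuid1())))
        cv2.imwrite(imagename, frame)
        cv2.imshow('frame', frame)
        time.sleep(2)  # pause so the pose, angle, or lighting can change between shots
        if cv2.waitKey(1) & 0xFF == ord('q'):  # press q to stop capturing this sign
            break
    cap.release()

cv2.destroyAllWindows()
```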
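Since a capture run can be cut short (for example by pressing q), a quick check that every class folder actually holds the expected number of images can save an annotation round-trip. Here's a minimal sketch; `find_short_classes` is a hypothetical helper, and it assumes the one-folder-per-label layout and `.jpg` files produced by the script above.

```python
from pathlib import Path


def find_short_classes(image_root, labels, expected):
    """Return {label: image_count} for every label folder with fewer than `expected` .jpg files.

    A missing folder counts as 0 images.
    """
    short = {}
    for label in labels:
        folder = Path(image_root, label)
        count = len(list(folder.glob("*.jpg"))) if folder.is_dir() else 0
        if count < expected:
            short[label] = count
    return short
```

For example, `find_short_classes("CollectedImages", labels, number_of_images)` returns an empty dict when every sign has its full set of images.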

2. Data Annotation

Once the data is collected, it must be annotated to mark the regions of each frame where signs/gestures appear. I used the Roboflow tool to draw bounding boxes around the hand gestures in each image or video frame and to assign a class label to each gesture or sign. The YOLOv5 model used this labeled data to learn the positions and classes of the gestures. The annotations are saved in the YOLO format, where each line in an image's annotation (.txt) file represents one object in the format `class_id x_center y_center width height`, with the box coordinates normalized by the image width and height so they fall between 0 and 1.
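Roboflow does this conversion on export, but to make the format concrete, here's a small sketch that turns a pixel-space box given as (x_min, y_min, x_max, y_max) into one YOLO label line. `to_yolo_line` is an illustrative name, not part of Roboflow or YOLOv5.

```python
def to_yolo_line(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-space bounding box to a YOLO annotation line.

    YOLO stores the box centre, width and height, each divided by the
    image width (x values) or image height (y values), so they fall in [0, 1].
    """
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"
```

For a 640×480 frame with a class-0 box from (100, 50) to (300, 250), `to_yolo_line(0, 100, 50, 300, 250, 640, 480)` returns `"0 0.312500 0.312500 0.312500 0.416667"`.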

3. YOLOv5 Installation

Next, I installed and set up YOLOv5 for the project by cloning its official GitHub repository in Google Colaboratory (to get T4 GPU access for model training), using the following commands:

```
!git clone https://github.com/ultralytics/yolov5.git
%cd yolov5
%pip install -qr requirements.txt  # install YOLOv5's Python dependencies
```

4. Data Loading

Once the dataset is prepared and annotated, it needs to be loaded into the YOLOv5 framework. The dataset is organized into 'train/' and 'val/' folders, and a YAML file is created to define the dataset's structure. The YAML file contains information such as the path to the images, the class labels, and the split between training and validation data. The code snippet and its output are shown below:

```python
import zipfile

zip_path = "/content/Sign_language_data.zip"

# Extract the annotated dataset exported from Roboflow
with zipfile.ZipFile(zip_path, 'r') as zip_ref:
    zip_ref.extractall("/content/Sign_language_data")

# Print the dataset definition (paths, number of classes, class names)
%cat /content/Sign_language_data/data.yaml
```
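The zip above was already split by Roboflow. If you'd rather assemble the folders yourself, here's a minimal sketch of the split and of the data.yaml it needs. `split_dataset` and `write_data_yaml` are hypothetical helpers, and the sketch assumes every `x.jpg` sits next to its YOLO label `x.txt` in one flat folder. It uses sibling `images/` and `labels/` folders because YOLOv5 finds each label file by swapping `/images/` for `/labels/` in the image path.

```python
import json
import random
import shutil
from pathlib import Path


def split_dataset(src_dir, dst_dir, val_fraction=0.2, seed=42):
    """Copy image/label pairs from src_dir into dst_dir/{train,val}/{images,labels}.

    Returns (number_of_train_images, number_of_val_images).
    """
    src, dst = Path(src_dir), Path(dst_dir)
    images = sorted(src.glob("*.jpg"))
    random.Random(seed).shuffle(images)  # reproducible shuffle
    n_val = int(len(images) * val_fraction)
    splits = {"val": images[:n_val], "train": images[n_val:]}
    for split, files in splits.items():
        img_dir = dst / split / "images"
        lbl_dir = dst / split / "labels"
        img_dir.mkdir(parents=True, exist_ok=True)
        lbl_dir.mkdir(parents=True, exist_ok=True)
        for img in files:
            shutil.copy(img, img_dir / img.name)
            label = img.with_suffix(".txt")
            if label.exists():  # images without a label file act as background images
                shutil.copy(label, lbl_dir / label.name)
    return len(splits["train"]), len(splits["val"])


def write_data_yaml(dst_dir, class_names):
    """Write a YOLOv5-style data.yaml for a split made by split_dataset; return its path."""
    dst = Path(dst_dir).resolve()
    lines = [
        f"train: {dst / 'train' / 'images'}",
        f"val: {dst / 'val' / 'images'}",
        f"nc: {len(class_names)}",
        f"names: {json.dumps(list(class_names))}",  # a JSON list is also valid YAML
    ]
    path = dst / "data.yaml"
    path.write_text("\n".join(lines) + "\n")
    return path
```

A fixed `seed` keeps the split identical across runs, so validation scores from different training runs stay comparable.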

5. Model Configuration

This step involves setting up the YOLOv5 model to detect hand gestures with the desired specifications. This includes defining the number of classes and choosing hyperparameters such as the learning rate, batch size, and image size. The number of classes and the network architecture are defined in the model's .yaml file below, while the batch size and image size are passed to the training script in the next step.
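`%%writetemplate` is not built into IPython or Colab: the configuration cell that follows only works if the magic was registered earlier in the notebook and a `num_classes` variable exists. That setup isn't shown here, so this is a minimal sketch (an assumption, modeled on the helper used in Roboflow's YOLOv5 training notebooks). It reads `nc` from the dataset's data.yaml and registers a cell magic that fills `{num_classes}` using `str.format`; `read_num_classes` and `write_template` are hypothetical names.

```python
import re
from pathlib import Path


def read_num_classes(data_yaml_path):
    """Return the integer after `nc:` in a YOLOv5 data.yaml (no PyYAML needed)."""
    for line in Path(data_yaml_path).read_text().splitlines():
        match = re.match(r"\s*nc\s*:\s*(\d+)", line)
        if match:
            return int(match.group(1))
    raise ValueError(f"no 'nc:' entry in {data_yaml_path}")


def write_template(path, template, **values):
    """Fill `{name}` placeholders in template from values and write the result to path."""
    Path(path).write_text(template.format(**values))


# Register %%writetemplate only when running inside IPython/Colab.
try:
    from IPython import get_ipython
    _shell = get_ipython()  # None when not inside an IPython session
except ImportError:
    _shell = None

if _shell is not None:
    def writetemplate(line, cell):
        # The first line of the cell is the output path; placeholders come from notebook globals.
        write_template(line.strip(), cell, **_shell.user_ns)

    _shell.register_magic_function(writetemplate, magic_kind="cell")
```

Run it once, then set `num_classes = read_num_classes("/content/Sign_language_data/data.yaml")` before executing the configuration cell.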

```
%%writetemplate /content/yolov5/models/custom_yolov5s.yaml
nc: {num_classes}  # number of classes, filled in from num_classes
depth_multiple: 0.33  # model depth multiple
width_multiple: 0.50  # layer channel multiple

anchors:
  - [10,13, 16,30, 33,23]  # P3/8
  - [30,61, 62,45, 59,119]  # P4/16
  - [116,90, 156,198, 373,326]  # P5/32

backbone:
  # [from, number, module, args]
  [[-1, 1, Focus, [64, 3]],  # 0-P1/2
   [-1, 1, Conv, [128, 3, 2]],  # 1-P2/4
   [-1, 3, BottleneckCSP, [128]],
   [-1, 1, Conv, [256, 3, 2]],  # 3-P3/8
   [-1, 9, BottleneckCSP, [256]],
   [-1, 1, Conv, [512, 3, 2]],  # 5-P4/16
   [-1, 9, BottleneckCSP, [512]],
   [-1, 1, Conv, [1024, 3, 2]],  # 7-P5/32
   [-1, 1, SPP, [1024, [5, 9, 13]]],
   [-1, 3, BottleneckCSP, [1024, False]],  # 9
  ]

head:
  [[-1, 1, Conv, [512, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 6], 1, Concat, [1]],  # cat backbone P4
   [-1, 3, BottleneckCSP, [512, False]],  # 13
   [-1, 1, Conv, [256, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 4], 1, Concat, [1]],  # cat backbone P3
   [-1, 3, BottleneckCSP, [256, False]],  # 17 (P3/8-small)
   [-1, 1, Conv, [256, 3, 2]],
   [[-1, 14], 1, Concat, [1]],  # cat head P4
   [-1, 3, BottleneckCSP, [512, False]],  # 20 (P4/16-medium)
   [-1, 1, Conv, [512, 3, 2]],
   [[-1, 10], 1, Concat, [1]],  # cat head P5
   [-1, 3, BottleneckCSP, [1024, False]],  # 23 (P5/32-large)
   [[17, 20, 23], 1, Detect, [nc, anchors]],  # Detect(P3, P4, P5)
  ]
```

6. Model Training

Training is tracked with Weights & Biases (for effectively monitoring and visualizing the training metrics of our machine learning model) and starts by executing the following commands:

```
!pip install -q wandb  # install the W&B client if it isn't already available
!wandb login --relogin
```

```
%%time
%cd /content/yolov5/
!python train.py --img 416 --batch 16 --epochs 600 --data '/content/Sign_language_data/data.yaml' --cfg ./models/custom_yolov5s.yaml --weights 'yolov5s.pt' --name yolov5s_results --cache
```

This trains my YOLOv5 model on the collected dataset for 600 epochs with a batch size of 16 and an image resolution of 416×416 pixels. The model uses transfer learning, loading the pre-trained yolov5s.pt weights to speed up training and improve accuracy, which helps especially when the dataset is small.

7. Model Evaluation

After training, the model's performance is evaluated on the validation and test datasets. YOLOv5 reports metrics such as precision, recall, and mean Average Precision (mAP), which indicate how well the model detects hand gestures.
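To make precision and recall concrete: a predicted box counts as a true positive when it has the right class and overlaps a not-yet-matched ground-truth box with an Intersection over Union (IoU) of at least 0.5. The sketch below computes both metrics for a single image at a fixed confidence cut-off; the function names are illustrative, and it uses simple greedy matching rather than YOLOv5's own evaluation code. mAP goes one step further: it averages, over classes, the area under the precision-recall curve traced out by sweeping the confidence threshold (mAP@0.5 at IoU 0.5, mAP@0.5:0.95 averaged over IoU thresholds from 0.5 to 0.95).

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds, gts, iou_thresh=0.5):
    """preds: list of (class, confidence, box); gts: list of (class, box).

    Greedy matching: predictions are processed from highest to lowest confidence,
    and each ground-truth box can be matched at most once.
    """
    matched = set()
    tp = 0
    for cls, _conf, box in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = None, iou_thresh
        for j, (g_cls, g_box) in enumerate(gts):
            if j in matched or g_cls != cls:
                continue
            overlap = iou(box, g_box)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    fp = len(preds) - tp  # predictions with no matching ground truth
    fn = len(gts) - tp  # ground-truth boxes the model missed
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if gts else 0.0
    return precision, recall
```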

The results are visualized using TensorBoard as shown, through confusion matrices and precision-recall curves, to identify areas for improvement. The model can then be fine-tuned by adjusting hyperparameters or adding more data to optimize its performance.
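Besides the plots, YOLOv5 writes per-epoch metrics to a results.csv file in the run folder (for the training command above, typically runs/train/yolov5s_results/). Here's a small sketch for finding the epoch with the best mAP, assuming the column names YOLOv5 currently writes, such as `metrics/mAP_0.5:0.95`. Those headers are padded with spaces, hence the `strip()` calls. `best_epoch` is a hypothetical helper.

```python
import csv


def best_epoch(results_csv, metric="metrics/mAP_0.5:0.95"):
    """Return (epoch, value) for the row of a YOLOv5 results.csv with the highest `metric`."""
    with open(results_csv, newline="") as f:
        rows = [
            {key.strip(): value.strip() for key, value in row.items()}
            for row in csv.DictReader(f)
        ]
    if not rows:
        raise ValueError(f"{results_csv} has no epochs logged")
    best = max(rows, key=lambda row: float(row[metric]))
    return int(best["epoch"]), float(best[metric])
```

If the best epoch comes long before the last one, the extra epochs mostly cost GPU time, which is useful to know before adding more data or changing hyperparameters.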

8. Real-time Prediction Testing

After the model is trained and evaluated, it is tested in real time using the webcam. OpenCV captures the live video feed, which is fed into the YOLOv5 model for gesture detection. The model runs inference on each frame on the local machine as shown, drawing bounding boxes around the detected gestures and displaying the class labels on the screen.
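The local inference script isn't included in this post, so here is a minimal sketch of such a loop. It assumes the trained weights were downloaded from Colab (YOLOv5 typically saves the best checkpoint as runs/train/yolov5s_results/weights/best.pt) and loads them through PyTorch Hub's `custom` entry point, which fetches the YOLOv5 repository on first use. `pick_signs` and `run_webcam_demo` are hypothetical helpers; OpenCV and PyTorch are imported inside `run_webcam_demo` so the filtering helper can be used on its own.

```python
def pick_signs(detections, min_conf=0.5):
    """Return class names with confidence >= min_conf, most confident first.

    `detections` is an iterable of (class_name, confidence) pairs.
    """
    kept = sorted(
        ((name, float(conf)) for name, conf in detections if conf >= min_conf),
        key=lambda d: d[1],
        reverse=True,
    )
    return [name for name, _ in kept]


def run_webcam_demo(weights_path="best.pt", min_conf=0.5):
    """Show live YOLOv5 detections from the default webcam; press q to quit."""
    import cv2
    import torch

    model = torch.hub.load("ultralytics/yolov5", "custom", path=weights_path)
    cap = cv2.VideoCapture(0)
    try:
        while True:
            ret, frame = cap.read()
            if not ret:
                break
            rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)  # YOLOv5 expects RGB arrays
            results = model(rgb)
            df = results.pandas().xyxy[0]  # one row per detected box
            signs = pick_signs(zip(df["name"], df["confidence"]), min_conf)
            # render() draws boxes and labels; convert back to BGR for OpenCV display
            annotated = cv2.cvtColor(results.render()[0], cv2.COLOR_RGB2BGR)
            if signs:
                cv2.putText(annotated, signs[0], (10, 30), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 255, 0), 2)
            cv2.imshow("Sign Language Detection", annotated)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    finally:
        cap.release()
        cv2.destroyAllWindows()
```

Calling `run_webcam_demo("best.pt")` from a local Python session opens the camera window.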

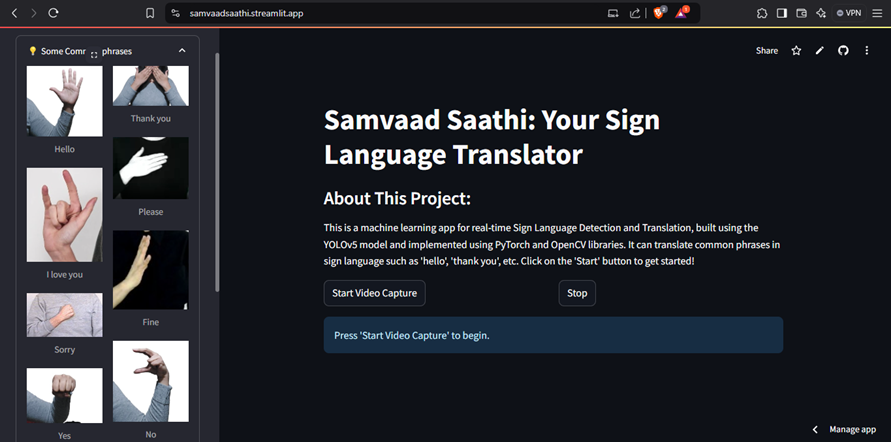

9. Web Application Development using Streamlit

I'll be using Streamlit to build a simple, interactive web interface where users can test the sign language detection system. The application lets users see the results of gesture detection in real time, and you can add more functionality by translating the detected signs into text or speech.
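For the text-translation idea, one simple approach (a sketch, not the code from my repository) is to accept a sign only after it has been the top prediction for several consecutive frames, which filters out single-frame flickers. `SignToText` is a hypothetical helper; you'd feed it the top label from each frame, or `None` when nothing is detected.

```python
class SignToText:
    """Turn a stream of per-frame sign predictions into a sentence.

    A word is appended only after the same sign has been the prediction for
    `stable_frames` consecutive frames, and the same word is never appended twice in a row.
    """

    def __init__(self, stable_frames=10):
        self.stable_frames = stable_frames
        self.words = []
        self._candidate = None
        self._count = 0

    def update(self, sign):
        """Feed the top prediction for one frame (None if nothing was detected)."""
        if sign != self._candidate:
            self._candidate, self._count = sign, 0
        self._count += 1
        if (sign is not None and self._count == self.stable_frames
                and (not self.words or self.words[-1] != sign)):
            self.words.append(sign)
        return self.text

    @property
    def text(self):
        return " ".join(self.words)
```

At 30 frames per second, `stable_frames=10` means a sign has to be held for about a third of a second; the resulting text could then be shown in the app or passed to a text-to-speech engine.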

I won't be sharing the code here to keep this blog short and precise, but you can visit my GitHub repository to get an idea of how I built it.

10. App Deployment

Finally, the Streamlit application can be deployed as a static app on Streamlit Cloud for easy access and use, which lets it be shared via a public URL. The app has to be static because Streamlit Cloud currently doesn't support webcam access. For a dynamic deployment with the webcam enabled, one could use Hugging Face Spaces. However, keep in mind that you cannot upload your model pickle file with a .pkl or .pt extension, as it won't be detected in the Hugging Face Spaces repository. A better option is to convert the pickle file into a .safetensors file, a topic I'll most likely cover in my next blog. This is how my static app looks on Streamlit Cloud:

For any issues, feel free to reach out on my GitHub repository, where you'll find all the files: https://github.com/SemalJohari/Samvaad-Saathi.

Cheers and happy machine learning! ❤