In the previous article, we briefly saw how LLMs think and process information. In this article, we dive deeper into the architecture behind LLMs, specifically Transformers.

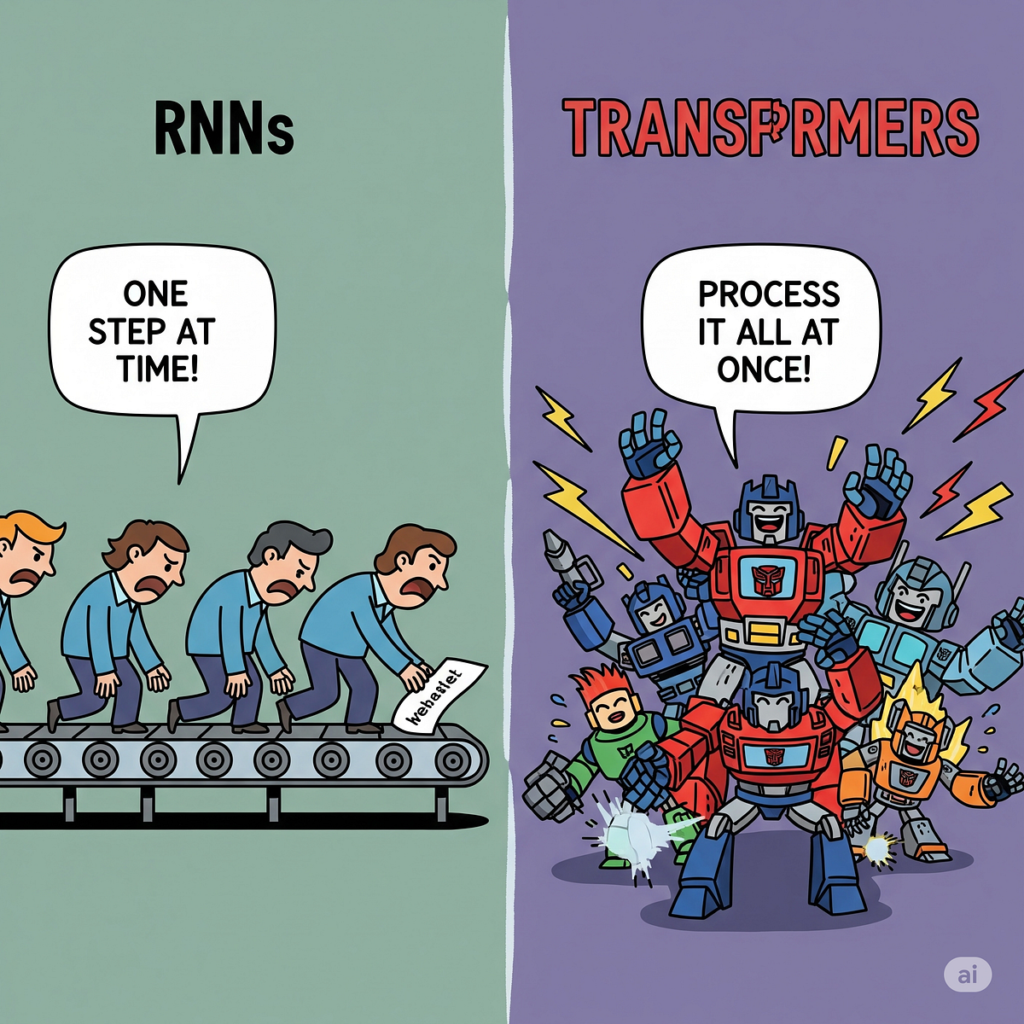

Transformers were first introduced in 2017 in the famous paper “Attention Is All You Need”. Do check out the paper after reading this article if you want to understand where it all started. Before transformers, text was typically processed one word at a time by recurrent neural networks (RNNs). This sequential approach had real drawbacks: it was hard to parallelize, so training was slow, and information from early words tended to fade by the time the model reached later ones. With the advent of transformers, entire text sequences could be processed simultaneously, while still capturing how each word relates to every other word in the context.

Imagine you’re trying to understand how a transformer reads and processes text, much like how you read a sentence and understand its meaning. Let me walk you through this fascinating journey step by step, without getting lost in complex technical details.

Step 1: Breaking Down the Text Into Digestible Pieces

When you give a transformer some text to work with, the first thing it does is break that text down into smaller, manageable pieces called tokens. For example, if you input the sentence “The cat sat on the mat,” the transformer splits it into individual tokens: [“The”, “cat”, “sat”, “on”, “the”, “mat”].

This process is called tokenization. It is essential because transformers can’t work with raw text directly. Sometimes tokens are whole words, but they can also be parts of words or even individual characters, depending on how the model was designed. Each token is then mapped to an integer ID from the model’s fixed vocabulary. The key point here is that the transformer needs a consistent way to handle any text you throw at it.
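To make this concrete, here is a minimal Python sketch of a toy word-level tokenizer. It assumes the simplest possible scheme (split on words and punctuation, then give each new token the next integer ID), and the function names `tokenize` and `build_vocab` are hypothetical. Real LLMs use subword tokenizers such as byte-pair encoding with a vocabulary fixed during training, so their splits and IDs will differ.

```python
import re

def tokenize(text):
    """Toy word-level tokenizer: split text into words and punctuation marks."""
    # \w+ matches runs of letters/digits; [^\w\s] matches a single punctuation mark
    return re.findall(r"\w+|[^\w\s]", text)

def build_vocab(tokens):
    """Give each distinct token an integer ID, in order of first appearance."""
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab

tokens = tokenize("The cat sat on the mat")
vocab = build_vocab(tokens)
ids = [vocab[tok] for tok in tokens]
print(tokens)  # ['The', 'cat', 'sat', 'on', 'the', 'mat']
print(ids)     # [0, 1, 2, 3, 4, 5]
```

Note that “The” and “the” get different IDs here because this sketch is case-sensitive; the integer IDs, not the strings, are what the model actually consumes.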

Step 2: Converting Words Into Numbers the Computer Can Understand

Once the text is broken into tokens, each token is converted into a long list of numbers called an embedding. Think of this as giving each word a unique mathematical fingerprint that captures its meaning. For instance, words with similar meanings, like “happy” and “joyful”, would have similar number patterns, while completely different words, like “cat” and “mathematics”, would have very different patterns.

In the original Transformer, each embedding is 512 numbers long, and larger models use even longer vectors, giving each word a rich mathematical representation of its meaning. The beautiful thing about this process is that the transformer learns these numbers during training, so words that are used in similar contexts end up with similar mathematical fingerprints.
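The Python sketch below illustrates the idea with a lookup table and cosine similarity, a common way to measure how closely two embeddings point in the same direction. The 4-number vectors are invented for illustration only (an assumption, not learned values); a trained model learns its own values and uses hundreds or thousands of dimensions. With these toy values, “happy” and “joyful” score close to 1, while “cat” and “mathematics” score close to 0.

```python
import math

# Invented 4-number embeddings, for illustration only (assumption).
# A trained model learns these values and uses hundreds or thousands of numbers.
EMBEDDINGS = {
    "happy":       [0.90, 0.80, 0.10, 0.00],
    "joyful":      [0.85, 0.75, 0.20, 0.05],
    "cat":         [0.10, 0.20, 0.90, 0.00],
    "mathematics": [0.00, 0.10, 0.00, 0.95],
}

def embed(tokens):
    """Look up the embedding vector of each token."""
    return [EMBEDDINGS[tok] for tok in tokens]

def cosine_similarity(a, b):
    """Close to 1.0 when two vectors point the same way, close to 0.0 when unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

happy, joyful, cat, maths = embed(["happy", "joyful", "cat", "mathematics"])
print(f"happy vs joyful:    {cosine_similarity(happy, joyful):.2f}")
print(f"cat vs mathematics: {cosine_similarity(cat, maths):.2f}")
```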

Step 3: Adding Position Information (Because Order Matters)

Here’s where transformers face an interesting challenge. Unlike humans, who naturally read from left to right and understand that word order matters, transformers initially see all the tokens at once without knowing which comes first. To solve this, they add special positional information to each token’s embedding.

Think of this like adding timestamps to each word. “The” gets a marker saying “I’m position 1,” “cat” gets “I’m position 2,” and so on. In the original Transformer, this positional encoding uses mathematical functions based on sine and cosine waves to create a unique signature for each position. This way, the transformer knows that “The cat chased the dog” means something different from “The dog chased the cat.”
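Here is a minimal Python sketch of the sinusoidal encoding from the original paper, where even dimensions use sin(pos / 10000^(2i/d_model)) and odd dimensions use the matching cos. The helper names are hypothetical, and positions are counted from 0 rather than 1, as is conventional in code.

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal positional encoding for one position (positions counted from 0)."""
    pe = []
    for i in range(d_model):
        # Dimensions 2k and 2k+1 share one frequency: 1 / 10000^(2k / d_model)
        pair = i // 2
        angle = position / (10000 ** (2 * pair / d_model))
        pe.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return pe

def add_positions(embeddings):
    """Add each token's positional encoding to its embedding, element by element."""
    d_model = len(embeddings[0])
    return [
        [e + p for e, p in zip(vec, positional_encoding(pos, d_model))]
        for pos, vec in enumerate(embeddings)
    ]

print([round(x, 3) for x in positional_encoding(0, 4)])  # [0.0, 1.0, 0.0, 1.0]
print([round(x, 3) for x in positional_encoding(1, 4)])  # [0.841, 0.54, 0.01, 1.0]
```

Because each pair of dimensions oscillates at a different frequency, the same word placed at two different positions ends up with two different vectors after `add_positions`.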

Step 4: The Attention Mechanism (Where the Magic Happens!)

Now comes the most fascinating part of how transformers work: the attention mechanism! Imagine you’re reading a sentence and trying to understand what each word means in context. You naturally look at the surrounding words to get the full picture. That’s exactly what the attention mechanism does, but in a much more systematic way.

When processing each word, the transformer asks three questions through what are called Query, Key, and Value vectors, which it computes by multiplying each token’s embedding by three weight matrices learned during training. Think of it like this: for each word, the Query asks “What should I pay attention to?”, the Key replies “This is the kind of information I hold,” and the Value provides “Here is my actual content.” The transformer compares each word’s Query with every word’s Key to score how relevant they are to each other, turns those scores into attention weights that add up to 1, and uses the weights to blend the Values into a new, context-aware representation of the word.
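In the original paper, this whole computation is written as a single formula called scaled dot-product attention. Q, K and V hold the query, key and value vectors of all tokens as matrix rows, and d_k is the length of each key vector; dividing by √d_k keeps the scores from growing so large that softmax stops producing useful weights.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```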

For example, when processing the word “sat” in “The cat sat on the mat,” the attention mechanism might determine that “sat” should pay high attention to “cat” (because that’s who is doing the sitting) and moderate attention to “mat” (because that’s where the sitting happens). This happens simultaneously for every word in the sentence, creating a rich web of relationships.
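The following minimal Python sketch implements scaled dot-product attention with plain lists: for each token, it scores the query against every key, scales by √d_k, applies softmax, and takes the weighted average of the values. As a simplifying assumption, the usage example feeds the same token vectors in as queries, keys and values; a real layer would first multiply them by learned weight matrices. The three 2-number token vectors are made up for illustration.

```python
import math

def softmax(scores):
    """Turn a list of scores into positive weights that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors (one vector per token).

    Returns (outputs, weights): weights[i][j] is how much token i attends to token j.
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Score this token's query against every key, scaled by sqrt(d_k)
        w = softmax([dot(q, k) / math.sqrt(d_k) for k in keys])
        # The output is the attention-weighted average of the value vectors
        out = [sum(w_j * v[i] for w_j, v in zip(w, values)) for i in range(len(values[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights

# Self-attention on 3 made-up 2-number token vectors, reused as Q, K and V
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(x, x, x)
for row in weights:
    print([round(w, 2) for w in row])
```

Each row of `weights` sums to 1. In this example, token 0 attends equally to itself and to token 2 (both have a dot product of 1 with it) and less to token 1, which is orthogonal to it.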

Step 5: Multi-Head Attention (Looking From Multiple Perspectives)

To make this process even more powerful, transformers don’t use just one attention mechanism; they run several in parallel, which is called multi-head attention. Think of it like having several people read the same sentence, each focusing on different aspects. One “head” might focus on grammatical relationships, another on semantic meaning, and yet another on connections between distant words.

These different perspectives are then combined into a single, comprehensive picture of how each word relates to all the others in the sentence. This is a big part of why transformers are so good at capturing context and nuance in language.
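A rough Python sketch of the idea follows. It makes a big simplifying assumption: instead of giving each head its own learned query, key and value projections (and mixing the concatenated result with a learned output matrix, as the original paper does), each head here simply works on its own slice of the embedding dimensions. The function name `multi_head_self_attention` and the example vectors are hypothetical.

```python
import math

def softmax(scores):
    """Turn scores into positive weights that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention; returns one output vector per query."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        w = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys])
        outputs.append([sum(w_j * v[i] for w_j, v in zip(w, values)) for i in range(len(values[0]))])
    return outputs

def multi_head_self_attention(x, num_heads):
    """Run attention separately on num_heads slices of each token vector, then concatenate.

    Simplification (assumption): no learned projections, so head h only sees
    dimensions h*d_head .. (h+1)*d_head - 1 of every token.
    """
    d_model = len(x[0])
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads
    head_outputs = []
    for h in range(num_heads):
        part = [row[h * d_head:(h + 1) * d_head] for row in x]
        head_outputs.append(attention(part, part, part))
    # Glue the heads' outputs back together, token by token
    return [[val for head in head_outputs for val in head[t]] for t in range(len(x))]

x = [[1.0, 0.0, 0.5, 0.5], [0.0, 1.0, 0.5, -0.5], [1.0, 1.0, 0.0, 1.0]]
out = multi_head_self_attention(x, num_heads=2)
print(len(out), len(out[0]))  # 3 4
```

With `num_heads=2`, the first head only sees dimensions 0 and 1 of each token and the second head only sees dimensions 2 and 3, so the two heads can end up with quite different attention patterns over the same sentence.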

Step 6: Processing Through Multiple Layers

The transformer doesn’t stop after just one round of attention. Instead, it passes the processed information through multiple layers, each one refining and improving the understanding. Think of this like reading a complex paragraph several times: each time through, you pick up new details and connections you might have missed before.

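Each layer builds on the work of the previous ones, gradually developing a more sophisticated understanding of the text. In the original Transformer, every layer pairs an attention block with a small feed-forward network that processes each token separately, and wraps both in residual connections and layer normalization. A typical transformer might have 6, 12, or even more layers (the original Transformer used 6 in its encoder and 6 in its decoder), with each layer adding its own insights to the overall comprehension process.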
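The Python sketch below stacks layers built as the original paper describes: self-attention and a per-token feed-forward step, each followed by a residual connection (adding the input back) and layer normalization. Assumptions: there are no learned weights at all, and `feed_forward` is a hypothetical placeholder (ReLU, then halve), so every layer does the same thing; in a real model, each layer has its own trained parameters. The example vectors are made up.

```python
import math

def softmax(scores):
    m = max(scores)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x (no learned projections)."""
    d = len(x[0])
    out = []
    for q in x:
        w = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x])
        out.append([sum(w_j * v[i] for w_j, v in zip(w, x)) for i in range(d)])
    return out

def layer_norm(v, eps=1e-5):
    """Rescale a vector to zero mean and (roughly) unit variance; no learned gain/bias."""
    mean = sum(v) / len(v)
    var = sum((a - mean) ** 2 for a in v) / len(v)
    return [(a - mean) / math.sqrt(var + eps) for a in v]

def feed_forward(v):
    """Hypothetical placeholder for the learned per-token network: ReLU, then halve."""
    return [0.5 * max(0.0, a) for a in v]

def transformer_layer(x):
    # Sub-block 1: attention, plus a residual connection, then layer norm
    attended = self_attention(x)
    x = [layer_norm([a + b for a, b in zip(row, att)]) for row, att in zip(x, attended)]
    # Sub-block 2: feed-forward on each token separately, residual, then layer norm
    return [layer_norm([a + b for a, b in zip(row, feed_forward(row))]) for row in x]

def encoder(x, num_layers=6):
    """Pass the token vectors through num_layers layers, each refining the previous output."""
    for _ in range(num_layers):
        x = transformer_layer(x)
    return x

x = [[1.0, 0.0, 0.5, 0.5], [0.0, 1.0, 0.5, -0.5], [1.0, 1.0, 0.0, 1.0]]
out = encoder(x, num_layers=6)
print(len(out), len(out[0]))  # 3 4
```

The output has the same shape as the input (one vector per token), which is what allows layers to be stacked as deep as desired.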

Step 7: Generating the Final Output

After all this processing, the transformer has built a rich, contextual understanding of the input text. In the final step, this understanding is converted into whatever output the task requires. For a language model like ChatGPT, that means predicting which word should come next. For a translation model, it means producing the equivalent sentence in another language.

The transformer looks at all the processed information and calculates a probability for every possible next token in its vocabulary. In the simplest strategy, called greedy decoding, it then picks the token with the highest probability; many chat models instead sample from these probabilities, which makes their output less repetitive. The chosen token is appended to the input and the process repeats, token by token, to generate entire sentences, paragraphs, or even longer texts.
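This Python sketch shows the generation loop with both greedy decoding and sampling. The `toy_logits` function is a hypothetical stand-in for a trained transformer that simply gives one hard-coded follow-up word a high score; a real model computes these scores (logits) from the full processed context over a vocabulary of tens of thousands of tokens.

```python
import math
import random

VOCAB = ["the", "cat", "sat", "on", "mat", "."]

def softmax(logits):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def toy_logits(context):
    """Hypothetical stand-in for a trained transformer: strongly favours one next word."""
    if context[-2:] == ["on", "the"]:
        nxt = "mat"
    else:
        nxt = {"the": "cat", "cat": "sat", "sat": "on", "on": "the", "mat": "."}.get(context[-1], ".")
    return [3.0 if word == nxt else 0.0 for word in VOCAB]

def greedy_pick(probs):
    """Greedy decoding: index of the most probable token."""
    return max(range(len(probs)), key=lambda i: probs[i])

def sample_pick(probs, rng):
    """Sampling: draw a token index at random, weighted by its probability."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

def generate(prompt, max_new_tokens, pick):
    """Repeatedly predict a next token, append it, and feed the longer text back in."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = softmax(toy_logits(tokens))
        tokens.append(VOCAB[pick(probs)])
        if tokens[-1] == ".":
            break
    return tokens

print(generate(["the"], 10, greedy_pick))  # ['the', 'cat', 'sat', 'on', 'the', 'mat', '.']
rng = random.Random(0)
print(generate(["the"], 10, lambda p: sample_pick(p, rng)))
```

Greedy decoding always produces the same sentence for the same prompt, while the sampled version picks the favoured word about 80% of the time with these scores and occasionally wanders off, which is why real systems tune how they sample.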

Understanding transformers is important because they form the foundation of virtually all modern Large Language Models. Whether we’re talking about GPT-4, Claude, or any other state-of-the-art language model, they are all built on the principles of the transformer architecture.

The impact of transformers extends far beyond language models. Their versatility has led to applications across numerous domains, fundamentally changing how we approach artificial intelligence. In natural language processing, transformers power everything from search engines to chatbots, and from translation services to content generation tools. Beyond text, transformers have been adapted for computer vision (Vision Transformers), audio processing (speech recognition and generation), code generation (GitHub Copilot), and even scientific applications such as protein structure prediction. This versatility demonstrates the fundamental power of the attention mechanism and the transformer architecture.

Understanding transformers gives you the foundation to make sense of most modern AI systems you encounter. Whether you’re interested in using these tools in your work, developing new applications, or simply understanding the technology that’s reshaping our world, the concepts covered here will serve as your guide. I found this material particularly helpful for understanding how Transformers work, so do check it out: link (this link takes you outside Medium).

The journey from the sequential limitations of RNNs to the parallel power of transformers represents one of the most significant advances in the history of artificial intelligence. As you continue exploring the world of generative AI, remember that at the heart of these seemingly magical systems lies the elegant simplicity of attention: the ability to focus on what matters most, just as we do when we read, listen, and understand.

I hope you enjoyed reading the article. Be sure to give it a few claps if you found it helpful. In the next article, we’ll look at the different types of transformer architectures.