Introduction to Model Serving

Before diving into model serving, let’s first understand why it’s needed.

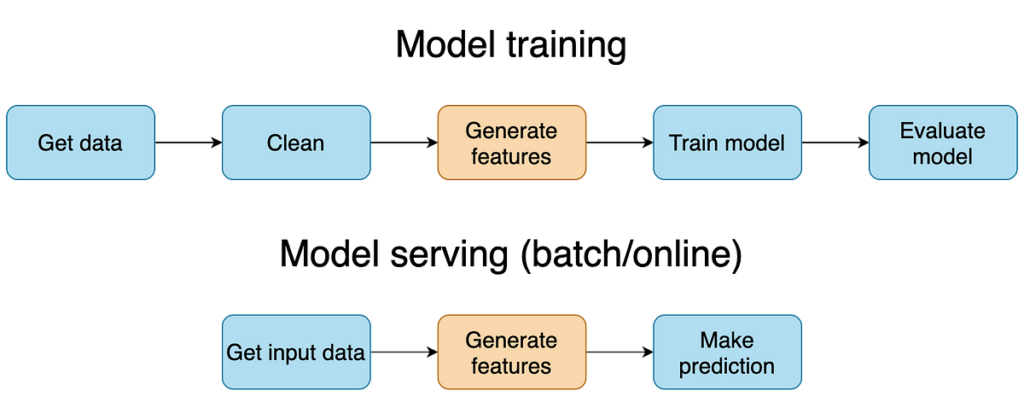

In a typical machine learning model lifecycle, once a model is trained and evaluated, the next step is to deploy it so it can generate predictions for real-world use cases. But how do you make it accessible for broader use?

A simple way to share a model with fellow developers is by pushing it to a version control platform like GitHub. Other developers can then pull the repository, load the model, and run predictions using a script (e.g., main.py).

Alternatively, if you’re working in a cloud environment, you can log the trained model using MLflow, Vertex AI Model Registry, SageMaker Model Registry, or other similar model management and registry services, making it easier to manage model versions and artifacts.

But making the model accessible is just the first step. The next and crucial step is serving the model so that it can generate predictions for real-world applications, either on demand or in bulk.

What is Model Serving?

Model serving is the process of making a trained machine learning model available for inference (predictions) in production environments, typically through APIs, pipelines, or batch processing systems.

It essentially enables seamless interaction between users or applications and the machine learning model, transforming a static model artifact into an actively usable system component.

Real-time vs Batch Inferencing

Model serving can be broadly achieved through two main routes, Real-time Inferencing and Batch Inferencing, and the choice between them largely depends on how quickly predictions are needed in your use case.

Batch Inferencing

Batch processing is ideal when predictions don’t need to be generated instantly. Instead of processing individual data points in real time, this approach collects data over a defined period and processes it together in bulk. The output is then stored in a target location such as a database, data warehouse, or file storage.

This approach is commonly used in scenarios like customer segmentation, churn prediction, propensity models, or risk scoring, where model inference jobs can be scheduled to run at regular intervals (hourly, daily, weekly) without impacting user experience.

→ In batch processing, API endpoints are usually not necessary. Instead, a scheduled job or pipeline loads data, runs inference using a saved model (for example, one logged with MLflow), and stores the results for later use.
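The scheduled-job pattern described above can be sketched roughly as follows. This is a minimal illustration using only the Python standard library: `score` is a hypothetical stand-in for a real saved model, and the table name `churn_scores` and the input fields are assumptions made for the example.

```python
import sqlite3

def score(record):
    """Hypothetical stand-in for a trained model: returns a churn score in [0, 1]."""
    # Assumption for illustration: fewer logins in the last 30 days means higher risk.
    return round(max(0.0, 1.0 - record["logins_last_30d"] / 30), 2)

def run_batch_job(records, conn):
    """Score all collected records in one pass and store the results for later use."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS churn_scores "
        "(customer_id TEXT PRIMARY KEY, score REAL)"
    )
    rows = [(r["customer_id"], score(r)) for r in records]
    # INSERT OR REPLACE keeps the job idempotent when it is re-run on a schedule.
    conn.executemany("INSERT OR REPLACE INTO churn_scores VALUES (?, ?)", rows)
    conn.commit()
    return len(rows)

if __name__ == "__main__":
    # In a real pipeline these records would come from a warehouse or file storage.
    collected = [
        {"customer_id": "c1", "logins_last_30d": 27},
        {"customer_id": "c2", "logins_last_30d": 3},
    ]
    conn = sqlite3.connect(":memory:")
    print(run_batch_job(collected, conn), "records scored")
    print(conn.execute("SELECT * FROM churn_scores ORDER BY customer_id").fetchall())
```

A scheduler such as cron or a workflow orchestrator would call `run_batch_job` at the chosen interval; no API endpoint is involved.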

Real-time Inferencing

Real-time or near real-time inferencing is used when predictions are needed with minimal latency, typically within milliseconds or a few seconds of receiving the input. The exact acceptable latency varies by application; for instance, chatbots usually require sub-second responses, while some recommendation systems can tolerate slightly longer latencies. This is common in use cases like real-time recommendation systems, fraud detection at transaction time, chatbots, or virtual assistants, where a user or an application expects a rapid response.

Moreover, real-time serving can follow either of two patterns:

- Synchronous Inference: A response containing the prediction is returned within the same request-response cycle. While a single request is often processed sequentially, the server can handle multiple concurrent synchronous requests in parallel, so synchronous inference is best defined by the client’s waiting (blocking) behavior rather than by sequential processing.

- Asynchronous Inference: A request is submitted, and the processing happens in the background. The result is shared with the client later through a callback mechanism, a notification (e.g., a webhook), or by the client periodically polling a status endpoint. Asynchronous inference often leverages parallel processing to manage multiple background tasks efficiently, but it is primarily defined by the non-blocking nature of the client’s interaction.

“Synchronous inference returns predictions immediately within the same request, while asynchronous inference queues the request, processes it in the background, and returns the result later, making it ideal for long-running or resource-intensive predictions.”
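To make the distinction concrete, here is a small sketch using only Python’s standard library. The `predict` function is a hypothetical placeholder for a real model, and the `submit` and `get_status` names and the job-id scheme are assumptions for illustration, not any particular serving framework’s API.

```python
import time
import uuid
from concurrent.futures import ThreadPoolExecutor

def predict(text):
    """Hypothetical stand-in for a slow model call."""
    time.sleep(0.1)  # simulate inference latency
    return {"label": "positive" if "good" in text else "negative"}

# Synchronous: the caller blocks until the prediction is ready.
def predict_sync(text):
    return predict(text)

# Asynchronous: the caller gets a job id immediately and checks back later.
_executor = ThreadPoolExecutor(max_workers=2)
_jobs = {}  # job id -> Future; a real service would use a durable queue or store

def submit(text):
    job_id = str(uuid.uuid4())
    _jobs[job_id] = _executor.submit(predict, text)
    return job_id

def get_status(job_id):
    future = _jobs[job_id]
    if not future.done():
        return {"status": "pending"}
    return {"status": "done", "result": future.result()}

if __name__ == "__main__":
    print(predict_sync("good service"))  # the caller waits for the answer
    job = submit("slow delivery")        # returns right away with a job id
    while get_status(job)["status"] == "pending":
        time.sleep(0.02)                 # the client polls a status endpoint
    print(get_status(job))
```

Polling is only one of the delivery options mentioned above; a callback or webhook would replace the `while` loop with a notification sent by the worker.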

Endpoints in Model Serving

Endpoints play a crucial role in real-time or near real-time serving. They act as a bridge between your model and the outside world (applications/users). Typically, these are REST APIs or gRPC services.
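As a rough illustration of what such an endpoint looks like, the sketch below exposes a model over HTTP using only Python’s built-in `http.server`. The `/predict` path, the `amount` field, and the rule inside `predict` are all hypothetical; production services more commonly use a dedicated web framework or a managed serving platform.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

def predict(features):
    """Hypothetical stand-in for a trained model: flags large transactions."""
    return {"fraud": features.get("amount", 0) > 1000}

class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404, "Unknown endpoint")
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            features = json.loads(self.rfile.read(length))
            if not isinstance(features, dict):
                raise ValueError("expected a JSON object")
        except ValueError:
            self.send_error(400, "Body must be a JSON object")
            return
        body = json.dumps(predict(features)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet

def serve(port=8000):
    """Start the endpoint; port 8000 is an arbitrary choice for local testing."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()

# To try it locally, call serve() and POST a JSON body such as
# {"amount": 2500} to http://127.0.0.1:8000/predict
```

The model itself stays an ordinary function; the handler only translates between HTTP and that function, which is the bridging role described above.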

In batch processing scenarios, endpoints are not always required, but that is not a strict rule. Batch inferencing can also be exposed through an endpoint; it depends entirely on the objective at hand.

For example, if the model’s output is simply written back to a database, batch pipelines suffice without any endpoint. But if users or external systems need to trigger batch inference jobs dynamically, say from a simple UI, an endpoint can accept the request, trigger the background batch job, and notify users once the job is complete.
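The trigger-and-notify flow could look something like the sketch below, which an endpoint handler might call. Everything here is an assumption for illustration: `score` is a placeholder model, `results_store` stands in for a database, and `notify` is whatever mechanism (email, webhook, UI message) the system uses.

```python
import threading
import uuid

results_store = {}  # stand-in for a database or file storage

def score(record):
    """Hypothetical stand-in for a trained model."""
    return record["amount"] > 1000

def trigger_batch_job(records, notify):
    """Start the batch job in the background and return at once."""
    job_id = str(uuid.uuid4())

    def run():
        results_store[job_id] = [score(r) for r in records]
        notify(job_id, len(records))  # e.g. send an email or call a webhook

    worker = threading.Thread(target=run)
    worker.start()
    return job_id, worker

if __name__ == "__main__":
    notifications = []
    job_id, worker = trigger_batch_job(
        [{"amount": 50}, {"amount": 5000}],
        notify=lambda jid, n: notifications.append((jid, n)),
    )
    worker.join()  # only so the demo can print the final state
    print(notifications, results_store[job_id])
```

The request returns as soon as the job is started, so the user interface stays responsive while the batch runs.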

Batch vs Real-time: Tradeoff Comparison

| Feature | Batch Inferencing | Real-time Inferencing |
|------------------------|---------------------------------------------|--------------------------------------------------|
| Use-case Suitability | Periodic, scheduled predictions | Instant, on-demand predictions |
| Latency | Higher latency acceptable | Low latency required |
| Data Processing | Processes large chunks at once | Processes single or few records per call |
| Infrastructure | Pipelines, jobs, scheduled tasks | API endpoints, microservices |
| Complexity | Simpler architecture | Requires careful scaling and monitoring |
| Example Use-cases | Reports, analytics, segmentation | Chatbots, fraud detection, recommendations |

Final Takeaways

- Model serving is a critical step in operationalizing machine learning models.

- Choosing between batch and real-time depends on your use case, latency requirements, and consumption pattern.

- Batch inferencing is great for periodic, large-scale processing with less urgency.

- Real-time inferencing shines when low latency and immediate interaction are required.

- Think of batch serving as running reports overnight, and real-time serving as answering a user’s question in a live chat: different speeds for different needs.

- Endpoints are central to real-time serving but can also enable batch processing in certain scenarios.