Because df2_final_latest_v3.csv Deserves Better

Let’s admit it — we’ve all sinned.

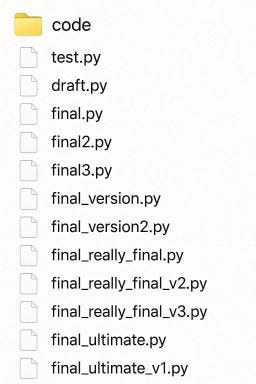

There’s a folder on my laptop right now with files like:

- final_model.ipynb
- final_model_NEW.ipynb
- final_model_FINAL_TRY2.ipynb

And let’s not even talk about variable names. I’ve seen temp, df, df2, x1, output3, and once, hauntingly, just zzz.

Early in my data science journey, I thought naming things was a small detail. An afterthought. Something you could clean up at the end (which, of course, never happens). But over time, I’ve learned something powerful:

Naming things well isn’t a nice-to-have. It’s a professional skill.

Machine learning is about abstraction, sure. But every model you build lives in a sea of features, files, parameters, and outputs. When these are poorly named, you lose the very thing data science is supposed to deliver:

🧭 Clarity.

I once revisited a model I had built just six months earlier. I had trained a great classifier. The performance metrics were solid. But I couldn’t remember what X_train_final3_latest actually contained.

Was it before SMOTE? After PCA? With a log transform or without?

I had built a black box for myself.

1. Self-sabotage

You think you’ll remember what df_final_v2 is tomorrow. You won’t.

2. Collaboration friction

Your colleague opens the notebook and sees:

```python
data2 = df[df2.columns[1:]].dropna()
```

and quietly screams inside.

3. Deployment drama

In production, ambiguous variable names turn debugging into archaeology. Especially when the logs say:

"Error in output_df3_cleaned_v2_step4"

4. Lost context = lost value

If you can’t explain your own pipeline clearly, your model isn’t just hard to reproduce; it’s hard to trust.
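To make the collaboration-friction example concrete, here is one way that `data2 = df[df2.columns[1:]].dropna()` line might read with descriptive names. It’s a sketch under assumptions: the table, its contents, and the column names (`customers_raw`, `tenure_months`, `monthly_spend`) are hypothetical, and it assumes the columns after the first in `df2` were the feature columns, so it lists them explicitly instead of slicing another frame by position.

```python
import pandas as pd

# Hypothetical raw customer table; None marks a missing value
customers_raw = pd.DataFrame({
    "customer_id": [1, 2, 3],
    "tenure_months": [12, None, 30],
    "monthly_spend": [50.0, 80.0, 65.0],
})

# Name the feature columns explicitly (assumed to be what df2.columns[1:] held)
feature_columns = ["tenure_months", "monthly_spend"]

# Counterpart of `data2 = df[df2.columns[1:]].dropna()`:
# keep only the feature columns, then drop rows with any missing value
customer_features_cleaned = customers_raw[feature_columns].dropna()
print(customer_features_cleaned)
```

Same one-liner in spirit, but now your colleague can tell what went in, what came out, and why row 1 disappeared.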

Here’s what I’ve learned to love:

Naming well is about being kind to your future self and your team.

1. Be specific, not clever

Avoid jokes or acronyms no one else will get.

✅ monthly_sales_by_region

❌ msbr or thanos_snap

2. Describe the transformation or stage

Use suffixes like _raw, _cleaned, _filtered, _final, _pca meaningfully.

3. Stick with consistent prefixes

All features → X_, all targets → y_, all outputs → pred_, etc.

4. Use dates wisely

If your data changes over time, include the period: transactions_jan2024, not just transactions_latest.

5. Name your model versions like a scientist

xgb_churn_tuned_v1, xgb_churn_shap_v2, etc.

Stop the madness of final_final_last_try_really.ipynb.
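The date and model-version conventions in points 4 and 5 are easy to make mechanical, so nobody has to remember the format at 2 a.m. Here’s a minimal sketch with two hypothetical helpers, `dated_name` and `model_version_name`. The month-plus-year format and the algorithm_target_variant_vN pattern are read off the examples above; they’re a team convention, not a standard.

```python
from datetime import date

# Fixed English month abbreviations, so the output does not depend on the locale
MONTH_ABBR = ("jan", "feb", "mar", "apr", "may", "jun",
              "jul", "aug", "sep", "oct", "nov", "dec")


def dated_name(base: str, period: date) -> str:
    """Append the month and year of `period`, e.g. transactions -> transactions_jan2024."""
    return f"{base}_{MONTH_ABBR[period.month - 1]}{period.year}"


def model_version_name(algorithm: str, target: str, variant: str, version: int) -> str:
    """Build a name following the algorithm_target_variant_vN pattern."""
    return f"{algorithm}_{target}_{variant}_v{version}"


print(dated_name("transactions", date(2024, 1, 31)))   # transactions_jan2024
print(model_version_name("xgb", "churn", "tuned", 1))  # xgb_churn_tuned_v1
print(model_version_name("xgb", "churn", "shap", 2))   # xgb_churn_shap_v2
```

Putting the format in one function means the day you change your mind, you change it in one place instead of in forty notebooks.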

When I read someone’s code, I want to see the pipeline like a story:

- Here’s the raw data

- Now we cleaned it

- Here’s the feature matrix

- Here’s the trained model

- Here’s the prediction

That’s not just good practice; it’s good communication. Variable names are the plot devices of your analysis.
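Here’s a minimal sketch of that story in plain Python, using the X_/y_/pred_ prefixes and _raw/_cleaned suffixes from the list above. Everything in it is hypothetical: the churn records are made up, and `fit_threshold_model` is a toy one-feature classifier (a threshold halfway between the two class means) standing in for whatever estimator you actually train. The point is the names, not the model.

```python
from statistics import mean

# Here's the raw data (hypothetical churn records; None = missing value)
customers_raw = [
    {"customer_id": 1, "support_calls": 0, "churned": 0},
    {"customer_id": 2, "support_calls": 6, "churned": 1},
    {"customer_id": 3, "support_calls": None, "churned": 0},
    {"customer_id": 4, "support_calls": 1, "churned": 0},
    {"customer_id": 5, "support_calls": 5, "churned": 1},
]

# Now we cleaned it: drop rows with a missing feature value
customers_cleaned = [row for row in customers_raw if row["support_calls"] is not None]

# Here's the feature matrix (X_) and the target (y_)
X_train = [[row["support_calls"]] for row in customers_cleaned]
y_train = [row["churned"] for row in customers_cleaned]


def fit_threshold_model(X, y):
    """Toy classifier: threshold halfway between the two class means."""
    churn_values = [features[0] for features, label in zip(X, y) if label == 1]
    stay_values = [features[0] for features, label in zip(X, y) if label == 0]
    return {"threshold": (mean(churn_values) + mean(stay_values)) / 2}


# Here's the trained model, named algorithm_target_variant_vN
threshold_churn_baseline_v1 = fit_threshold_model(X_train, y_train)

# Here's the prediction (pred_) for two hypothetical new customers
X_new = [[0], [7]]
pred_churn = [
    int(features[0] > threshold_churn_baseline_v1["threshold"])
    for features in X_new
]
print(pred_churn)  # [0, 1]
```

Read it top to bottom and you never have to ask whether customers_cleaned still has the missing row in it; the name already told you.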

The same discipline applies to file names:

- Always use lowercase + underscores: model_experiments_july.csv

- No spaces, no caps, no special characters

- Use semantic versioning if helpful: churn_model_v1.2.ipynb

- Add purpose: eda_loyalty_vs_churn.ipynb, not just eda.ipynb
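If you inherit a folder full of “Final Model (NEW).ipynb”-style files, the lowercase-and-underscores rule can be applied mechanically. Here’s a minimal sketch of a hypothetical helper, `to_snake_case_filename`; it assumes the last dot starts the extension and deliberately keeps other dots so version numbers like v1.2 survive. It won’t invent a purpose for eda.ipynb; that part is still on you.

```python
import re


def to_snake_case_filename(name: str) -> str:
    """Lowercase a file name and replace spaces/special characters with underscores."""
    stem, dot, extension = name.rpartition(".")
    if not dot:  # no extension at all
        stem, extension = name, ""
    # Collapse every run of characters other than letters, digits, and dots into "_"
    stem = re.sub(r"[^0-9A-Za-z.]+", "_", stem).strip("_").lower()
    return f"{stem}.{extension.lower()}" if extension else stem


print(to_snake_case_filename("Model Experiments (July).csv"))  # model_experiments_july.csv
print(to_snake_case_filename("EDA Loyalty vs Churn.ipynb"))    # eda_loyalty_vs_churn.ipynb
print(to_snake_case_filename("churn_model_v1.2.ipynb"))        # churn_model_v1.2.ipynb
```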

You may not always have time to write perfect comments or README files. But if your variables are clear, your project becomes self-documenting.

And that, my friend, is what separates a scrappy script from production-grade work.

I used to think naming was something you cleaned up later. Now, I treat it as part of the modeling process. It reflects how well I understand my own work.

So the next time you’re tempted to write df2_final_v4, pause. Ask yourself:

“If I saw this name in 6 months… would I thank myself or curse myself?”

Make your future self proud.