TensorFlow is an open-source deep learning framework developed by Google Brain. Released in 2015, it has become widely used in both academic research and industrial projects.

- Low-level control: Tensor operations, automatic differentiation, custom layer definition

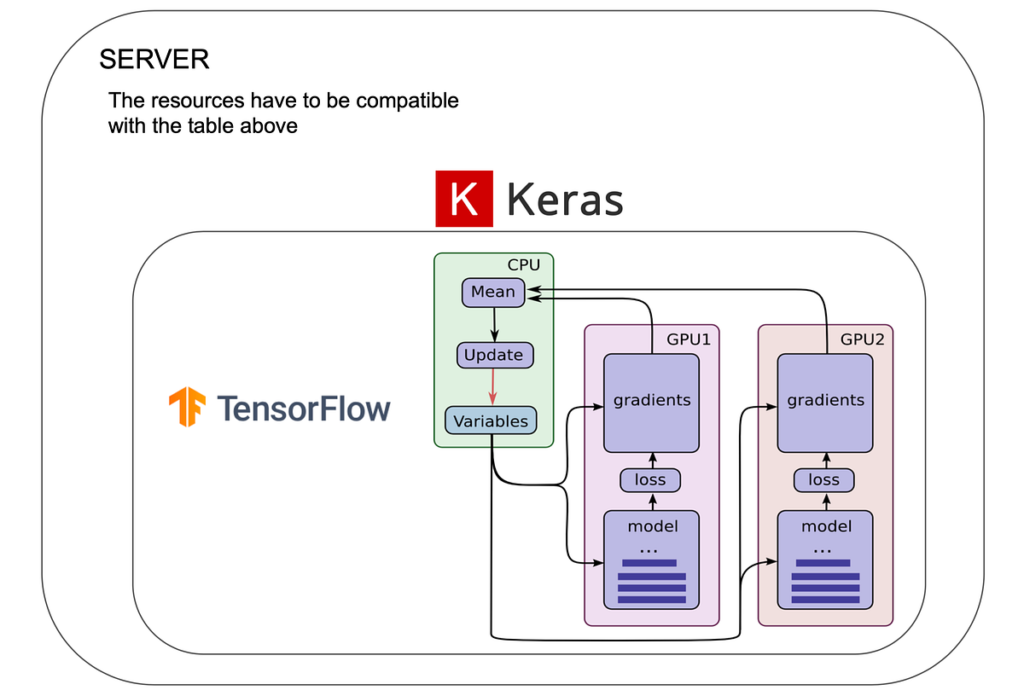

- Distributed training: Multi-GPU and TPU support

- Graph-based computation: Static graphs in TF 1.x, eager execution in TF 2.x

- Rich ecosystem: TFX, TensorFlow Lite, TensorFlow Serving
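As a rough illustration of the low-level control and execution modes listed above, the sketch below differentiates a function with `tf.GradientTape` under TF 2.x eager execution, then traces the same function into a graph with `tf.function`. The function `f` and the input value 3.0 are made up for the example.

```python
import tensorflow as tf


def f(x):
    # Arbitrary example function: f(x) = x^2 + 2x
    return x ** 2 + 2.0 * x


x = tf.Variable(3.0)

# Eager execution: operations run immediately while the tape records them.
with tf.GradientTape() as tape:
    y = f(x)

# Automatic differentiation: df/dx = 2x + 2
grad = tape.gradient(y, x)

# tf.function traces f into a graph, similar in spirit to TF 1.x static graphs.
graph_f = tf.function(f)
y_graph = graph_f(x)

print(float(y), float(grad), float(y_graph))
```

The eager and graph versions compute the same value; the graph version mainly pays off for larger computations that are called repeatedly.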

Keras was originally developed as a standalone high-level API; today, it's fully integrated with TensorFlow 2.x as tf.keras. Its simplicity and concise code structure make it ideal for rapid prototyping.

- Simple API: Supports Sequential and Functional API

- Model abstraction: Easy definition of layers, optimizers, and loss functions

- Backend integration: Runs on top of TensorFlow
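To make the difference between the two API styles concrete, here is a minimal sketch that builds the same small network twice, once with the Sequential API and once with the Functional API. The layer sizes (4 inputs, 8 hidden units, 3 classes) are arbitrary choices for illustration.

```python
import tensorflow as tf
from tensorflow.keras import layers

# Sequential API: a plain stack of layers.
sequential_model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    layers.Dense(8, activation="relu"),
    layers.Dense(3, activation="softmax"),
])

# Functional API: layers are called on tensors, which also allows
# branching, multiple inputs, and multiple outputs.
inputs = tf.keras.Input(shape=(4,))
hidden = layers.Dense(8, activation="relu")(inputs)
outputs = layers.Dense(3, activation="softmax")(hidden)
functional_model = tf.keras.Model(inputs, outputs)

# Both models have the same architecture and parameter count.
print(sequential_model.count_params(), functional_model.count_params())
```

For a straight stack of layers the Sequential form is shorter; the Functional form becomes useful once the model is no longer a simple chain.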

TensorFlow provides the low-level computation engine, while Keras serves as the user-friendly API layer built on top of it.

- Keras = Abstraction layer / Easy to use

- TensorFlow Core = Low-level control / Flexibility

Use Keras (Rapid Prototyping)

- Quickly build and test models

- Excellent for Kaggle competitions

- Great for baseline models

Use TensorFlow Core (Low-Level Control)

- Custom loss functions and layers

- Performance optimization

- Hardware-level configurations
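The kind of low-level work listed above might look like the following sketch: a custom layer, a hand-written loss, and a manual training loop driven by `tf.GradientTape`. The `Linear` layer, the `squared_error` loss, and the toy data are all hypothetical and exist only for illustration.

```python
import tensorflow as tf


class Linear(tf.keras.layers.Layer):
    """Hypothetical custom layer computing inputs @ w + b."""

    def __init__(self, units):
        super().__init__()
        self.units = units

    def build(self, input_shape):
        # Weights are created lazily, once the input size is known.
        self.w = self.add_weight(shape=(input_shape[-1], self.units),
                                 initializer="glorot_uniform", trainable=True)
        self.b = self.add_weight(shape=(self.units,),
                                 initializer="zeros", trainable=True)

    def call(self, inputs):
        return tf.matmul(inputs, self.w) + self.b


def squared_error(y_true, y_pred):
    # Hand-written loss: mean squared error.
    return tf.reduce_mean(tf.square(y_true - y_pred))


tf.random.set_seed(0)
x = tf.random.normal((64, 3))
y = tf.reduce_sum(x, axis=1, keepdims=True)  # toy target: sum of the features

layer = Linear(1)
optimizer = tf.keras.optimizers.SGD(learning_rate=0.1)

losses = []
for _ in range(50):
    with tf.GradientTape() as tape:
        loss = squared_error(y, layer(x))
    grads = tape.gradient(loss, layer.trainable_variables)
    optimizer.apply_gradients(zip(grads, layer.trainable_variables))
    losses.append(float(loss))

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

Compared with `model.fit`, this takes more code, but every step of the update is visible and can be changed.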

The following example demonstrates a simple classifier using the Keras API with the TensorFlow backend:

```python
import tensorflow as tf
from tensorflow.keras import layers, models

# Load dataset and scale pixel values to [0, 1]
(x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0

# Define model
model = models.Sequential([
    layers.Flatten(input_shape=(28, 28)),
    layers.Dense(128, activation='relu'),
    layers.Dropout(0.2),
    layers.Dense(10, activation='softmax')
])

# Compile
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# Train
model.fit(x_train, y_train, epochs=5)

# Evaluate
model.evaluate(x_test, y_test)
```
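The MNIST example above passes integer class labels together with `sparse_categorical_crossentropy`. As a small sketch of what that choice means, the snippet below compares it with the one-hot variant, `CategoricalCrossentropy`, on made-up predictions; both should produce the same loss value, and only the label format differs.

```python
import tensorflow as tf

# Made-up predicted probabilities for two samples and three classes.
probs = tf.constant([[0.7, 0.2, 0.1],
                     [0.1, 0.8, 0.1]])

int_labels = tf.constant([0, 1])                  # integer class ids
one_hot_labels = tf.one_hot(int_labels, depth=3)  # same labels, one-hot encoded

sparse_loss = tf.keras.losses.SparseCategoricalCrossentropy()(int_labels, probs)
dense_loss = tf.keras.losses.CategoricalCrossentropy()(one_hot_labels, probs)

print(float(sparse_loss), float(dense_loss))
```

Integer labels avoid materializing a one-hot matrix, which is convenient when the labels already come as class ids.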

The next example trains a small convolutional network on chest X-ray images for pneumonia detection, using ImageDataGenerator for data loading and augmentation:

```python
import tensorflow as tf
from tensorflow.keras.preprocessing.image import ImageDataGenerator

BATCH_SIZE = 16

print("\nLoading training data...")
# Augment training images with small random zooms, rotations, and shifts
training_data_generator = ImageDataGenerator(
    rescale=1./255,
    zoom_range=0.2,
    rotation_range=15,
    width_shift_range=0.05,
    height_shift_range=0.05)

training_iterator = training_data_generator.flow_from_directory(
    '/Users/emirhanulker/Documents/PneumoniaDetectionUsingCNN/chest_xray/test',
    class_mode='categorical',
    color_mode='grayscale',
    batch_size=BATCH_SIZE)

print("\nLoading validation data...")
# Validation images are only rescaled, never augmented
validation_data_generator = ImageDataGenerator(rescale=1./255)

# Note: this reuses the same directory as the training iterator;
# a separate held-out split gives a more honest validation score.
validation_iterator = validation_data_generator.flow_from_directory(
    '/Users/emirhanulker/Documents/PneumoniaDetectionUsingCNN/chest_xray/test',
    class_mode='categorical',
    color_mode='grayscale',
    batch_size=BATCH_SIZE)

print("\nBuilding model...")
model = tf.keras.Sequential()
# flow_from_directory resizes images to 256x256 by default
model.add(tf.keras.Input(shape=(256, 256, 1)))
model.add(tf.keras.layers.Conv2D(2, 5, strides=3, activation="relu"))
model.add(tf.keras.layers.MaxPooling2D(pool_size=(5, 5), strides=(5, 5)))
model.add(tf.keras.layers.Conv2D(4, 3, strides=1, activation="relu"))
model.add(tf.keras.layers.MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))
model.add(tf.keras.layers.Flatten())
model.add(tf.keras.layers.Dense(2, activation="softmax"))
model.summary()

print("\nCompiling model...")
model.compile(
    optimizer=tf.keras.optimizers.Adam(learning_rate=0.005),
    loss=tf.keras.losses.CategoricalCrossentropy(),
    metrics=[tf.keras.metrics.CategoricalAccuracy(), tf.keras.metrics.AUC()]
)

print("\nTraining model...")
model.fit(
    training_iterator,
    steps_per_epoch=training_iterator.samples // BATCH_SIZE,
    epochs=5,
    validation_data=validation_iterator,
    validation_steps=validation_iterator.samples // BATCH_SIZE)
```
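To see why the CNN above ends up so small, it can help to trace the tensor shapes by hand. The sketch below does that in plain Python, assuming the Keras defaults of `'valid'` (no) padding for `Conv2D` and `MaxPooling2D`; the helper `conv_out` is a hypothetical name introduced only for this illustration.

```python
def conv_out(size, kernel, stride):
    """Output length along one spatial axis with 'valid' (no) padding."""
    return (size - kernel) // stride + 1


size = 256                                  # input: 256 x 256 x 1
size = conv_out(size, kernel=5, stride=3)   # Conv2D(2, 5, strides=3)    -> 84
after_conv1 = size
size = conv_out(size, kernel=5, stride=5)   # MaxPooling2D(5x5, stride 5) -> 16
after_pool1 = size
size = conv_out(size, kernel=3, stride=1)   # Conv2D(4, 3, strides=1)    -> 14
after_conv2 = size
size = conv_out(size, kernel=2, stride=2)   # MaxPooling2D(2x2, stride 2) -> 7
after_pool2 = size

flat_features = after_pool2 * after_pool2 * 4   # 7 * 7 * 4 channels = 196

# Trainable parameters: kernel weights plus one bias per filter/unit.
params = {
    "conv1": 5 * 5 * 1 * 2 + 2,      # 52
    "conv2": 3 * 3 * 2 * 4 + 4,      # 76
    "dense": flat_features * 2 + 2,  # 394
}
total_params = sum(params.values())  # 522

print(after_conv1, after_pool1, after_conv2, after_pool2, flat_features, total_params)
```

These numbers should match what `model.summary()` reports, which makes this a quick sanity check when changing kernel sizes or strides.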

Keras advantages:

- Easy learning curve

- Rapid prototyping with minimal code

- Native integration with TensorFlow 2.x

Keras disadvantages:

- Limited flexibility for very complex models

- Requires switching to TensorFlow Core for low-level operations

TensorFlow Core advantages:

- High flexibility, custom operations possible

- Distributed training support

- Suitable for large-scale projects

TensorFlow Core disadvantages:

- Steeper learning curve

- More boilerplate code required
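As a hedged sketch of the distributed training support mentioned above, `tf.distribute.MirroredStrategy` replicates a model across the GPUs visible on one machine and falls back to a single device when none are available. The small dense network here is an arbitrary stand-in, not a model from this article.

```python
import tensorflow as tf

# Uses all visible GPUs on this machine; with no GPU it runs on one device.
strategy = tf.distribute.MirroredStrategy()
print("Replicas in sync:", strategy.num_replicas_in_sync)

# Variables created inside the scope are mirrored across replicas.
with strategy.scope():
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28)),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation="relu"),
        tf.keras.layers.Dense(10, activation="softmax"),
    ])
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])

print("Parameters:", model.count_params())
```

After this setup, `model.fit` is called as usual and each batch is split across the replicas.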

Example projects to practice on:

- Handwritten Digit Recognition (MNIST)

- Simple Sentiment Analysis (IMDB Reviews)

- Cat vs. Dog Image Classification

- Pneumonia Detection

Once you've mastered the basics of TensorFlow and Keras, here are the next steps to advance your deep learning journey:

1. Work with More Complex Datasets

- Experiment with CIFAR-10, COCO, or ImageNet

- Apply transfer learning using pre-trained models (ResNet, MobileNet)
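A minimal transfer learning sketch, assuming a MobileNetV2 backbone with a frozen feature extractor and a new classification head. The helper name `build_transfer_model`, the 96x96 input size, and the two-class default are illustrative assumptions rather than anything prescribed here.

```python
import tensorflow as tf


def build_transfer_model(num_classes=2, weights="imagenet"):
    """Hypothetical helper: frozen MobileNetV2 backbone plus a new softmax head."""
    base = tf.keras.applications.MobileNetV2(
        input_shape=(96, 96, 3), include_top=False, weights=weights)
    base.trainable = False  # keep the pre-trained features fixed

    # Inputs are expected to be preprocessed with
    # tf.keras.applications.mobilenet_v2.preprocess_input (scales to [-1, 1]).
    inputs = tf.keras.Input(shape=(96, 96, 3))
    x = base(inputs, training=False)  # run batch norm layers in inference mode
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)
    return tf.keras.Model(inputs, outputs)
```

Calling `build_transfer_model(num_classes=2)` downloads the ImageNet weights on first use; passing `weights=None` builds the same architecture with random weights, which is handy for a quick offline check. Only the new head is trainable, so training is fast even on a small dataset.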

2. Learn Model Deployment

- Deploy to mobile/web with TensorFlow Lite or TensorFlow.js

- Serve models via APIs using TensorFlow Serving or Docker
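As one possible starting point for mobile deployment, the sketch below converts a Keras model to the TensorFlow Lite format with `tf.lite.TFLiteConverter`. The tiny untrained model and the output file name `model.tflite` are placeholders for illustration; in practice you would convert your own trained model.

```python
import tensorflow as tf

# Placeholder model; substitute a trained model here.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(3, activation="softmax"),
])

# Convert the Keras model to a TensorFlow Lite flatbuffer (returned as bytes).
converter = tf.lite.TFLiteConverter.from_keras_model(model)
tflite_model = converter.convert()

# Write the converted model to disk so a mobile app can bundle it.
with open("model.tflite", "wb") as f:
    f.write(tflite_model)

print(f"Converted model size: {len(tflite_model)} bytes")
```

Conversion behavior can vary between TensorFlow versions, so it is worth testing the converted file with the TFLite interpreter before shipping it.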

3. Explore Specialized Deep Learning Fields

- Computer Vision

- Natural Language Processing (NLP)

- Speech and Audio Processing

4. Join the Community and Build a Portfolio

- Participate in Kaggle competitions

- Contribute to open-source projects on GitHub

- Write articles on Medium about your learnings to share knowledge and showcase skills

Advice: Start building your own project ideas, share them on GitHub, and write about what you learn. This accelerates your growth and helps create a strong portfolio for future opportunities.